
Search results for: “nvidia”

-

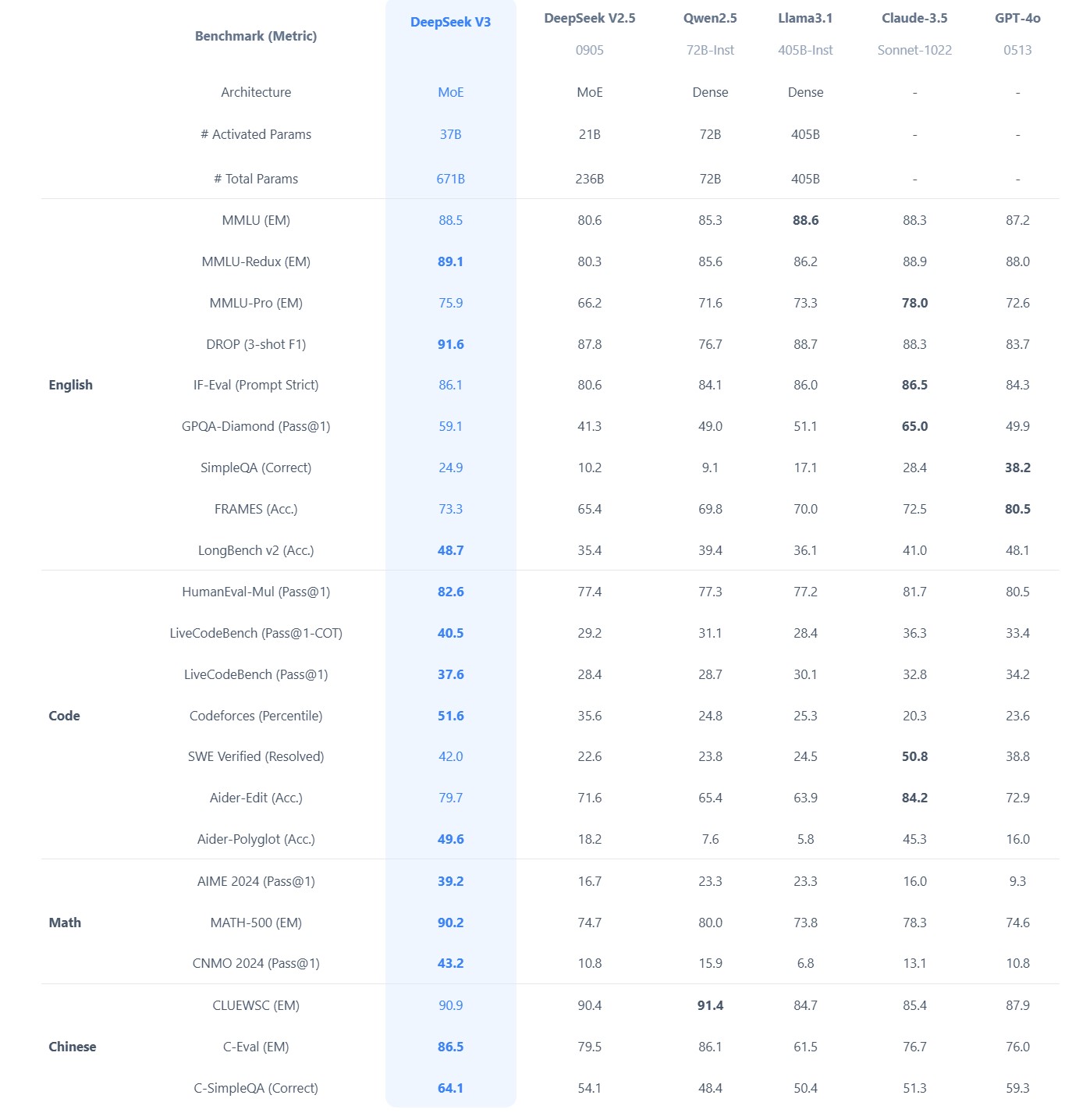

What did DeepSeek figure out about reasoning with DeepSeek-R1?

https://www.seangoedecke.com/deepseek-r1

The Chinese AI lab DeepSeek recently released their new reasoning model R1, which is supposedly (a) better than the current best reasoning models (OpenAI’s o1 series), and (b) was trained on a GPU cluster a fraction the size of those used by any of the big Western AI labs.

DeepSeek uses a reinforcement learning approach, not a fine-tuning approach. There’s no need to generate a huge body of chain-of-thought data ahead of time, and there’s no need to run an expensive answer-checking model. Instead, the model generates its own chains-of-thought as it goes.

The secret behind their success? A bold move to train their models using FP8 (8-bit floating-point precision) instead of the standard FP32 (32-bit floating-point precision).

…

By using a clever system that applies high precision only when absolutely necessary, they achieved incredible efficiency without losing accuracy.

…

The impressive part? These multi-token predictions are about 85–90% accurate, meaning DeepSeek R1 can deliver high-quality answers at double the speed of its competitors.

Chinese AI firm DeepSeek has 50,000 NVIDIA H100 AI GPUs

-

Render in a Flash with Updates to Autodesk Arnold 7.2.5

Arnold 7.2.5 adds support for NVIDIA and Intel GPU denoising on Windows in the Intel Denoiser. Denoising with a GPU using the Intel Denoiser should now be between 10x and 20x faster.

https://help.autodesk.com/view/ARNOL/ENU/?guid=arnold_core_7250_html

-

Denoisers available in Arnold

https://help.autodesk.com/view/ARNOL/ENU/?guid=arnold_user_guide_ac_denoising_html

AOV denoising: While all denoisers work on arbitrary AOVs, not all denoisers guarantee that the denoised AOVs composite together to match the denoised beauty. The AOV column indicates whether a denoiser has robust AOV denoising and can produce a result where denoised_AOV_1 + denoised_AOV_2 + … + denoised_AOV_N = denoised_Beauty.
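The additivity condition above can be checked numerically. The following is a minimal sketch, not part of Arnold: the function name, the tolerance and the single-channel pixel values are all hypothetical and only illustrate the `denoised_AOV_1 + … + denoised_AOV_N = denoised_Beauty` test.

```python
def aovs_match_beauty(aovs, beauty, tol=1e-6):
    """Return True if the per-pixel sum of all AOVs equals the beauty within tol."""
    for i, beauty_value in enumerate(beauty):
        total = sum(aov[i] for aov in aovs)
        if abs(total - beauty_value) > tol:
            return False
    return True


# Hypothetical single-channel pixel values for two denoised AOVs.
denoised_diffuse = [0.20, 0.50, 0.10]
denoised_specular = [0.05, 0.25, 0.40]
denoised_beauty = [0.25, 0.75, 0.50]

print(aovs_match_beauty([denoised_diffuse, denoised_specular], denoised_beauty))
```

A denoiser without robust AOV denoising would fail this kind of check on at least some pixels, which shows up as a mismatch when the AOVs are recombined in compositing.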

OptiX™ Denoiser imager

This imager is available as a post-processing effect. The imager also exposes additional controls for clamping and blending the result. It is based on Nvidia AI technology and is integrated into Arnold for use with IPR and look dev. The OptiX™ denoiser is meant to be used during IPR (so that you get a very quickly denoised image as you’re moving the camera and making other adjustments).

OIDN Denoiser imager

The OIDN denoiser (based on Intel’s Open Image Denoise technology) is available as a post-processing effect. It is integrated into Arnold for use with IPR as an imager (so that you get a very quickly denoised image as you’re moving the camera and making other adjustments).

Arnold Denoiser (Noice)

The Arnold Denoiser (Noice) can be run from a dedicated UI, exposed in the Denoiser, or as an imager. You will need to render images out first via the Arnold EXR driver with variance AOVs enabled. It is also available as a stand-alone program (noice.exe).

This imager is available as a post-processing effect. You can automatically denoise images every time you render a scene, edit the denoising settings and see the resulting image directly in the render view. It favors quality over speed and is, therefore, more suitable for high-quality final frame denoising and animation sequences.

Note: imager_denoiser_noice does not support temporal denoising (required for denoising an animation).

-

HDRI Resources

Text2Light

- https://www.cgtrader.com/free-3d-models/exterior/other/10-free-hdr-panoramas-created-with-text2light-zero-shot

- https://frozenburning.github.io/projects/text2light/

- https://github.com/FrozenBurning/Text2Light

Royalty free links

- https://locationtextures.com/panoramas/

- http://www.noahwitchell.com/freebies

- https://polyhaven.com/hdris

- https://hdrmaps.com/

- https://www.ihdri.com/

- https://hdrihaven.com/

- https://www.domeble.com/

- http://www.hdrlabs.com/sibl/archive.html

- https://www.hdri-hub.com/hdrishop/hdri

- http://noemotionhdrs.net/hdrevening.html

- https://www.openfootage.net/hdri-panorama/

- https://www.zwischendrin.com/en/browse/hdri

Nvidia GauGAN360

-

Autodesk open sources Aurora – an interactive path tracing renderer that leverages graphics processing unit (GPU) hardware ray tracing

https://github.com/autodesk/Aurora

Goals for Aurora

- Renders noise-free in 50 milliseconds or less per frame.

- Intended for design iteration (viewport, performance) rather than final frames (production, quality), which are produced from a renderer like Autodesk Arnold.

- OS-independent: Runs on Windows, Linux, MacOS.

- Vendor-independent: Runs on GPUs from AMD, Apple, Intel, NVIDIA.

Features

- Path tracing and the global effects that come with it: soft shadows, reflections, refractions, bounced light, and others.

- Autodesk Standard Surface materials defined with MaterialX documents.

- Arbitrary blended layers of materials, which can be used to implement decals.

- Environment lighting with a wrap-around lat-long image.

- Triangle geometry with object instancing.

- Real-time denoising

- Interactive performance for complex scenes.

- A USD Hydra render delegate called HdAurora.

-

Virtual Production Offers Real Savings For Studios Of All Sizes

Rob Legato, the award-winning VFX Supervisor whose work you may have seen in movies like Titanic, Avatar and The Jungle Book, is incredibly bullish on virtual production. At the Microsoft Production Summit, presented by NVIDIA & Unreal Engine in Los Angeles, he reported that he recently did a movie with Ben Affleck and Matt Damon in twenty-four days: “Cutting down the days cut down the budget, and it’s amazing what a difference that can make. Productions can now do for $25 million what used to cost $100 million.”

-

OpenVDB and NanoVDB in Unreal Engine

NanoVDB is NVIDIA’s version of the OpenVDB library. This solution offers one significant advantage over OpenVDB, namely GPU support. It accelerates processes such as filtering, volume rendering, collision detection, ray tracing, etc., and allows you to generate and load complex special effects, all in real time.

Nevertheless, the NanoVDB structure does not significantly compress volume size. Therefore, it’s not so commonly applied in game development.

github.com/eidosmontreal/unreal-vdb

Example file: https://lnkd.in/gMqmFwCj

-

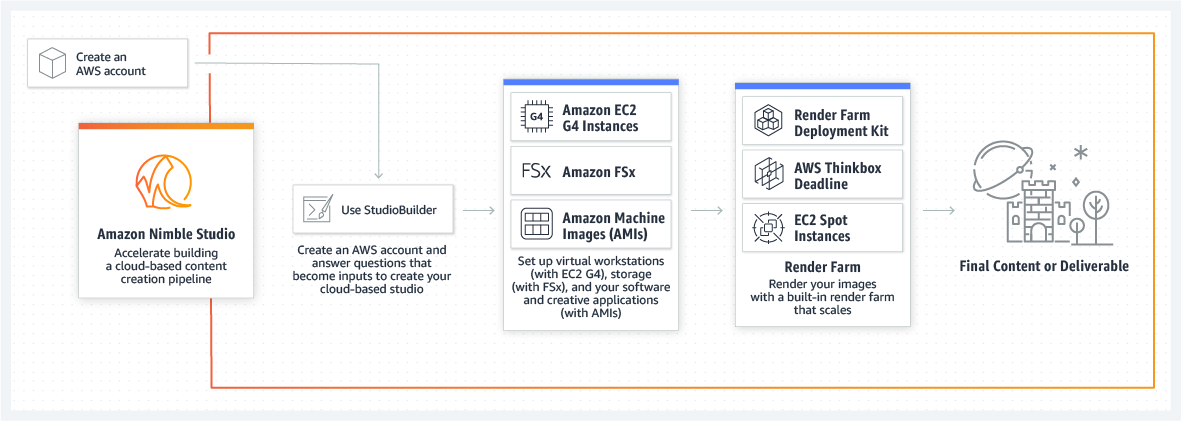

Amazon Nimble Studio – cloud based virtual production studios

Using Amazon Nimble Studio, customers can create a new content production studio in just a few hours. Artists then have immediate access to high-performance workstations powered by Amazon Elastic Compute Cloud (EC2) G4dn instances with NVIDIA GPUs, shared file storage from Amazon FSx, and low-latency streaming via the AWS global network. Content production studios can onboard remote teams from around the world and provide them access to just the right amount of high-performance infrastructure for only as long as needed – all without having to procure, set up, and manage local workstations, file systems, and low-latency networking.

https://aws.amazon.com/nimble-studio

-

What’s the Difference Between Ray Casting, Ray Tracing, Path Tracing and Rasterization? Physical light tracing…

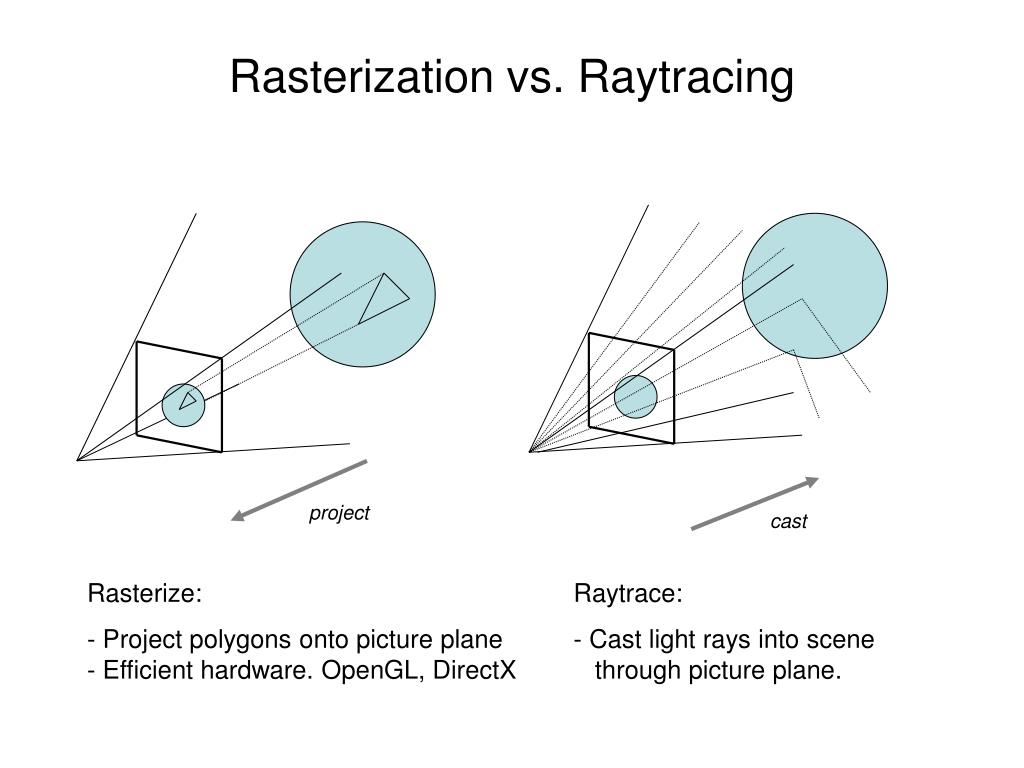

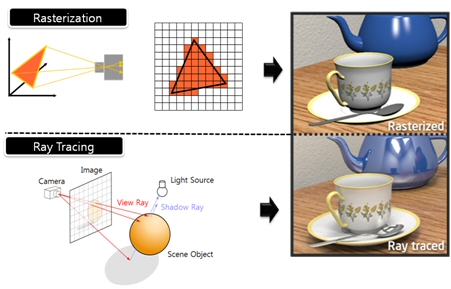

RASTERIZATION

Rasterisation (or rasterization) is the task of taking the information described in a vector graphics format OR the vertices of triangles making 3D shapes and converting them into a raster image (a series of pixels, dots or lines, which, when displayed together, create the image which was represented via shapes), or in other words “rasterizing” vectors or 3D models onto a 2D plane for display on a computer screen.

For each triangle of a 3D shape, you project the corners of the triangle on the virtual screen with some math (projective geometry). Then you have the position of the 3 corners of the triangle on the pixel screen. Those 3 points have texture coordinates, so you know where in the texture are the 3 corners. The cost is proportional to the number of triangles, and is only a little bit affected by the screen resolution.
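The "some math" used to project a triangle corner onto the virtual screen can be sketched as a simple perspective divide. This is a minimal sketch under stated assumptions: the camera sits at the origin looking down +z, and the function name, `focal_length` parameter and pixel mapping are illustrative choices rather than any particular engine's API.

```python
def project_vertex(x, y, z, focal_length, width, height):
    """Project a camera-space point (camera looks down +z) to pixel coordinates."""
    if z <= 0:
        raise ValueError("point is behind the camera")
    # Perspective divide: farther points land closer to the image centre.
    ndc_x = focal_length * x / z
    ndc_y = focal_length * y / z
    # Map the [-1, 1] range to pixels, flipping y so that +y points up.
    px = (ndc_x + 1.0) * 0.5 * width
    py = (1.0 - ndc_y) * 0.5 * height
    return px, py


# A point straight ahead of the camera lands in the middle of a 640x480 screen.
print(project_vertex(0.0, 0.0, 5.0, 1.0, 640, 480))  # (320.0, 240.0)
```

Running this once per corner gives the three screen positions of a triangle, which is why the cost scales with the number of triangles.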

In computer graphics, a raster graphics or bitmap image is a dot matrix data structure that represents a generally rectangular grid of pixels (points of color), viewable via a monitor, paper, or other display medium.

With rasterization, objects on the screen are created from a mesh of virtual triangles, or polygons, that create 3D models of objects. A lot of information is associated with each vertex, including its position in space, as well as information about color, texture and its “normal,” which is used to determine the way the surface of an object is facing.

Computers then convert the triangles of the 3D models into pixels, or dots, on a 2D screen. Each pixel can be assigned an initial color value from the data stored in the triangle vertices.

Further pixel processing or “shading,” including changing pixel color based on how lights in the scene hit the pixel, and applying one or more textures to the pixel, combine to generate the final color applied to a pixel.

The main advantage of rasterization is its speed. However, rasterization is simply the process of computing the mapping from scene geometry to pixels and does not prescribe a particular way to compute the color of those pixels. On its own it does not simulate how light physically travels through the scene, so it cannot promise a photorealistic output. That’s a big limitation of rasterization.

There are also multiple problems:

- If one triangle is behind another, you draw all the overlapping pixels twice. You only keep the pixel from the triangle that is closer to you (Z-buffer), but you still do the work twice.

- The borders of your triangles are jagged, as it is hard to decide whether a border pixel is inside the triangle or outside. You can do some smoothing on those borders; that is anti-aliasing.

- You have to handle every triangle (including the ones behind you) and then find out that some of them do not touch the screen at all. (There are techniques to mitigate this, where we only look at triangles that are in the field of view.)

- Transparency is hard to handle (you can’t just average the colors of overlapping transparent triangles, you have to blend them in the right order).
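The Z-buffer behaviour described in the list above can be sketched with a tiny software rasterizer. This is a simplified illustration, not how a GPU is implemented: each triangle is given one constant depth (real rasterizers interpolate depth per pixel), and the function names and the triangle tuples are hypothetical.

```python
def edge(ax, ay, bx, by, px, py):
    """Signed area test: tells which side of the edge a->b the point p lies on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize(triangles, width, height):
    """triangles: list of (label, depth, [(x, y), (x, y), (x, y)]) in pixel space."""
    depth_buffer = [[float("inf")] * width for _ in range(height)]
    frame = [["." for _ in range(width)] for _ in range(height)]
    for label, depth, (a, b, c) in triangles:
        for y in range(height):
            for x in range(width):
                cx, cy = x + 0.5, y + 0.5  # sample at the pixel centre
                w0 = edge(*a, *b, cx, cy)
                w1 = edge(*b, *c, cx, cy)
                w2 = edge(*c, *a, cx, cy)
                # Inside if the point is on the same side of all three edges.
                inside = (w0 >= 0 and w1 >= 0 and w2 >= 0) or (
                    w0 <= 0 and w1 <= 0 and w2 <= 0
                )
                # Z-buffer: keep the pixel only if this triangle is closer.
                if inside and depth < depth_buffer[y][x]:
                    depth_buffer[y][x] = depth
                    frame[y][x] = label
    return frame


far_triangle = ("A", 5.0, [(0, 0), (8, 0), (0, 8)])
near_triangle = ("B", 2.0, [(0, 0), (4, 0), (0, 4)])
for row in rasterize([far_triangle, near_triangle], 8, 8):
    print("".join(row))
```

Both triangles are tested against every pixel, so the overlapping region is processed twice even though only the nearer label survives, which is the wasted work mentioned in the first bullet.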

RAY CASTING

It is almost the exact reverse of rasterization: you start from the virtual screen instead of the vector or 3D shapes, and you project a ray, starting from each pixel of the screen, until it intersects a triangle.

The cost is directly correlated to the number of pixels in the screen, and you need a really cheap way of finding the first triangle that intersects a ray. In the end, it is more expensive than rasterization but it will, by design, ignore the triangles that are out of the field of view.

You can let the ray continue after the first triangle it hits, to take a little bit of the color of the next one, etc… This is useful to handle the border of the triangle cleanly (less jagged) and to handle transparency correctly.
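Finding the first triangle that a ray intersects can be sketched with the well-known Möller–Trumbore ray/triangle test. This is one common choice, not necessarily what any given renderer uses, and it loops over every triangle rather than using the acceleration structure a real ray caster would need. The helper names and the example triangles are hypothetical.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def ray_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Moller-Trumbore: distance t along the ray to the hit, or None on a miss."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle plane
    inv = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None  # ignore hits behind the ray origin


def cast(origin, direction, triangles):
    """Return (t, label) for the nearest triangle hit by the ray, or None."""
    best = None
    for label, v0, v1, v2 in triangles:
        t = ray_triangle(origin, direction, v0, v1, v2)
        if t is not None and (best is None or t < best[0]):
            best = (t, label)
    return best


scene = [
    ("far", (-1.0, -1.0, 5.0), (1.0, -1.0, 5.0), (0.0, 1.0, 5.0)),
    ("near", (-1.0, -1.0, 2.0), (1.0, -1.0, 2.0), (0.0, 1.0, 2.0)),
]
print(cast((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), scene))  # (2.0, 'near')
```

Calling `cast` once per pixel gives the per-pixel cost described above; triangles outside the field of view are never hit, so they are ignored by design.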

RAYTRACING

Same idea as ray casting, except that once you hit a triangle you reflect off it and go in a different direction. The number of reflections you allow is the “depth” of your ray tracing. The color of the pixel can be calculated based on the light source and all the polygons the ray had to reflect off to get to that screen pixel.

The easiest way to think of ray tracing is to look around you, right now. The objects you’re seeing are illuminated by beams of light. Now turn that around and follow the path of those beams backwards from your eye to the objects that light interacts with. That’s ray tracing.

Ray tracing is an eye-oriented process: it walks through each pixel looking for the object that should be shown there. It can also be described as a technique that follows a beam of light (in pixels) from a set point and simulates how it reacts when it encounters objects.

Compared with rasterization, ray tracing is hard to implement in real time. A single ray can be traced and processed without much trouble, but after one ray bounces off an object it can turn into 10 rays, and those 10 can turn into 100, 1000… The increase is exponential, and the calculation for all these rays is time consuming.
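Two pieces of this description are easy to sketch: the mirror reflection applied at each hit, and how quickly the ray count grows with depth. The function names are hypothetical, and the branching factor of 10 simply follows the "1 ray becomes 10" illustration in the text.

```python
def reflect(direction, normal):
    """Mirror an incoming direction about a unit-length surface normal."""
    d_dot_n = sum(d * n for d, n in zip(direction, normal))
    return tuple(d - 2.0 * d_dot_n * n for d, n in zip(direction, normal))


def rays_traced(branching, depth):
    """Total rays when every hit spawns `branching` new rays, up to `depth` bounces."""
    return sum(branching ** level for level in range(depth + 1))


# A ray travelling down and to the right bounces off a floor facing up.
print(reflect((1.0, -1.0, 0.0), (0.0, 1.0, 0.0)))  # (1.0, 1.0, 0.0)

# One primary ray, then 10, 100 and 1000 secondary rays.
print(rays_traced(10, 3))  # 1111
```

With these numbers a single pixel already needs 1111 rays at depth 3, which is the exponential growth that makes naive ray tracing expensive.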

Historically, computer hardware hasn’t been fast enough to use these techniques in real time, such as in video games. Moviemakers can take as long as they like to render a single frame, so they do it offline in render farms. Video games have only a fraction of a second. As a result, most real-time graphics rely on another technique, called rasterization.

PATH TRACING

Path tracing can be used to solve more complex lighting situations.

Path tracing is a type of ray tracing. When using path tracing for rendering, the rays only produce a single ray per bounce. The rays do not follow a defined line per bounce (to a light, for example), but rather shoot off in a random direction. The path tracing algorithm then takes a random sampling of all of the rays to create the final image. This results in sampling a variety of different types of lighting.

When a ray hits a surface it doesn’t trace a path to every light source, instead it bounces the ray off the surface and keeps bouncing it until it hits a light source or exhausts some bounce limit.

It then calculates the amount of light transferred all the way to the pixel, including any color information gathered from surfaces along the way.

It then averages out the values calculated from all the paths that were traced into the scene to get the final pixel color value.

It requires a ton of computing power, and if you don’t send out enough rays per pixel or don’t trace the paths far enough into the scene, you end up with a very spotty image, as many pixels fail to find any light sources from their rays. So when you increase the samples per pixel, the image quality becomes better and better.
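The averaging step, and why more samples per pixel give a cleaner image, can be sketched with a toy Monte Carlo estimator. There is no real geometry here: the chance of a bounce reaching a light, the surface albedo and the bounce limit are hypothetical numbers standing in for a scene.

```python
import random


def trace_path(rng, hit_light_probability=0.2, albedo=0.7, max_bounces=5):
    """Follow one random path and return the light it carries back to the pixel."""
    throughput = 1.0
    for _ in range(max_bounces):
        if rng.random() < hit_light_probability:
            return throughput  # reached a light of intensity 1.0
        throughput *= albedo  # each surface bounce absorbs some light
    return 0.0  # bounce limit exhausted without finding a light


def render_pixel(samples, seed=0):
    """Average many random paths to estimate the final pixel value."""
    rng = random.Random(seed)
    return sum(trace_path(rng) for _ in range(samples)) / samples


for samples in (4, 64, 1024, 16384):
    print(samples, round(render_pixel(samples), 4))
```

With only a few samples the estimate jumps around from pixel to pixel, which is the spotty noise described above; as the sample count grows it settles towards the analytic value for this toy setup (about 0.43).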

Ray tracing tends to be more efficient than path tracing. Basically, the render time of a ray tracer depends on the number of polygons in the scene. The more polygons you have, the longer it will take.

Meanwhile, the rendering time of a path tracer can be indifferent to the number of polygons, but it is related to light situation: If you add a light, transparency, translucence, or other shader effects, the path tracer will slow down considerably.

blogs.nvidia.com/blog/2018/03/19/whats-difference-between-ray-tracing-rasterization/

https://en.wikipedia.org/wiki/Rasterisation

https://www.quora.com/Whats-the-difference-between-ray-tracing-and-path-tracing

-

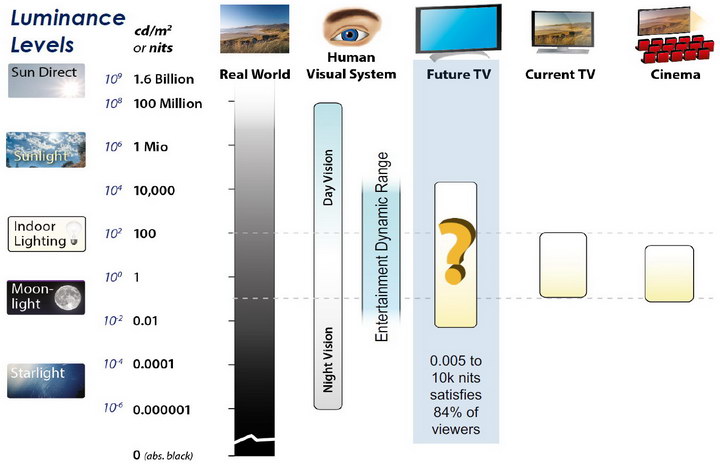

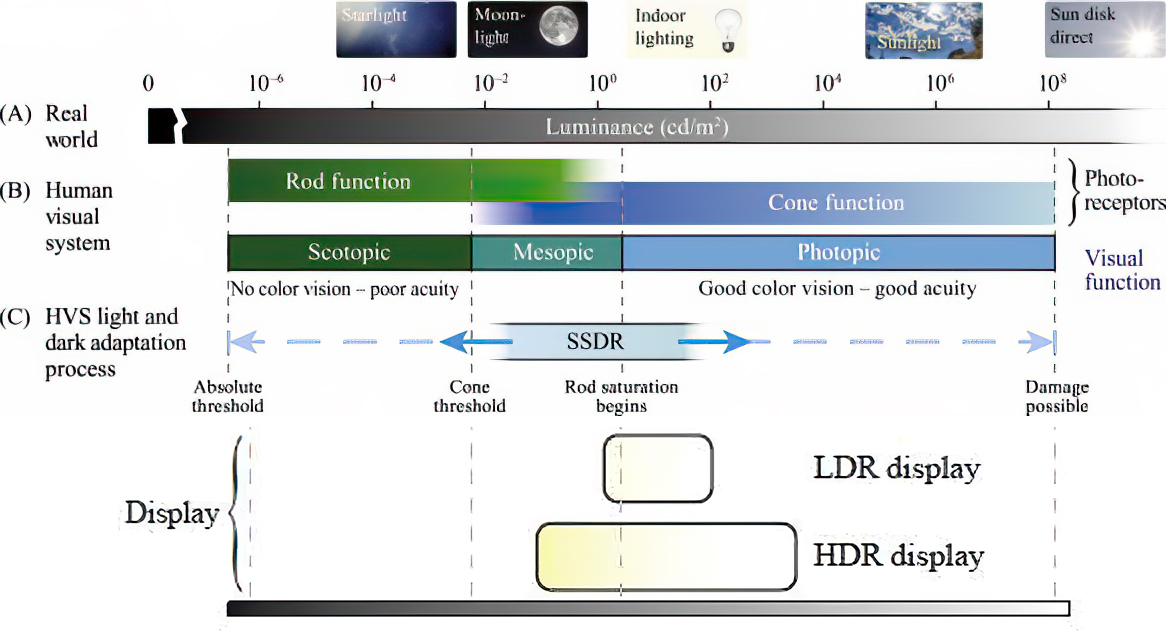

Rec-2020 – TVs new color gamut standard used by Dolby Vision?

https://www.hdrsoft.com/resources/dri.html#bit-depth

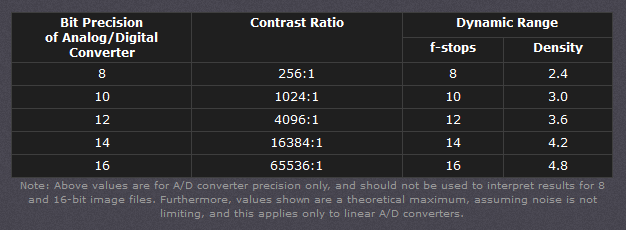

The dynamic range is a ratio between the maximum and minimum values of a physical measurement. Its definition depends on what the dynamic range refers to.

For a scene: Dynamic range is the ratio between the brightest and darkest parts of the scene.

For a camera: Dynamic range is the ratio of saturation to noise. More specifically, the ratio of the intensity that just saturates the camera to the intensity that just lifts the camera response one standard deviation above camera noise.

For a display: Dynamic range is the ratio between the maximum and minimum intensities emitted from the screen.

The Dynamic Range of real-world scenes can be quite high; ratios of 100,000:1 are common in the natural world. An HDR (High Dynamic Range) image stores pixel values that span the whole tonal range of real-world scenes. Therefore, an HDR image is encoded in a format that allows the largest range of values, e.g. floating-point values stored with 32 bits per color channel. Another characteristic of an HDR image is that it stores linear values. This means that the value of a pixel from an HDR image is proportional to the amount of light measured by the camera.
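A contrast ratio is often easier to reason about when expressed in photographic stops, where each stop is a doubling of light. The conversion is a base-2 logarithm; the function name below is just an illustrative choice.

```python
import math


def stops(contrast_ratio):
    """Convert a contrast ratio such as 100000 (for 100,000:1) into stops."""
    return math.log2(contrast_ratio)


print(round(stops(100_000), 1))  # 16.6 stops for a 100,000:1 scene
print(stops(256))  # 8.0 stops for a 256:1 range
```

So a 100,000:1 natural scene spans roughly 16 to 17 stops, about twice the number of stops that a 256:1 range covers.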

For TVs HDR is great, but it’s not the only new TV feature worth discussing.

Wide color gamut, or WCG, is often lumped in with HDR. While they’re often found together, they’re not intrinsically linked. Where HDR is an increase in the dynamic range of the picture (with contrast and brighter highlights in particular), a TV’s wide color gamut coverage refers to how much of the new, larger color gamuts a TV can display.

Wide color gamuts only really matter for HDR video sources like UHD Blu-rays and some streaming video, as only HDR sources are meant to take advantage of the ability to display more colors.

www.cnet.com/how-to/what-is-wide-color-gamut-wcg/

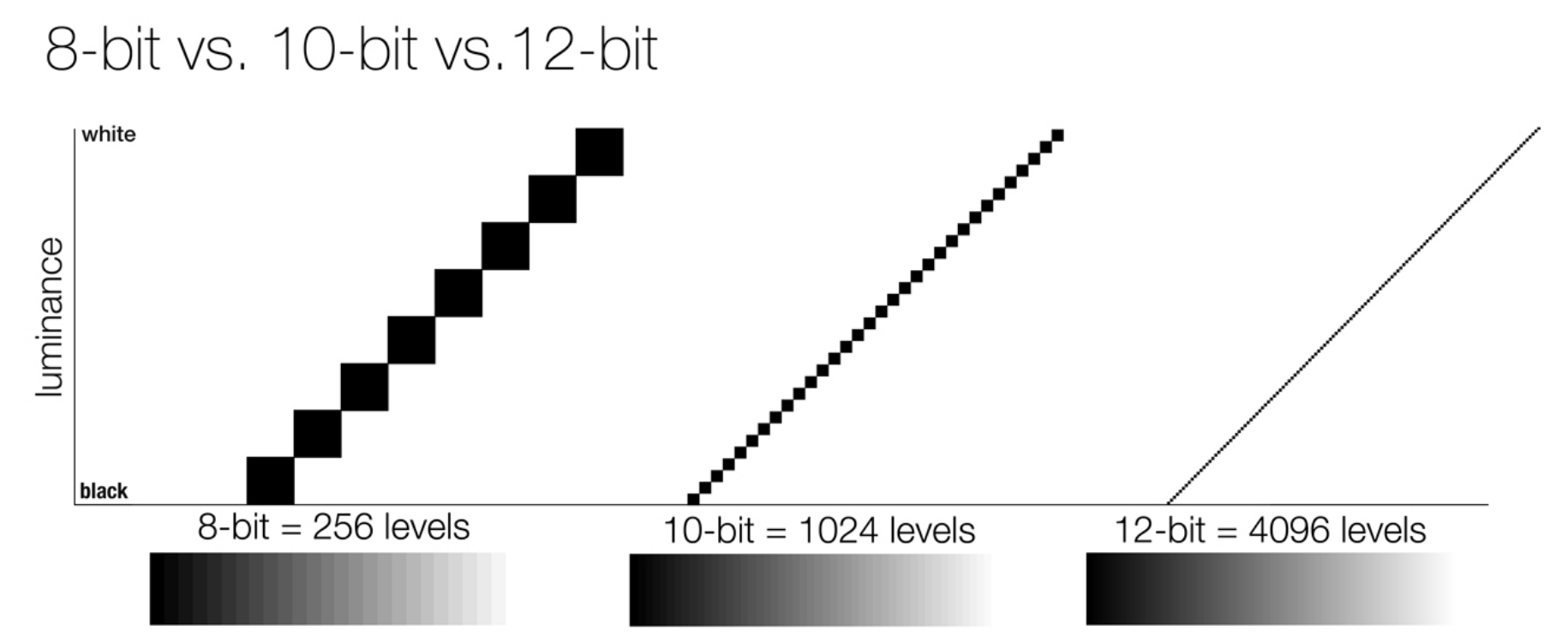

Color depth is only one aspect of color representation, expressing the precision with which the amount of each primary can be expressed through a pixel; the other aspect is how broad a range of colors can be expressed (the gamut)

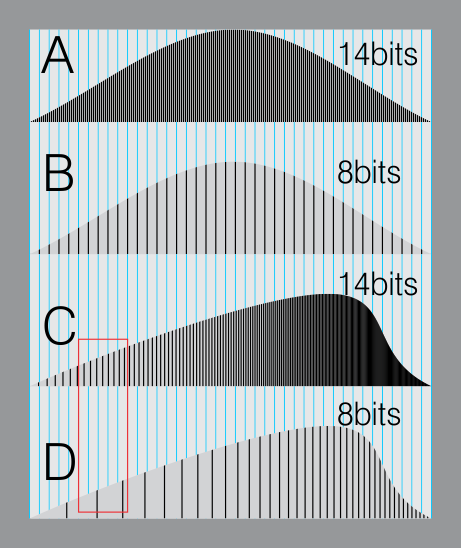

Image rendering bit depth

Wide color gamuts include a greater number of colors than what most current TVs can display, so the greater a TV’s coverage of a wide color gamut, the more colors a TV will be able to reproduce.

When we talk about a color space or color gamut we refer to the range of color values stored in an image. Perceiving these colors also requires a display that has been tuned to reproduce that color profile as well as possible. This is often referred to as a ‘viewer lut’.

So this also usually comes paired with an increase in bit depth, going from the old 8-bit system (256 shades per color, with the potential of over 16.7 million colors: 256 green x 256 blue x 256 red) to 10-bit (1024 shades per color, with access to over a billion colors) or higher, like 12-bit (4096 shades per channel, for over 68 billion colors).
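The shade and color counts quoted above follow directly from powers of two. A minimal sketch of the arithmetic, with hypothetical function names:

```python
def shades_per_channel(bits):
    """Number of distinct levels a single channel can store."""
    return 2 ** bits


def total_colors(bits, channels=3):
    """Number of distinct RGB combinations at a given bit depth per channel."""
    return shades_per_channel(bits) ** channels


for bits in (8, 10, 12):
    print(f"{bits}-bit: {shades_per_channel(bits)} shades, {total_colors(bits):,} colors")
# 8-bit: 256 shades, 16,777,216 colors
# 10-bit: 1024 shades, 1,073,741,824 colors
# 12-bit: 4096 shades, 68,719,476,736 colors
```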

The advantage of higher bit depth is in the ability to bias color with the minimum loss.

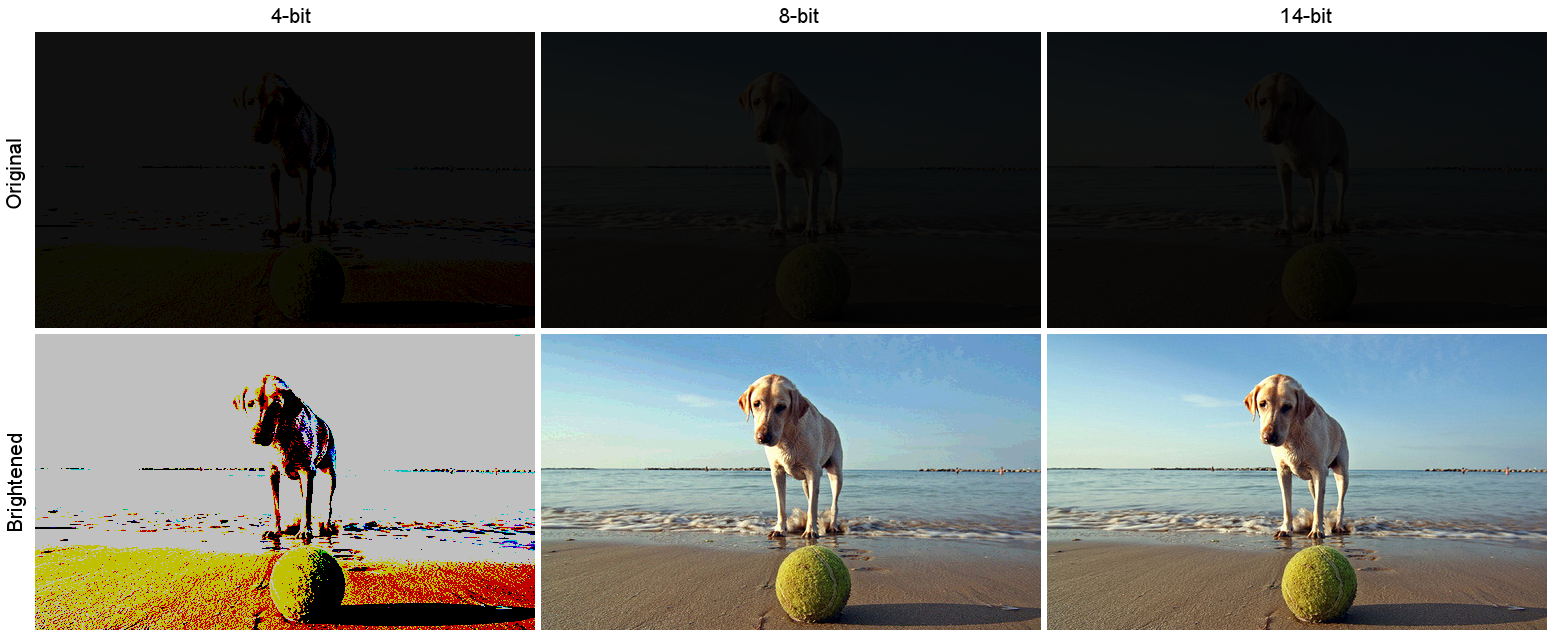

For an extreme example, raising the brightness of a nearly black image holds up better at a higher bit depth, regardless of the reproduction medium, because more data is available at editing time:

https://www.cambridgeincolour.com/tutorials/dynamic-range.htm

https://www.hdrsoft.com/resources/dri.html#bit-depth

Note that the number of bits itself may be a misleading indication of the real dynamic range that the image reproduces — converting a Low Dynamic Range image to a higher bit depth does not change its dynamic range, of course.

- 8-bit images (i.e. 24 bits per pixel for a color image) are considered Low Dynamic Range.

- 16-bit images (i.e. 48 bits per pixel for a color image) resulting from RAW conversion are still considered Low Dynamic Range, even though the range of values they can encode is significantly higher than for 8-bit images (65536 versus 256). Note that converting a RAW file involves applying a tonal curve that compresses the dynamic range of the RAW data so that the converted image shows correctly on low dynamic range monitors. The need to adapt the output image file to the dynamic range of the display is the factor that dictates how much the dynamic range is compressed, not the output bit-depth. By using 16 instead of 8 bits, you will gain precision but you will not gain dynamic range.

- 32-bit images (i.e. 96 bits per pixel for a color image) are considered High Dynamic Range. Unlike 8- and 16-bit images, which can take a finite number of values, 32-bit images are coded using floating point numbers, which means the range of values they can take is effectively unlimited. It is important to note, though, that storing an image in a 32-bit HDR format is a necessary condition for an HDR image but not a sufficient one. When an image comes from a single capture with a standard camera, it will remain a Low Dynamic Range image.

Also note that bit depth and dynamic range are often confused as one, but are indeed separate concepts and there is no direct one to one relationship between them. Bit depth is about capacity, dynamic range is about the actual ratio of data stored.
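The difference between capacity and stored ratio can be shown with a small numeric sketch. Here a hypothetical set of 8-bit pixel codes is promoted to 16-bit; the container gets bigger but the ratio between the brightest and darkest stored values does not change. The function names and sample values are illustrative only.

```python
def dynamic_range(values):
    """Ratio between the brightest and the darkest non-zero stored value."""
    nonzero = [v for v in values if v > 0]
    return max(nonzero) / min(nonzero)


def promote_8_to_16(values):
    """Rescale 8-bit codes (0..255) to 16-bit codes (0..65535)."""
    return [v * 257 for v in values]  # 255 * 257 == 65535


ldr_pixels = [1, 16, 128, 255]  # hypothetical 8-bit pixel codes
promoted = promote_8_to_16(ldr_pixels)

print(dynamic_range(ldr_pixels))  # 255.0
print(dynamic_range(promoted))  # 255.0, same ratio in a larger container
```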

The bit depth of a capturing or displaying device gives you an indication of its dynamic range capacity. That is, the highest dynamic range that the device would be capable of reproducing if all other constraints are eliminated.

https://rawpedia.rawtherapee.com/Bit_Depth

Finally, note that there are two ways to “count” bits for an image — either the number of bits per color channel (BPC) or the number of bits per pixel (BPP). A bit (0,1) is the smallest unit of data stored in a computer.

For a grayscale image, 8-bit means that each pixel can be one of 256 levels of gray (256 is 2 to the power 8).

For an RGB color image, 8-bit means that each one of the three color channels can be one of 256 levels of color.

Since each pixel is represented by 3 colors in this case, 8-bit per color channel actually means 24-bit per pixel.

Similarly, 16-bit for an RGB image means 65,536 levels per color channel and 48-bit per pixel.

To complicate matters, when an image is classified as 16-bit, it just means that it can store a maximum of 65,536 values per channel. It does not necessarily mean that it actually spans that range. If the camera sensors cannot capture more than 12 bits of tonal values, the actual bit depth of the image will be at best 12-bit and probably less because of noise.

The following table attempts to summarize the above for the case of an RGB color image.

| Type of digital support | Bit depth per color channel | Bit depth per pixel | F-stops | Theoretical maximum dynamic range | Reality |
| --- | --- | --- | --- | --- | --- |
| 8-bit | 8 | 24 | 8 | 256:1 | most consumer images |
| 12-bit CCD | 12 | 36 | 12 | 4,096:1 | real maximum limited by noise |
| 14-bit CCD | 14 | 42 | 14 | 16,384:1 | real maximum limited by noise |
| 16-bit TIFF (integer) | 16 | 48 | 16 | 65,536:1 | bit-depth in this case is not directly related to the dynamic range captured |
| 16-bit float EXR | 16 | 48 | 30 | 65,536:1 | values are distributed more closely in the (lower) darker tones than in the (higher) lighter ones, thus allowing for a more accurate description of the tones more significant to humans |
| HDR image (e.g. Radiance format) | 32 | 96 | “infinite” | 4.3 billion:1 | real maximum limited by the captured dynamic range |

On 16-bit float EXR: the range of normalized 16-bit floats can represent thirty stops of information with 1024 steps per stop. We have eighteen and a half stops over middle gray, and eleven and a half below. The denormalized numbers provide an additional ten stops with decreasing precision per stop.

http://download.nvidia.com/developer/GPU_Gems/CD_Image/Image_Processing/OpenEXR/OpenEXR-1.0.6/doc/#recs

32-bit floats are often called “single-precision” floats, and 64-bit floats are often called “double-precision” floats. 16-bit floats therefore are called “half-precision” floats, or just “half floats”.
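The thirty-stop and ten-stop figures for half floats can be checked from the limits of the IEEE 754 half-precision format, which Python's standard `struct` module can pack with the `"e"` format code. This sketch assumes the EXR half type follows that same 16-bit layout (1 sign bit, 5 exponent bits, 10 mantissa bits); the constant and function names are illustrative.

```python
import math
import struct


def roundtrip_half(value):
    """Store a Python float as a 16-bit half float and read it back."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


HALF_MAX = 65504.0  # largest finite half float
HALF_MIN_NORMAL = 2.0 ** -14  # smallest normalized half float
HALF_MIN_SUBNORMAL = 2.0 ** -24  # smallest denormalized half float
STEPS_PER_STOP = 2 ** 10  # 10 mantissa bits give 1024 steps per doubling

normal_stops = math.log2(HALF_MAX / HALF_MIN_NORMAL)
extra_stops = math.log2(HALF_MIN_NORMAL / HALF_MIN_SUBNORMAL)

print(round(normal_stops))  # 30
print(extra_stops)  # 10.0
print(roundtrip_half(0.1))  # 0.0999755859375, the nearest half float to 0.1
```

The round trip of 0.1 shows the limited precision that comes with 1024 steps per stop: the value is stored as the nearest representable half float rather than exactly.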

https://petapixel.com/2018/09/19/8-12-14-vs-16-bit-depth-what-do-you-really-need

On a separate note, even Photoshop does not use the full 16-bit range per channel. Photoshop does actually store 16 bits per channel. However, it treats the 16th bit differently: it is simply added to the value created from the first 15 bits. This is sometimes called 15+1 bits. This means that instead of 2^16 possible values (which would be 65,536 possible values) there are only 2^15+1 possible values (which is 32,768 + 1 = 32,769 possible values).

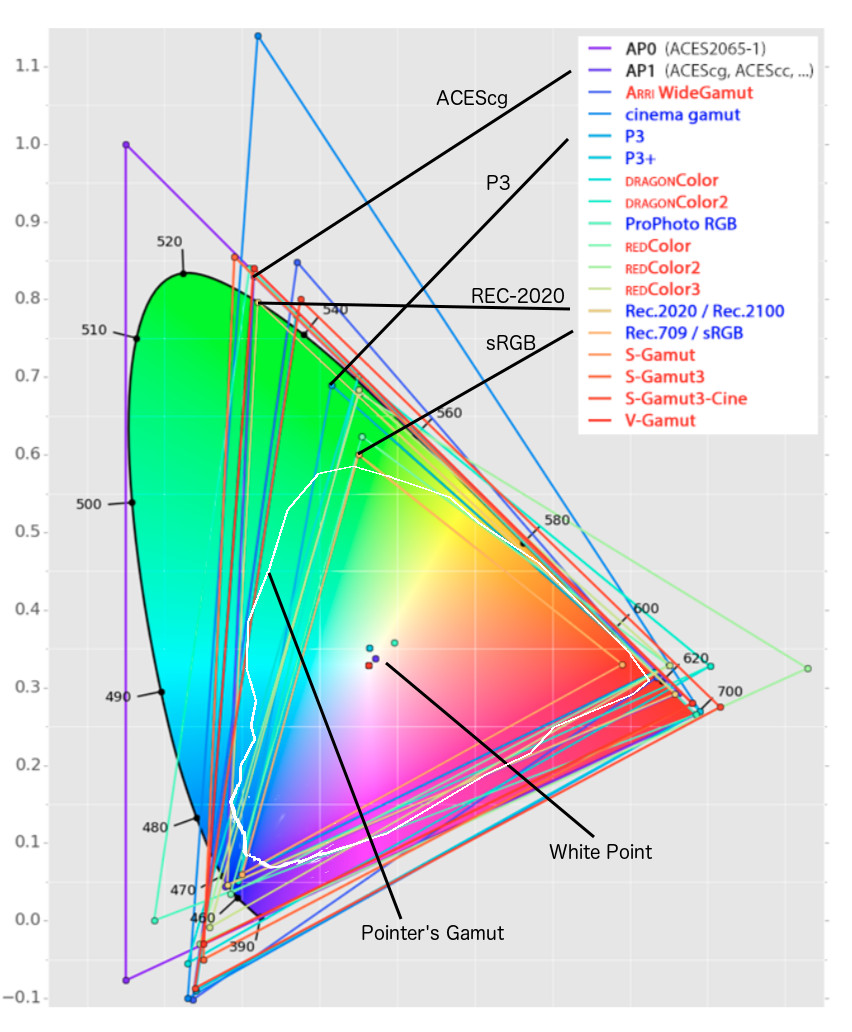

Rec-601 (for the older SDTV format, very similar to Rec-709) and Rec-709 (HDTV’s recommended set of color standards, at times also referred to as sRGB, although the two are not exactly the same) are currently the most widespread color formats and hardware configurations in the world.

Following those you can find the larger P3 gamut, more commonly used in theaters and in digital production houses (with small variations and improvements to color coverage), as well as in most of the best 4K/WCG TVs.

And a new standard, referred to as Rec-2020 or UHDTV, is now being promoted as an alternative to P3.

It is still debatable if this is going to be adopted at consumer level beyond the P3, mainly due to lack of hardware supporting it. But initial tests do prove that it would be a future proof investment.

www.colour-science.org/anders-langlands/

Rec. 2020 is ultimately designed for television, and not cinema. Therefore, it is to be expected that its properties must behave according to current signal processing standards. In this respect, its foundation is based on current HD and SD video signal characteristics.

As far as color bit depth is concerned, it allows for a maximum of 12 bits, which is more than enough for humans.

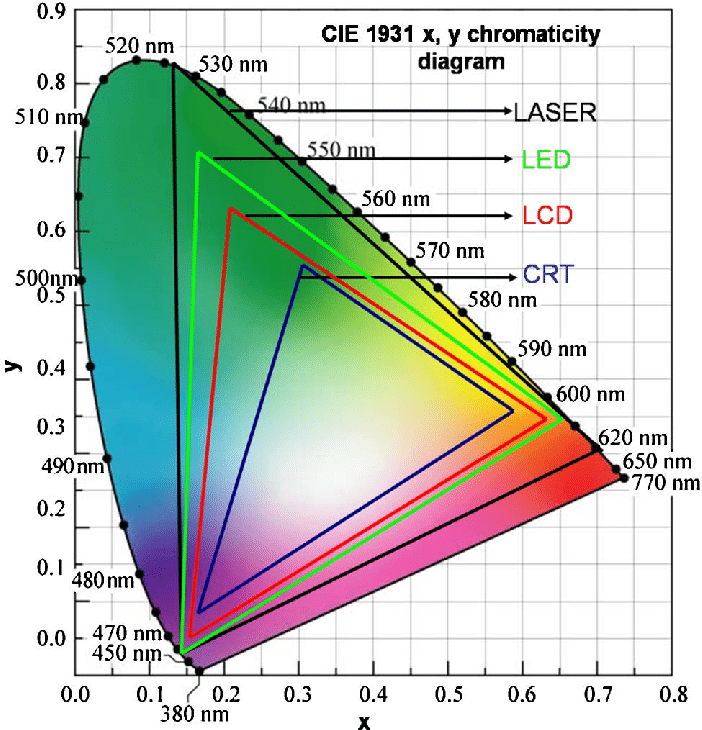

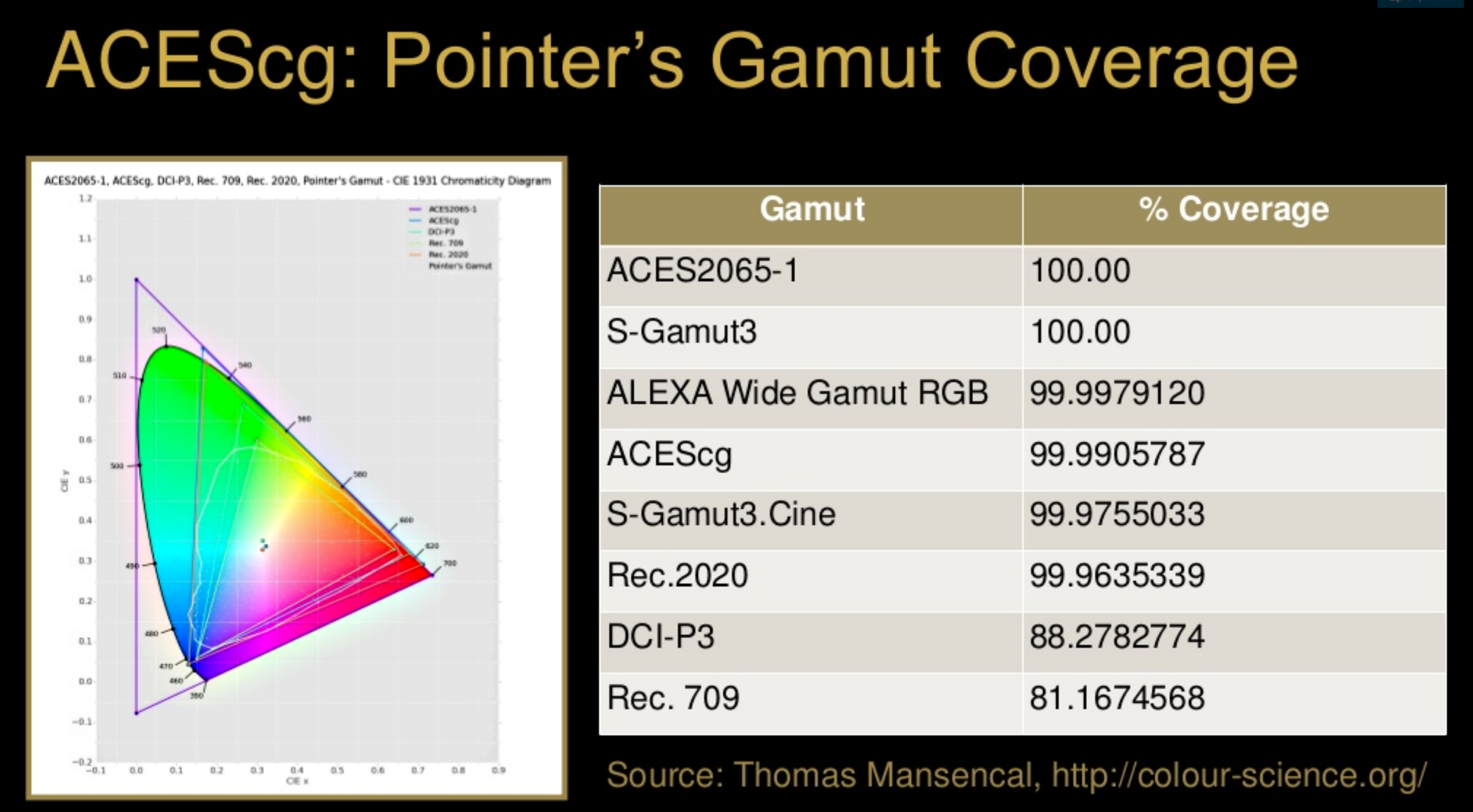

Comparing standards, REC-709 covers 35.9% of the human visible spectrum, P3 covers 45.5%, and REC-2020 covers 75.8%.
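One rough way to compare these standards yourself is to measure the area of each gamut triangle on the CIE 1931 xy chromaticity diagram, using the published red, green and blue primaries. Coverage percentages depend on which diagram and which reference area are used, so this sketch does not try to reproduce the figures quoted above; it only shows the relative ordering of the three triangles. The dictionary and function names are illustrative.

```python
# CIE 1931 xy chromaticities of the red, green and blue primaries.
PRIMARIES = {
    "Rec.709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec.2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(points):
    """Area of a triangle from its three (x, y) corners (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


areas = {name: triangle_area(points) for name, points in PRIMARIES.items()}
for name, area in areas.items():
    share = area / areas["Rec.2020"]
    print(f"{name}: xy area {area:.4f} ({share:.0%} of the Rec.2020 triangle)")
```

Areas in xy space are not perceptually uniform, which is one reason different sources quote different coverage numbers for the same gamut.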

https://www.avsforum.com/forum/166-lcd-flat-panel-displays/2812161-what-color-volume.html

Comparing coverage to hardware devices

Note that all the new standards generally score very high on the Pointer’s Gamut chart, with REC-2020 scoring 99.9% vs P3 at 88.2%.

www.tftcentral.co.uk/articles/pointers_gamut.htm

https://www.slideshare.net/hpduiker/acescg-a-common-color-encoding-for-visual-effects-applications

The Pointer’s gamut is (an approximation of) the gamut of real surface colors as can be seen by the human eye, based on the research by Michael R. Pointer (1980). What this means is that every color that can be reflected by the surface of an object of any material is inside the Pointer’s gamut, which establishes a widely respected target for color reproduction. Visually, Pointer’s gamut represents the colors we see about us in the natural world. Colors outside Pointer’s gamut include those that do not occur naturally, such as neon lights and computer-generated colors possible in animation; the new gamuts partially account for these.

cinepedia.com/picture/color-gamut/

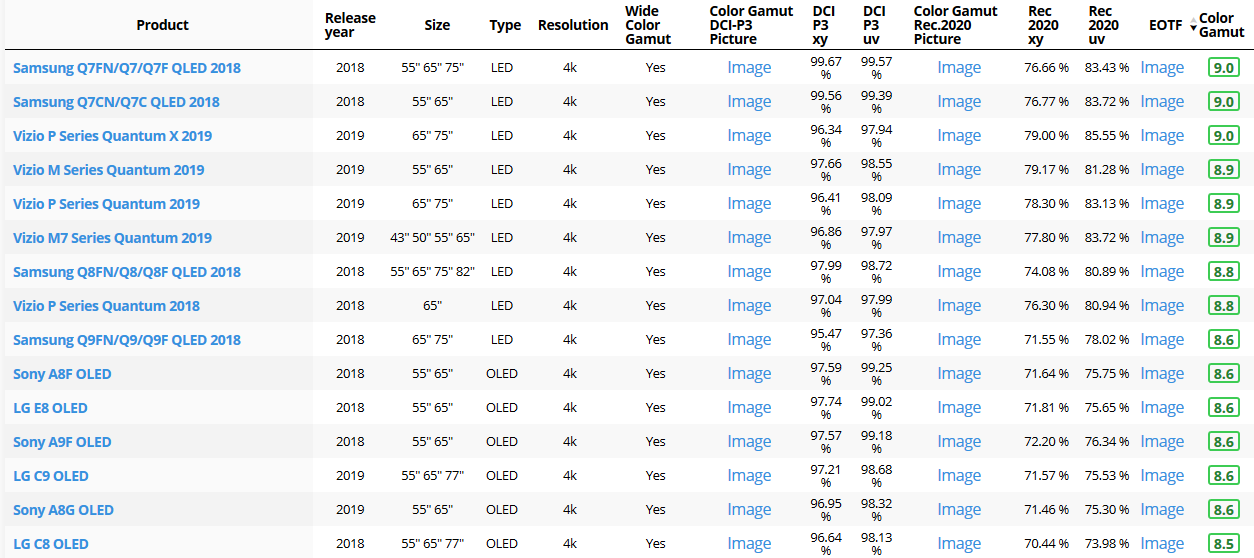

Not all current TVs can support the full spread of the new gamuts. Here is a list of modern TVs’ color coverage in percentage:

www.rtings.com/tv/tests/picture-quality/wide-color-gamut-rec-709-dci-p3-rec-2020

There are no TVs that can come close to displaying all the colors within Rec.2020, and there likely won’t be for at least a few years. However, to help future-proof the technology, Rec.2020 support is already baked into the HDR spec. That means that the same genuine HDR media that fills the DCI P3 space on a compatible TV now, will in a few years also fill Rec.2020 on a TV supporting that larger space.

Rec.2020’s main gains are in the number of new tones of green that it will display, though it also offers improvements to the number of blue and red colors as well. Altogether, Rec.2020 will cover about 75% of the visual spectrum, which is a sizeable increase in coverage even over DCI P3.

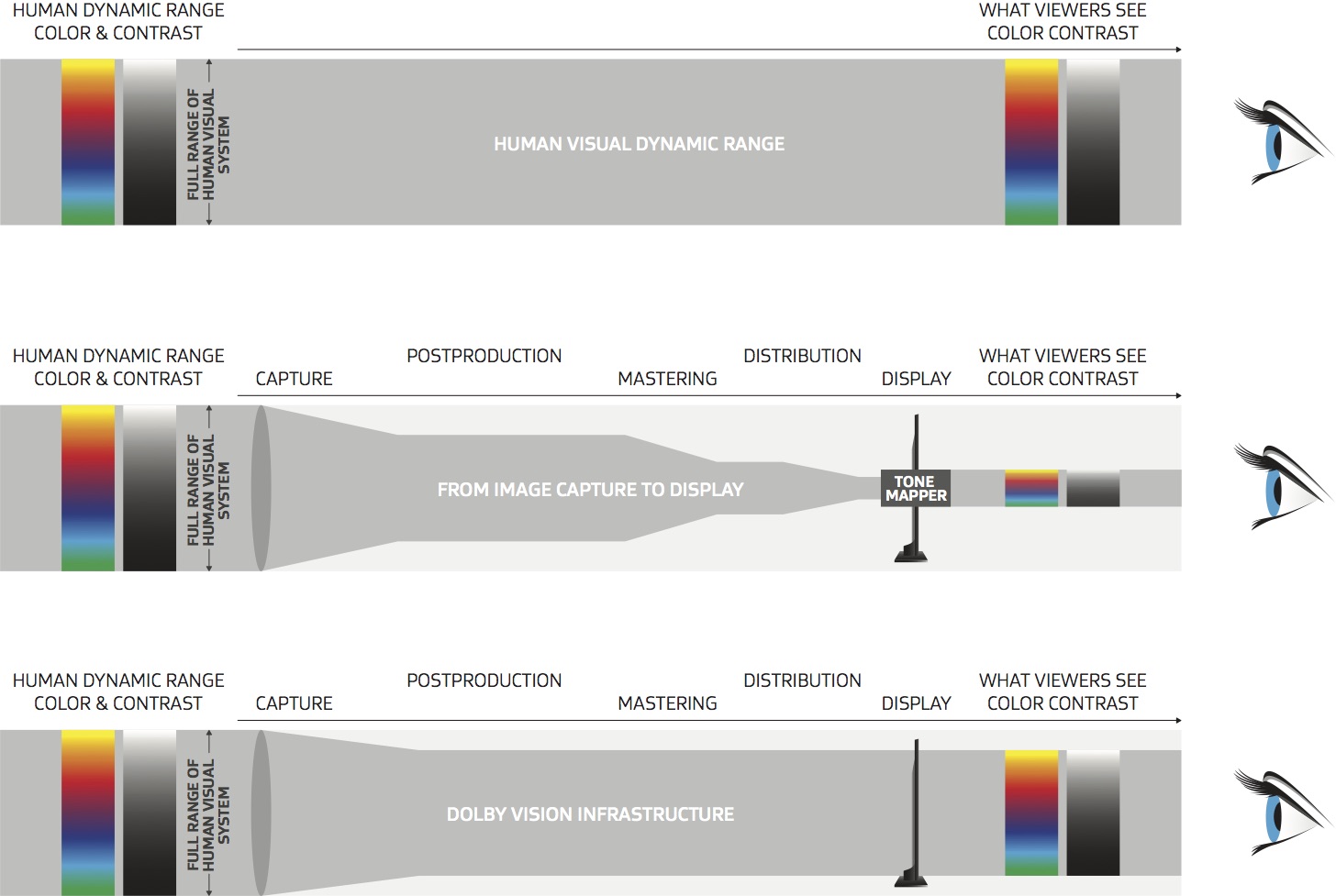

Dolby Vision

https://www.highdefdigest.com/news/show/what-is-dolby-vision/39049

https://www.techhive.com/article/3237232/dolby-vision-vs-hdr10-which-is-best.html

Dolby Vision is a proprietary end-to-end High Dynamic Range (HDR) format that covers content creation and playback through select cinemas, Ultra HD displays, and 4K titles. Like other HDR standards, the process uses expanded brightness to improve contrast between dark and light aspects of an image, bringing out deeper black levels and more realistic details in specular highlights — like the sun reflecting off of an ocean — in specially graded Dolby Vision material.

The iPhone 12 Pro gets the ability to record 4K 10-bit HDR video. According to Apple, it is the very first smartphone that is capable of capturing Dolby Vision HDR.

The iPhone 12 Pro takes two separate exposures and runs them through Apple’s custom image signal processor to create a histogram, which is a graph of the tonal values in each frame. The Dolby Vision metadata is then generated based on that histogram. In layman’s terms, it is essentially doing real-time grading while you are shooting. This is only possible due to the A14 Bionic chip.

Dolby Vision also allows for 12-bit color, as opposed to HDR10’s and HDR10+’s 10-bit color. While no retail TV we’re aware of supports 12-bit color, Dolby claims it can be down-sampled in such a way as to render 10-bit color more accurately.

Resources for more reading:

https://www.avsforum.com/forum/166-lcd-flat-panel-displays/2812161-what-color-volume.html

wolfcrow.com/say-hello-to-rec-2020-the-color-space-of-the-future/

www.cnet.com/news/ultra-hd-tv-color-part-ii-the-future/

-

Unreal Engine 3 official Samaritan real time demo

http://www.gamespot.com/features/nvidias-kepler-gtx-680-powering-the-next-gen-6367388/
