Also see: https://www.pixelsham.com/2015/05/16/how-aperture-shutter-speed-and-iso-affect-your-photos/

In photography, exposure value (EV) is a number that represents a combination of a camera’s shutter speed and f-number, such that all combinations that yield the same exposure have the same EV (for any fixed scene luminance).

The EV concept was developed in an attempt to simplify choosing among combinations of equivalent camera settings. Although all camera settings with the same EV nominally give the same exposure, they do not necessarily give the same picture. EV is also used to indicate an interval on the photographic exposure scale, with 1 EV corresponding to a standard power-of-2 exposure step, commonly referred to as a stop.

EV 0 corresponds to an exposure time of 1 sec and a relative aperture of f/1.0. If the EV is known, it can be used to select combinations of exposure time and f-number.

https://www.streetdirectory.com/travel_guide/141307/photography/exposure_value_ev_and_exposure_compensation.html

Note that EV is not the same as photographic exposure. Photographic exposure is defined as how much light hits the camera’s sensor. It depends on the camera settings, mainly aperture and shutter speed. Exposure value (known as EV) is a number that represents the exposure setting of the camera.

Thus, strictly, EV is not a measure of luminance (indirect or reflected exposure) or illuminance (incidental exposure); rather, an EV corresponds to a luminance (or illuminance) for which a camera with a given ISO speed would use the indicated EV to obtain the nominally correct exposure. Nonetheless, it is common practice among photographic equipment manufacturers to express luminance in EV for ISO 100 speed, as when specifying metering range or autofocus sensitivity.

The exposure depends on two things: how much light gets through the lens to the camera’s sensor and for how long the sensor is exposed. The former is a function of the aperture value while the latter is a function of the shutter speed. Exposure value is a number that represents this potential amount of light that could hit the sensor. It is important to understand that exposure value is a measure of how exposed the sensor is to light and not a measure of how much light actually hits the sensor. The exposure value is independent of how lit the scene is. For example, a given pair of aperture value and shutter speed represents the same exposure value whether the camera is used on a very bright day or on a dark night.

Each exposure value number represents all the possible shutter and aperture settings that result in the same exposure. Although the exposure value is the same for different combinations of aperture values and shutter speeds the resulting photo can be very different (the aperture controls the depth of field while shutter speed controls how much motion is captured).

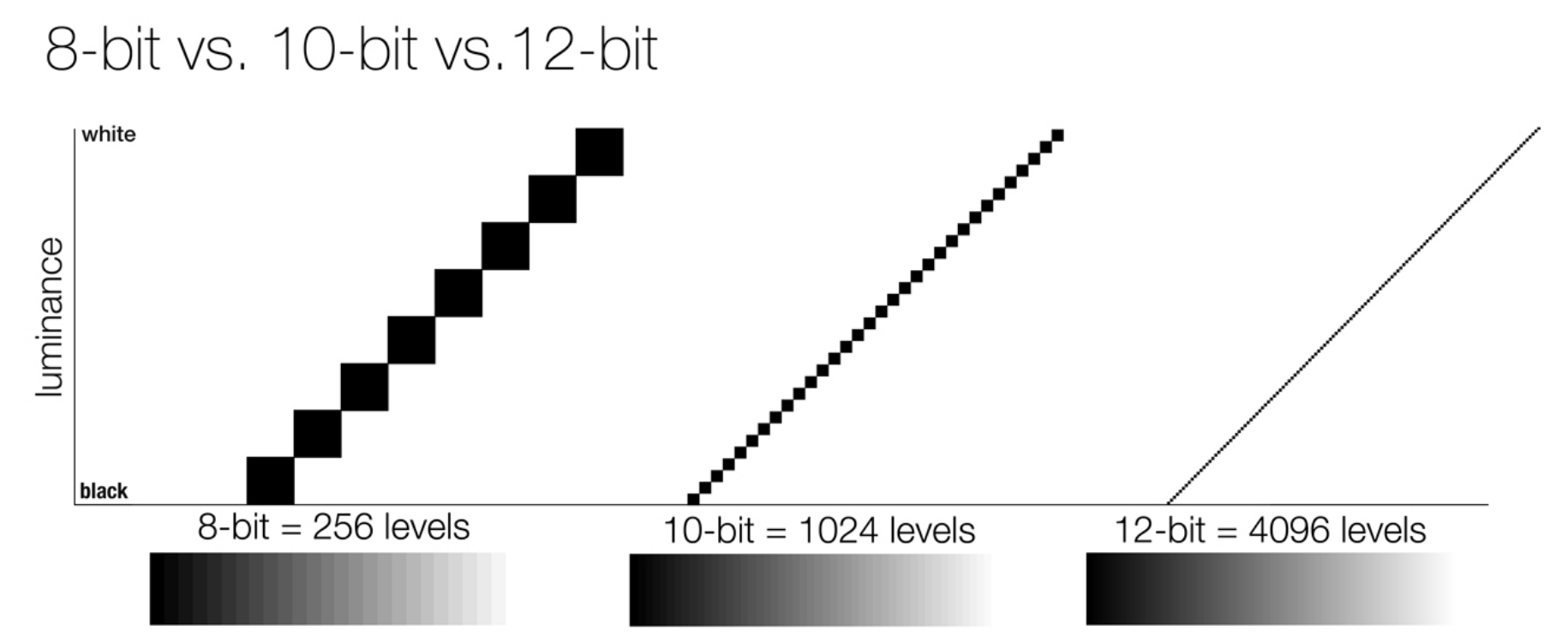

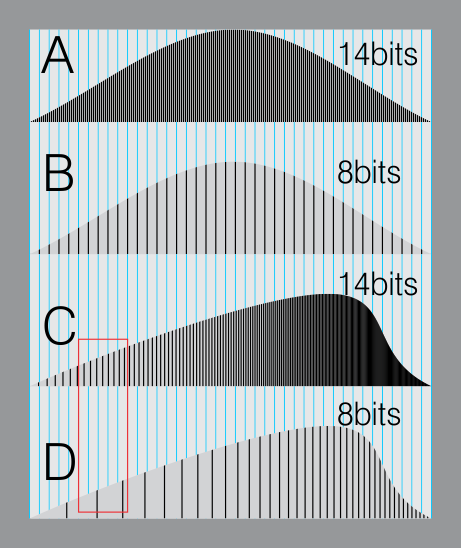

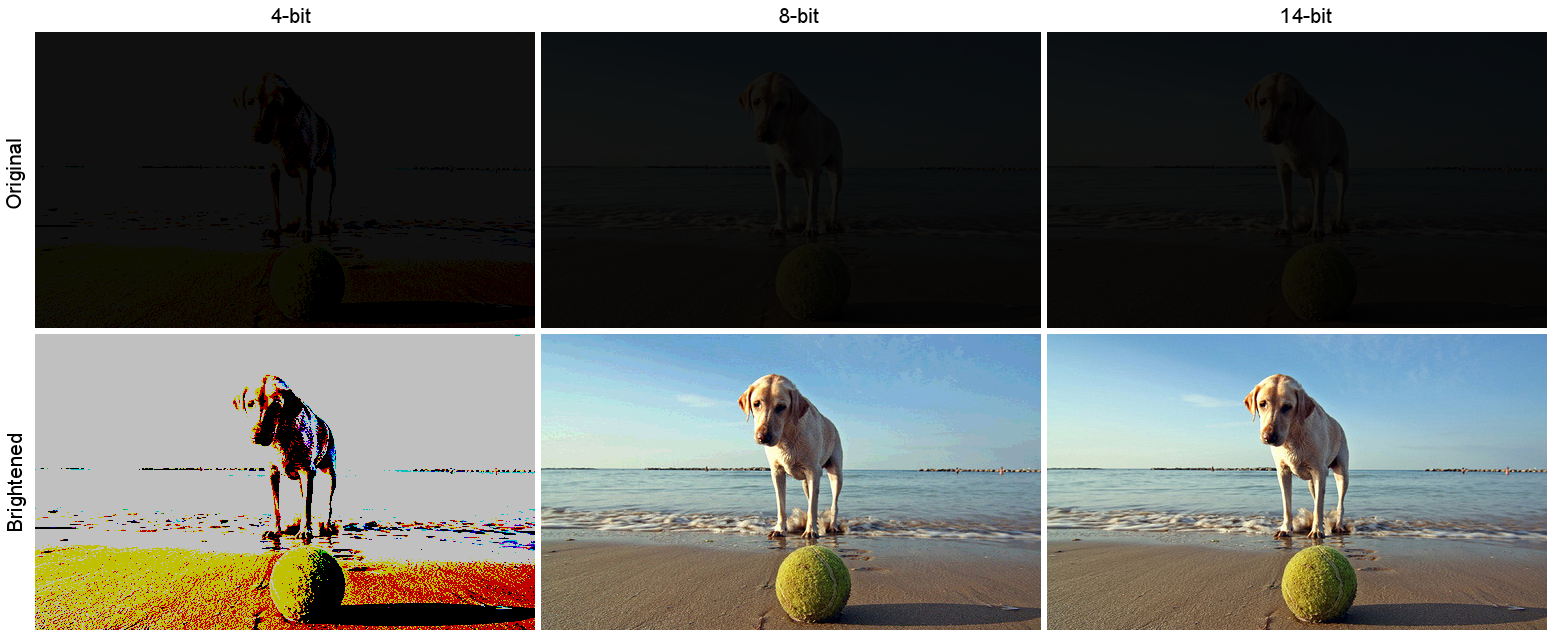

EV 0.0 is defined as the exposure when setting the aperture to f-number 1.0 and the shutter speed to 1 second. All other exposure values are relative to that number. Exposure values are on a base two logarithmic scale. This means that every single step of EV – plus or minus 1 – represents the exposure (actual light that hits the sensor) being halved or doubled.

https://www.streetdirectory.com/travel_guide/141307/photography/exposure_value_ev_and_exposure_compensation.html

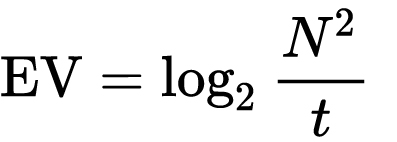

Formula

https://en.wikipedia.org/wiki/Exposure_value

https://www.scantips.com/lights/math.html

$EV = \log_2 \frac{N^2}{t}$

which means $2^{EV} = \frac{N^2}{t}$

where

- N is the relative aperture (f-number). Important: f/stop values must first be squared in most calculations

- t is the exposure time (shutter speed) in seconds

EV 0 corresponds to an exposure time of 1 sec and an aperture of f/1.0.

Example: If f/16 and 1/4 second, then this is:

(N² / t) = (16 × 16 ÷ 1/4) = (16 × 16 × 4) = 1024.

Log₂(1024) is EV 10. Meaning, $2^{10} = 1024$.
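The worked example above can be checked with a few lines of Python. This is only a minimal sketch of the formula EV = log₂(N² / t); the function names `exposure_value` and `shutter_for_ev` are made up for illustration and do not come from any camera library.

```python
import math


def exposure_value(f_number: float, shutter_seconds: float) -> float:
    """EV = log2(N^2 / t) for f-number N and exposure time t in seconds."""
    return math.log2(f_number ** 2 / shutter_seconds)


def shutter_for_ev(ev: float, f_number: float) -> float:
    """Solve 2^EV = N^2 / t for t: the exposure time that keeps the same EV."""
    return f_number ** 2 / 2 ** ev


# The f/16 at 1/4 second example from the text.
print(exposure_value(16, 1 / 4))  # 10.0

# Other aperture / shutter pairs that land on the same EV 10.
for n in (4, 8, 16):
    print(f"f/{n}: {shutter_for_ev(10, n):.6f} s")
```

For EV 10 the loop gives 1/64 s at f/4, 1/16 s at f/8 and 1/4 s at f/16, which is the "same EV, different picture" trade-off described earlier.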

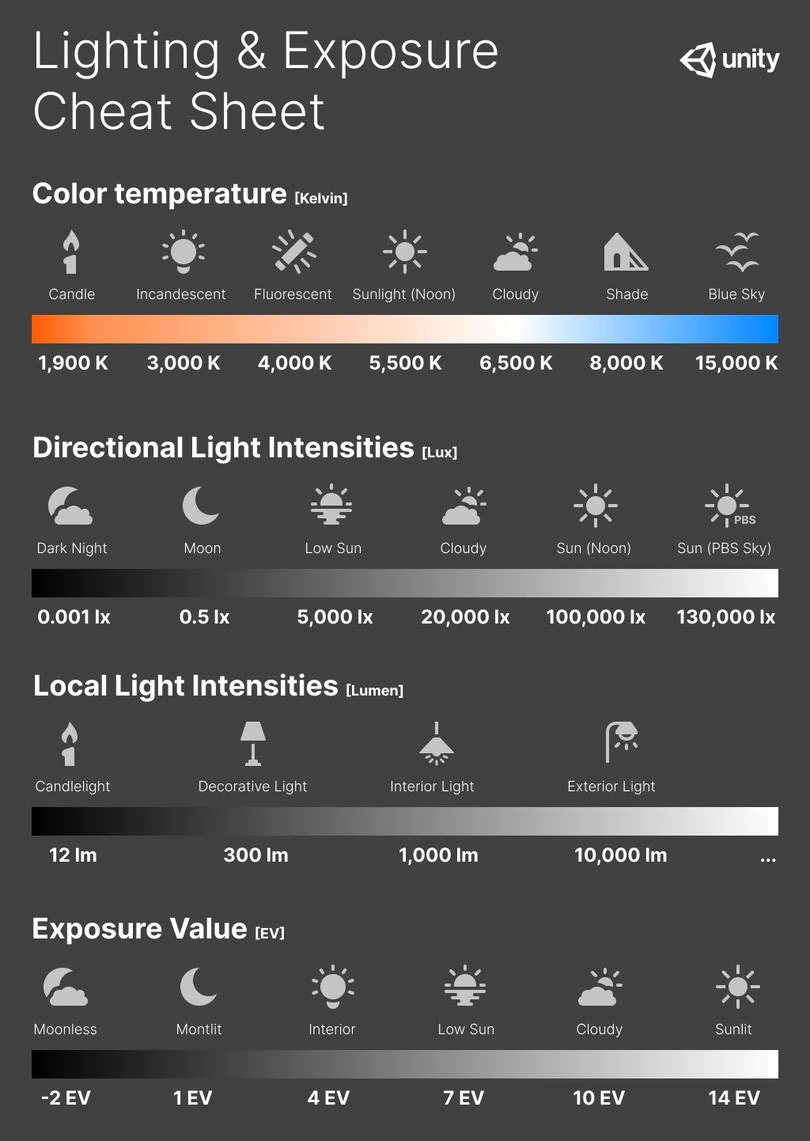

Collecting photographic exposure using Light Meters

https://photo.stackexchange.com/questions/968/how-can-i-correctly-measure-light-using-a-built-in-camera-meter

The exposure meter in the camera does not know whether the subject itself is bright or not. It simply measures the amount of light that comes in, and makes a guess based on that. The camera will aim for 18% gray, meaning that if you take a photo of an entirely white surface and another of an entirely black surface, you should get two identical images which are both gray (at least in theory).

https://en.wikipedia.org/wiki/Light_meter

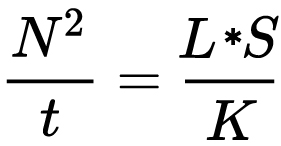

For reflected-light meters, camera settings are related to ISO speed and subject luminance by the reflected-light exposure equation:

$$\frac{N^2}{t} = \frac{L S}{K}$$

where

- N is the relative aperture (f-number)

- t is the exposure time (“shutter speed”) in seconds

- L is the average scene luminance

- S is the ISO arithmetic speed

- K is the reflected-light meter calibration constant

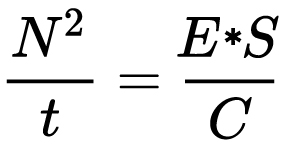

For incident-light meters, camera settings are related to ISO speed and subject illuminance by the incident-light exposure equation:

$$\frac{N^2}{t} = \frac{E S}{C}$$

where

- E is the illuminance (in lux)

- C is the incident-light meter calibration constant

Two values for K are in common use: 12.5 (Canon, Nikon, and Sekonic) and 14 (Minolta, Kenko, and Pentax); the difference between the two values is approximately 1/6 EV.

For C a value of 250 is commonly used.

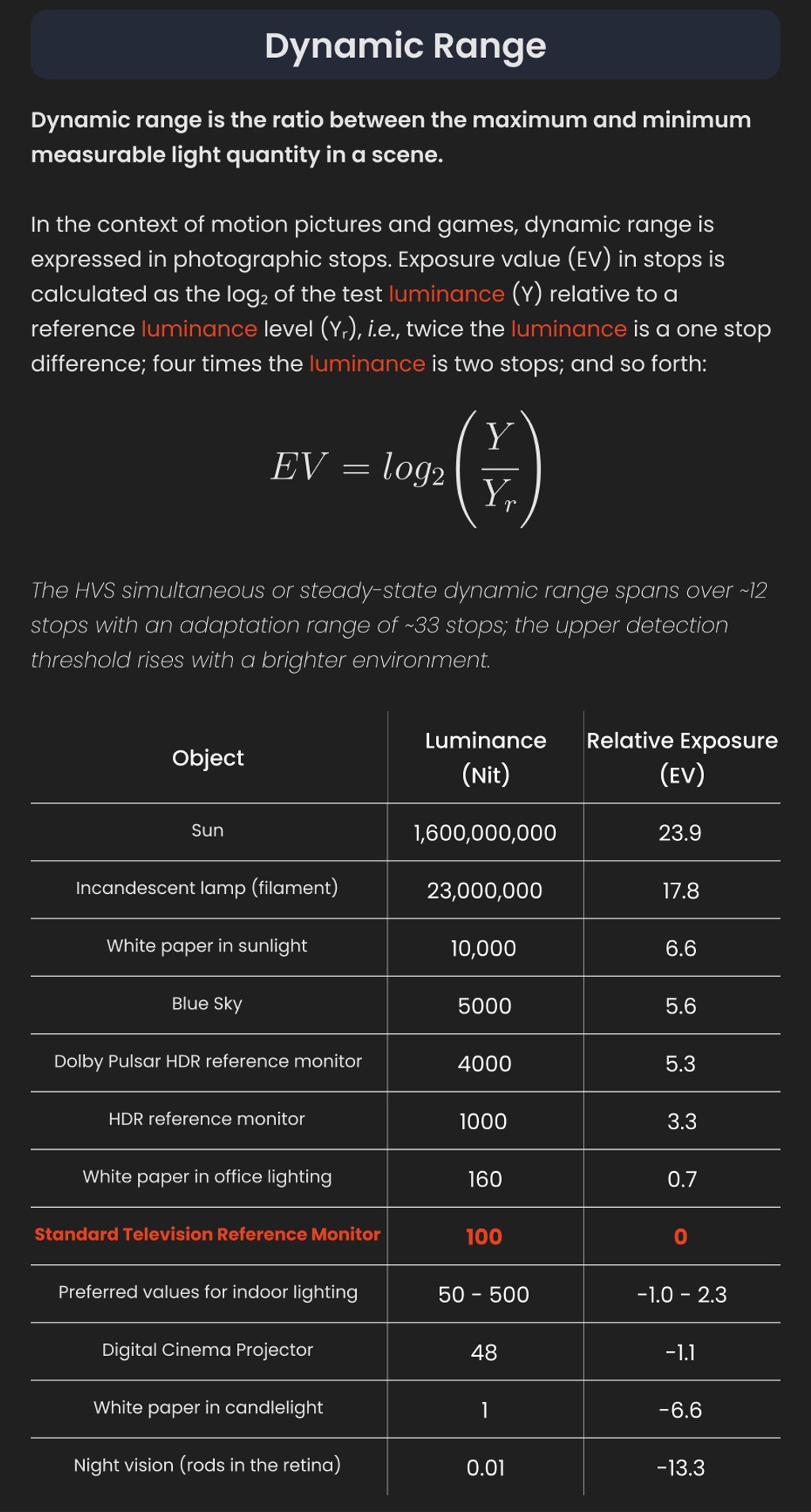

Nonetheless, it is common practice among photographic equipment manufacturers to also express luminance in EV for ISO 100 speed. Using K = 12.5, the relationship between EV at ISO 100 and luminance L (in cd/m²) is then:

$L = 2^{EV - 3}$

The situation with incident-light meters is more complicated than that for reflected-light meters, because the calibration constant C depends on the sensor type. Illuminance is measured with a flat sensor; a typical value for C is 250 with illuminance in lux. Using C = 250, the relationship between EV at ISO 100 and illuminance E is then:

$E = 2.5 \times 2^{EV}$
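Both ISO 100 shortcuts follow from the meter equations by setting N² / t = 2^EV and solving for L or E. The sketch below shows that arithmetic, assuming the calibration constants quoted above (K = 12.5 or 14, C = 250); the function names are illustrative only.

```python
import math


def luminance_from_ev(ev: float, iso: float = 100.0, k: float = 12.5) -> float:
    """Reflected light: 2^EV = L * S / K, so L = 2^EV * K / S (cd/m^2)."""
    return 2 ** ev * k / iso


def illuminance_from_ev(ev: float, iso: float = 100.0, c: float = 250.0) -> float:
    """Incident light: 2^EV = E * S / C, so E = 2^EV * C / S (lux)."""
    return 2 ** ev * c / iso


def k_difference_in_ev(k_a: float = 12.5, k_b: float = 14.0) -> float:
    """Exposure difference, in EV, between two reflected-light constants."""
    return math.log2(k_b / k_a)


print(luminance_from_ev(3))            # 1.0, matching L = 2^(EV - 3)
print(illuminance_from_ev(0))          # 2.5, matching E = 2.5 * 2^EV
print(round(k_difference_in_ev(), 3))  # 0.163, roughly 1/6 EV
```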

https://nofilmschool.com/2018/03/want-easier-and-faster-way-calculate-exposure-formula

Three basic factors go into the exposure formula: aperture, shutter speed, and ISO, plus a light meter calibration constant.

f-stop²/shutter (in seconds) = lux * ISO/C

If you know four of the five variables in that formula, you’ll be able to calculate the missing value.

So, say you want to figure out how much light you’re going to need in order to shoot at a certain f-stop. Well, all you do is plug your values (you should know the f-stop, shutter speed, ISO, and your light meter calibration constant) into the formula below:

lux = C (f-stop²/shutter (in seconds))/ISO
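As a rough illustration, the rearranged formula can be wrapped in a small helper. The name `required_lux` and the example settings (f/4, 1/50 s, ISO 800) are assumptions chosen for the sketch, and C defaults to the commonly used value of 250.

```python
def required_lux(f_stop: float, shutter_seconds: float, iso: float,
                 c: float = 250.0) -> float:
    """lux = C * (f_stop^2 / shutter) / ISO, the incident-light equation solved for lux."""
    return c * (f_stop ** 2 / shutter_seconds) / iso


# Hypothetical settings: f/4 at 1/50 s and ISO 800 need roughly 250 lux.
print(round(required_lux(4, 1 / 50, 800)))

# Closing down one stop to f/5.6 roughly doubles the light required.
print(round(required_lux(5.6, 1 / 50, 800)))
```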

Exposure Value Calculator:

https://snapheadshots.com/resources/exposure-and-light-calculator

https://www.scantips.com/lights/exposurecalc.html

https://www.pointsinfocus.com/tools/exposure-settings-ev-calculator/

From that perspective, an exposure stop is a measurement of exposure and provides a universal scale, linear in stops, to measure the increase and decrease in light reaching the image sensor due to changes in shutter speed, ISO and f-stop.

±1 stop is a doubling or halving of the amount of light let in when taking a photo.

1 EV is just another way to say one stop of exposure change.

One major use of EV (Exposure Value) is just to measure any change of exposure, where one EV implies a change of one stop of exposure, as when we apply exposure compensation in the camera.

If the picture comes out too dark, with manual exposure we could correct the next one by directly adjusting one of the three exposure controls (f/stop, shutter speed, or ISO). Or, if using camera automation, the camera meter is controlling the exposure, but we might apply +1 EV exposure compensation (or +1 EV flash compensation) to make the result brighter, as desired. This use of 1 EV is just another way to say one stop of exposure change.

On a perfect day, the difference between sampling the sky and sampling the sun with diffusing spot meters is about 3.2 EV.

~15.4 EV for the sun

~12.2 EV for the sky

That is a ballpark figure. It is still influenced by surroundings, accuracy parameters, the FOV of the sensor…
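Because each EV is a factor of two, a difference in EV converts to a light ratio as 2 raised to that difference. The snippet below is a minimal sketch of that conversion using the ballpark sun and sky readings above; `light_ratio` is an illustrative name.

```python
def light_ratio(ev_difference: float) -> float:
    """Each EV (stop) is a factor of two, so the ratio is 2^difference."""
    return 2 ** ev_difference


sun_ev, sky_ev = 15.4, 12.2
ratio = light_ratio(sun_ev - sky_ev)
print(f"{sun_ev - sky_ev:.1f} EV difference is about {ratio:.1f}x more light")
```

With these readings the sun sample comes out at a little over nine times the sky sample.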

EV calculator

https://www.scantips.com/lights/evchart.html#calc

http://www.fredparker.com/ultexp1.htm

Exposure value is basically used to indicate an interval on the photographic exposure scale, with a difference of 1 EV corresponding to a standard power-of-2 exposure step, also commonly referred to as a “stop”.

https://contrastly.com/a-guide-to-understanding-exposure-value-ev/

Retrieving photographic exposure from an image

All you can hope to measure with your camera and some images is the relative reflected luminance, even if you have the camera settings. https://en.wikipedia.org/wiki/Relative_luminance

If you REALLY want to know the amount of light in absolute radiometric units, you’re going to need to use some kind of absolute light meter or measured light source to calibrate your camera. For references on how to do this, see: Section 2.5 Obtaining Absolute Radiance from http://www.pauldebevec.com/Research/HDR/debevec-siggraph97.pdf

If you are still trying to gauge relative brightness, the level of the sun in Nuke can vary, but it should be in the tens of thousands, i.e. between 30,000 and 65,000 RGB value depending on the time of day, season and atmospherics.

The value for a 12 o’clock sun, with the sun sampled at EV 15.5 (shutter 1/30, ISO 100, f/22), is a maximum RGB value of 32,000 (or 32,000 pixel luminance).

The thing to keep an eye on is the level of contrast between the sunny side and the fill side. The terminator should be quite obvious; there can be up to 3 stops of difference between fill and key on sunlit objects.

Note: In Foundry’s Nuke, the software will map 18% gray to whatever your center f/stop is set to in the viewer settings (f/8 by default… change that to EV by following the instructions below).

You can experiment with this by attaching an Exposure node to a Constant set to 0.18, setting your viewer read-out to Spotmeter, and adjusting the stops in the node up and down. You will see that a full stop up or down will give you the respective next value on the aperture scale (f8, f11, f16 etc.).

One stop doubles or halves the amount of light that hits the filmback/CCD, so everything works in powers of 2.

So starting with 0.18 in your constant, you will see that raising it by a stop will give you .36 as a floating point number (in linear space), while your f/stop will be f/11 and so on.
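The same experiment can be reproduced as plain arithmetic outside Nuke. This sketch does not use Nuke's API; it only assumes that one stop doubles the linear value and multiplies the f-number by the square root of 2, with f/8 taken as the center stop from the note above.

```python
import math

MIDDLE_GRAY = 0.18  # linear value of the 18% gray constant


def apply_stops(value: float, stops: float) -> float:
    """Each stop doubles (or halves) a linear pixel value."""
    return value * 2 ** stops


def f_number_after_stops(center_f_number: float, stops: float) -> float:
    """Each stop multiplies the f-number by sqrt(2)."""
    return center_f_number * math.sqrt(2) ** stops


for stops in range(0, 4):
    value = apply_stops(MIDDLE_GRAY, stops)
    f_number = f_number_after_stops(8, stops)
    print(f"{stops:+d} stops: value {value:.2f}, f/{f_number:.1f}")
```

The computed f-numbers (8.0, 11.3, 16.0, 22.6) are the exact values behind the rounded markings f/8, f/11, f/16 and f/22.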

If you set your center stop to 0 (see below) you will get a relative readout in EVs, where EV 0 again equals 18% constant gray.

Note: make sure to set your Nuke read node to ‘raw data’

In other words, setting the center f-stop to 0 means that in a neutral plate, the middle gray in the Macbeth chart will equal exposure value 0. EV 0 corresponds to an exposure time of 1 sec and an aperture of f/1.0.

To switch the SpotMeter in Foundry’s Nuke to return the EV of an image, click on the main viewport and then press s; this opens the viewer’s properties. Now set the center f-stop to 0 in there, and the SpotMeter in the viewport will change from aperture and f-stops to EV.

If you are trying to gauge the EV from the pixel luminance in the image:

– Setting the center f-stop to 0 means that in a neutral plate, the middle 18% gray will equal exposure value 0.

– So if EV 0 = 0.18 middle gray in Nuke, which equals a pixel luminance of 0.18, each doubling of that value adds 1 EV.

.18 pixel luminance = 0EV

.36 pixel luminance = 1EV

.72 pixel luminance = 2EV

1.44 pixel luminance = 3EV

...
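The list above is the base-2 logarithm of the pixel luminance relative to middle gray. A minimal sketch, assuming linear (raw) pixel values and 0.18 as the EV 0 reference; the function name `relative_ev` is illustrative:

```python
import math


def relative_ev(pixel_luminance: float, middle_gray: float = 0.18) -> float:
    """Relative EV of a linear pixel value, with middle gray at EV 0."""
    return math.log2(pixel_luminance / middle_gray)


for luminance in (0.18, 0.36, 0.72, 1.44):
    print(f"{luminance:.2f} pixel luminance = {relative_ev(luminance):.2f} EV")
```

Values below 0.18 give negative EVs, for example 0.09 is one stop under middle gray.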

This is a Geometric Progression function: $x_n = a r^{n-1}$

The most basic example of this function is 1,2,4,8,16,32,… The sequence starts at 1 and doubles each time, so

- a=1 (the first term)

- r=2 (the “common ratio” between terms is a doubling)

And we get:

$\{a, ar, ar^2, ar^3, \dots\}$

$= \{1, 1 \times 2, 1 \times 2^2, 1 \times 2^3, \dots\}$

$= \{1, 2, 4, 8, \dots\}$

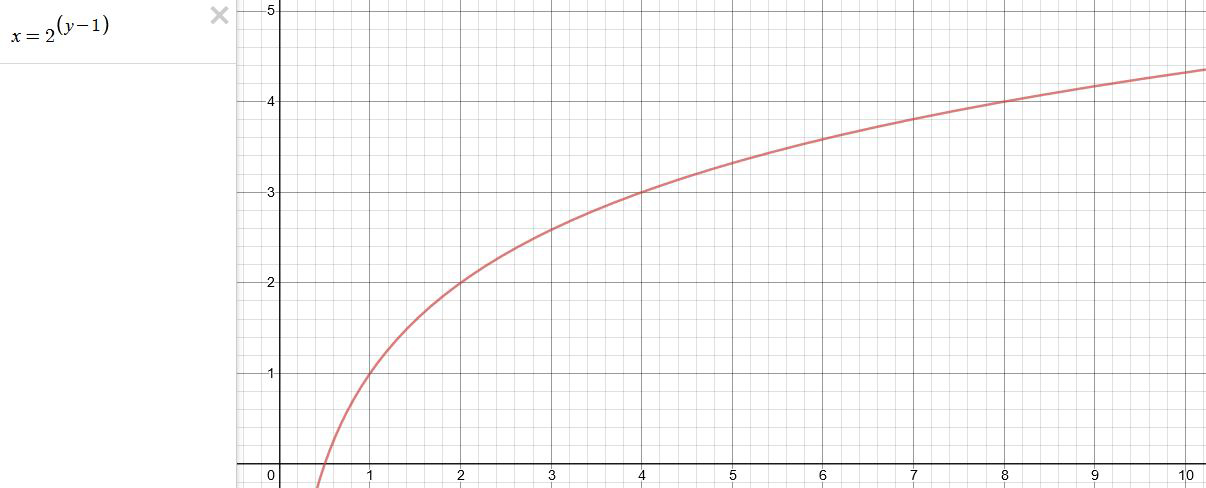

In this example the function translates to: $x_n = 2^{n-1}$

You can graph this curve through this expression: $x = 2^{y-1}$

You can go back and forth between the two values through a geometric progression function and a log function:

(Note: in a spreadsheet this is =POWER(2, cell# - 1) and =LOG(cell#, 2) + 1; some locales use ; instead of , as the argument separator.)

| $x = 2^{y-1}$ | $y = \log_2(x) + 1$ |
|---|---|
| 1 | 1 |
| 2 | 2 |
| 4 | 3 |
| 8 | 4 |
| 16 | 5 |
| 32 | 6 |
| 64 | 7 |
| 128 | 8 |
| 256 | 9 |
| 512 | 10 |
| 1024 | 11 |
| 2048 | 12 |
| 4096 | 13 |
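The two columns of the table can be regenerated with a short script. This is a sketch of the a = 1, r = 2 case only; `term` and `index` are illustrative names.

```python
import math


def term(n: int) -> int:
    """n-th term of the progression 1, 2, 4, 8, ...: x = 2^(n - 1)."""
    return 2 ** (n - 1)


def index(x: float) -> float:
    """Inverse of term(): y = log2(x) + 1."""
    return math.log2(x) + 1


# Round-trip every row of the table: y -> x -> y.
for y in range(1, 14):
    x = term(y)
    print(f"{x:5d}  {index(x):4.0f}")
```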

Translating this into a geometric progression between an image pixel luminance and EV:
