-

Shanghai-based StepFun – Open source Step-Video-T2V

https://huggingface.co/stepfun-ai/stepvideo-t2v

The model generates videos up to 204 frames, using a high-compression Video-VAE (16×16 spatial, 8× temporal). It processes English and Chinese prompts via bilingual text encoders. A 3D full-attention DiT, trained with Flow Matching, denoises latent frames conditioned on text and timesteps. Video-based DPO (Direct Preference Optimization) further reduces artifacts, enhancing realism and smoothness.
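To give a rough feel for what those compression factors mean, the sketch below estimates the latent grid size for a clip. The function name `latent_shape`, the use of ceiling division, and the 544×992 frame size are assumptions made for illustration; the actual Video-VAE may pad or handle boundary frames differently.

```python
import math

def latent_shape(frames, height, width, spatial=16, temporal=8):
    """Estimate (latent_frames, latent_height, latent_width).

    Assumes simple ceiling division by the compression factors;
    the real Video-VAE may treat padding or the first frame differently.
    """
    return (
        math.ceil(frames / temporal),
        math.ceil(height / spatial),
        math.ceil(width / spatial),
    )

# Hypothetical 204-frame clip at 544x992 pixels (resolution is an assumption)
print(latent_shape(204, 544, 992))  # (26, 34, 62)
```

Under these assumptions the DiT attends over roughly 26 × 34 × 62 latent positions rather than 204 full-resolution frames, which is what makes 3D full attention tractable.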

-

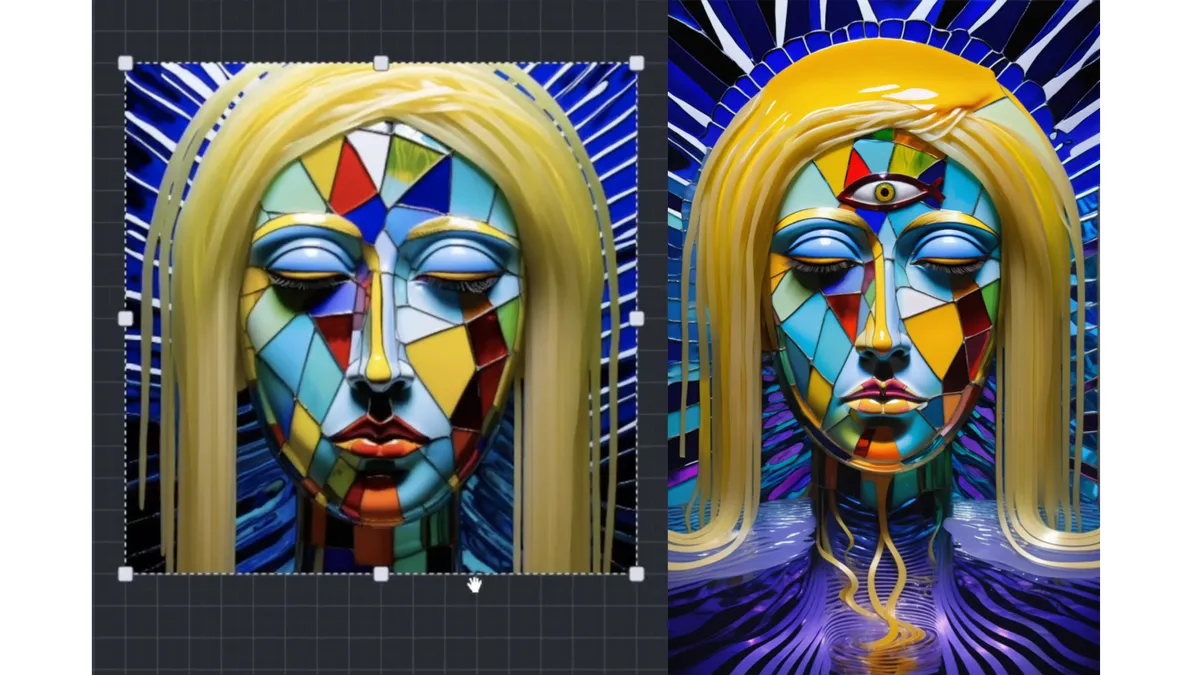

InvokeAI Got a Copyright for an Image Made Entirely With AI. Here’s How

-

Micro LED displays

Micro LED displays are a cutting-edge technology that promises significant improvements over existing display methods like OLED and LCD. By using tiny, individual LEDs for each pixel, these displays can deliver exceptional brightness, contrast, and energy efficiency. Their inherent durability and superior performance make them an attractive option for high-end consumer electronics, wearable devices, and even large-scale display panels.

The technology is seen as the future of display innovation, aiming to merge high-quality visuals with low power consumption and long-lasting performance.

Despite their advantages, micro LED displays face substantial manufacturing hurdles that have slowed their mass-market adoption. The production process requires the precise transfer and alignment of millions of microscopic LEDs onto a substrate, a task that is both technically challenging and cost-intensive. Issues with yield, scalability, and quality control persist, making it difficult to achieve the economies of scale necessary for widespread commercial use. As industry leaders invest heavily in research and development to overcome these obstacles, the technology remains on the cusp of becoming a viable alternative to current display technologies.

-

Nvidia CUDA Toolkit – a development environment for creating high-performance, GPU-accelerated applications

https://developer.nvidia.com/cuda-toolkit

With it, you can develop, optimize, and deploy your applications on GPU-accelerated embedded systems, desktop workstations, enterprise data centers, cloud-based platforms, and supercomputers. The toolkit includes GPU-accelerated libraries, debugging and optimization tools, a C/C++ compiler, and a runtime library.

-

HumanDiT – Pose-Guided Diffusion Transformer for Long-form Human Motion Video Generation

https://agnjason.github.io/HumanDiT-page

By inputting a single character image and template pose video, our method can generate vocal avatar videos featuring not only pose-accurate rendering but also realistic body shapes.

-

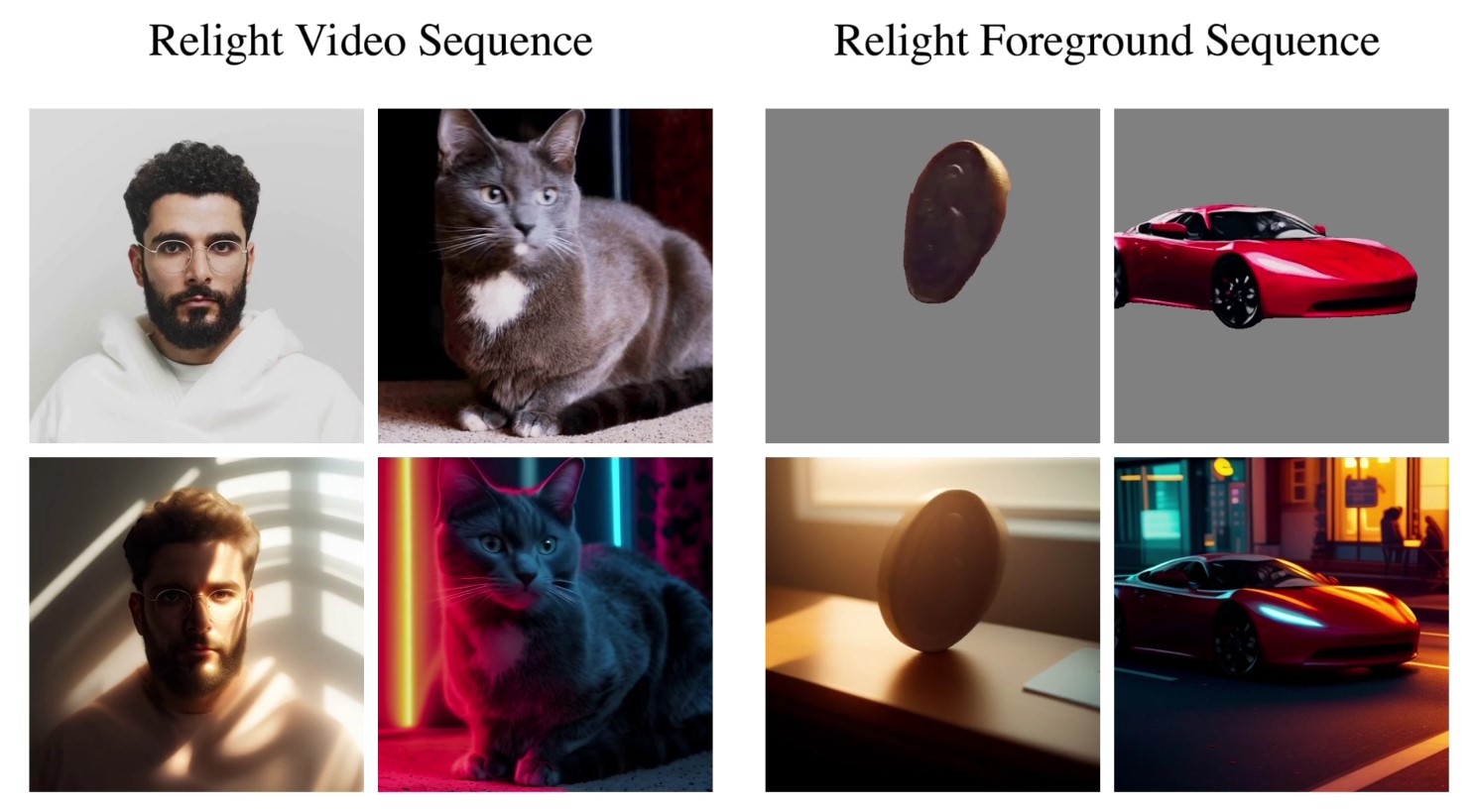

DynVFX – Augmenting Real Videos with Dynamic Content

Given an input video and a simple user-provided text instruction describing the desired content, our method synthesizes dynamic objects or complex scene effects that naturally interact with the existing scene over time. The position, appearance, and motion of the new content are seamlessly integrated into the original footage while accounting for camera motion, occlusions, and interactions with other dynamic objects in the scene, resulting in a cohesive and realistic output video.

https://dynvfx.github.io/sm/index.html

-

ByteDance OmniHuman-1

https://omnihuman-lab.github.io

The authors propose an end-to-end multimodality-conditioned human video generation framework named OmniHuman, which can generate human videos based on a single human image and motion signals (e.g., audio only, video only, or a combination of audio and video). OmniHuman introduces a multimodality motion conditioning mixed training strategy, allowing the model to benefit from scaling up data with mixed conditioning. This overcomes the issue that previous end-to-end approaches faced due to the scarcity of high-quality data. According to the authors, OmniHuman significantly outperforms existing methods, generating extremely realistic human videos based on weak signal inputs, especially audio. It supports image inputs of any aspect ratio, whether they are portraits, half-body, or full-body images, delivering more lifelike and high-quality results across various scenarios.

-

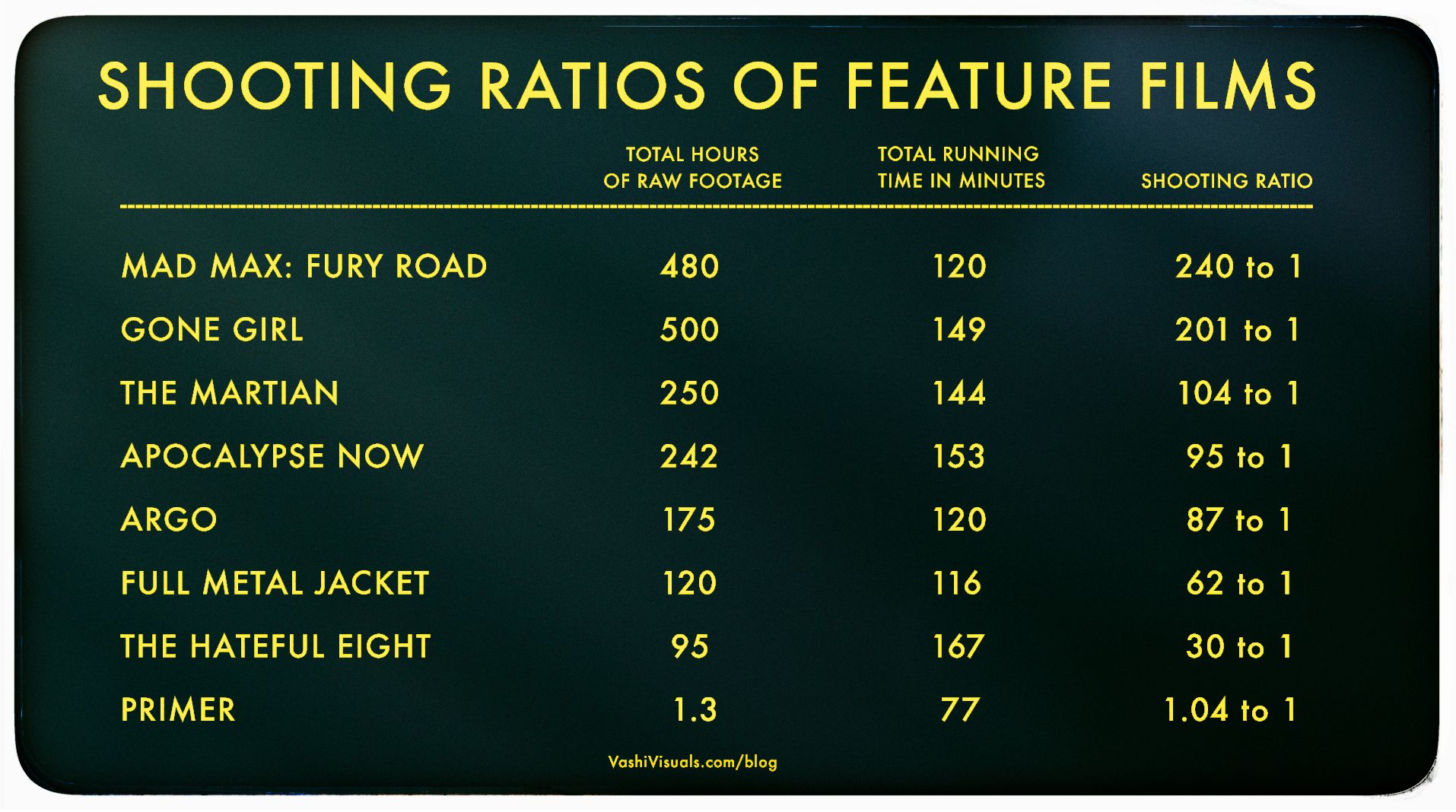

Vashi Nedomansky – Shooting ratios of feature films

In the Golden Age of Hollywood (1930-1959), a 10:1 shooting ratio was the norm—a 90-minute film meant about 15 hours of footage. Directors like Alfred Hitchcock famously kept it tight with a 3:1 ratio, giving studios little wiggle room in the edit.
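The arithmetic behind those figures is simple enough to sketch. The helper name `footage_hours` is made up for illustration; it just multiplies the finished runtime by the shooting ratio.

```python
def footage_hours(runtime_minutes, shooting_ratio):
    """Hours of raw footage shot for a film of the given runtime.

    shooting_ratio is footage shot divided by footage used (10 means 10:1).
    """
    return runtime_minutes * shooting_ratio / 60

print(footage_hours(90, 10))  # 15.0 hours at the 10:1 norm
print(footage_hours(90, 3))   # 4.5 hours at a 3:1 ratio
```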

Fast forward to today: the digital era has sent shooting ratios skyrocketing. Affordable cameras roll endlessly, capturing multiple takes, resets, and everything in between. Gone are the disciplined “Action to Cut” days of film.

https://en.wikipedia.org/wiki/Shooting_ratio

-

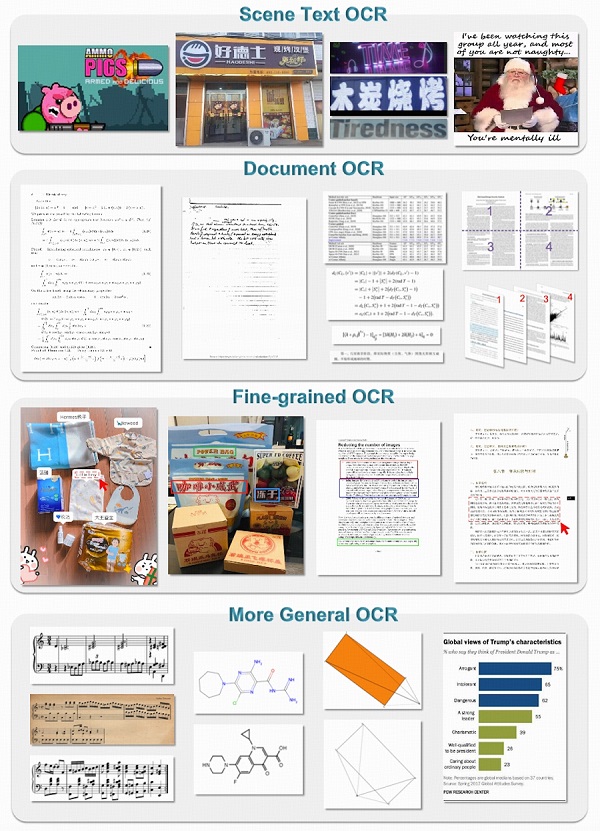

General OCR Theory – Towards OCR-2.0 via a Unified End-to-end Model – HF Transformers implementation

https://huggingface.co/stepfun-ai/GOT-OCR-2.0-hf

GOT-OCR2 works on a wide range of tasks, including plain document OCR, scene text OCR, formatted document OCR, and even OCR for tables, charts, mathematical formulas, geometric shapes, molecular formulas and sheet music.

-

QNTM – Developer Philosophy

- Avoid, at all costs, arriving at a scenario where the ground-up rewrite starts to look attractive

- Aim to be 90% done in 50% of the available time

- Automate good practice

- Think about pathological data

- There is usually a simpler way to write it

- Write code to be testable

- It is insufficient for code to be provably correct; it should be obviously, visibly, trivially correct
