Featured AI

-

StarVector – A multimodal LLM for Scalable Vector Graphics (SVG) generation from images and text

https://huggingface.co/collections/starvector/starvector-models-6783b22c7bd4b43d13cb5289

https://github.com/joanrod/star-vector

-

Reve Image 1.0 Halfmoon – A new model trained from the ground up to excel at prompt adherence, aesthetics, and typography

A little-known AI image generator called Reve Image 1.0 is trying to make a name for itself in the text-to-image space, potentially outperforming established tools like Midjourney, Flux, and Ideogram. Users receive 100 free credits to test the service after signing up, with additional credits available at $5 for 500 generations. That is cheap compared to options like Midjourney or Ideogram, which start at $8 per month and can reach $120 per month, depending on usage. It also offers 20 free generations per day.
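As a rough worked example of the pricing above, the sketch below computes the per-generation cost of the $5 pack and the number of paid generations at which it would equal the $8 entry-level monthly price quoted for the competing tools. The function names are illustrative, and the comparison ignores free credits and any generation limits on the monthly plans.

```python
def cost_per_generation(pack_price_usd: float, generations: int) -> float:
    """Price of a single generation when buying a credit pack."""
    return pack_price_usd / generations


def break_even_generations(
    monthly_price_usd: float, pack_price_usd: float, generations: int
) -> float:
    """Number of pack generations whose total cost equals one monthly fee."""
    return monthly_price_usd * generations / pack_price_usd


# Figures taken from the paragraph above: $5 for 500 generations, $8 per month.
per_image = cost_per_generation(5.0, 500)
break_even = break_even_generations(8.0, 5.0, 500)
print(f"Cost per generation: ${per_image:.2f}")
print(f"Generations that cost as much as an $8 month: {break_even:.0f}")
```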

-

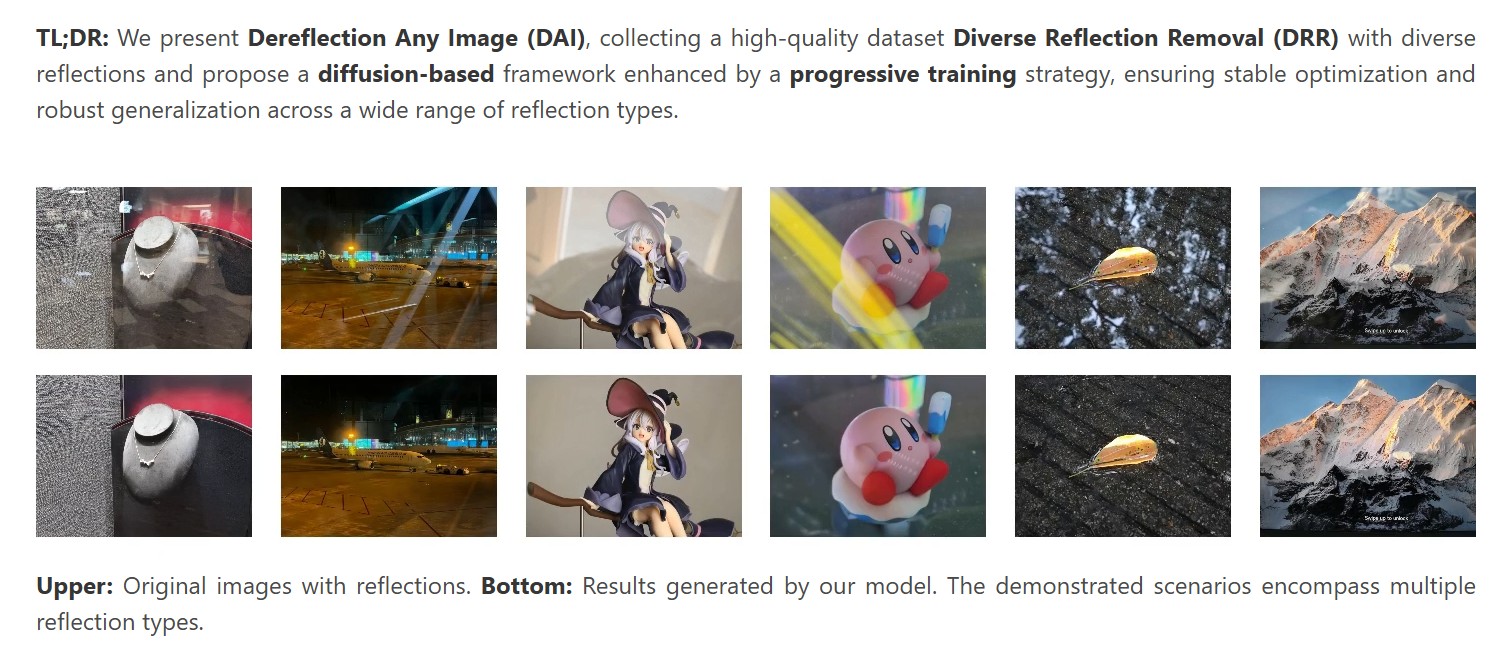

De-reflection – Remove Reflections From Any Image with Diffusion Priors and Diversified Data

https://arxiv.org/pdf/2503.17347

https://abuuu122.github.io/DAI.github.io

https://github.com/Abuuu122/Dereflection-Any-Image

https://huggingface.co/spaces/sjtu-deepvision/Dereflection-Any-Image

-

Robert Legato joins Stability AI as Chief Pipeline Architect

https://stability.ai/news/introducing-our-new-chief-pipeline-architect-rob-legato

“Joining Stability AI is an incredible opportunity, and I couldn’t be more excited to help shape the next era of filmmaking,” said Legato. “With dynamic leaders like Prem Akkaraju and James Cameron driving the vision, the potential here is limitless. What excites me most is Stability AI’s commitment to filmmakers—building a tool that is as intuitive as it is powerful, designed to elevate creativity rather than replace it. It’s an artist-first approach to AI, and I’m thrilled to be part of it.”

-

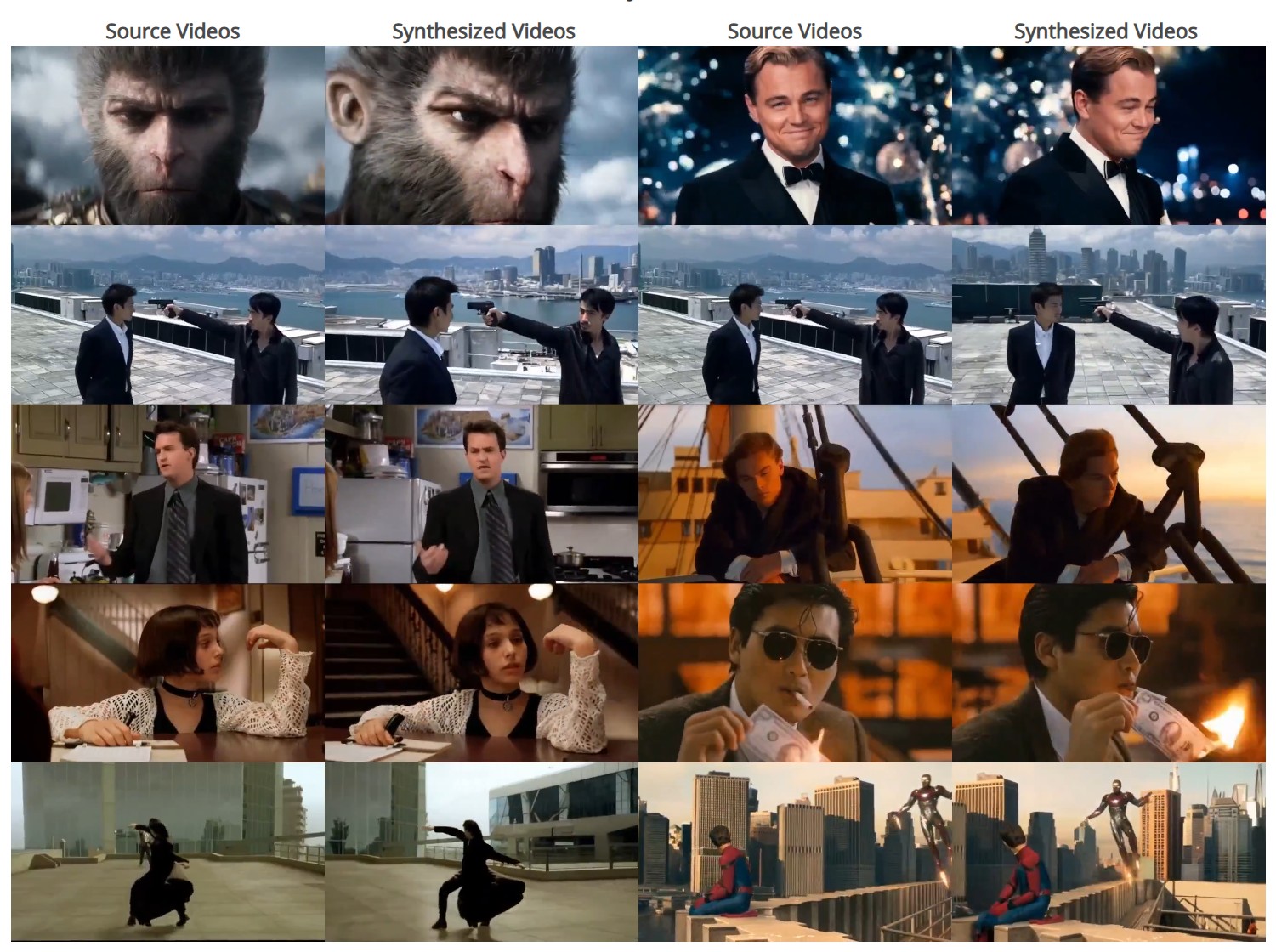

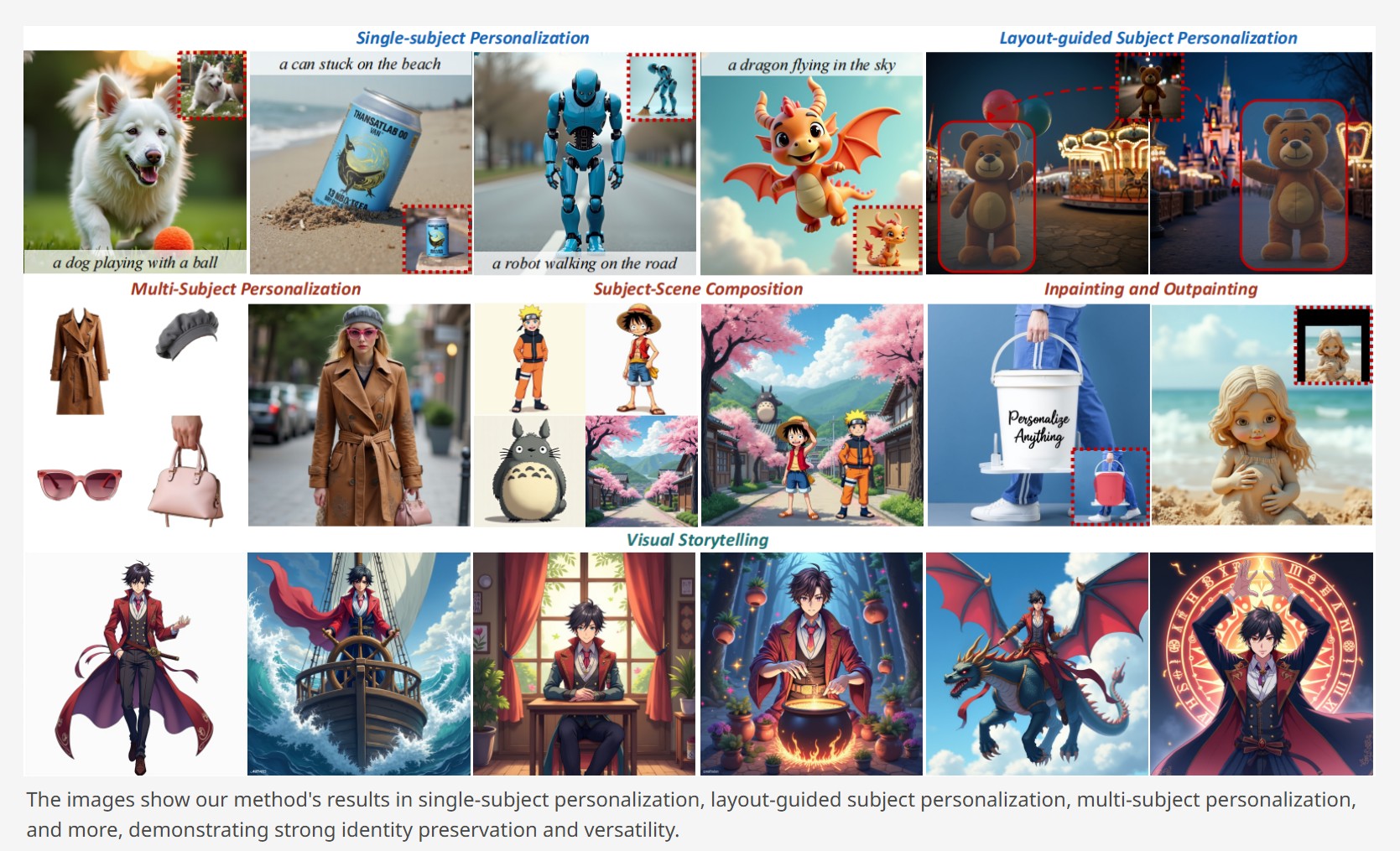

Personalize Anything – For Free with Diffusion Transformer

https://fenghora.github.io/Personalize-Anything-Page

Customize any subject with advanced DiT without additional fine-tuning.

-

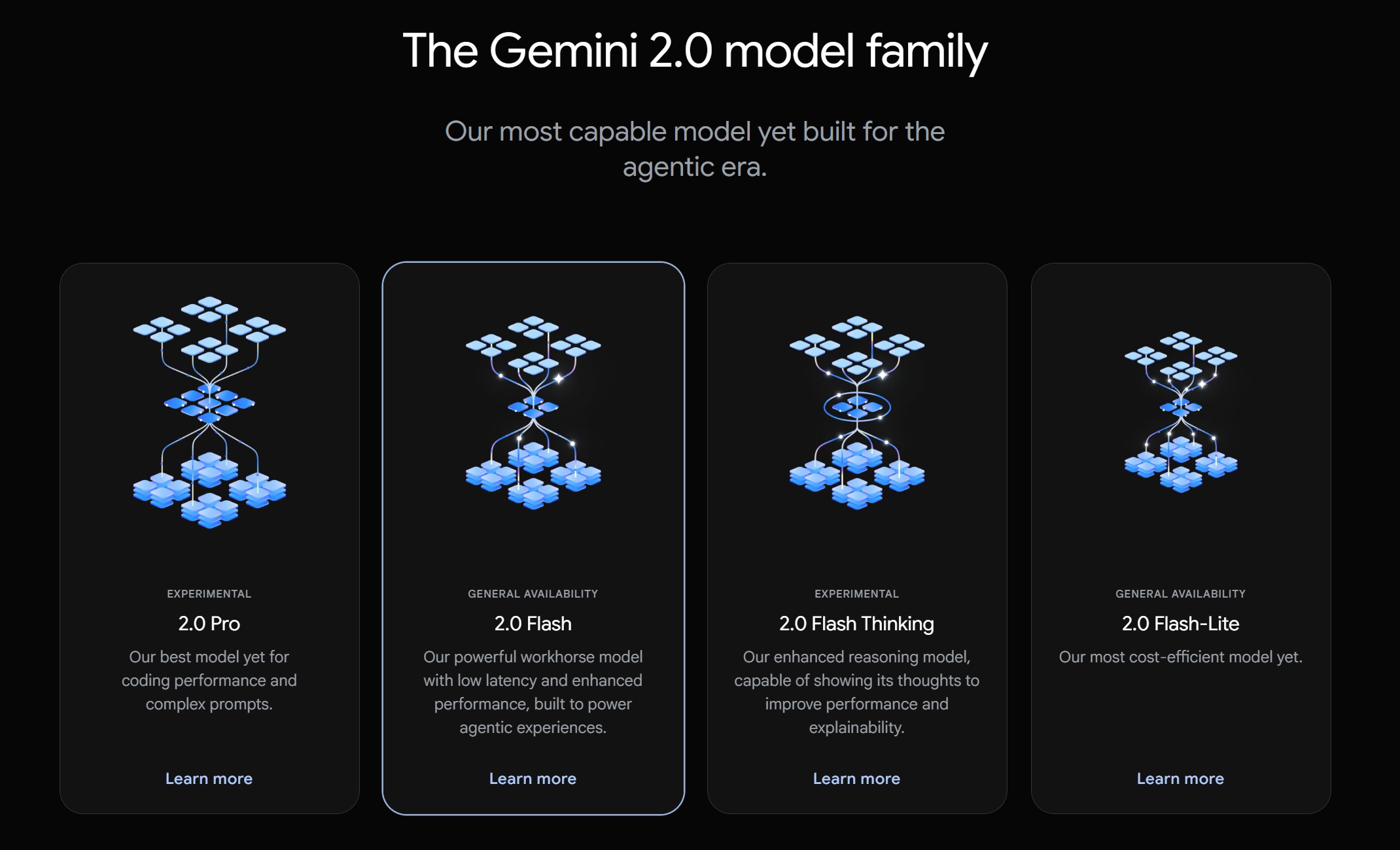

Google Gemini 2.0 Flash new AI model extremely proficient at removing watermarks from images

Gemini 2.0 Flash won’t just remove watermarks, but will also attempt to fill in any gaps created by a watermark’s deletion. Other AI-powered tools do this, too, but Gemini 2.0 Flash seems to be exceptionally skilled at it, and it is free to use.

-

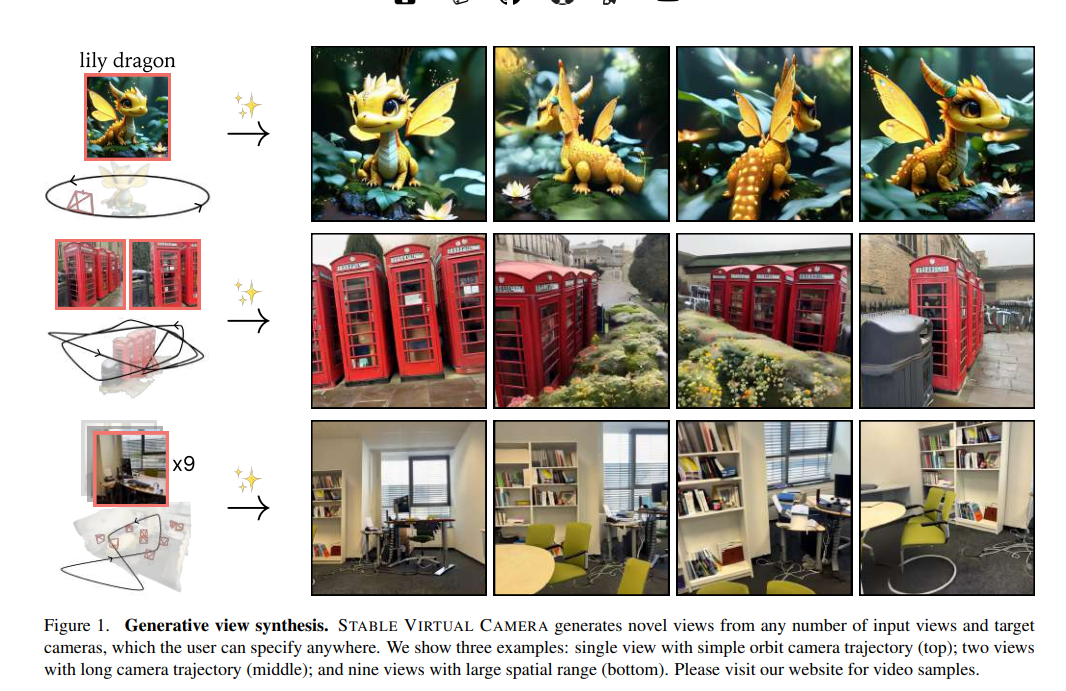

Stability.ai – Introducing Stable Virtual Camera: Multi-View Video Generation with 3D Camera Control

Capabilities

Stable Virtual Camera offers advanced capabilities for generating 3D videos, including:

- Dynamic Camera Control: Supports user-defined camera trajectories as well as multiple dynamic camera paths, including: 360°, Lemniscate (∞ shaped path), Spiral, Dolly Zoom In, Dolly Zoom Out, Zoom In, Zoom Out, Move Forward, Move Backward, Pan Up, Pan Down, Pan Left, Pan Right, and Roll.

- Flexible Inputs: Generates 3D videos from just one input image or up to 32.

- Multiple Aspect Ratios: Capable of producing videos in square (1:1), portrait (9:16), landscape (16:9), and other custom aspect ratios without additional training.

- Long Video Generation: Ensures 3D consistency in videos up to 1,000 frames, enabling seamless
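To make the lemniscate (∞ shaped) path in the list above concrete, here is a minimal sketch that samples camera positions along a lemniscate of Bernoulli. It is not Stable Virtual Camera's API: the function name, the `half_width` and `distance` parameters, and the choice to keep the camera in a plane at a fixed distance from the scene origin are all assumptions made for illustration.

```python
import math


def lemniscate_camera_path(
    num_frames: int, half_width: float = 1.0, distance: float = 3.0
) -> list[tuple[float, float, float]]:
    """Sample (x, y, z) camera positions along a figure-eight curve.

    The curve is a lemniscate of Bernoulli drawn in the plane z = distance,
    so the camera sweeps an infinity-shaped loop in front of the origin.
    """
    positions = []
    for i in range(num_frames):
        t = 2.0 * math.pi * i / num_frames  # curve parameter in [0, 2*pi)
        denom = 1.0 + math.sin(t) ** 2
        x = half_width * math.cos(t) / denom
        y = half_width * math.sin(t) * math.cos(t) / denom
        positions.append((x, y, distance))
    return positions


# Hypothetical usage: eight frames along the loop.
path = lemniscate_camera_path(num_frames=8)
for x, y, z in path:
    print(f"{x:+.3f} {y:+.3f} {z:+.3f}")
```

The path starts at the right-hand tip of the figure eight and crosses the centre a quarter and three quarters of the way through the loop, which is what gives the curve its ∞ shape.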

Model limitations

In its initial version, Stable Virtual Camera may produce lower-quality results in certain scenarios. Input images featuring humans, animals, or dynamic textures like water often lead to degraded outputs. Additionally, highly ambiguous scenes, complex camera paths that intersect objects or surfaces, and irregularly shaped objects can cause flickering artifacts, especially when target viewpoints differ significantly from the input images.

-

ComfyDock – The Easiest (Free) Way to Safely Run ComfyUI Sessions in a Boxed Container

https://www.reddit.com/r/comfyui/comments/1j2x4qv/comfydock_the_easiest_free_way_to_run_comfyui_in/

ComfyDock is a tool that allows you to easily manage your ComfyUI environments via Docker.

Common Challenges with ComfyUI

- Custom Node Installation Issues: Installing new custom nodes can inadvertently change settings across the whole installation, potentially breaking the environment.

- Workflow Compatibility: Workflows are often tested with specific custom nodes and ComfyUI versions. Running these workflows on different setups can lead to errors and frustration.

- Security Risks: Installing custom nodes directly on your host machine increases the risk of malicious code execution.

How ComfyDock Helps

- Environment Duplication: Easily duplicate your current environment before installing custom nodes. If something breaks, revert to the original environment effortlessly.

- Deployment and Sharing: Workflow developers can commit their environments to a Docker image, which can be shared with others and run on cloud GPUs to ensure compatibility.

- Enhanced Security: Containers help to isolate the environment, reducing the risk of malicious code impacting your host machine.
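The duplicate-then-experiment idea above can be sketched with the plain Docker CLI. This is not ComfyDock's own interface, only an illustration of the underlying mechanism: `docker commit` snapshots a container into an image, and `docker run` starts a fresh container from that snapshot. The container and image names are hypothetical, port 8188 is assumed to be the port ComfyUI listens on inside the container, and `--gpus all` assumes the NVIDIA container runtime is installed on the host. The helpers only build the commands; nothing is executed.

```python
import shlex


def snapshot_command(container: str, image_tag: str) -> list[str]:
    """Build a `docker commit` command that saves a container as an image."""
    return ["docker", "commit", container, image_tag]


def run_command(image_tag: str, host_port: int = 8188) -> list[str]:
    """Build a `docker run` command that starts a container from an image.

    The container-side port (8188) is an assumption about the ComfyUI setup.
    """
    return [
        "docker", "run", "--rm",
        "--gpus", "all",
        "-p", f"{host_port}:8188",
        image_tag,
    ]


# Hypothetical names: snapshot the environment before installing custom nodes.
snapshot = snapshot_command("comfyui-env", "comfyui-env:before-custom-nodes")
restore = run_command("comfyui-env:before-custom-nodes")
print(shlex.join(snapshot))
print(shlex.join(restore))
```

If a custom node breaks the working container, starting a new one from the snapshot image restores the earlier state, and the same image can be pushed to a registry for others to run.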

-

Crypto Mining Attack via ComfyUI/Ultralytics in 2024

https://github.com/ultralytics/ultralytics/issues/18037

zopieux on Dec 5, 2024: Ultralytics was attacked (or did it on purpose, waiting for a post mortem there); 8.3.41 contains nefarious code downloading and running a crypto miner hosted as a GitHub blob.
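A quick way to check a local environment against the release named in that comment is to compare the installed package version with a list of flagged versions. The sketch below uses only the Python standard library; the set contains just 8.3.41 because that is the only version the quote mentions, so treat it as a starting point and consult the linked issue for the full picture. The function names are illustrative.

```python
from importlib import metadata
from typing import Optional

# Only the release named in the quoted comment; extend after reading the issue.
FLAGGED_VERSIONS = {"8.3.41"}


def is_flagged(version: str, flagged=FLAGGED_VERSIONS) -> bool:
    """Return True if the given version string is in the flagged set."""
    return version.strip() in flagged


def installed_ultralytics_version() -> Optional[str]:
    """Return the installed ultralytics version, or None if it is absent."""
    try:
        return metadata.version("ultralytics")
    except metadata.PackageNotFoundError:
        return None


version = installed_ultralytics_version()
if version is None:
    print("ultralytics is not installed in this environment")
elif is_flagged(version):
    print(f"ultralytics {version} is a flagged release; remove it and investigate")
else:
    print(f"ultralytics {version} is not in the flagged list")
```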

-

PixVerse – Prompt, lipsync and extended video generation

https://app.pixverse.ai/onboard

PixVerse now has 3 main features:

– Text to video ➡️ How To Generate Videos With Text Prompts

– Image to video ➡️ How To Animate Your Images And Bring Them To Life

– Upscale ➡️ How to Upscale Your Video

Enhanced Capabilities

– Improved Prompt Understanding: Achieve more accurate prompt interpretation and stunning video dynamics.

– Supports Various Video Ratios: Choose from 16:9, 9:16, 3:4, 4:3, and 1:1 ratios.

– Upgraded Styles: Style functionality returns with options like Anime, Realistic, Clay, and 3D. It supports both text-to-video and image-to-video stylization.

New Features

– Lipsync: The new Lipsync feature enables users to add text or upload audio, and PixVerse will automatically sync the characters’ lip movements in the generated video based on the text or audio.

– Effect: Offers 8 creative effects, including Zombie Transformation, Wizard Hat, Monster Invasion, and other Halloween-themed effects, enabling one-click creativity.

– Extend: Extend the generated video by an additional 5-8 seconds, with control over the content of the extended segment.

-

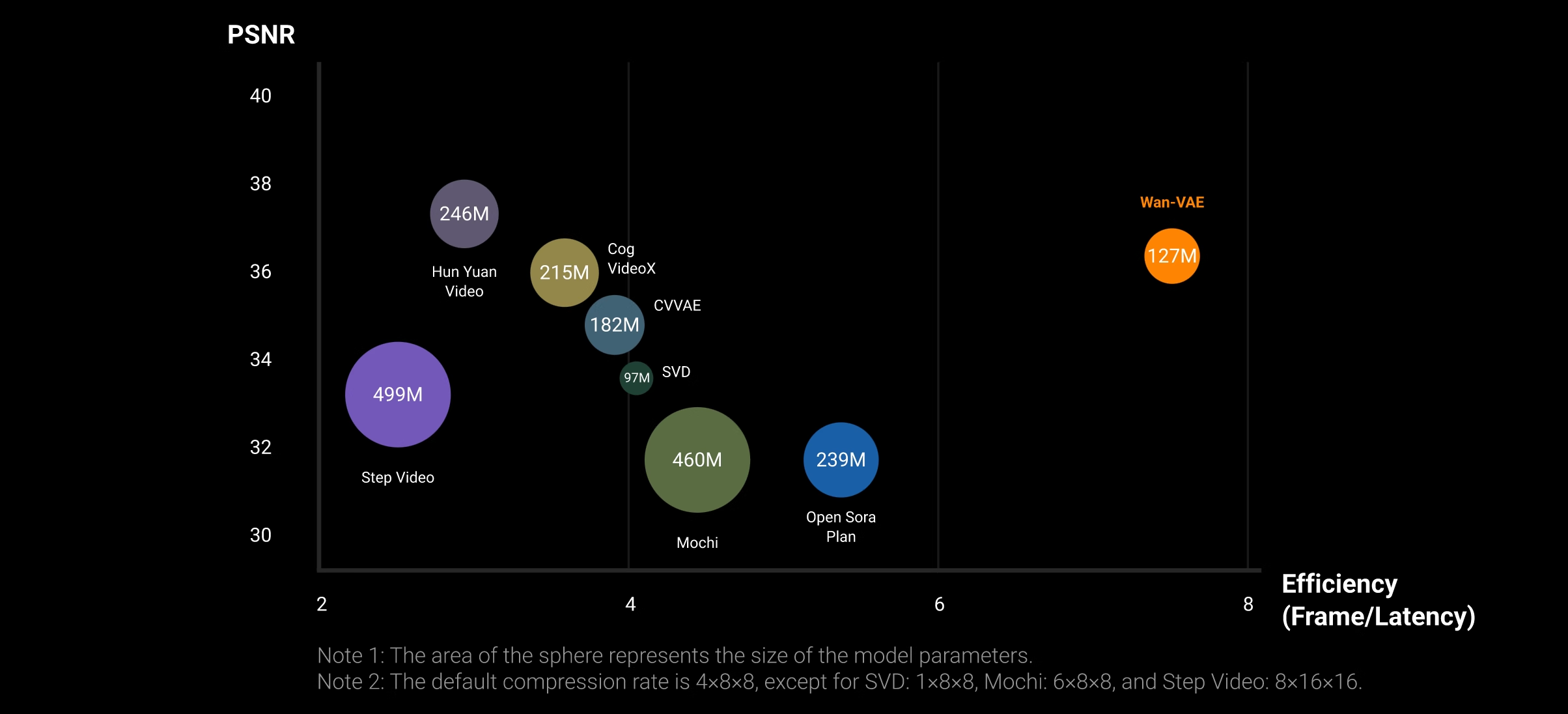

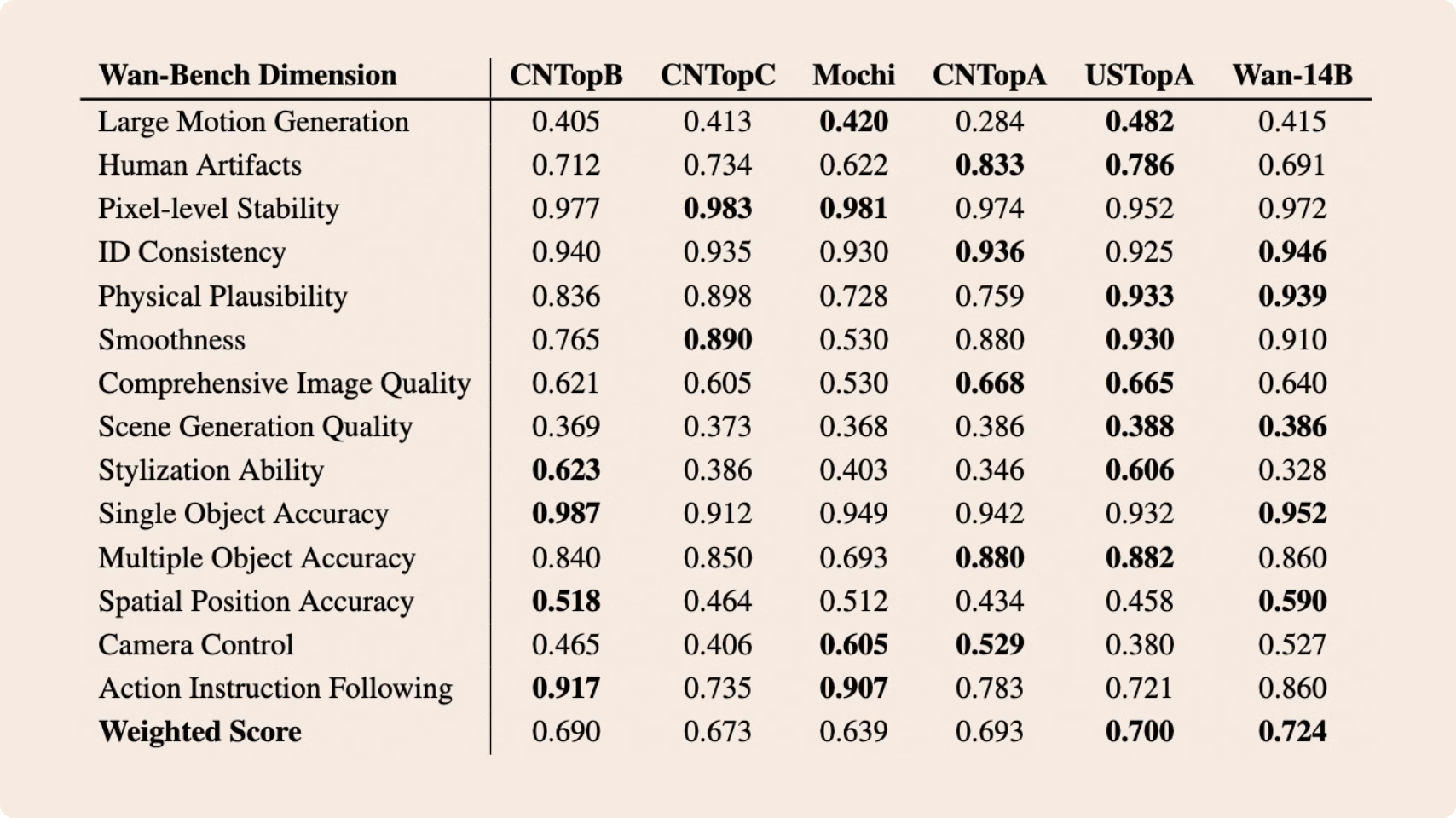

Alibaba Group Tongyi Lab WanxAI Wan2.1 – open source model

👍 SOTA Performance: Wan2.1 consistently outperforms existing open-source models and state-of-the-art commercial solutions across multiple benchmarks.

🚀 Supports Consumer-grade GPUs: The T2V-1.3B model requires only 8.19 GB VRAM, making it compatible with almost all consumer-grade GPUs. It can generate a 5-second 480P video on an RTX 4090 in about 4 minutes (without optimization techniques like quantization). Its performance is even comparable to some closed-source models.

🎉 Multiple tasks: Wan2.1 excels in Text-to-Video, Image-to-Video, Video Editing, Text-to-Image, and Video-to-Audio, advancing the field of video generation.

🔮 Visual Text Generation: Wan2.1 is the first video model capable of generating both Chinese and English text, featuring robust text generation that enhances its practical applications.

💪 Powerful Video VAE: Wan-VAE delivers exceptional efficiency and performance, encoding and decoding 1080P videos of any length while preserving temporal information, making it an ideal foundation for video and image generation.

https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/tree/main/split_files

https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/tree/main/example%20workflows_Wan2.1

https://huggingface.co/Wan-AI/Wan2.1-T2V-14B

https://huggingface.co/Kijai/WanVideo_comfy/tree/main

