COMPOSITION

-

9 Best Hacks to Make a Cinematic Video with Any Camera

Read more: 9 Best Hacks to Make a Cinematic Video with Any Camerahttps://www.flexclip.com/learn/cinematic-video.html

- Frame Your Shots to Create Depth

- Create Shallow Depth of Field

- Avoid Shaky Footage and Use Flexible Camera Movements

- Properly Use Slow Motion

- Use Cinematic Lighting Techniques

- Apply Color Grading

- Use Cinematic Music and SFX

- Add Cinematic Fonts and Text Effects

- Create the Cinematic Bar at the Top and the Bottom

DESIGN

-

Kristina Kashtanova – “This is how GPT-4 sees and hears itself”

Read more: Kristina Kashtanova – “This is how GPT-4 sees and hears itself”“I used GPT-4 to describe itself. Then I used its description to generate an image, a video based on this image and a soundtrack.

Tools I used: GPT-4, Midjourney, Kaiber AI, Mubert, RunwayML

This is the description I used that GPT-4 had of itself as a prompt to text-to-image, image-to-video, and text-to-music. I put the video and sound together in RunwayML.

GPT-4 described itself as: “Imagine a sleek, metallic sphere with a smooth surface, representing the vast knowledge contained within the model. The sphere emits a soft, pulsating glow that shifts between various colors, symbolizing the dynamic nature of the AI as it processes information and generates responses. The sphere appears to float in a digital environment, surrounded by streams of data and code, reflecting the complex algorithms and computing power behind the AI”

-

COLOR

-

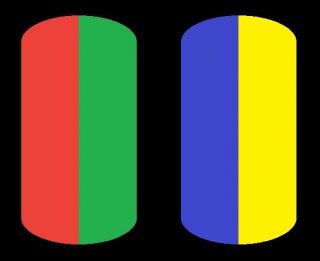

The Forbidden colors – Red-Green & Blue-Yellow: The Stunning Colors You Can’t See

Read more: The Forbidden colors – Red-Green & Blue-Yellow: The Stunning Colors You Can’t Seewww.livescience.com/17948-red-green-blue-yellow-stunning-colors.html

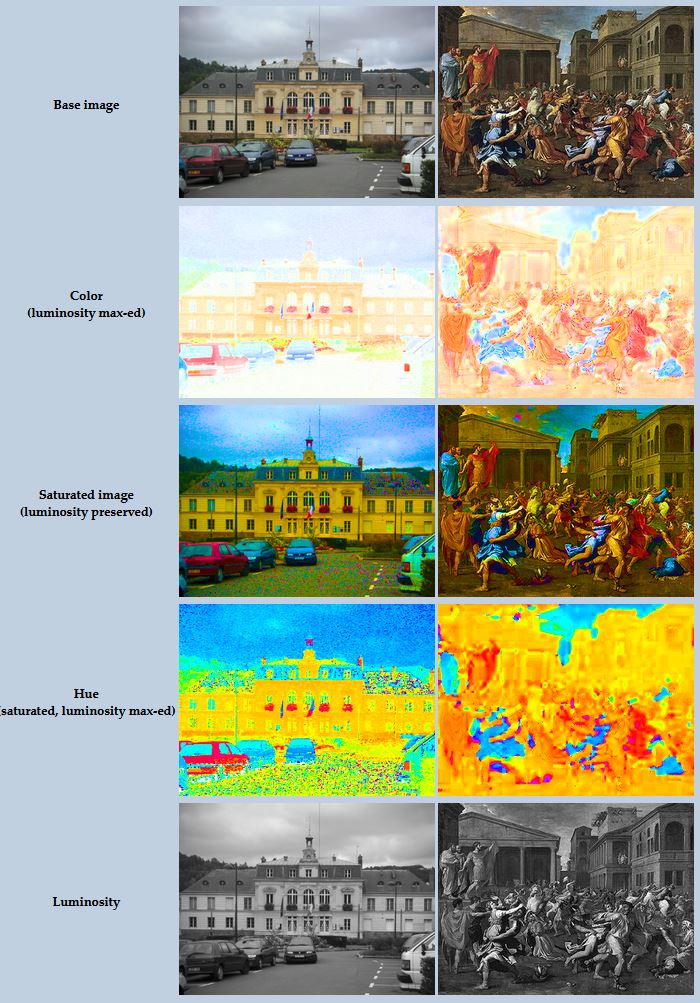

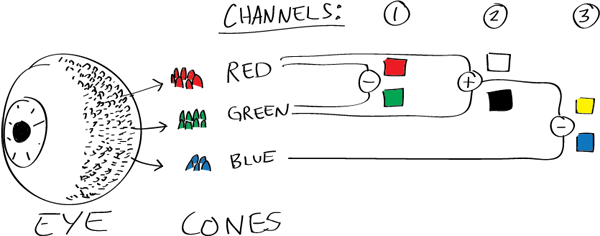

While the human eye has red, green, and blue-sensing cones, those cones are cross-wired in the retina to produce a luminance channel plus a red-green and a blue-yellow channel, and it’s data in that color space (known technically as “LAB”) that goes to the brain. That’s why we can’t perceive a reddish-green or a yellowish-blue, whereas such colors can be represented in the RGB color space used by digital cameras.

https://en.rockcontent.com/blog/the-use-of-yellow-in-data-design

The back of the retina is covered in light-sensitive neurons known as cone cells and rod cells. There are three types of cone cells, each sensitive to different ranges of light. These ranges overlap, but for convenience the cones are referred to as blue (short-wavelength), green (medium-wavelength), and red (long-wavelength). The rod cells are primarily used in low-light situations, so we’ll ignore those for now.

When light enters the eye and hits the cone cells, the cones get excited and send signals to the brain through the visual cortex. Different wavelengths of light excite different combinations of cones to varying levels, which generates our perception of color. You can see that the red cones are most sensitive to light, and the blue cones are least sensitive. The sensitivity of green and red cones overlaps for most of the visible spectrum.

Here’s how your brain takes the signals of light intensity from the cones and turns it into color information. To see red or green, your brain finds the difference between the levels of excitement in your red and green cones. This is the red-green channel.

To get “brightness,” your brain combines the excitement of your red and green cones. This creates the luminance, or black-white, channel. To see yellow or blue, your brain then finds the difference between this luminance signal and the excitement of your blue cones. This is the yellow-blue channel.

From the calculations made in the brain along those three channels, we get four basic colors: blue, green, yellow, and red. Seeing blue is what you experience when low-wavelength light excites the blue cones more than the green and red.

Seeing green happens when light excites the green cones more than the red cones. Seeing red happens when only the red cones are excited by high-wavelength light.

Here’s where it gets interesting. Seeing yellow is what happens when BOTH the green AND red cones are highly excited near their peak sensitivity. This is the biggest collective excitement that your cones ever have, aside from seeing pure white.

Notice that yellow occurs at peak intensity in the graph to the right. Further, the lens and cornea of the eye happen to block shorter wavelengths, reducing sensitivity to blue and violet light.

-

StudioBinder.com – CRI color rendering index

Read more: StudioBinder.com – CRI color rendering indexwww.studiobinder.com/blog/what-is-color-rendering-index

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun. ”

www.pixelsham.com/2021/04/28/types-of-film-lights-and-their-efficiency

-

Mysterious animation wins best illusion of 2011 – Motion silencing illusion

Read more: Mysterious animation wins best illusion of 2011 – Motion silencing illusionThe 2011 Best Illusion of the Year uses motion to render color changes invisible, and so reveals a quirk in our visual systems that is new to scientists.

https://en.wikipedia.org/wiki/Motion_silencing_illusion

“It is a really beautiful effect, revealing something about how our visual system works that we didn’t know before,” said Daniel Simons, a professor at the University of Illinois, Champaign-Urbana. Simons studies visual cognition, and did not work on this illusion. Before its creation, scientists didn’t know that motion had this effect on perception, Simons said.

A viewer stares at a speck at the center of a ring of colored dots, which continuously change color. When the ring begins to rotate around the speck, the color changes appear to stop. But this is an illusion. For some reason, the motion causes our visual system to ignore the color changes. (You can, however, see the color changes if you follow the rotating circles with your eyes.)

-

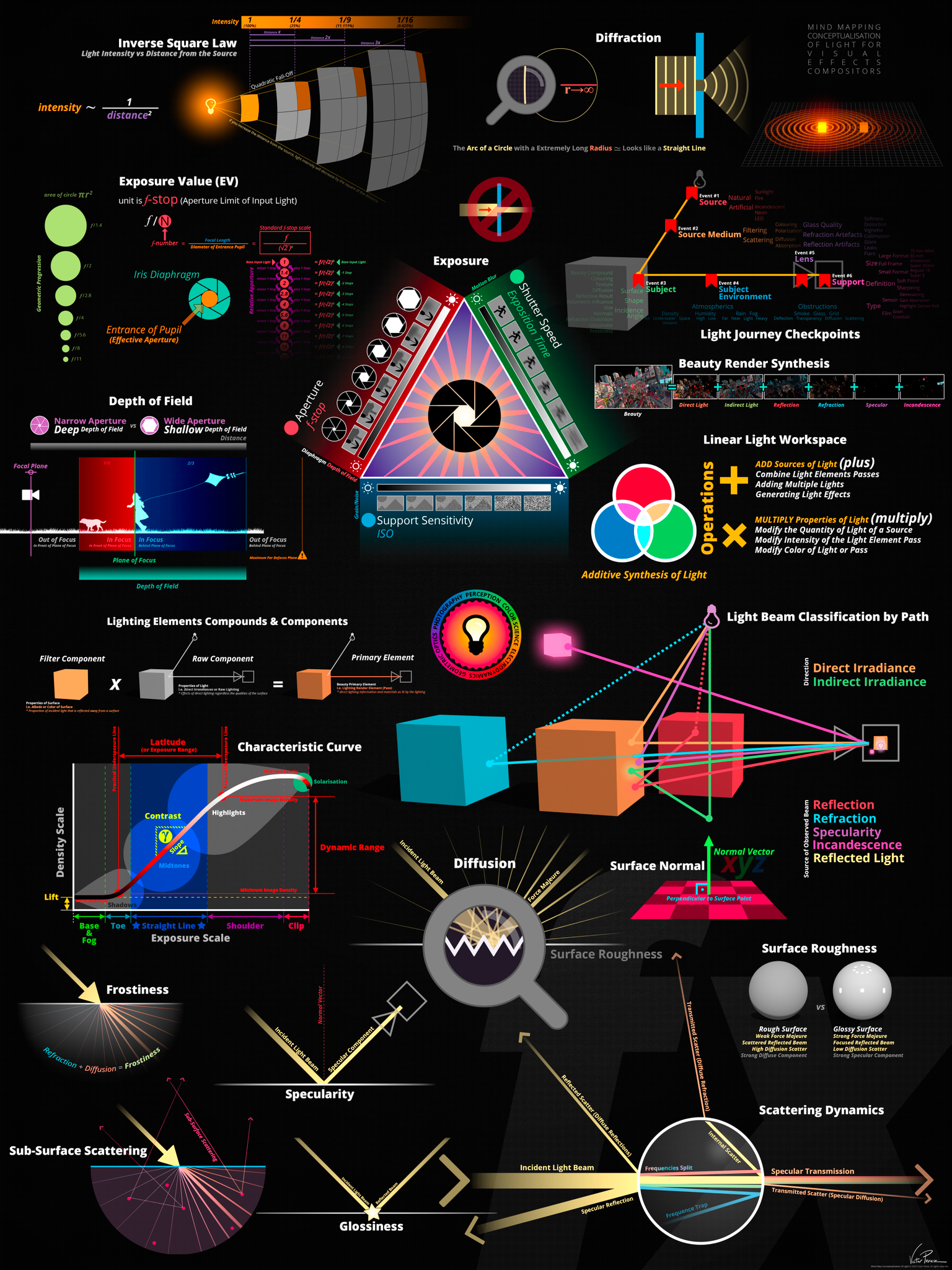

VES Cinematic Color – Motion-Picture Color Management

Read more: VES Cinematic Color – Motion-Picture Color ManagementThis paper presents an introduction to the color pipelines behind modern feature-film visual-effects and animation.

Authored by Jeremy Selan, and reviewed by the members of the VES Technology Committee including Rob Bredow, Dan Candela, Nick Cannon, Paul Debevec, Ray Feeney, Andy Hendrickson, Gautham Krishnamurti, Sam Richards, Jordan Soles, and Sebastian Sylwan.

-

Practical Aspects of Spectral Data and LEDs in Digital Content Production and Virtual Production – SIGGRAPH 2022

Read more: Practical Aspects of Spectral Data and LEDs in Digital Content Production and Virtual Production – SIGGRAPH 2022Comparison to the commercial side

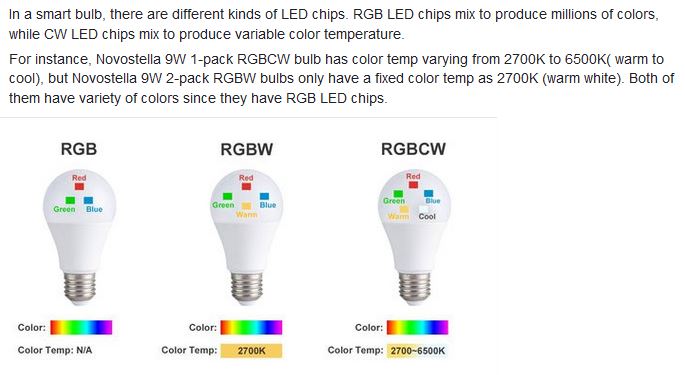

https://www.ecolorled.com/blog/detail/what-is-rgb-rgbw-rgbic-strip-lights

RGBW (RGB + White) LED strip uses a 4-in-1 LED chip made up of red, green, blue, and white.

RGBWW (RGB + White + Warm White) LED strip uses either a 5-in-1 LED chip with red, green, blue, white, and warm white for color mixing. The only difference between RGBW and RGBWW is the intensity of the white color. The term RGBCCT consists of RGB and CCT. CCT (Correlated Color Temperature) means that the color temperature of the led strip light can be adjusted to change between warm white and white. Thus, RGBWW strip light is another name of RGBCCT strip.

RGBCW is the acronym for Red, Green, Blue, Cold, and Warm. These 5-in-1 chips are used in supper bright smart LED lighting products

-

Tim Kang – calibrated white light values in sRGB color space

Read more: Tim Kang – calibrated white light values in sRGB color space8bit sRGB encoded

2000K 255 139 22

2700K 255 172 89

3000K 255 184 109

3200K 255 190 122

4000K 255 211 165

4300K 255 219 178

D50 255 235 205

D55 255 243 224

D5600 255 244 227

D6000 255 249 240

D65 255 255 255

D10000 202 221 255

D20000 166 196 2558bit Rec709 Gamma 2.4

2000K 255 145 34

2700K 255 177 97

3000K 255 187 117

3200K 255 193 129

4000K 255 214 170

4300K 255 221 182

D50 255 236 208

D55 255 243 226

D5600 255 245 229

D6000 255 250 241

D65 255 255 255

D10000 204 222 255

D20000 170 199 2558bit Display P3 encoded

2000K 255 154 63

2700K 255 185 109

3000K 255 195 127

3200K 255 201 138

4000K 255 219 176

4300K 255 225 187

D50 255 239 212

D55 255 245 228

D5600 255 246 231

D6000 255 251 242

D65 255 255 255

D10000 208 223 255

D20000 175 199 25510bit Rec2020 PQ (100 nits)

2000K 520 435 273

2700K 520 466 358

3000K 520 475 384

3200K 520 480 399

4000K 520 495 446

4300K 520 500 458

D50 520 510 482

D55 520 514 497

D5600 520 514 500

D6000 520 517 509

D65 520 520 520

D10000 479 489 520

D20000 448 464 520 -

If a blind person gained sight, could they recognize objects previously touched?

Read more: If a blind person gained sight, could they recognize objects previously touched?Blind people who regain their sight may find themselves in a world they don’t immediately comprehend. “It would be more like a sighted person trying to rely on tactile information,” Moore says.

Learning to see is a developmental process, just like learning language, Prof Cathleen Moore continues. “As far as vision goes, a three-and-a-half year old child is already a well-calibrated system.”

-

Black Body color aka the Planckian Locus curve for white point eye perception

Read more: Black Body color aka the Planckian Locus curve for white point eye perceptionhttp://en.wikipedia.org/wiki/Black-body_radiation

Black-body radiation is the type of electromagnetic radiation within or surrounding a body in thermodynamic equilibrium with its environment, or emitted by a black body (an opaque and non-reflective body) held at constant, uniform temperature. The radiation has a specific spectrum and intensity that depends only on the temperature of the body.

A black-body at room temperature appears black, as most of the energy it radiates is infra-red and cannot be perceived by the human eye. At higher temperatures, black bodies glow with increasing intensity and colors that range from dull red to blindingly brilliant blue-white as the temperature increases.

The Black Body Ultraviolet Catastrophe Experiment

In photography, color temperature describes the spectrum of light which is radiated from a “blackbody” with that surface temperature. A blackbody is an object which absorbs all incident light — neither reflecting it nor allowing it to pass through.

The Sun closely approximates a black-body radiator. Another rough analogue of blackbody radiation in our day to day experience might be in heating a metal or stone: these are said to become “red hot” when they attain one temperature, and then “white hot” for even higher temperatures. Similarly, black bodies at different temperatures also have varying color temperatures of “white light.”

Despite its name, light which may appear white does not necessarily contain an even distribution of colors across the visible spectrum.

Although planets and stars are neither in thermal equilibrium with their surroundings nor perfect black bodies, black-body radiation is used as a first approximation for the energy they emit. Black holes are near-perfect black bodies, and it is believed that they emit black-body radiation (called Hawking radiation), with a temperature that depends on the mass of the hole.

LIGHTING

-

Sun cone angle (angular diameter) as perceived by earth viewers

Read more: Sun cone angle (angular diameter) as perceived by earth viewersAlso see:

https://www.pixelsham.com/2020/08/01/solid-angle-measures/

The cone angle of the sun refers to the angular diameter of the sun as observed from Earth, which is related to the apparent size of the sun in the sky.

The angular diameter of the sun, or the cone angle of the sunlight as perceived from Earth, is approximately 0.53 degrees on average. This value can vary slightly due to the elliptical nature of Earth’s orbit around the sun, but it generally stays within a narrow range.

Here’s a more precise breakdown:

-

- Average Angular Diameter: About 0.53 degrees (31 arcminutes)

- Minimum Angular Diameter: Approximately 0.52 degrees (when Earth is at aphelion, the farthest point from the sun)

- Maximum Angular Diameter: Approximately 0.54 degrees (when Earth is at perihelion, the closest point to the sun)

This angular diameter remains relatively constant throughout the day because the sun’s distance from Earth does not change significantly over a single day.

To summarize, the cone angle of the sun’s light, or its angular diameter, is typically around 0.53 degrees, regardless of the time of day.

https://en.wikipedia.org/wiki/Angular_diameter

-

-

Composition – These are the basic lighting techniques you need to know for photography and film

Read more: Composition – These are the basic lighting techniques you need to know for photography and filmhttp://www.diyphotography.net/basic-lighting-techniques-need-know-photography-film/

Amongst the basic techniques, there’s…

1- Side lighting – Literally how it sounds, lighting a subject from the side when they’re faced toward you

2- Rembrandt lighting – Here the light is at around 45 degrees over from the front of the subject, raised and pointing down at 45 degrees

3- Back lighting – Again, how it sounds, lighting a subject from behind. This can help to add drama with silouettes

4- Rim lighting – This produces a light glowing outline around your subject

5- Key light – The main light source, and it’s not necessarily always the brightest light source

6- Fill light – This is used to fill in the shadows and provide detail that would otherwise be blackness

7- Cross lighting – Using two lights placed opposite from each other to light two subjects

-

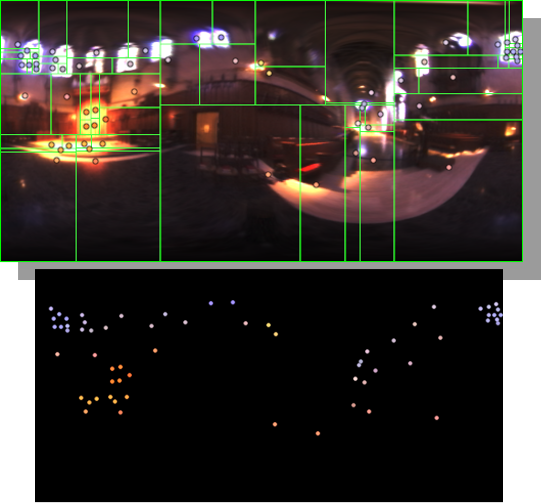

HDRI Median Cut plugin

Read more: HDRI Median Cut pluginwww.hdrlabs.com/picturenaut/plugins.html

Note. The Median Cut algorithm is typically used for color quantization, which involves reducing the number of colors in an image while preserving its visual quality. It doesn’t directly provide a way to identify the brightest areas in an image. However, if you’re interested in identifying the brightest areas, you might want to look into other methods like thresholding, histogram analysis, or edge detection, through openCV for example.

Here is an openCV example:

# bottom left coordinates = 0,0 import numpy as np import cv2 # Load the HDR or EXR image image = cv2.imread('your_image_path.exr', cv2.IMREAD_UNCHANGED) # Load as-is without modification # Calculate the luminance from the HDR channels (assuming RGB format) luminance = np.dot(image[..., :3], [0.299, 0.587, 0.114]) # Set a threshold value based on estimated EV threshold_value = 2.4 # Estimated threshold value based on 4.8 EV # Apply the threshold to identify bright areas # Theluminancearray contains the calculated luminance values for each pixel in the image. # Thethreshold_valueis a user-defined value that represents a cutoff point, separating "bright" and "dark" areas in terms of perceived luminance.thresholded = (luminance > threshold_value) * 255 # Convert the thresholded image to uint8 for contour detection thresholded = thresholded.astype(np.uint8) # Find contours of the bright areas contours, _ = cv2.findContours(thresholded, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE) # Create a list to store the bounding boxes of bright areas bright_areas = [] # Iterate through contours and extract bounding boxes for contour in contours: x, y, w, h = cv2.boundingRect(contour) # Adjust y-coordinate based on bottom-left origin y_bottom_left_origin = image.shape[0] - (y + h) bright_areas.append((x, y_bottom_left_origin, x + w, y_bottom_left_origin + h)) # Store as (x1, y1, x2, y2) # Print the identified bright areas print("Bright Areas (x1, y1, x2, y2):") for area in bright_areas: print(area)More details

Luminance and Exposure in an EXR Image:

- An EXR (Extended Dynamic Range) image format is often used to store high dynamic range (HDR) images that contain a wide range of luminance values, capturing both dark and bright areas.

- Luminance refers to the perceived brightness of a pixel in an image. In an RGB image, luminance is often calculated using a weighted sum of the red, green, and blue channels, where different weights are assigned to each channel to account for human perception.

- In an EXR image, the pixel values can represent radiometrically accurate scene values, including actual radiance or irradiance levels. These values are directly related to the amount of light emitted or reflected by objects in the scene.

The luminance line is calculating the luminance of each pixel in the image using a weighted sum of the red, green, and blue channels. The three float values [0.299, 0.587, 0.114] are the weights used to perform this calculation.

These weights are based on the concept of luminosity, which aims to approximate the perceived brightness of a color by taking into account the human eye’s sensitivity to different colors. The values are often derived from the NTSC (National Television System Committee) standard, which is used in various color image processing operations.

Here’s the breakdown of the float values:

- 0.299: Weight for the red channel.

- 0.587: Weight for the green channel.

- 0.114: Weight for the blue channel.

The weighted sum of these channels helps create a grayscale image where the pixel values represent the perceived brightness. This technique is often used when converting a color image to grayscale or when calculating luminance for certain operations, as it takes into account the human eye’s sensitivity to different colors.

For the threshold, remember that the exact relationship between EV values and pixel values can depend on the tone-mapping or normalization applied to the HDR image, as well as the dynamic range of the image itself.

To establish a relationship between exposure and the threshold value, you can consider the relationship between linear and logarithmic scales:

- Linear and Logarithmic Scales:

- Exposure values in an EXR image are often represented in logarithmic scales, such as EV (exposure value). Each increment in EV represents a doubling or halving of the amount of light captured.

- Threshold values for luminance thresholding are usually linear, representing an actual luminance level.

- Conversion Between Scales:

- To establish a mathematical relationship, you need to convert between the logarithmic exposure scale and the linear threshold scale.

- One common method is to use a power function. For instance, you can use a power function to convert EV to a linear intensity value.

threshold_value = base_value * (2 ** EV)Here,

EVis the exposure value,base_valueis a scaling factor that determines the relationship between EV and threshold_value, and2 ** EVis used to convert the logarithmic EV to a linear intensity value. - Choosing the Base Value:

- The

base_valuefactor should be determined based on the dynamic range of your EXR image and the specific luminance values you are dealing with. - You may need to experiment with different values of

base_valueto achieve the desired separation of bright areas from the rest of the image.

- The

Let’s say you have an EXR image with a dynamic range of 12 EV, which is a common range for many high dynamic range images. In this case, you want to set a threshold value that corresponds to a certain number of EV above the middle gray level (which is often considered to be around 0.18).

Here’s an example of how you might determine a

base_valueto achieve this:# Define the dynamic range of the image in EV dynamic_range = 12 # Choose the desired number of EV above middle gray for thresholding desired_ev_above_middle_gray = 2 # Calculate the threshold value based on the desired EV above middle gray threshold_value = 0.18 * (2 ** (desired_ev_above_middle_gray / dynamic_range)) print("Threshold Value:", threshold_value)

-

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

Glossary of Lighting Terms – cheat sheet

-

What the Boeing 737 MAX’s crashes can teach us about production business – the effects of commoditisation

-

Sensitivity of human eye

-

Types of AI Explained in a few Minutes – AI Glossary

-

Guide to Prompt Engineering

-

Emmanuel Tsekleves – Writing Research Papers

-

Rec-2020 – TVs new color gamut standard used by Dolby Vision?

-

AI Search – Find The Best AI Tools & Apps

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.

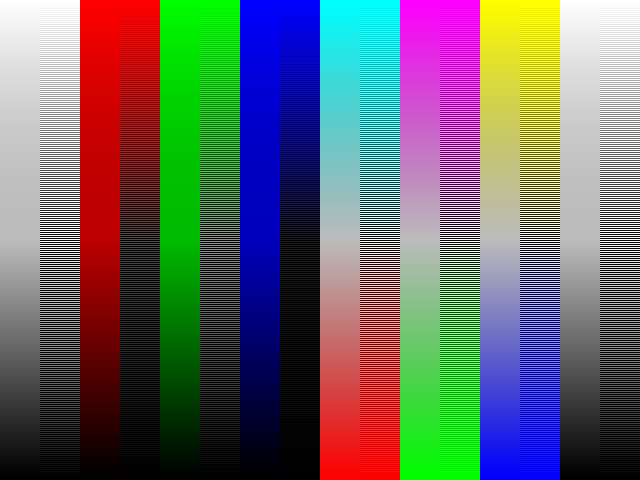

![sRGB gamma correction test [gamma correction test]](http://www.madore.org/~david/misc/color/gammatest.png)