COMPOSITION

-

StudioBinder – Roger Deakins on How to Choose a Camera Lens — Cinematography Composition Techniques

Read more: StudioBinder – Roger Deakins on How to Choose a Camera Lens — Cinematography Composition Techniqueshttps://www.studiobinder.com/blog/camera-lens-buying-guide/

https://www.studiobinder.com/blog/e-books/camera-lenses-explained-volume-1-ebook

-

SlowMoVideo – How to make a slow motion shot with the open source program

Read more: SlowMoVideo – How to make a slow motion shot with the open source programhttp://slowmovideo.granjow.net/

slowmoVideo is an OpenSource program that creates slow-motion videos from your footage.

Slow motion cinematography is the result of playing back frames for a longer duration than they were exposed. For example, if you expose 240 frames of film in one second, then play them back at 24 fps, the resulting movie is 10 times longer (slower) than the original filmed event….

Film cameras are relatively simple mechanical devices that allow you to crank up the speed to whatever rate the shutter and pull-down mechanism allow. Some film cameras can operate at 2,500 fps or higher (although film shot in these cameras often needs some readjustment in postproduction). Video, on the other hand, is always captured, recorded, and played back at a fixed rate, with a current limit around 60fps. This makes extreme slow motion effects harder to achieve (and less elegant) on video, because slowing down the video results in each frame held still on the screen for a long time, whereas with high-frame-rate film there are plenty of frames to fill the longer durations of time. On video, the slow motion effect is more like a slide show than smooth, continuous motion.

One obvious solution is to shoot film at high speed, then transfer it to video (a case where film still has a clear advantage, sorry George). Another possibility is to cross dissolve or blur from one frame to the next. This adds a smooth transition from one still frame to the next. The blur reduces the sharpness of the image, and compared to slowing down images shot at a high frame rate, this is somewhat of a cheat. However, there isn’t much you can do about it until video can be recorded at much higher rates. Of course, many film cameras can’t shoot at high frame rates either, so the whole super-slow-motion endeavor is somewhat specialized no matter what medium you are using. (There are some high speed digital cameras available now that allow you to capture lots of digital frames directly to your computer, so technology is starting to catch up with film. However, this feature isn’t going to appear in consumer camcorders any time soon.)

DESIGN

COLOR

-

Photography basics: Lumens vs Candelas (candle) vs Lux vs FootCandle vs Watts vs Irradiance vs Illuminance

Read more: Photography basics: Lumens vs Candelas (candle) vs Lux vs FootCandle vs Watts vs Irradiance vs Illuminancehttps://www.translatorscafe.com/unit-converter/en-US/illumination/1-11/

The power output of a light source is measured using the unit of watts W. This is a direct measure to calculate how much power the light is going to drain from your socket and it is not relatable to the light brightness itself.

The amount of energy emitted from it per second. That energy comes out in a form of photons which we can crudely represent with rays of light coming out of the source. The higher the power the more rays emitted from the source in a unit of time.

Not all energy emitted is visible to the human eye, so we often rely on photometric measurements, which takes in account the sensitivity of human eye to different wavelenghts

Details in the post

(more…) -

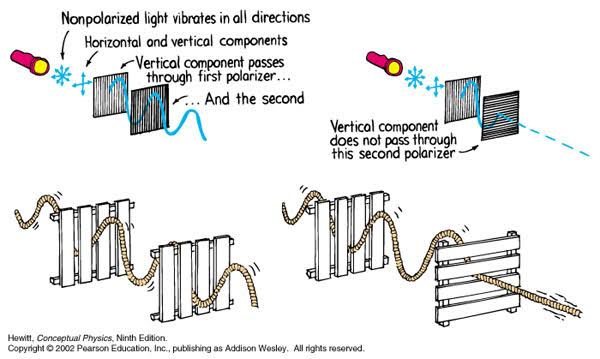

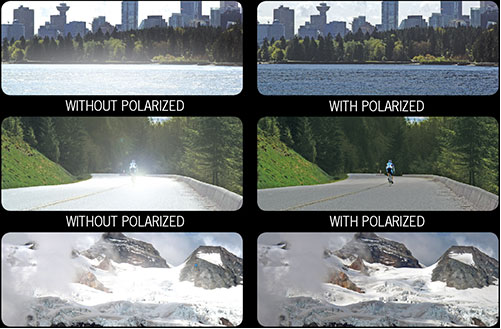

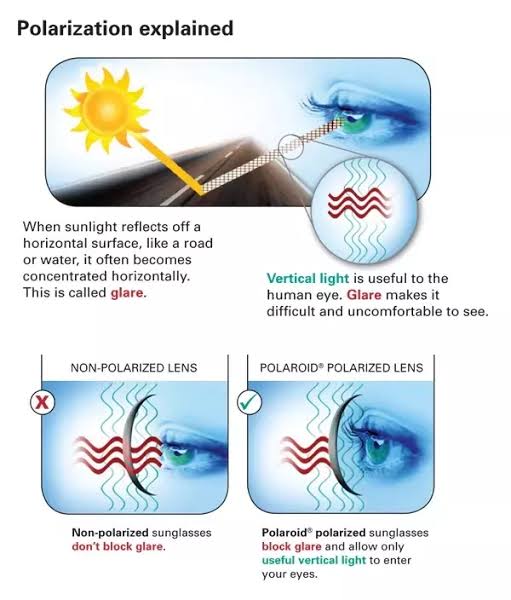

Polarised vs unpolarized filtering

Read more: Polarised vs unpolarized filteringA light wave that is vibrating in more than one plane is referred to as unpolarized light. …

Polarized light waves are light waves in which the vibrations occur in a single plane. The process of transforming unpolarized light into polarized light is known as polarization.

en.wikipedia.org/wiki/Polarizing_filter_(photography)

The most common use of polarized technology is to reduce lighting complexity on the subject.

Details such as glare and hard edges are not removed, but greatly reduced.This method is usually used in VFX to capture raw images with the least amount of specular diffusion or pollution, thus allowing artists to infer detail back through typical shading and rendering techniques and on demand.

Light reflected from a non-metallic surface becomes polarized; this effect is maximum at Brewster’s angle, about 56° from the vertical for common glass.

A polarizer rotated to pass only light polarized in the direction perpendicular to the reflected light will absorb much of it. This absorption allows glare reflected from, for example, a body of water or a road to be reduced. Reflections from shiny surfaces (e.g. vegetation, sweaty skin, water surfaces, glass) are also reduced. This allows the natural color and detail of what is beneath to come through. Reflections from a window into a dark interior can be much reduced, allowing it to be seen through. (The same effects are available for vision by using polarizing sunglasses.)

www.physicsclassroom.com/class/light/u12l1e.cfm

Some of the light coming from the sky is polarized (bees use this phenomenon for navigation). The electrons in the air molecules cause a scattering of sunlight in all directions. This explains why the sky is not dark during the day. But when looked at from the sides, the light emitted from a specific electron is totally polarized.[3] Hence, a picture taken in a direction at 90 degrees from the sun can take advantage of this polarization.

Use of a polarizing filter, in the correct direction, will filter out the polarized component of skylight, darkening the sky; the landscape below it, and clouds, will be less affected, giving a photograph with a darker and more dramatic sky, and emphasizing the clouds.

There are two types of polarizing filters readily available, linear and “circular”, which have exactly the same effect photographically. But the metering and auto-focus sensors in certain cameras, including virtually all auto-focus SLRs, will not work properly with linear polarizers because the beam splitters used to split off the light for focusing and metering are polarization-dependent.

Polarizing filters reduce the light passed through to the film or sensor by about one to three stops (2–8×) depending on how much of the light is polarized at the filter angle selected. Auto-exposure cameras will adjust for this by widening the aperture, lengthening the time the shutter is open, and/or increasing the ASA/ISO speed of the camera.

www.adorama.com/alc/nd-filter-vs-polarizer-what%25e2%2580%2599s-the-difference

Neutral Density (ND) filters help control image exposure by reducing the light that enters the camera so that you can have more control of your depth of field and shutter speed. Polarizers or polarizing filters work in a similar way, but the difference is that they selectively let light waves of a certain polarization pass through. This effect helps create more vivid colors in an image, as well as manage glare and reflections from water surfaces. Both are regarded as some of the best filters for landscape and travel photography as they reduce the dynamic range in high-contrast images, thus enabling photographers to capture more realistic and dramatic sceneries.

shopfelixgray.com/blog/polarized-vs-non-polarized-sunglasses/

www.eyebuydirect.com/blog/difference-polarized-nonpolarized-sunglasses/

-

Christopher Butler – Understanding the Eye-Mind Connection – Vision is a mental process

Read more: Christopher Butler – Understanding the Eye-Mind Connection – Vision is a mental processhttps://www.chrbutler.com/understanding-the-eye-mind-connection

The intricate relationship between the eyes and the brain, often termed the eye-mind connection, reveals that vision is predominantly a cognitive process. This understanding has profound implications for fields such as design, where capturing and maintaining attention is paramount. This essay delves into the nuances of visual perception, the brain’s role in interpreting visual data, and how this knowledge can be applied to effective design strategies.

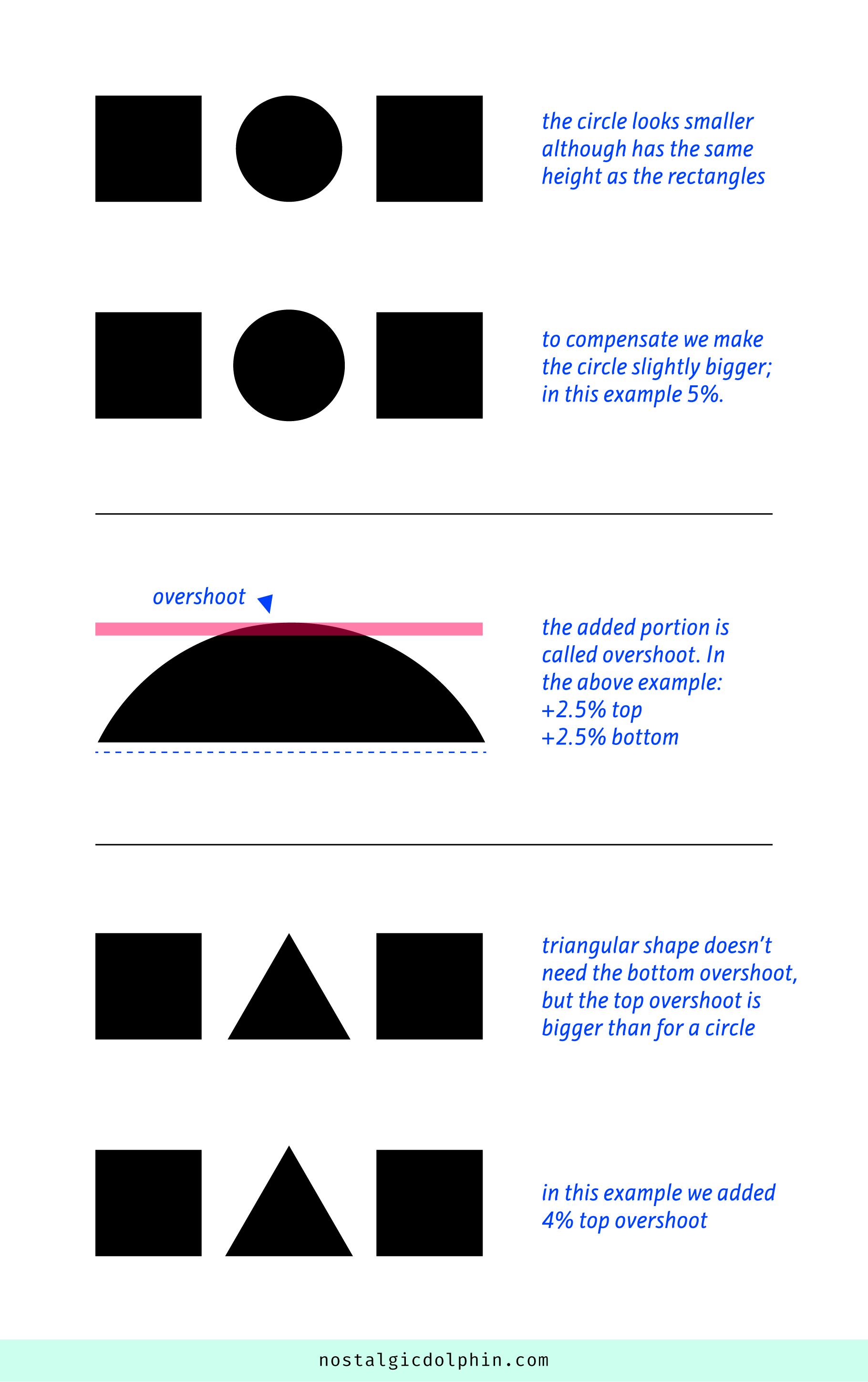

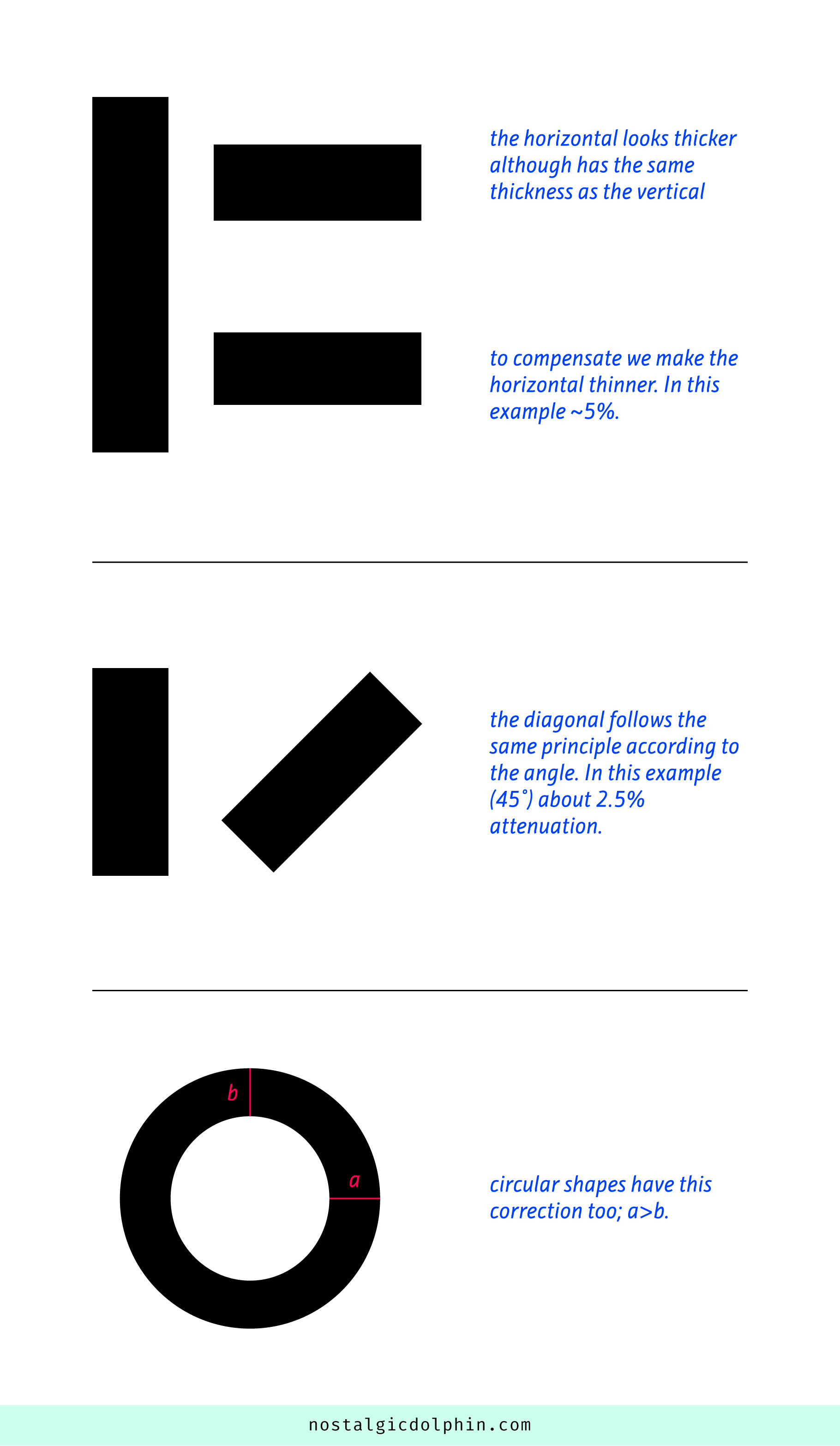

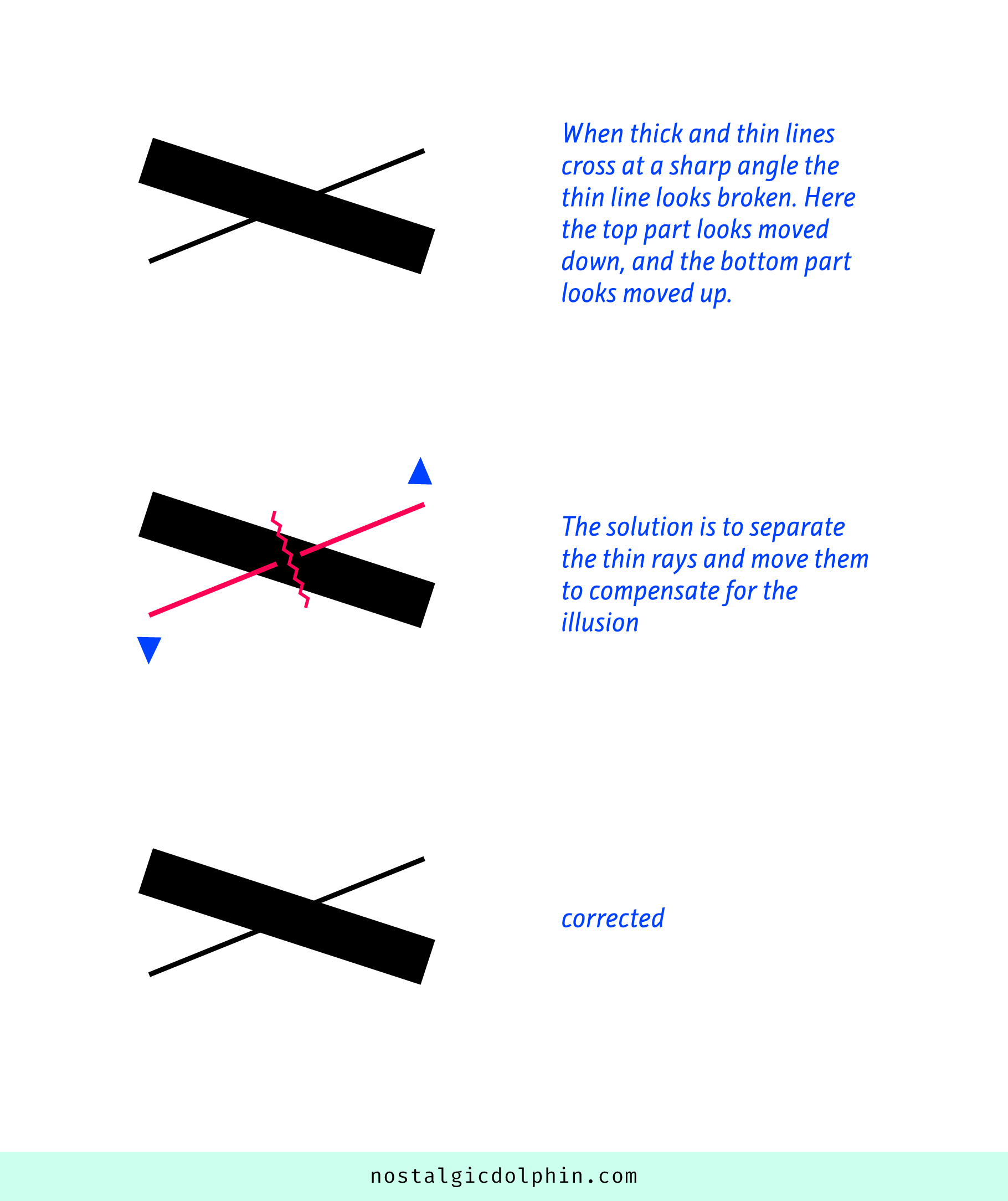

This cognitive aspect of vision is evident in phenomena such as optical illusions, where the brain interprets visual information in a way that contradicts physical reality. These illusions underscore that what we “see” is not merely a direct recording of the external world but a constructed experience shaped by cognitive processes.

Understanding the cognitive nature of vision is crucial for effective design. Designers must consider how the brain processes visual information to create compelling and engaging visuals. This involves several key principles:

- Attention and Engagement

- Visual Hierarchy

- Cognitive Load Management

- Context and Meaning

-

Paul Debevec, Chloe LeGendre, Lukas Lepicovsky – Jointly Optimizing Color Rendition and In-Camera Backgrounds in an RGB Virtual Production Stage

Read more: Paul Debevec, Chloe LeGendre, Lukas Lepicovsky – Jointly Optimizing Color Rendition and In-Camera Backgrounds in an RGB Virtual Production Stagehttps://arxiv.org/pdf/2205.12403.pdf

RGB LEDs vs RGBWP (RGB + lime + phospor converted amber) LEDs

Local copy:

-

Gamma correction

Read more: Gamma correction

http://www.normankoren.com/makingfineprints1A.html#Gammabox

https://en.wikipedia.org/wiki/Gamma_correction

http://www.photoscientia.co.uk/Gamma.htm

https://www.w3.org/Graphics/Color/sRGB.html

http://www.eizoglobal.com/library/basics/lcd_display_gamma/index.html

https://forum.reallusion.com/PrintTopic308094.aspx

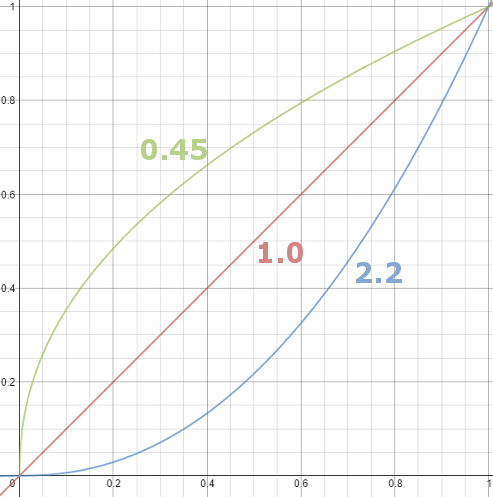

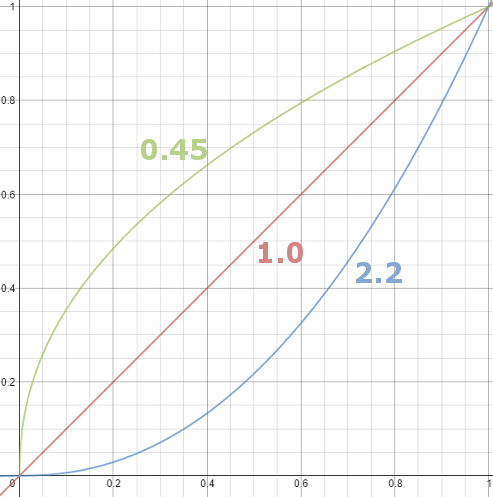

Basically, gamma is the relationship between the brightness of a pixel as it appears on the screen, and the numerical value of that pixel. Generally Gamma is just about defining relationships.

Three main types:

– Image Gamma encoded in images

– Display Gammas encoded in hardware and/or viewing time

– System or Viewing Gamma which is the net effect of all gammas when you look back at a final image. In theory this should flatten back to 1.0 gamma.Our eyes, different camera or video recorder devices do not correctly capture luminance. (they are not linear)

Different display devices (monitor, phone screen, TV) do not display luminance correctly neither. So, one needs to correct them, therefore the gamma correction function.The human perception of brightness, under common illumination conditions (not pitch black nor blindingly bright), follows an approximate power function (note: no relation to the gamma function), with greater sensitivity to relative differences between darker tones than between lighter ones, consistent with the Stevens’ power law for brightness perception. If images are not gamma-encoded, they allocate too many bits or too much bandwidth to highlights that humans cannot differentiate, and too few bits or too little bandwidth to shadow values that humans are sensitive to and would require more bits/bandwidth to maintain the same visual quality.

https://blog.amerlux.com/4-things-architects-should-know-about-lumens-vs-perceived-brightness/

cones manage color receptivity, rods determine how large our pupils should be. The larger (more dilated) our pupils are, the more light enters our eyes. In dark situations, our rods dilate our pupils so we can see better. This impacts how we perceive brightness.

https://www.cambridgeincolour.com/tutorials/gamma-correction.htm

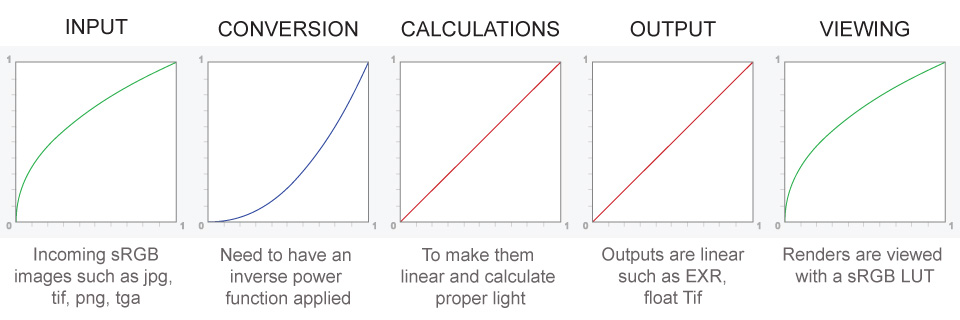

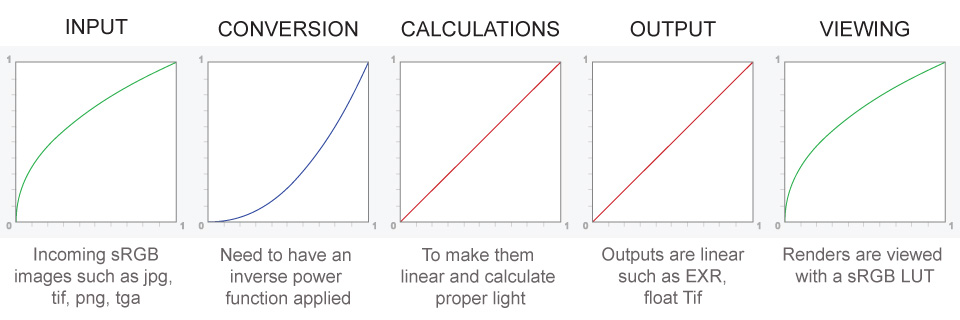

A gamma encoded image has to have “gamma correction” applied when it is viewed — which effectively converts it back into light from the original scene. In other words, the purpose of gamma encoding is for recording the image — not for displaying the image. Fortunately this second step (the “display gamma”) is automatically performed by your monitor and video card. The following diagram illustrates how all of this fits together:

Display gamma

The display gamma can be a little confusing because this term is often used interchangeably with gamma correction, since it corrects for the file gamma. This is the gamma that you are controlling when you perform monitor calibration and adjust your contrast setting. Fortunately, the industry has converged on a standard display gamma of 2.2, so one doesn’t need to worry about the pros/cons of different values.Gamma encoding of images is used to optimize the usage of bits when encoding an image, or bandwidth used to transport an image, by taking advantage of the non-linear manner in which humans perceive light and color. Human response to luminance is also biased. Especially sensible to dark areas.

Thus, the human visual system has a non-linear response to the power of the incoming light, so a fixed increase in power will not have a fixed increase in perceived brightness.

We perceive a value as half bright when it is actually 18% of the original intensity not 50%. As such, our perception is not linear.You probably already know that a pixel can have any ‘value’ of Red, Green, and Blue between 0 and 255, and you would therefore think that a pixel value of 127 would appear as half of the maximum possible brightness, and that a value of 64 would represent one-quarter brightness, and so on. Well, that’s just not the case.

Pixar Color Management

https://renderman.pixar.com/color-management

– Why do we need linear gamma?

Because light works linearly and therefore only works properly when it lights linear values.– Why do we need to view in sRGB?

Because the resulting linear image in not suitable for viewing, but contains all the proper data. Pixar’s IT viewer can compensate by showing the rendered image through a sRGB look up table (LUT), which is identical to what will be the final image after the sRGB gamma curve is applied in post.This would be simple enough if every software would play by the same rules, but they don’t. In fact, the default gamma workflow for many 3D software is incorrect. This is where the knowledge of a proper imaging workflow comes in to save the day.

Cathode-ray tubes have a peculiar relationship between the voltage applied to them, and the amount of light emitted. It isn’t linear, and in fact it follows what’s called by mathematicians and other geeks, a ‘power law’ (a number raised to a power). The numerical value of that power is what we call the gamma of the monitor or system.

Thus. Gamma describes the nonlinear relationship between the pixel levels in your computer and the luminance of your monitor (the light energy it emits) or the reflectance of your prints. The equation is,

Luminance = C * value^gamma + black level

– C is set by the monitor Contrast control.

– Value is the pixel level normalized to a maximum of 1. For an 8 bit monitor with pixel levels 0 – 255, value = (pixel level)/255.

– Black level is set by the (misnamed) monitor Brightness control. The relationship is linear if gamma = 1. The chart illustrates the relationship for gamma = 1, 1.5, 1.8 and 2.2 with C = 1 and black level = 0.

Gamma affects middle tones; it has no effect on black or white. If gamma is set too high, middle tones appear too dark. Conversely, if it’s set too low, middle tones appear too light.

The native gamma of monitors– the relationship between grid voltage and luminance– is typically around 2.5, though it can vary considerably. This is well above any of the display standards, so you must be aware of gamma and correct it.

A display gamma of 2.2 is the de facto standard for the Windows operating system and the Internet-standard sRGB color space.

The old standard for Mcintosh and prepress file interchange is 1.8. It is now 2.2 as well.

Video cameras have gammas of approximately 0.45– the inverse of 2.2. The viewing or system gamma is the product of the gammas of all the devices in the system– the image acquisition device (film+scanner or digital camera), color lookup table (LUT), and monitor. System gamma is typically between 1.1 and 1.5. Viewing flare and other factor make images look flat at system gamma = 1.0.

Most laptop LCD screens are poorly suited for critical image editing because gamma is extremely sensitive to viewing angle.

More about screens

https://www.cambridgeincolour.com/tutorials/gamma-correction.htm

CRT Monitors. Due to an odd bit of engineering luck, the native gamma of a CRT is 2.5 — almost the inverse of our eyes. Values from a gamma-encoded file could therefore be sent straight to the screen and they would automatically be corrected and appear nearly OK. However, a small gamma correction of ~1/1.1 needs to be applied to achieve an overall display gamma of 2.2. This is usually already set by the manufacturer’s default settings, but can also be set during monitor calibration.

LCD Monitors. LCD monitors weren’t so fortunate; ensuring an overall display gamma of 2.2 often requires substantial corrections, and they are also much less consistent than CRT’s. LCDs therefore require something called a look-up table (LUT) in order to ensure that input values are depicted using the intended display gamma (amongst other things). See the tutorial on monitor calibration: look-up tables for more on this topic.

About black level (brightness). Your monitor’s brightness control (which should actually be called black level) can be adjusted using the mostly black pattern on the right side of the chart. This pattern contains two dark gray vertical bars, A and B, which increase in luminance with increasing gamma. (If you can’t see them, your black level is way low.) The left bar (A) should be just above the threshold of visibility opposite your chosen gamma (2.2 or 1.8)– it should be invisible where gamma is lower by about 0.3. The right bar (B) should be distinctly visible: brighter than (A), but still very dark. This chart is only for monitors; it doesn’t work on printed media.

The 1.8 and 2.2 gray patterns at the bottom of the image represent a test of monitor quality and calibration. If your monitor is functioning properly and calibrated to gamma = 2.2 or 1.8, the corresponding pattern will appear smooth neutral gray when viewed from a distance. Any waviness, irregularity, or color banding indicates incorrect monitor calibration or poor performance.

Another test to see whether one’s computer monitor is properly hardware adjusted and can display shadow detail in sRGB images properly, they should see the left half of the circle in the large black square very faintly but the right half should be clearly visible. If not, one can adjust their monitor’s contrast and/or brightness setting. This alters the monitor’s perceived gamma. The image is best viewed against a black background.

This procedure is not suitable for calibrating or print-proofing a monitor. It can be useful for making a monitor display sRGB images approximately correctly, on systems in which profiles are not used (for example, the Firefox browser prior to version 3.0 and many others) or in systems that assume untagged source images are in the sRGB colorspace.

On some operating systems running the X Window System, one can set the gamma correction factor (applied to the existing gamma value) by issuing the command xgamma -gamma 0.9 for setting gamma correction factor to 0.9, and xgamma for querying current value of that factor (the default is 1.0). In OS X systems, the gamma and other related screen calibrations are made through the System Preference

https://www.kinematicsoup.com/news/2016/6/15/gamma-and-linear-space-what-they-are-how-they-differ

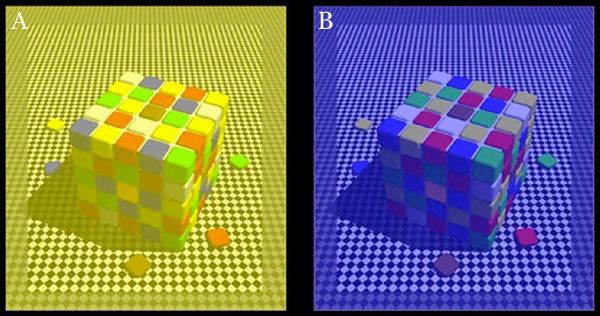

Linear color space means that numerical intensity values correspond proportionally to their perceived intensity. This means that the colors can be added and multiplied correctly. A color space without that property is called ”non-linear”. Below is an example where an intensity value is doubled in a linear and a non-linear color space. While the corresponding numerical values in linear space are correct, in the non-linear space (gamma = 0.45, more on this later) we can’t simply double the value to get the correct intensity.

The need for gamma arises for two main reasons: The first is that screens have been built with a non-linear response to intensity. The other is that the human eye can tell the difference between darker shades better than lighter shades. This means that when images are compressed to save space, we want to have greater accuracy for dark intensities at the expense of lighter intensities. Both of these problems are resolved using gamma correction, which is to say the intensity of every pixel in an image is put through a power function. Specifically, gamma is the name given to the power applied to the image.

CRT screens, simply by how they work, apply a gamma of around 2.2, and modern LCD screens are designed to mimic that behavior. A gamma of 2.2, the reciprocal of 0.45, when applied to the brightened images will darken them, leaving the original image.

-

No one could see the colour blue until modern times

Read more: No one could see the colour blue until modern timeshttps://www.businessinsider.com/what-is-blue-and-how-do-we-see-color-2015-2

The way that humans see the world… until we have a way to describe something, even something so fundamental as a colour, we may not even notice that something it’s there.

Ancient languages didn’t have a word for blue — not Greek, not Chinese, not Japanese, not Hebrew, not Icelandic cultures. And without a word for the colour, there’s evidence that they may not have seen it at all.

https://www.wnycstudios.org/story/211119-colors

Every language first had a word for black and for white, or dark and light. The next word for a colour to come into existence — in every language studied around the world — was red, the colour of blood and wine.

After red, historically, yellow appears, and later, green (though in a couple of languages, yellow and green switch places). The last of these colours to appear in every language is blue.

The only ancient culture to develop a word for blue was the Egyptians — and as it happens, they were also the only culture that had a way to produce a blue dye.

https://mymodernmet.com/shades-of-blue-color-history/

Considered to be the first ever synthetically produced color pigment, Egyptian blue (also known as cuprorivaite) was created around 2,200 B.C. It was made from ground limestone mixed with sand and a copper-containing mineral, such as azurite or malachite, which was then heated between 1470 and 1650°F. The result was an opaque blue glass which then had to be crushed and combined with thickening agents such as egg whites to create a long-lasting paint or glaze.

If you think about it, blue doesn’t appear much in nature — there aren’t animals with blue pigments (except for one butterfly, Obrina Olivewing, all animals generate blue through light scattering), blue eyes are rare (also blue through light scattering), and blue flowers are mostly human creations. There is, of course, the sky, but is that really blue?

So before we had a word for it, did people not naturally see blue? Do you really see something if you don’t have a word for it?

A researcher named Jules Davidoff traveled to Namibia to investigate this, where he conducted an experiment with the Himba tribe, who speak a language that has no word for blue or distinction between blue and green. When shown a circle with 11 green squares and one blue, they couldn’t pick out which one was different from the others.

When looking at a circle of green squares with only one slightly different shade, they could immediately spot the different one. Can you?

Davidoff says that without a word for a colour, without a way of identifying it as different, it’s much harder for us to notice what’s unique about it — even though our eyes are physically seeing the blocks it in the same way.

Further research brought to wider discussions about color perception in humans. Everything that we make is based on the fact that humans are trichromatic. The television only has 3 colors. Our color printers have 3 different colors. But some people, and in specific some women seemed to be more sensible to color differences… mainly because they’re just more aware or – because of the job that they do.

Eventually this brought to the discovery of a small percentage of the population, referred to as tetrachromats, which developed an extra cone sensitivity to yellow, likely due to gene modifications.

The interesting detail about these is that even between tetrachromats, only the ones that had a reason to develop, label and work with extra color sensitivity actually developed the ability to use their native skills.

So before blue became a common concept, maybe humans saw it. But it seems they didn’t know they were seeing it.

If you see something yet can’t see it, does it exist? Did colours come into existence over time? Not technically, but our ability to notice them… may have…

-

Björn Ottosson – How software gets color wrong

Read more: Björn Ottosson – How software gets color wronghttps://bottosson.github.io/posts/colorwrong/

Most software around us today are decent at accurately displaying colors. Processing of colors is another story unfortunately, and is often done badly.

To understand what the problem is, let’s start with an example of three ways of blending green and magenta:

- Perceptual blend – A smooth transition using a model designed to mimic human perception of color. The blending is done so that the perceived brightness and color varies smoothly and evenly.

- Linear blend – A model for blending color based on how light behaves physically. This type of blending can occur in many ways naturally, for example when colors are blended together by focus blur in a camera or when viewing a pattern of two colors at a distance.

- sRGB blend – This is how colors would normally be blended in computer software, using sRGB to represent the colors.

Let’s look at some more examples of blending of colors, to see how these problems surface more practically. The examples use strong colors since then the differences are more pronounced. This is using the same three ways of blending colors as the first example.

Instead of making it as easy as possible to work with color, most software make it unnecessarily hard, by doing image processing with representations not designed for it. Approximating the physical behavior of light with linear RGB models is one easy thing to do, but more work is needed to create image representations tailored for image processing and human perception.

Also see:

LIGHTING

-

Gamma correction

Read more: Gamma correction

http://www.normankoren.com/makingfineprints1A.html#Gammabox

https://en.wikipedia.org/wiki/Gamma_correction

http://www.photoscientia.co.uk/Gamma.htm

https://www.w3.org/Graphics/Color/sRGB.html

http://www.eizoglobal.com/library/basics/lcd_display_gamma/index.html

https://forum.reallusion.com/PrintTopic308094.aspx

Basically, gamma is the relationship between the brightness of a pixel as it appears on the screen, and the numerical value of that pixel. Generally Gamma is just about defining relationships.

Three main types:

– Image Gamma encoded in images

– Display Gammas encoded in hardware and/or viewing time

– System or Viewing Gamma which is the net effect of all gammas when you look back at a final image. In theory this should flatten back to 1.0 gamma.Our eyes, different camera or video recorder devices do not correctly capture luminance. (they are not linear)

Different display devices (monitor, phone screen, TV) do not display luminance correctly neither. So, one needs to correct them, therefore the gamma correction function.The human perception of brightness, under common illumination conditions (not pitch black nor blindingly bright), follows an approximate power function (note: no relation to the gamma function), with greater sensitivity to relative differences between darker tones than between lighter ones, consistent with the Stevens’ power law for brightness perception. If images are not gamma-encoded, they allocate too many bits or too much bandwidth to highlights that humans cannot differentiate, and too few bits or too little bandwidth to shadow values that humans are sensitive to and would require more bits/bandwidth to maintain the same visual quality.

https://blog.amerlux.com/4-things-architects-should-know-about-lumens-vs-perceived-brightness/

cones manage color receptivity, rods determine how large our pupils should be. The larger (more dilated) our pupils are, the more light enters our eyes. In dark situations, our rods dilate our pupils so we can see better. This impacts how we perceive brightness.

https://www.cambridgeincolour.com/tutorials/gamma-correction.htm

A gamma encoded image has to have “gamma correction” applied when it is viewed — which effectively converts it back into light from the original scene. In other words, the purpose of gamma encoding is for recording the image — not for displaying the image. Fortunately this second step (the “display gamma”) is automatically performed by your monitor and video card. The following diagram illustrates how all of this fits together:

Display gamma

The display gamma can be a little confusing because this term is often used interchangeably with gamma correction, since it corrects for the file gamma. This is the gamma that you are controlling when you perform monitor calibration and adjust your contrast setting. Fortunately, the industry has converged on a standard display gamma of 2.2, so one doesn’t need to worry about the pros/cons of different values.Gamma encoding of images is used to optimize the usage of bits when encoding an image, or bandwidth used to transport an image, by taking advantage of the non-linear manner in which humans perceive light and color. Human response to luminance is also biased. Especially sensible to dark areas.

Thus, the human visual system has a non-linear response to the power of the incoming light, so a fixed increase in power will not have a fixed increase in perceived brightness.

We perceive a value as half bright when it is actually 18% of the original intensity not 50%. As such, our perception is not linear.You probably already know that a pixel can have any ‘value’ of Red, Green, and Blue between 0 and 255, and you would therefore think that a pixel value of 127 would appear as half of the maximum possible brightness, and that a value of 64 would represent one-quarter brightness, and so on. Well, that’s just not the case.

Pixar Color Management

https://renderman.pixar.com/color-management

– Why do we need linear gamma?

Because light works linearly and therefore only works properly when it lights linear values.– Why do we need to view in sRGB?

Because the resulting linear image in not suitable for viewing, but contains all the proper data. Pixar’s IT viewer can compensate by showing the rendered image through a sRGB look up table (LUT), which is identical to what will be the final image after the sRGB gamma curve is applied in post.This would be simple enough if every software would play by the same rules, but they don’t. In fact, the default gamma workflow for many 3D software is incorrect. This is where the knowledge of a proper imaging workflow comes in to save the day.

Cathode-ray tubes have a peculiar relationship between the voltage applied to them, and the amount of light emitted. It isn’t linear, and in fact it follows what’s called by mathematicians and other geeks, a ‘power law’ (a number raised to a power). The numerical value of that power is what we call the gamma of the monitor or system.

Thus. Gamma describes the nonlinear relationship between the pixel levels in your computer and the luminance of your monitor (the light energy it emits) or the reflectance of your prints. The equation is,

Luminance = C * value^gamma + black level

– C is set by the monitor Contrast control.

– Value is the pixel level normalized to a maximum of 1. For an 8 bit monitor with pixel levels 0 – 255, value = (pixel level)/255.

– Black level is set by the (misnamed) monitor Brightness control. The relationship is linear if gamma = 1. The chart illustrates the relationship for gamma = 1, 1.5, 1.8 and 2.2 with C = 1 and black level = 0.

Gamma affects middle tones; it has no effect on black or white. If gamma is set too high, middle tones appear too dark. Conversely, if it’s set too low, middle tones appear too light.

The native gamma of monitors– the relationship between grid voltage and luminance– is typically around 2.5, though it can vary considerably. This is well above any of the display standards, so you must be aware of gamma and correct it.

A display gamma of 2.2 is the de facto standard for the Windows operating system and the Internet-standard sRGB color space.

The old standard for Mcintosh and prepress file interchange is 1.8. It is now 2.2 as well.

Video cameras have gammas of approximately 0.45– the inverse of 2.2. The viewing or system gamma is the product of the gammas of all the devices in the system– the image acquisition device (film+scanner or digital camera), color lookup table (LUT), and monitor. System gamma is typically between 1.1 and 1.5. Viewing flare and other factor make images look flat at system gamma = 1.0.

Most laptop LCD screens are poorly suited for critical image editing because gamma is extremely sensitive to viewing angle.

More about screens

https://www.cambridgeincolour.com/tutorials/gamma-correction.htm

CRT Monitors. Due to an odd bit of engineering luck, the native gamma of a CRT is 2.5 — almost the inverse of our eyes. Values from a gamma-encoded file could therefore be sent straight to the screen and they would automatically be corrected and appear nearly OK. However, a small gamma correction of ~1/1.1 needs to be applied to achieve an overall display gamma of 2.2. This is usually already set by the manufacturer’s default settings, but can also be set during monitor calibration.

LCD Monitors. LCD monitors weren’t so fortunate; ensuring an overall display gamma of 2.2 often requires substantial corrections, and they are also much less consistent than CRT’s. LCDs therefore require something called a look-up table (LUT) in order to ensure that input values are depicted using the intended display gamma (amongst other things). See the tutorial on monitor calibration: look-up tables for more on this topic.

About black level (brightness). Your monitor’s brightness control (which should actually be called black level) can be adjusted using the mostly black pattern on the right side of the chart. This pattern contains two dark gray vertical bars, A and B, which increase in luminance with increasing gamma. (If you can’t see them, your black level is way low.) The left bar (A) should be just above the threshold of visibility opposite your chosen gamma (2.2 or 1.8)– it should be invisible where gamma is lower by about 0.3. The right bar (B) should be distinctly visible: brighter than (A), but still very dark. This chart is only for monitors; it doesn’t work on printed media.

The 1.8 and 2.2 gray patterns at the bottom of the image represent a test of monitor quality and calibration. If your monitor is functioning properly and calibrated to gamma = 2.2 or 1.8, the corresponding pattern will appear smooth neutral gray when viewed from a distance. Any waviness, irregularity, or color banding indicates incorrect monitor calibration or poor performance.

Another test to see whether one’s computer monitor is properly hardware adjusted and can display shadow detail in sRGB images properly, they should see the left half of the circle in the large black square very faintly but the right half should be clearly visible. If not, one can adjust their monitor’s contrast and/or brightness setting. This alters the monitor’s perceived gamma. The image is best viewed against a black background.

This procedure is not suitable for calibrating or print-proofing a monitor. It can be useful for making a monitor display sRGB images approximately correctly, on systems in which profiles are not used (for example, the Firefox browser prior to version 3.0 and many others) or in systems that assume untagged source images are in the sRGB colorspace.

On some operating systems running the X Window System, one can set the gamma correction factor (applied to the existing gamma value) by issuing the command xgamma -gamma 0.9 for setting gamma correction factor to 0.9, and xgamma for querying current value of that factor (the default is 1.0). In OS X systems, the gamma and other related screen calibrations are made through the System Preference

https://www.kinematicsoup.com/news/2016/6/15/gamma-and-linear-space-what-they-are-how-they-differ

Linear color space means that numerical intensity values correspond proportionally to their perceived intensity. This means that the colors can be added and multiplied correctly. A color space without that property is called ”non-linear”. Below is an example where an intensity value is doubled in a linear and a non-linear color space. While the corresponding numerical values in linear space are correct, in the non-linear space (gamma = 0.45, more on this later) we can’t simply double the value to get the correct intensity.

The need for gamma arises for two main reasons: The first is that screens have been built with a non-linear response to intensity. The other is that the human eye can tell the difference between darker shades better than lighter shades. This means that when images are compressed to save space, we want to have greater accuracy for dark intensities at the expense of lighter intensities. Both of these problems are resolved using gamma correction, which is to say the intensity of every pixel in an image is put through a power function. Specifically, gamma is the name given to the power applied to the image.

CRT screens, simply by how they work, apply a gamma of around 2.2, and modern LCD screens are designed to mimic that behavior. A gamma of 2.2, the reciprocal of 0.45, when applied to the brightened images will darken them, leaving the original image.

-

domeble – Hi-Resolution CGI Backplates and 360° HDRI

Read more: domeble – Hi-Resolution CGI Backplates and 360° HDRIWhen collecting hdri make sure the data supports basic metadata, such as:

- Iso

- Aperture

- Exposure time or shutter time

- Color temperature

- Color space Exposure value (what the sensor receives of the sun intensity in lux)

- 7+ brackets (with 5 or 6 being the perceived balanced exposure)

In image processing, computer graphics, and photography, high dynamic range imaging (HDRI or just HDR) is a set of techniques that allow a greater dynamic range of luminances (a Photometry measure of the luminous intensity per unit area of light travelling in a given direction. It describes the amount of light that passes through or is emitted from a particular area, and falls within a given solid angle) between the lightest and darkest areas of an image than standard digital imaging techniques or photographic methods. This wider dynamic range allows HDR images to represent more accurately the wide range of intensity levels found in real scenes ranging from direct sunlight to faint starlight and to the deepest shadows.

The two main sources of HDR imagery are computer renderings and merging of multiple photographs, which in turn are known as low dynamic range (LDR) or standard dynamic range (SDR) images. Tone Mapping (Look-up) techniques, which reduce overall contrast to facilitate display of HDR images on devices with lower dynamic range, can be applied to produce images with preserved or exaggerated local contrast for artistic effect. Photography

In photography, dynamic range is measured in Exposure Values (in photography, exposure value denotes all combinations of camera shutter speed and relative aperture that give the same exposure. The concept was developed in Germany in the 1950s) differences or stops, between the brightest and darkest parts of the image that show detail. An increase of one EV or one stop is a doubling of the amount of light.

The human response to brightness is well approximated by a Steven’s power law, which over a reasonable range is close to logarithmic, as described by the Weber�Fechner law, which is one reason that logarithmic measures of light intensity are often used as well.

HDR is short for High Dynamic Range. It’s a term used to describe an image which contains a greater exposure range than the “black” to “white” that 8 or 16-bit integer formats (JPEG, TIFF, PNG) can describe. Whereas these Low Dynamic Range images (LDR) can hold perhaps 8 to 10 f-stops of image information, HDR images can describe beyond 30 stops and stored in 32 bit images.

-

Romain Chauliac – LightIt a lighting script for Maya and Arnold

Read more: Romain Chauliac – LightIt a lighting script for Maya and ArnoldLightIt is a script for Maya and Arnold that will help you and improve your lighting workflow.

Thanks to preset studio lighting components (lights, backdrop…), high quality studio scenes and HDRI library manager.https://www.artstation.com/artwork/393emJ

-

HDRI Resources

Read more: HDRI ResourcesText2Light

- https://www.cgtrader.com/free-3d-models/exterior/other/10-free-hdr-panoramas-created-with-text2light-zero-shot

- https://frozenburning.github.io/projects/text2light/

- https://github.com/FrozenBurning/Text2Light

Royalty free links

- https://locationtextures.com/panoramas/

- http://www.noahwitchell.com/freebies

- https://polyhaven.com/hdris

- https://hdrmaps.com/

- https://www.ihdri.com/

- https://hdrihaven.com/

- https://www.domeble.com/

- http://www.hdrlabs.com/sibl/archive.html

- https://www.hdri-hub.com/hdrishop/hdri

- http://noemotionhdrs.net/hdrevening.html

- https://www.openfootage.net/hdri-panorama/

- https://www.zwischendrin.com/en/browse/hdri

Nvidia GauGAN360

-

Tracing Spherical harmonics and how Weta used them in production

Read more: Tracing Spherical harmonics and how Weta used them in productionA way to approximate complex lighting in ultra realistic renders.

All SH lighting techniques involve replacing parts of standard lighting equations with spherical functions that have been projected into frequency space using the spherical harmonics as a basis.

http://www.cs.columbia.edu/~cs4162/slides/spherical-harmonic-lighting.pdf

Spherical harmonics as used at Weta Digital

https://www.fxguide.com/fxfeatured/the-science-of-spherical-harmonics-at-weta-digital/

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

RawTherapee – a free, open source, cross-platform raw image and HDRi processing program

-

Scene Referred vs Display Referred color workflows

-

Image rendering bit depth

-

Photography basics: How Exposure Stops (Aperture, Shutter Speed, and ISO) Affect Your Photos – cheat sheet cards

-

Ross Pettit on The Agile Manager – How tech firms went for prioritizing cash flow instead of talent

-

UV maps

-

Principles of Animation with Alan Becker, Dermot OConnor and Shaun Keenan

-

Glossary of Lighting Terms – cheat sheet

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.