COMPOSITION

DESIGN

-

Glenn Marshall – The Crow

Created with AI ‘Style Transfer’ processes to transform video footage into AI video art.

COLOR

-

Composition – cinematography Cheat Sheet

Where is our eye attracted first? Why?

Size. Focus. Lighting. Color.

Size. Mr. White (Harvey Keitel) on the right.

Focus. He’s one of the two objects in focus.

Lighting. Mr. White is large and in focus and Mr. Pink (Steve Buscemi) is highlighted by a shaft of light.

Color. Both are black and white but the red on Mr. White’s shirt now really stands out.

What type of lighting?

-> High key lighting.

Features bright, even illumination and few conspicuous shadows. This lighting key is often used in musicals and comedies.

Low key lighting.

Features diffused shadows and atmospheric pools of light. This lighting key is often used in mysteries and thrillers.

High contrast lighting.

Features harsh shafts of light and dramatic streaks of blackness. This type of lighting is often used in tragedies and melodramas.

What type of shot?

Extreme long shot

Taken from a great distance, showing much of the locale. If people are included in these shots, they usually appear as mere specks.

-> Long shot

Corresponds to the space between the audience and the stage in a live theater. The long shots show the characters and some of the locale.

Full shot

Range with just enough space to contain the human body in full. The full shot shows the character and a minimal amount of the locale.

Medium shot

Shows the human figure from the knees or waist up.

Close-Up

Concentrates on a relatively small object and shows very little if any locale.

Extreme close-up

Focuses on an unnaturally small portion of an object, giving that part great detail and symbolic significance.

What angle?

Bird’s-eye view.

The shot is photographed directly from above. This type of shot can be disorienting, and the people photographed seem insignificant.

High angle.

This angle reduces the size of the objects photographed. A person photographed from this angle seems harmless and insignificant, but to a lesser extent than with the bird’s-eye view.

-> Eye-level shot.

The clearest view of an object, but seldom intrinsically dramatic, because it tends to be the norm.

Low angle.

This angle increases height and a sense of verticality, heightening the importance of the object photographed. A person shot from this angle is given a sense of power and respect.

Oblique angle.

For this angle, the camera is tilted laterally, giving the image a slanted appearance. Oblique angles suggest tension, transition, or impending movement. They are also called canted or Dutch angles.

What is the dominant color?

The use of color in this shot is symbolic. The scene is set in a warehouse. Both the set and the characters are blues, blacks and whites.

This was intentional, allowing the scenes and shots with blood to have a great level of contrast.

What is the Lens/Filter/Stock?

Telephoto lens.

A lens that draws objects closer but also diminishes the illusion of depth.

Wide-angle lens.

A lens that takes in a broad area and increases the illusion of depth but sometimes distorts the edges of the image.

Fast film stock.

Highly sensitive to light, it can register an image with little illumination. However, the final product tends to be grainy.

Slow film stock.

Relatively insensitive to light, it requires a great deal of illumination. The final product tends to look polished.

The lens is not wide-angle because there isn’t a great sense of depth, nor are several planes in focus. The lens is probably long but not necessarily a telephoto lens because the depth isn’t inordinately compressed.

The stock is fast because of the grainy quality of the image.

Subsidiary Contrast; where does the eye go next?

The two guns.

How much visual information is packed into the image? Is the texture stark, moderate, or highly detailed?

Minimal clutter in the warehouse keeps the focus on a character-driven thriller.

What is the Composition?

Horizontal.

Compositions based on horizontal lines seem visually at rest and suggest placidity or peacefulness.

Vertical.

Compositions based on vertical lines seem visually at rest and suggest strength.

-> Diagonal.

Compositions based on diagonal, or oblique, lines seem dynamic and suggest tension or anxiety.

-> Binary.

Binary structures emphasize parallelism.

Triangle.

Triadic compositions stress the dynamic interplay among three main elements.

Circle.

Circular compositions suggest security and enclosure.

Is the form open or closed? Does the image suggest a window that arbitrarily isolates a fragment of the scene? Or a proscenium arch, in which the visual elements are carefully arranged and held in balance?

The most nebulous of all the categories of mise en scene, the type of form is determined by how consciously structured the mise en scene is. Open forms stress apparently simple techniques, because with these unself-conscious methods the filmmaker is able to emphasize the immediate, the familiar, the intimate aspects of reality. In open-form images, the frame tends to be deemphasized. In closed form images, all the necessary information is carefully structured within the confines of the frame. Space seems enclosed and self-contained rather than continuous.

One could argue this is a proscenium arch, because it is such a classic shot with parallels and juxtapositions.

Is the framing tight or loose? Do the characters have no room to move around, or can they move freely without impediments?

Shots where the characters are placed at the edges of the frame and have little room to move around within the frame are considered tight.

Longer shots, in which characters have room to move around within the frame, are considered loose and tend to suggest freedom.

Center-framed, giving us the entire scene and showing isolation, place and struggle.

Depth of Field. On how many planes is the image composed (how many are in focus)? Does the background or foreground comment in any way on the mid-ground?

Standard DOF, one background and clearly defined foreground.

Which way do the characters look vis-a-vis the camera?

An actor can be photographed in any of five basic positions, each conveying different psychological overtones.

Full-front (facing the camera):

The position with the most intimacy. The character is looking in our direction, inviting our complicity.

Quarter Turn:

The favored position of most filmmakers. This position offers a high degree of intimacy but with less emotional involvement than the full-front.

-> Profile (looking off frame left or right):

More remote than the quarter turn, the character in profile seems unaware of being observed, lost in his or her own thoughts.

Three-quarter Turn:

More anonymous than the profile, this position is useful for conveying a character’s unfriendly or antisocial feelings, for in effect, the character is partially turning his or her back on us, rejecting our interest.

Back to Camera:

The most anonymous of all positions, this position is often used to suggest a character’s alienation from the world. When a character has his or her back to the camera, we can only guess what’s taking place internally, conveying a sense of concealment, or mystery.

How much space is there between the characters?

Extremely close, for a gunfight.

The way people use space can be divided into four proxemic patterns.

Intimate distances.

The intimate distance ranges from skin contact to about eighteen inches away. This is the distance of physical involvement, of love, comfort, and tenderness between individuals.

-> Personal distances.

The personal distance ranges roughly from eighteen inches away to about four feet away. These distances tend to be reserved for friends and acquaintances. Personal distances preserve the privacy between individuals, yet these ranges don’t necessarily suggest exclusion, as intimate distances often do.

Social distances.

The social distance ranges from four feet to about twelve feet. These distances are usually reserved for impersonal business and casual social gatherings. It’s a friendly range in most cases, yet somewhat more formal than the personal distance.

Public distances.

The public distance extends from twelve feet to twenty-five feet or more. This range tends to be formal and rather detached.
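The four proxemic ranges can be written down as a small lookup. This is only a sketch: the function name `proxemic_pattern` is hypothetical, and since the ranges are given as "about" and "roughly", treating each upper bound as inclusive is an assumption.

```python
# Upper bounds in inches, taken from the ranges described above.
# Treating each bound as inclusive is an assumption; the text only says "about".
PROXEMIC_RANGES = [
    (18, "intimate"),       # skin contact to about eighteen inches
    (4 * 12, "personal"),   # eighteen inches to about four feet
    (12 * 12, "social"),    # four feet to about twelve feet
]


def proxemic_pattern(distance_inches: float) -> str:
    """Return the proxemic pattern for a distance between two characters."""
    if distance_inches < 0:
        raise ValueError("distance cannot be negative")
    for upper_bound, label in PROXEMIC_RANGES:
        if distance_inches <= upper_bound:
            return label
    # Anything beyond twelve feet falls into the public range.
    return "public"
```

For example, two characters standing about three feet apart (36 inches) would be classified as being at a personal distance.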

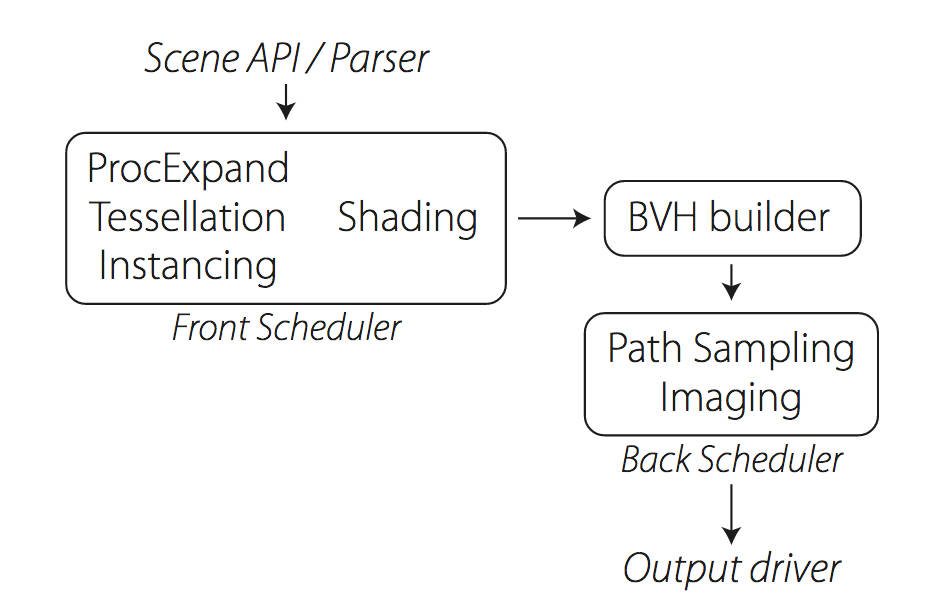

Weta Digital – Manuka Raytracer and Gazebo GPU renderers – pipeline

https://jo.dreggn.org/home/2018_manuka.pdf

http://www.fxguide.com/featured/manuka-weta-digitals-new-renderer/

The Manuka rendering architecture has been designed in the spirit of the classic Reyes rendering architecture. In its core, Reyes is based on stochastic rasterisation of micropolygons, facilitating depth of field, motion blur, high geometric complexity, and programmable shading.

This is commonly achieved with Monte Carlo path tracing, using a paradigm often called shade-on-hit, in which the renderer alternates tracing rays with running shaders on the various ray hits. The shaders take the role of generating the inputs of the local material structure which is then used by path sampling logic to evaluate contributions and to inform what further rays to cast through the scene.

Over the years, however, the expectations have risen substantially when it comes to image quality. Computing pictures which are indistinguishable from real footage requires accurate simulation of light transport, which is most often performed using some variant of Monte Carlo path tracing. Unfortunately this paradigm requires random memory accesses to the whole scene and does not lend itself well to a rasterisation approach at all.

Manuka is both a uni-directional and bidirectional path tracer and encompasses multiple importance sampling (MIS). Interestingly, and importantly for production character skin work, it is the first major production renderer to incorporate spectral MIS in the form of a new ‘Hero Spectral Sampling’ technique, which was recently published at Eurographics Symposium on Rendering 2014.

Manuka proposes a shade-before-hit paradigm instead, minimising I/O strain (and some memory costs) on the system and leveraging locality of reference by running pattern generation shaders before light transport simulation by path sampling is executed, “compressing” any BVH structure as needed and, as such, also limiting duplication of source data.

The difference from Reyes is that instead of baking colors into the geometry, Manuka bakes surface closures. This means that light transport is still calculated with path tracing, but all texture lookups etc. are done up-front and baked into the geometry.

The main drawback of this method is that geometry has to be tessellated to its highest, stable topology before shading can be evaluated properly, hence the high cost to first pixel. Even a basic four-vertex square becomes a much more complex model with this approach.

Manuka uses the RenderMan Shading Language (RSL) for programmable shading [Pixar Animation Studios 2015], but we do not invoke RSL shaders when intersecting a ray with a surface (often called shade-on-hit). Instead, we pre-tessellate and pre-shade all the input geometry in the front end of the renderer.

This way, we can efficiently order shading computations to support near-optimal texture locality, vectorisation, and parallelism. This system avoids repeated evaluation of shaders at the same surface point, and presents a minimal amount of memory to be accessed during light transport time. An added benefit is that the acceleration structure for ray tracing (a bounding volume hierarchy, BVH) is built once on the final tessellated geometry, which allows us to ray trace more efficiently than multi-level BVHs and avoids costly caching of on-demand tessellated micropolygons and the associated scheduling issues.

For the shading reasons above, in terms of AOVs, the studio approach is to succeed at combining complex shading with ray paths in the render rather than pass a multi-pass render to compositing.

For the spectral rendering component, the light transport stage is fully spectral, using a continuously sampled wavelength which is traced with each path and used to apply the spectral camera sensitivity of the sensor. This makes it possible to faithfully reproduce any degree of observer metamerism present in the camera footage the renders are intended to match, and to support complex materials which require wavelength-dependent phenomena such as diffraction, dispersion, interference, iridescence, or chromatic extinction and Rayleigh scattering in participating media.

As opposed to the original Reyes paper, we use bilinear interpolation of these BSDF inputs later when evaluating BSDFs per path vertex during light transport. This improves temporal stability of geometry which moves very slowly with respect to the pixel raster.
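As a toy illustration of the shade-before-hit idea, the sketch below pre-shades the four corners of a single unit micropolygon once and then answers every later lookup by bilinear interpolation, without calling the shader again. It is not Manuka code: `CountingShader`, `pre_shade` and `bsdf_input_at` are hypothetical names, and the shader is a stand-in for an expensive pattern or texture evaluation that returns one scalar BSDF input.

```python
from typing import Callable, Dict, Tuple

Corner = Tuple[int, int]
CORNERS = ((0, 0), (1, 0), (0, 1), (1, 1))


class CountingShader:
    """Hypothetical pattern shader that counts how often it is invoked."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, u: float, v: float) -> float:
        self.calls += 1
        # Stand-in for an expensive texture lookup producing one BSDF input.
        return 0.2 + 0.5 * u + 0.25 * v


def pre_shade(shader: Callable[[float, float], float]) -> Dict[Corner, float]:
    """Front end: evaluate the shader once per corner of a unit micropolygon."""
    return {corner: shader(*corner) for corner in CORNERS}


def bsdf_input_at(shaded: Dict[Corner, float], u: float, v: float) -> float:
    """Light transport time: bilinear interpolation only, no shader call."""
    bottom = shaded[(0, 0)] * (1 - u) + shaded[(1, 0)] * u  # edge v = 0
    top = shaded[(0, 1)] * (1 - u) + shaded[(1, 1)] * u     # edge v = 1
    return bottom * (1 - v) + top * v
```

However many path vertices land on this micropolygon, the shader runs exactly four times, which is the property the text describes as avoiding repeated evaluation of shaders at the same surface point.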

In terms of the pipeline, everything rendered at Weta was already completely interwoven with their deep data pipeline. Manuka very much was written with deep data in mind. Here, Manuka does not so much extend the deep capabilities as fully match the already extremely complex and powerful setup Weta Digital enjoys with RenderMan. For example, an ape in a scene can be selected, its ID is available, and a NUKE artist can then paint in 3D on, say, a hand and part of the way up the neutral-posed ape.

We called our system Manuka, as a respectful nod to Reyes: we had heard a story from a former ILM employee about how Reyes got its name from how fond the early Pixar people were of their lunches at Point Reyes, and decided to name our system after our surrounding natural environment, too. Manuka is a kind of tea tree very common in New Zealand which has very many very small leaves, in analogy to micropolygons in a tree structure for ray tracing. It also happens to be the case that Weta Digital’s main site is on Manuka Street.

-

THOMAS MANSENCAL – The Apparent Simplicity of RGB Rendering

https://thomasmansencal.substack.com/p/the-apparent-simplicity-of-rgb-rendering

The primary goal of physically-based rendering (PBR) is to create a simulation that accurately reproduces the imaging process of electro-magnetic spectrum radiation incident to an observer. This simulation should be indistinguishable from reality for a similar observer.

Because a camera is not sensitive to incident light in the same way as a human observer, the images it captures are transformed to be colorimetric. A project might require infrared imaging simulation, a portion of the electro-magnetic spectrum that is invisible to us. Radically different observers might image the same scene but the act of observing does not change the intrinsic properties of the objects being imaged. Consequently, the physical modelling of the virtual scene should be independent of the observer.

-

colorhunt.co

Color Hunt is a free and open platform for color inspiration with thousands of trendy hand-picked color palettes.

LIGHTING

-

HDRI shooting and editing by Xuan Prada and Greg Zaal

www.xuanprada.com/blog/2014/11/3/hdri-shooting

http://blog.gregzaal.com/2016/03/16/make-your-own-hdri/

http://blog.hdrihaven.com/how-to-create-high-quality-hdri/

Shooting checklist

- Full coverage of the scene (fish-eye shots)

- Backplates for look-development (including ground or floor)

- Macbeth chart for white balance

- Grey ball for lighting calibration

- Chrome ball for lighting orientation

- Basic scene measurements

- Material samples

- Individual HDR artificial lighting sources if required

Methodology

- Plant the tripod where the action happens, stabilise it and level it

- Set manual focus

- Set white balance

- Set ISO

- Set raw+jpg

- Set aperture

- Metering exposure

- Set neutral exposure

- Read histogram and adjust neutral exposure if necessary

- Shoot slate (operator name, location, date, time, project code name, etc)

- Set auto bracketing

- Shoot 5 to 7 exposures with 3 stops difference covering the whole environment

- Place the akromatic kit where the tripod was placed, and take 3 exposures. Keep half of the grey sphere hit by the sun and half in shade.

- Place the Macbeth chart 1m away from tripod on the floor and take 3 exposures

- Take backplates and ground/floor texture references

- Shoot reference materials

- Write down measurements of the scene, especially if you are shooting interiors.

- If shooting artificial lights take HDR samples of each individual lighting source.
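The bracketing step in the checklist above (5 to 7 exposures, 3 stops apart) can be sketched as simple arithmetic. The helper name `bracket_shutter_times` is hypothetical, and the sketch assumes the bracket is made by changing shutter time only, with aperture and ISO held fixed and the bracket centred on the neutral exposure; the checklist itself does not state this.

```python
from typing import List


def bracket_shutter_times(
    neutral_s: float, count: int = 7, step_stops: float = 3.0
) -> List[float]:
    """Return shutter times (seconds) for a bracket centred on the neutral exposure.

    Each stop doubles or halves the exposure time, so a 3-stop step
    multiplies the time by 2 ** 3 = 8 between neighbouring exposures.
    """
    if count < 1 or count % 2 == 0:
        raise ValueError("count must be a positive odd number")
    half = count // 2
    return [neutral_s * 2.0 ** (step_stops * k) for k in range(-half, half + 1)]


if __name__ == "__main__":
    # Example: a neutral exposure of 1/60 s bracketed with 5 exposures.
    for t in bracket_shutter_times(1 / 60, count=5):
        print(f"{t:.6f} s")
```

With 5 exposures at 3 stops apart the bracket spans 12 stops from darkest to brightest; with 7 exposures it spans 18.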

Exposures starting point

- Day light sun visible ISO 100 F22

- Day light sun hidden ISO 100 F16

- Cloudy ISO 320 F16

- Sunrise/Sunset ISO 100 F11

- Interior well lit ISO 320 F16

- Interior ambient bright ISO 320 F10

- Interior bad light ISO 640 F10

- Interior ambient dark ISO 640 F8

- Low light situation ISO 640 F5

NOTE: The goal is to clean the initial individual brackets before or at merging time as much as possible.

This means:

- keeping original shooting metadata

- de-fringing

- removing aberration (through camera lens data or automatically)

- at 32 bit

- in ACEScg (or ACES) wherever possible

https://www.linkedin.com/posts/bellrodrigo_here-are-the-tips-for-using-the-chromatic-activity-7200950595438940160-AGBp

**Tips for Using the Chromatic Ball in VFX Projects**

The chromatic ball is an invaluable tool in VFX work, helping to capture lighting and reflection data crucial for integrating CGI elements seamlessly. Here are some tips to maximize its effectiveness:

1. **Positioning**:

– Place the chromatic ball in the same lighting conditions as the main subject. Ensure it is visible in the camera frame but not obstructing the main action.

– Ideally, place the ball where the CGI elements will be integrated to match the lighting and reflections accurately.

2. **Recording Reference Footage**:

– Capture reference footage of the chromatic ball at the beginning and end of each scene or lighting setup. This ensures you have consistent lighting data for the entire shoot.

3. **Consistent Angles**:

– Use consistent camera angles and heights when recording the chromatic ball. This helps in comparing and matching lighting setups across different shots.

4. **Combine with a Gray Ball**:

– Use a gray ball alongside the chromatic ball. The gray ball provides a neutral reference for exposure and color balance, complementing the chromatic ball’s reflection data.

5. **Marking Positions**:

– Mark the position of the chromatic ball on the set to ensure consistency when shooting multiple takes or different camera angles.

6. **Lighting Analysis**:

– Analyze the chromatic ball footage to understand the light sources, intensity, direction, and color temperature. This information is crucial for creating realistic CGI lighting and shadows.

7. **Reflection Analysis**:

– Use the chromatic ball to capture the environment’s reflections. This helps in accurately reflecting the CGI elements within the same scene, making them blend seamlessly.

8. **Use HDRI**:

– Capture High Dynamic Range Imagery (HDRI) of the chromatic ball. HDRI provides detailed lighting information and can be used to light CGI scenes with greater realism.

9. **Communication with VFX Team**:

– Ensure that the VFX team is aware of the chromatic ball’s data and how it was captured. Clear communication ensures that the data is used effectively in post-production.

10. **Post-Production Adjustments**:

– In post-production, use the chromatic ball data to adjust the CGI elements’ lighting and reflections. This ensures that the final output is visually cohesive and realistic.

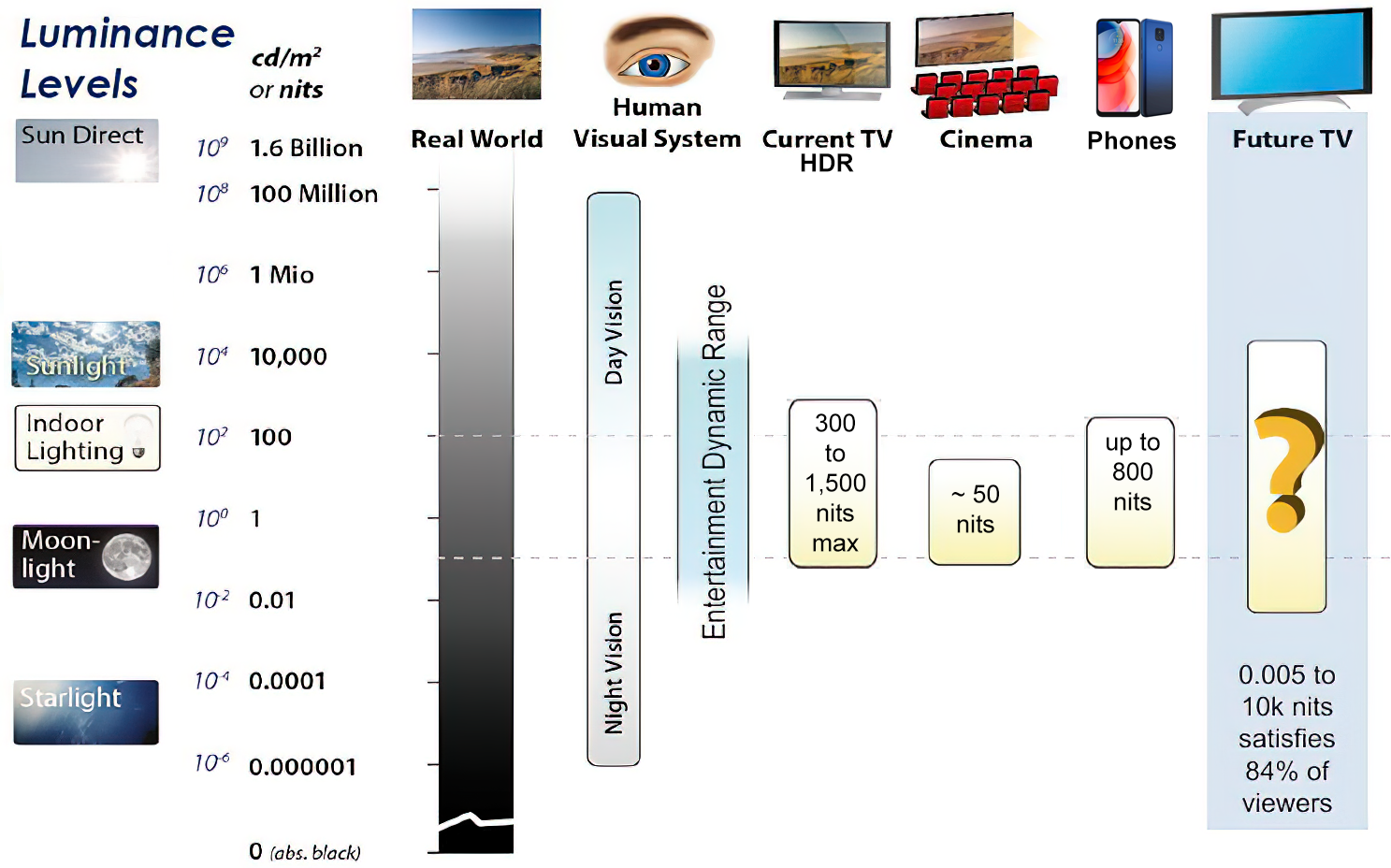

LUX vs LUMEN vs NITS vs CANDELA – What is the difference

More details here: Lumens vs Candelas (candle) vs Lux vs FootCandle vs Watts vs Irradiance vs Illuminance

https://www.inhouseav.com.au/blog/beginners-guide-nits-lumens-brightness/

Candela

Candela (cd) is the SI base unit of luminous intensity: the amount of light a source emits in a single direction, per unit solid angle. Note the detail: it measures the light within a certain beam angle and direction, not the total output of the source.

While the luminance of starlight is around 0.001 cd/m2, that of a sunlit scene is around 100,000 cd/m2, which is a hundred million times higher. The luminance of the sun itself is approximately 1,000,000,000 cd/m2.

NIT

https://en.wikipedia.org/wiki/Candela_per_square_metre

The candela per square metre (symbol: cd/m2) is the unit of luminance in the International System of Units (SI). The unit is based on the candela, the SI unit of luminous intensity, and the square metre, the SI unit of area. The nit (symbol: nt) is a non-SI name also used for this unit (1 nt = 1 cd/m2).[1] The term nit is believed to come from the Latin word nitēre, “to shine”. As a measure of light emitted per unit area, this unit is frequently used to specify the brightness of a display device.

Nit and cd/m2 represent the same thing and can be used interchangeably. One nit is equivalent to one candela per square metre, where one candela is roughly the luminous intensity of a common tallow candle. The nit is not part of the International System of Units (abbreviated SI, from the French Système International).

It’s easiest to think of a TV as emitting light directly, in much the same way as the Sun does. Nits are simply the measurement of the level of light (luminance) in a given area which the emitting source sends to your eyes or a camera sensor.

The Nit can be considered a unit of visible-light intensity which is often used to specify the brightness level of an LCD.

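As a rule of thumb, 1 nit is often quoted as approximately 3.426 lumens. Strictly, the two units measure different things (nits measure luminance per unit area, lumens measure total luminous flux), so the figure only gives a rough comparison. To work out a comparable number of lumens from nits, multiply the number of nits by 3.426. If you know the number of lumens and wish to know the nits, divide the number of lumens by 3.426.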
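The multiply-or-divide rule above is easy to express in code. This sketch simply applies the 3.426 factor quoted in the text; the function names are hypothetical, and the result should be read as a rough comparison only, since luminance and luminous flux are different quantities and a rigorous conversion would also depend on the emitting area.

```python
# Factor quoted in the text above; treated here as a rule of thumb, not a physical constant.
NITS_TO_LUMENS = 3.426


def nits_to_lumens(nits: float) -> float:
    """Comparable lumens for a given number of nits, per the rule of thumb."""
    return nits * NITS_TO_LUMENS


def lumens_to_nits(lumens: float) -> float:
    """Comparable nits for a given number of lumens, per the rule of thumb."""
    return lumens / NITS_TO_LUMENS
```

For example, `nits_to_lumens(100)` applies the factor once, and `lumens_to_nits` inverts it, so a round trip returns the original value up to floating-point error.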

Most consumer desktop LCDs have Nits of 200 to 300, the average TV most likely has an output capability of between 100 and 200 Nits, and an HDR TV ranges from 400 to 1,500 Nits.

Virtual Production sets currently sport around 6000 NIT ceiling and 1000 NIT wall panels.

The ambient brightness of a sunny day with clear blue skies is between 7000-10,000 nits (between 3000-7000 nits for overcast skies and indirect sunlight).

A bright sunny day can have specular highlights that reach over 100,000 nits. Direct sunlight is around 1,600,000,000 nits.

10,000 nits is also the typical brightness of a fluorescent tube – bright, but not painful to look at.

https://www.displaydaily.com/article/display-daily/dolby-vision-vs-hdr10-clarified

Tests showed that a “black level” of 0.005 nits (cd/m²) satisfied the vast majority of viewers. While 0.005 nits is very close to true black, Griffis says Dolby can go down to a black of 0.0001 nits, even though there is no need or ability for displays to get that dark today.

How bright is white? Dolby says the range of 0.005 nits – 10,000 nits satisfied 84% of the viewers in their viewing tests.
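A luminance range like the one above is often easier to reason about in photographic stops, where each stop is a doubling of luminance. The sketch below shows that arithmetic; `dynamic_range_stops` is a hypothetical helper name.

```python
import math


def dynamic_range_stops(min_nits: float, max_nits: float) -> float:
    """Number of stops (doublings) between two luminance levels."""
    if min_nits <= 0 or max_nits < min_nits:
        raise ValueError("expected 0 < min_nits <= max_nits")
    return math.log2(max_nits / min_nits)


if __name__ == "__main__":
    # The 0.005 to 10,000 nit range quoted above.
    print(round(dynamic_range_stops(0.005, 10_000), 2))
```

The 0.005 to 10,000 nit range is a ratio of 2,000,000 to 1, which works out to just under 21 stops.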

The brightest consumer HDR displays today are about 1,500 nits. Professional displays where HDR content is color-graded can achieve up to 4,000 nits peak brightness.

High brightness that would be in danger of damaging the eye would be in the neighborhood of 250,000 nits.

Lumens

Lumen is a measure of how much light is emitted (luminous flux) by an object. It indicates the total potential amount of light from a light source that is visible to the human eye.

Lumen is commonly used in the context of light bulbs or video projectors as a metric for their brightness.

Lumen is used to describe light output, and for video projectors it is commonly quoted as ANSI lumens. Simply put, lumens tell you how bright an LED display is. The higher the lumens, the brighter the display!

Technically speaking, a Lumen is the SI unit of luminous flux, which is equal to the amount of light which is emitted per second in a unit solid angle of one steradian from a uniform source of one-candela intensity radiating in all directions.

LUX

Lux (lx) is the photometric unit of illuminance: the luminous flux falling on a given area (1 lx = 1 lm/m2), which takes into account the sensitivity of the human eye to different wavelengths. It is the measure of light at a specific distance within a specific area at that distance. It is often used to measure the intensity of incident sunlight.
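Two standard photometric relations make the "specific distance, specific area" idea concrete: illuminance is luminous flux divided by the area it falls on, and for a small source it falls off with the square of the distance. The sketch below assumes an idealised point source and a surface facing it directly; the function names are hypothetical.

```python
def lux_from_lumens(lumens: float, area_m2: float) -> float:
    """Illuminance when a given flux is spread evenly over an area (1 lx = 1 lm/m2)."""
    if area_m2 <= 0:
        raise ValueError("area must be positive")
    return lumens / area_m2


def lux_from_candela(candela: float, distance_m: float) -> float:
    """Inverse-square law for a point source, surface perpendicular to the light."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return candela / distance_m ** 2
```

Doubling the distance from the source quarters the illuminance, which is why a lux reading only makes sense together with the distance at which it was taken.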

-

StudioBinder.com – CRI color rendering index

www.studiobinder.com/blog/what-is-color-rendering-index

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun. ”

www.pixelsham.com/2021/04/28/types-of-film-lights-and-their-efficiency


DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.