COMPOSITION

-

Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacy

Read more: Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacynofilmschool.com/types-of-film-lights

“Not every light performs the same way. Lights and lighting are tricky to handle. You have to plan for every circumstance. But the good news is, lighting can be adjusted. Let’s look at different factors that affect lighting in every scene you shoot. ”

Use CRI, Luminous Efficacy and color temperature controls to match your needs.

Color Temperature

Color temperature describes the “color” of white light by a light source radiated by a perfect black body at a given temperature measured in degrees Kelvinhttps://www.pixelsham.com/2019/10/18/color-temperature/

CRI

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun. ”https://www.studiobinder.com/blog/what-is-color-rendering-index/

https://en.wikipedia.org/wiki/Color_rendering_index

Light source CCT (K) CRI Low-pressure sodium (LPS/SOX) 1800 −44 Clear mercury-vapor 6410 17 High-pressure sodium (HPS/SON) 2100 24 Coated mercury-vapor 3600 49 Halophosphate warm-white fluorescent 2940 51 Halophosphate cool-white fluorescent 4230 64 Tri-phosphor warm-white fluorescent 2940 73 Halophosphate cool-daylight fluorescent 6430 76 “White” SON 2700 82 Standard LED Lamp 2700–5000 83 Quartz metal halide 4200 85 Tri-phosphor cool-white fluorescent 4080 89 High-CRI LED lamp (blue LED) 2700–5000 95 Ceramic discharge metal-halide lamp 5400 96 Ultra-high-CRI LED lamp (violet LED) 2700–5000 99 Incandescent/halogen bulb 3200 100 Luminous Efficacy

Luminous efficacy is a measure of how well a light source produces visible light, watts out versus watts in, measured in lumens per watt. In other words it is a measurement that indicates the ability of a light source to emit visible light using a given amount of power. It is a ratio of the visible energy to the power that goes into the bulb.FILM LIGHT TYPES

Consumer light types

Tungsten Lights

Light interiors and match domestic places or office locations. Daylight.Advantages of Tungsten Lights

Almost perfect color rendition

Low cost

Does not use mercury like CFLs (fluorescent) or mercury vapor lights

Better color temperature than standard tungsten

Longer life than a conventional incandescent

Instant on to full brightness, no warm-up time, and it is dimmableDisadvantages of Tungsten Lights

Extremely hot

High power requirement

The lamp is sensitive to oils and cannot be touched

The bulb is capable of blowing and sending hot glass shards outward. A screen or layer of glass on the outside of the lamp can protect users.Hydrargyrum medium-arc iodide lights

HMI’s are used when high output is required. They are also used to recreate sun shining through windows or to fake additional sun while shooting exteriors. HMIs can light huge areas at once.Advantages of HMI lights

High light output

Higher efficiency

High color temperatureDisadvantages of HMI lights:

High cost

High power requirement

Dims only to about 50%

the color temperature increases with dimming

HMI bulbs will explode is dropped and release toxic chemicalsFluorescent

Fluorescent film lighting is achieved by laying multiple tubes next to each other, combining as many as you want for the desired brightness. The good news is you can choose your bulbs to either be warm or cool depending on the scenario you’re shooting. You want to get these bulbs close to the subject because they’re not great at opening up spaces. Fluorescent lighting is used to light interiors and is more compact and cooler than tungsten or HMI lighting.Advantages of Fluorescent lights

High efficiency

Low power requirement

Low cost

Long lamp life

Cool

Capable of soft even lighting over a large area

LightweightDisadvantages of Fluorescent lights

Flicker

High CRI

Domestic tubes have low CRI & poor color rendition.LED

LED’s are more and more common on film sets. You can use batteries to power them. That makes them portable and sleek – no messy cabled needed. You can rig your own panels of LED lights to fit any space necessary as well. LED’s can also power Fresnel style lamp heads such as the Arri L-series.Advantages of LED light

Soft, even lighting

Pure light without UV-artifacts

High efficiency

Low power consumption, can be battery powered

Excellent dimming by means of pulse width modulation control

Long lifespan

Environmentally friendly

Insensitive to shock

No risk of explosionDisadvantages of LED light

High cost.

LED’s are currently still expensive for their total light output

DESIGN

COLOR

-

No one could see the colour blue until modern times

Read more: No one could see the colour blue until modern timeshttps://www.businessinsider.com/what-is-blue-and-how-do-we-see-color-2015-2

The way that humans see the world… until we have a way to describe something, even something so fundamental as a colour, we may not even notice that something it’s there.

Ancient languages didn’t have a word for blue — not Greek, not Chinese, not Japanese, not Hebrew, not Icelandic cultures. And without a word for the colour, there’s evidence that they may not have seen it at all.

https://www.wnycstudios.org/story/211119-colors

Every language first had a word for black and for white, or dark and light. The next word for a colour to come into existence — in every language studied around the world — was red, the colour of blood and wine.

After red, historically, yellow appears, and later, green (though in a couple of languages, yellow and green switch places). The last of these colours to appear in every language is blue.

The only ancient culture to develop a word for blue was the Egyptians — and as it happens, they were also the only culture that had a way to produce a blue dye.

https://mymodernmet.com/shades-of-blue-color-history/

Considered to be the first ever synthetically produced color pigment, Egyptian blue (also known as cuprorivaite) was created around 2,200 B.C. It was made from ground limestone mixed with sand and a copper-containing mineral, such as azurite or malachite, which was then heated between 1470 and 1650°F. The result was an opaque blue glass which then had to be crushed and combined with thickening agents such as egg whites to create a long-lasting paint or glaze.

If you think about it, blue doesn’t appear much in nature — there aren’t animals with blue pigments (except for one butterfly, Obrina Olivewing, all animals generate blue through light scattering), blue eyes are rare (also blue through light scattering), and blue flowers are mostly human creations. There is, of course, the sky, but is that really blue?

So before we had a word for it, did people not naturally see blue? Do you really see something if you don’t have a word for it?

A researcher named Jules Davidoff traveled to Namibia to investigate this, where he conducted an experiment with the Himba tribe, who speak a language that has no word for blue or distinction between blue and green. When shown a circle with 11 green squares and one blue, they couldn’t pick out which one was different from the others.

When looking at a circle of green squares with only one slightly different shade, they could immediately spot the different one. Can you?

Davidoff says that without a word for a colour, without a way of identifying it as different, it’s much harder for us to notice what’s unique about it — even though our eyes are physically seeing the blocks it in the same way.

Further research brought to wider discussions about color perception in humans. Everything that we make is based on the fact that humans are trichromatic. The television only has 3 colors. Our color printers have 3 different colors. But some people, and in specific some women seemed to be more sensible to color differences… mainly because they’re just more aware or – because of the job that they do.

Eventually this brought to the discovery of a small percentage of the population, referred to as tetrachromats, which developed an extra cone sensitivity to yellow, likely due to gene modifications.

The interesting detail about these is that even between tetrachromats, only the ones that had a reason to develop, label and work with extra color sensitivity actually developed the ability to use their native skills.

So before blue became a common concept, maybe humans saw it. But it seems they didn’t know they were seeing it.

If you see something yet can’t see it, does it exist? Did colours come into existence over time? Not technically, but our ability to notice them… may have…

-

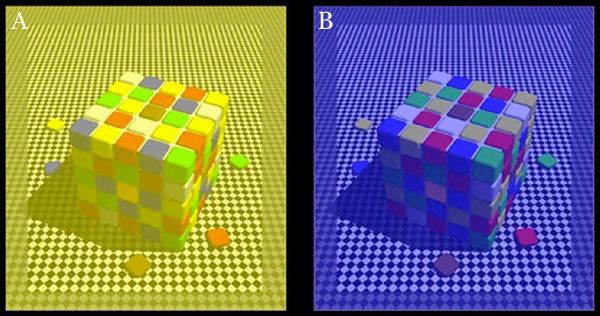

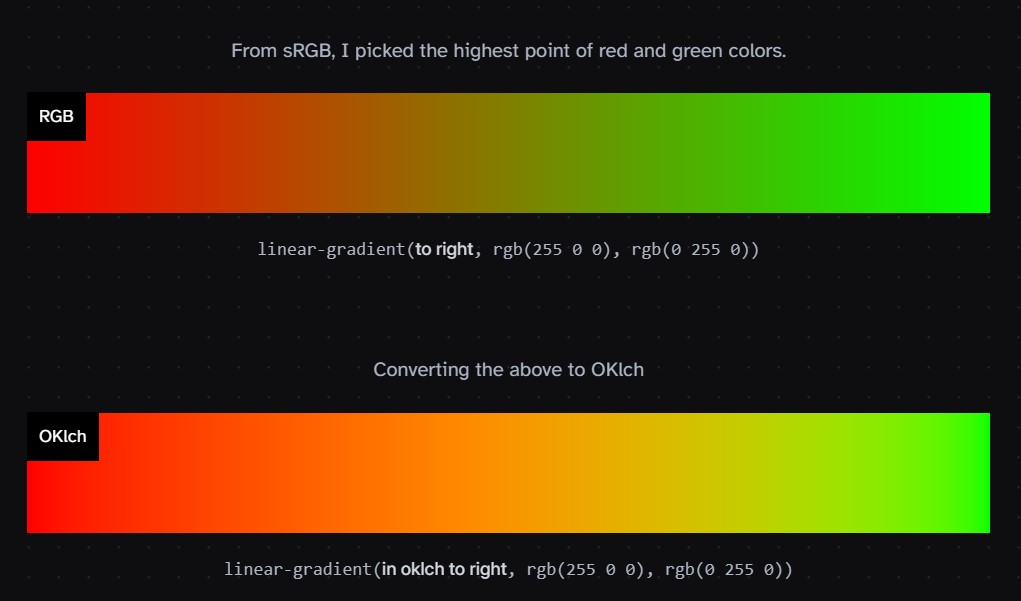

Björn Ottosson – OKlch color space

Read more: Björn Ottosson – OKlch color spaceBjörn Ottosson proposed OKlch in 2020 to create a color space that can closely mimic how color is perceived by the human eye, predicting perceived lightness, chroma, and hue.

The OK in OKLCH stands for Optimal Color.

- L: Lightness (the perceived brightness of the color)

- C: Chroma (the intensity or saturation of the color)

- H: Hue (the actual color, such as red, blue, green, etc.)

Also read:

-

GretagMacbeth Color Checker Numeric Values and Middle Gray

Read more: GretagMacbeth Color Checker Numeric Values and Middle GrayThe human eye perceives half scene brightness not as the linear 50% of the present energy (linear nature values) but as 18% of the overall brightness. We are biased to perceive more information in the dark and contrast areas. A Macbeth chart helps with calibrating back into a photographic capture into this “human perspective” of the world.

https://en.wikipedia.org/wiki/Middle_gray

In photography, painting, and other visual arts, middle gray or middle grey is a tone that is perceptually about halfway between black and white on a lightness scale in photography and printing, it is typically defined as 18% reflectance in visible light

Light meters, cameras, and pictures are often calibrated using an 18% gray card[4][5][6] or a color reference card such as a ColorChecker. On the assumption that 18% is similar to the average reflectance of a scene, a grey card can be used to estimate the required exposure of the film.

https://en.wikipedia.org/wiki/ColorChecker

The exposure meter in the camera does not know whether the subject itself is bright or not. It simply measures the amount of light that comes in, and makes a guess based on that. The camera will aim for 18% gray independently, meaning if you take a photo of an entirely white surface, and an entirely black surface you should get two identical images which both are gray (at least in theory). Thus enters the Macbeth chart.

<!–more–>

Note that Chroma Key Green is reasonably close to an 18% gray reflectance.

http://www.rags-int-inc.com/PhotoTechStuff/MacbethTarget/

https://upload.wikimedia.org/wikipedia/commons/b/b4/CIE1931xy_ColorChecker_SMIL.svg

RGB coordinates of the Macbeth ColorChecker

https://pdfs.semanticscholar.org/0e03/251ad1e6d3c3fb9cb0b1f9754351a959e065.pdf

-

Gamma correction

Read more: Gamma correction

http://www.normankoren.com/makingfineprints1A.html#Gammabox

https://en.wikipedia.org/wiki/Gamma_correction

http://www.photoscientia.co.uk/Gamma.htm

https://www.w3.org/Graphics/Color/sRGB.html

http://www.eizoglobal.com/library/basics/lcd_display_gamma/index.html

https://forum.reallusion.com/PrintTopic308094.aspx

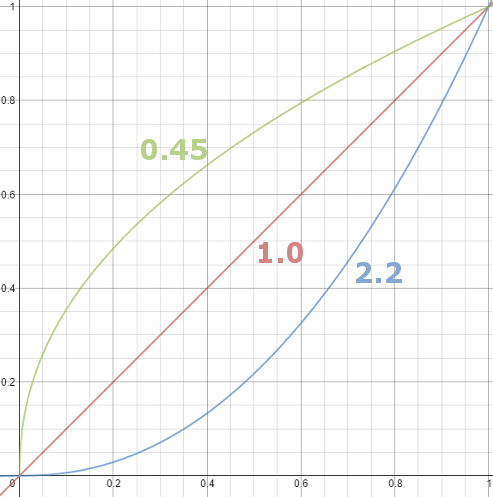

Basically, gamma is the relationship between the brightness of a pixel as it appears on the screen, and the numerical value of that pixel. Generally Gamma is just about defining relationships.

Three main types:

– Image Gamma encoded in images

– Display Gammas encoded in hardware and/or viewing time

– System or Viewing Gamma which is the net effect of all gammas when you look back at a final image. In theory this should flatten back to 1.0 gamma.Our eyes, different camera or video recorder devices do not correctly capture luminance. (they are not linear)

Different display devices (monitor, phone screen, TV) do not display luminance correctly neither. So, one needs to correct them, therefore the gamma correction function.The human perception of brightness, under common illumination conditions (not pitch black nor blindingly bright), follows an approximate power function (note: no relation to the gamma function), with greater sensitivity to relative differences between darker tones than between lighter ones, consistent with the Stevens’ power law for brightness perception. If images are not gamma-encoded, they allocate too many bits or too much bandwidth to highlights that humans cannot differentiate, and too few bits or too little bandwidth to shadow values that humans are sensitive to and would require more bits/bandwidth to maintain the same visual quality.

https://blog.amerlux.com/4-things-architects-should-know-about-lumens-vs-perceived-brightness/

cones manage color receptivity, rods determine how large our pupils should be. The larger (more dilated) our pupils are, the more light enters our eyes. In dark situations, our rods dilate our pupils so we can see better. This impacts how we perceive brightness.

https://www.cambridgeincolour.com/tutorials/gamma-correction.htm

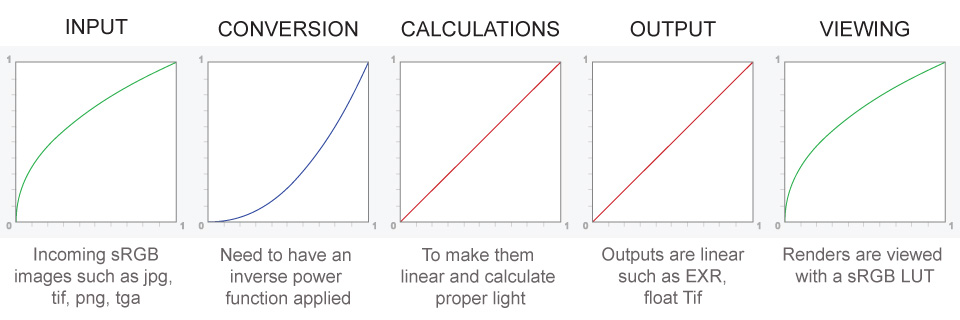

A gamma encoded image has to have “gamma correction” applied when it is viewed — which effectively converts it back into light from the original scene. In other words, the purpose of gamma encoding is for recording the image — not for displaying the image. Fortunately this second step (the “display gamma”) is automatically performed by your monitor and video card. The following diagram illustrates how all of this fits together:

Display gamma

The display gamma can be a little confusing because this term is often used interchangeably with gamma correction, since it corrects for the file gamma. This is the gamma that you are controlling when you perform monitor calibration and adjust your contrast setting. Fortunately, the industry has converged on a standard display gamma of 2.2, so one doesn’t need to worry about the pros/cons of different values.Gamma encoding of images is used to optimize the usage of bits when encoding an image, or bandwidth used to transport an image, by taking advantage of the non-linear manner in which humans perceive light and color. Human response to luminance is also biased. Especially sensible to dark areas.

Thus, the human visual system has a non-linear response to the power of the incoming light, so a fixed increase in power will not have a fixed increase in perceived brightness.

We perceive a value as half bright when it is actually 18% of the original intensity not 50%. As such, our perception is not linear.You probably already know that a pixel can have any ‘value’ of Red, Green, and Blue between 0 and 255, and you would therefore think that a pixel value of 127 would appear as half of the maximum possible brightness, and that a value of 64 would represent one-quarter brightness, and so on. Well, that’s just not the case.

Pixar Color Management

https://renderman.pixar.com/color-management

– Why do we need linear gamma?

Because light works linearly and therefore only works properly when it lights linear values.– Why do we need to view in sRGB?

Because the resulting linear image in not suitable for viewing, but contains all the proper data. Pixar’s IT viewer can compensate by showing the rendered image through a sRGB look up table (LUT), which is identical to what will be the final image after the sRGB gamma curve is applied in post.This would be simple enough if every software would play by the same rules, but they don’t. In fact, the default gamma workflow for many 3D software is incorrect. This is where the knowledge of a proper imaging workflow comes in to save the day.

Cathode-ray tubes have a peculiar relationship between the voltage applied to them, and the amount of light emitted. It isn’t linear, and in fact it follows what’s called by mathematicians and other geeks, a ‘power law’ (a number raised to a power). The numerical value of that power is what we call the gamma of the monitor or system.

Thus. Gamma describes the nonlinear relationship between the pixel levels in your computer and the luminance of your monitor (the light energy it emits) or the reflectance of your prints. The equation is,

Luminance = C * value^gamma + black level

– C is set by the monitor Contrast control.

– Value is the pixel level normalized to a maximum of 1. For an 8 bit monitor with pixel levels 0 – 255, value = (pixel level)/255.

– Black level is set by the (misnamed) monitor Brightness control. The relationship is linear if gamma = 1. The chart illustrates the relationship for gamma = 1, 1.5, 1.8 and 2.2 with C = 1 and black level = 0.

Gamma affects middle tones; it has no effect on black or white. If gamma is set too high, middle tones appear too dark. Conversely, if it’s set too low, middle tones appear too light.

The native gamma of monitors – the relationship between grid voltage and luminance – is typically around 2.5, though it can vary considerably. This is well above any of the display standards, so you must be aware of gamma and correct it.

A display gamma of 2.2 is the de facto standard for the Windows operating system and the Internet-standard sRGB color space.

The old standard for Mcintosh and prepress file interchange is 1.8. It is now 2.2 as well.

Video cameras have gammas of approximately 0.45 – the inverse of 2.2. The viewing or system gamma is the product of the gammas of all the devices in the system – the image acquisition device (film+scanner or digital camera), color lookup table (LUT), and monitor. System gamma is typically between 1.1 and 1.5. Viewing flare and other factor make images look flat at system gamma = 1.0.

Most laptop LCD screens are poorly suited for critical image editing because gamma is extremely sensitive to viewing angle.

More about screens

https://www.cambridgeincolour.com/tutorials/gamma-correction.htm

CRT Monitors. Due to an odd bit of engineering luck, the native gamma of a CRT is 2.5 — almost the inverse of our eyes. Values from a gamma-encoded file could therefore be sent straight to the screen and they would automatically be corrected and appear nearly OK. However, a small gamma correction of ~1/1.1 needs to be applied to achieve an overall display gamma of 2.2. This is usually already set by the manufacturer’s default settings, but can also be set during monitor calibration.

LCD Monitors. LCD monitors weren’t so fortunate; ensuring an overall display gamma of 2.2 often requires substantial corrections, and they are also much less consistent than CRT’s. LCDs therefore require something called a look-up table (LUT) in order to ensure that input values are depicted using the intended display gamma (amongst other things). See the tutorial on monitor calibration: look-up tables for more on this topic.

About black level (brightness). Your monitor’s brightness control (which should actually be called black level) can be adjusted using the mostly black pattern on the right side of the chart. This pattern contains two dark gray vertical bars, A and B, which increase in luminance with increasing gamma. (If you can’t see them, your black level is way low.) The left bar (A) should be just above the threshold of visibility opposite your chosen gamma (2.2 or 1.8) – it should be invisible where gamma is lower by about 0.3. The right bar (B) should be distinctly visible: brighter than (A), but still very dark. This chart is only for monitors; it doesn’t work on printed media.

The 1.8 and 2.2 gray patterns at the bottom of the image represent a test of monitor quality and calibration. If your monitor is functioning properly and calibrated to gamma = 2.2 or 1.8, the corresponding pattern will appear smooth neutral gray when viewed from a distance. Any waviness, irregularity, or color banding indicates incorrect monitor calibration or poor performance.

Another test to see whether one’s computer monitor is properly hardware adjusted and can display shadow detail in sRGB images properly, they should see the left half of the circle in the large black square very faintly but the right half should be clearly visible. If not, one can adjust their monitor’s contrast and/or brightness setting. This alters the monitor’s perceived gamma. The image is best viewed against a black background.

This procedure is not suitable for calibrating or print-proofing a monitor. It can be useful for making a monitor display sRGB images approximately correctly, on systems in which profiles are not used (for example, the Firefox browser prior to version 3.0 and many others) or in systems that assume untagged source images are in the sRGB colorspace.

On some operating systems running the X Window System, one can set the gamma correction factor (applied to the existing gamma value) by issuing the command xgamma -gamma 0.9 for setting gamma correction factor to 0.9, and xgamma for querying current value of that factor (the default is 1.0). In OS X systems, the gamma and other related screen calibrations are made through the System Preference

https://www.kinematicsoup.com/news/2016/6/15/gamma-and-linear-space-what-they-are-how-they-differ

Linear color space means that numerical intensity values correspond proportionally to their perceived intensity. This means that the colors can be added and multiplied correctly. A color space without that property is called ”non-linear”. Below is an example where an intensity value is doubled in a linear and a non-linear color space. While the corresponding numerical values in linear space are correct, in the non-linear space (gamma = 0.45, more on this later) we can’t simply double the value to get the correct intensity.

The need for gamma arises for two main reasons: The first is that screens have been built with a non-linear response to intensity. The other is that the human eye can tell the difference between darker shades better than lighter shades. This means that when images are compressed to save space, we want to have greater accuracy for dark intensities at the expense of lighter intensities. Both of these problems are resolved using gamma correction, which is to say the intensity of every pixel in an image is put through a power function. Specifically, gamma is the name given to the power applied to the image.

CRT screens, simply by how they work, apply a gamma of around 2.2, and modern LCD screens are designed to mimic that behavior. A gamma of 2.2, the reciprocal of 0.45, when applied to the brightened images will darken them, leaving the original image.

-

Photography basics: Why Use a (MacBeth) Color Chart?

Read more: Photography basics: Why Use a (MacBeth) Color Chart?Start here: https://www.pixelsham.com/2013/05/09/gretagmacbeth-color-checker-numeric-values/

https://www.studiobinder.com/blog/what-is-a-color-checker-tool/

In LightRoom

in Final Cut

in Nuke

Note: In Foundry’s Nuke, the software will map 18% gray to whatever your center f/stop is set to in the viewer settings (f/8 by default… change that to EV by following the instructions below).

You can experiment with this by attaching an Exposure node to a Constant set to 0.18, setting your viewer read-out to Spotmeter, and adjusting the stops in the node up and down. You will see that a full stop up or down will give you the respective next value on the aperture scale (f8, f11, f16 etc.).One stop doubles or halves the amount or light that hits the filmback/ccd, so everything works in powers of 2.

So starting with 0.18 in your constant, you will see that raising it by a stop will give you .36 as a floating point number (in linear space), while your f/stop will be f/11 and so on.If you set your center stop to 0 (see below) you will get a relative readout in EVs, where EV 0 again equals 18% constant gray.

In other words. Setting the center f-stop to 0 means that in a neutral plate, the middle gray in the macbeth chart will equal to exposure value 0. EV 0 corresponds to an exposure time of 1 sec and an aperture of f/1.0.

This will set the sun usually around EV12-17 and the sky EV1-4 , depending on cloud coverage.

To switch Foundry’s Nuke’s SpotMeter to return the EV of an image, click on the main viewport, and then press s, this opens the viewer’s properties. Now set the center f-stop to 0 in there. And the SpotMeter in the viewport will change from aperture and fstops to EV.

LIGHTING

-

Bella – Fast Spectral Rendering

Read more: Bella – Fast Spectral RenderingBella works in spectral space, allowing effects such as BSDF wavelength dependency, diffraction, or atmosphere to be modeled far more accurately than in color space.

https://superrendersfarm.com/blog/uncategorized/bella-a-new-spectral-physically-based-renderer/

Collections

| Explore posts

| Design And Composition

| Featured AI

Popular Searches

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.