COMPOSITION

-

Cinematographers Blueprint 300dpi poster

Read more: Cinematographers Blueprint 300dpi posterThe 300dpi digital poster is now available to all PixelSham.com subscribers.

If you have already subscribed and wish a copy, please send me a note through the contact page.

DESIGN

-

The 7 key elements of brand identity design + 10 corporate identity examples

Read more: The 7 key elements of brand identity design + 10 corporate identity exampleswww.lucidpress.com/blog/the-7-key-elements-of-brand-identity-design

1. Clear brand purpose and positioning

2. Thorough market research

3. Likable brand personality

4. Memorable logo

5. Attractive color palette

6. Professional typography

7. On-brand supporting graphics

-

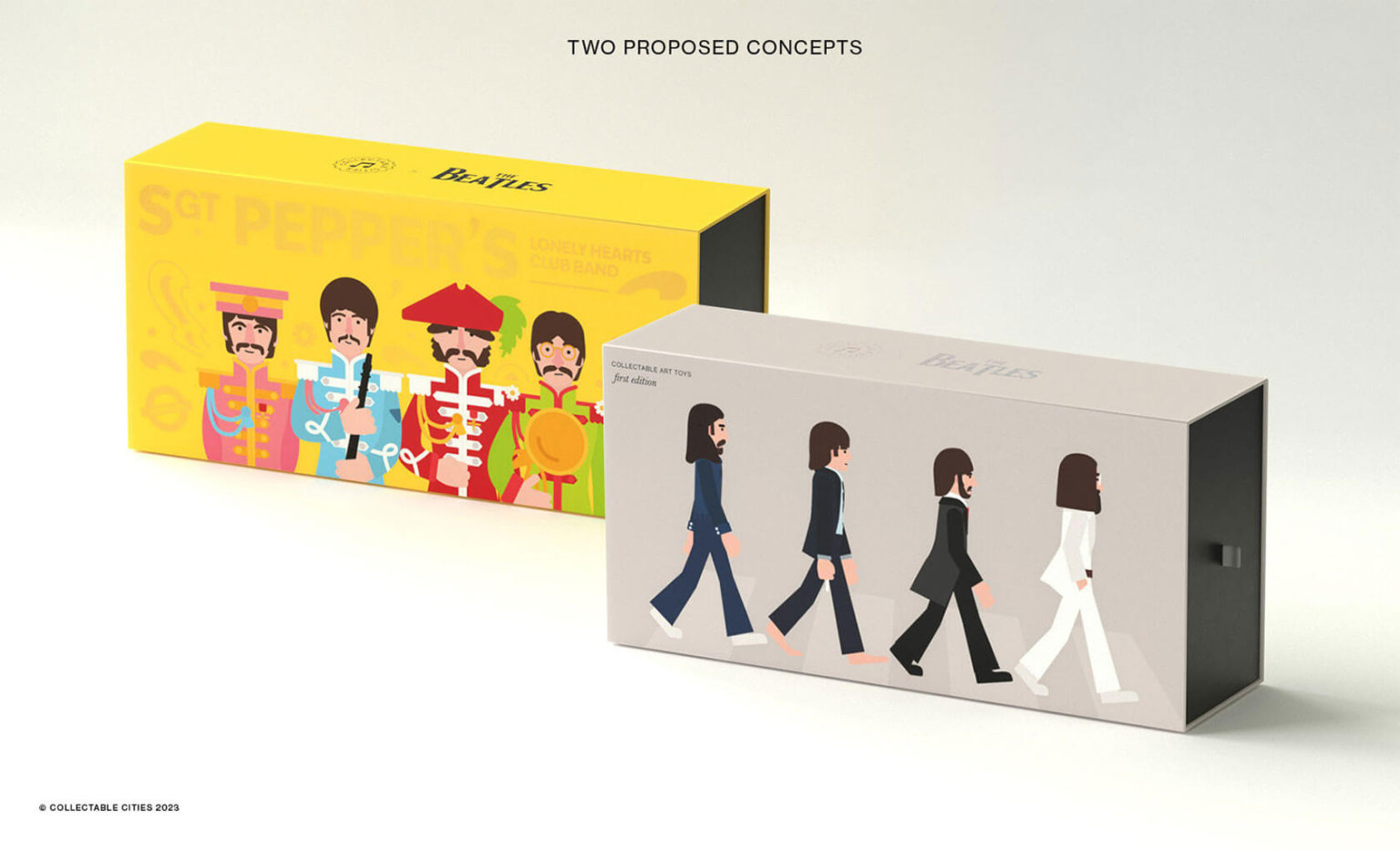

Creative duo Joseph Lattimer and Caitlin Derer Creates Absolutely Amazing The Beatles Collectable Toys

Read more: Creative duo Joseph Lattimer and Caitlin Derer Creates Absolutely Amazing The Beatles Collectable Toyshttps://designyoutrust.com/2024/11/artist-duo-creates-absolutely-amazing-the-beatles-collectable-toys

COLOR

-

Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacy

Read more: Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacynofilmschool.com/types-of-film-lights

“Not every light performs the same way. Lights and lighting are tricky to handle. You have to plan for every circumstance. But the good news is, lighting can be adjusted. Let’s look at different factors that affect lighting in every scene you shoot. ”

Use CRI, Luminous Efficacy and color temperature controls to match your needs.

Color Temperature

Color temperature describes the “color” of white light by a light source radiated by a perfect black body at a given temperature measured in degrees Kelvinhttps://www.pixelsham.com/2019/10/18/color-temperature/

CRI

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun. ”https://www.studiobinder.com/blog/what-is-color-rendering-index/

https://en.wikipedia.org/wiki/Color_rendering_index

Light source CCT (K) CRI Low-pressure sodium (LPS/SOX) 1800 −44 Clear mercury-vapor 6410 17 High-pressure sodium (HPS/SON) 2100 24 Coated mercury-vapor 3600 49 Halophosphate warm-white fluorescent 2940 51 Halophosphate cool-white fluorescent 4230 64 Tri-phosphor warm-white fluorescent 2940 73 Halophosphate cool-daylight fluorescent 6430 76 “White” SON 2700 82 Standard LED Lamp 2700–5000 83 Quartz metal halide 4200 85 Tri-phosphor cool-white fluorescent 4080 89 High-CRI LED lamp (blue LED) 2700–5000 95 Ceramic discharge metal-halide lamp 5400 96 Ultra-high-CRI LED lamp (violet LED) 2700–5000 99 Incandescent/halogen bulb 3200 100 Luminous Efficacy

Luminous efficacy is a measure of how well a light source produces visible light, watts out versus watts in, measured in lumens per watt. In other words it is a measurement that indicates the ability of a light source to emit visible light using a given amount of power. It is a ratio of the visible energy to the power that goes into the bulb.FILM LIGHT TYPES

Consumer light types

Tungsten Lights

Light interiors and match domestic places or office locations. Daylight.Advantages of Tungsten Lights

Almost perfect color rendition

Low cost

Does not use mercury like CFLs (fluorescent) or mercury vapor lights

Better color temperature than standard tungsten

Longer life than a conventional incandescent

Instant on to full brightness, no warm-up time, and it is dimmableDisadvantages of Tungsten Lights

Extremely hot

High power requirement

The lamp is sensitive to oils and cannot be touched

The bulb is capable of blowing and sending hot glass shards outward. A screen or layer of glass on the outside of the lamp can protect users.Hydrargyrum medium-arc iodide lights

HMI’s are used when high output is required. They are also used to recreate sun shining through windows or to fake additional sun while shooting exteriors. HMIs can light huge areas at once.Advantages of HMI lights

High light output

Higher efficiency

High color temperatureDisadvantages of HMI lights:

High cost

High power requirement

Dims only to about 50%

the color temperature increases with dimming

HMI bulbs will explode is dropped and release toxic chemicalsFluorescent

Fluorescent film lighting is achieved by laying multiple tubes next to each other, combining as many as you want for the desired brightness. The good news is you can choose your bulbs to either be warm or cool depending on the scenario you’re shooting. You want to get these bulbs close to the subject because they’re not great at opening up spaces. Fluorescent lighting is used to light interiors and is more compact and cooler than tungsten or HMI lighting.Advantages of Fluorescent lights

High efficiency

Low power requirement

Low cost

Long lamp life

Cool

Capable of soft even lighting over a large area

LightweightDisadvantages of Fluorescent lights

Flicker

High CRI

Domestic tubes have low CRI & poor color rendition.LED

LED’s are more and more common on film sets. You can use batteries to power them. That makes them portable and sleek – no messy cabled needed. You can rig your own panels of LED lights to fit any space necessary as well. LED’s can also power Fresnel style lamp heads such as the Arri L-series.Advantages of LED light

Soft, even lighting

Pure light without UV-artifacts

High efficiency

Low power consumption, can be battery powered

Excellent dimming by means of pulse width modulation control

Long lifespan

Environmentally friendly

Insensitive to shock

No risk of explosionDisadvantages of LED light

High cost.

LED’s are currently still expensive for their total light output -

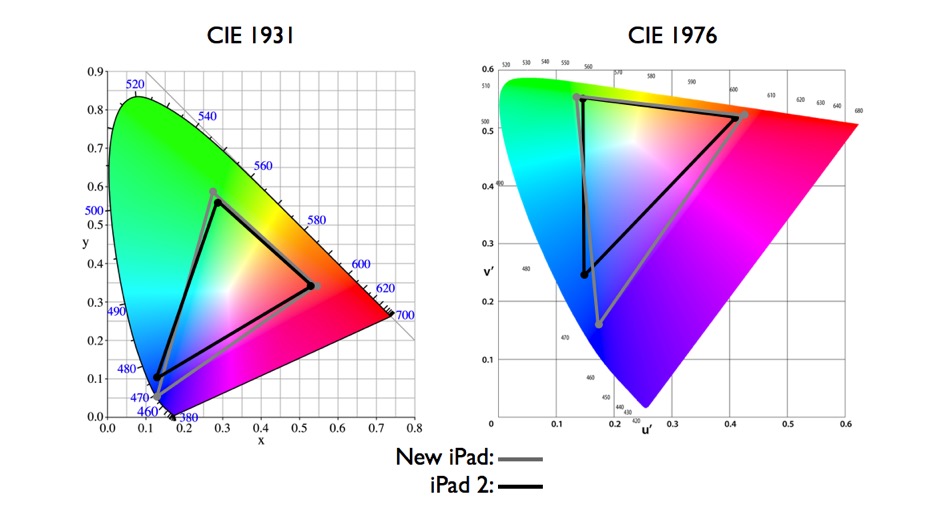

What is a Gamut or Color Space and why do I need to know about CIE

Read more: What is a Gamut or Color Space and why do I need to know about CIEhttp://www.xdcam-user.com/2014/05/what-is-a-gamut-or-color-space-and-why-do-i-need-to-know-about-it/

In video terms gamut is normally related to as the full range of colours and brightness that can be either captured or displayed.

Generally speaking all color gamuts recommendations are trying to define a reasonable level of color representation based on available technology and hardware. REC-601 represents the old TVs. REC-709 is currently the most distributed solution. P3 is mainly available in movie theaters and is now being adopted in some of the best new 4K HDR TVs. Rec2020 (a wider space than P3 that improves on visibke color representation) and ACES (the full coverage of visible color) are other common standards which see major hardware development these days.

To compare and visualize different solution (across video and printing solutions), most developers use the CIE color model chart as a reference.

The CIE color model is a color space model created by the International Commission on Illumination known as the Commission Internationale de l’Elcairage (CIE) in 1931. It is also known as the CIE XYZ color space or the CIE 1931 XYZ color space.

This chart represents the first defined quantitative link between distributions of wavelengths in the electromagnetic visible spectrum, and physiologically perceived colors in human color vision. Or basically, the range of color a typical human eye can perceive through visible light.Note that while the human perception is quite wide, and generally speaking biased towards greens (we are apes after all), the amount of colors available through nature, generated through light reflection, tend to be a much smaller section. This is defined by the Pointer’s Chart.

In short. Color gamut is a representation of color coverage, used to describe data stored in images against available hardware and viewer technologies.

Camera color encoding from

https://www.slideshare.net/hpduiker/acescg-a-common-color-encoding-for-visual-effects-applicationsCIE 1976

http://bernardsmith.eu/computatrum/scan_and_restore_archive_and_print/scanning/

https://store.yujiintl.com/blogs/high-cri-led/understanding-cie1931-and-cie-1976

The CIE 1931 standard has been replaced by a CIE 1976 standard. Below we can see the significance of this.

People have observed that the biggest issue with CIE 1931 is the lack of uniformity with chromaticity, the three dimension color space in rectangular coordinates is not visually uniformed.

The CIE 1976 (also called CIELUV) was created by the CIE in 1976. It was put forward in an attempt to provide a more uniform color spacing than CIE 1931 for colors at approximately the same luminance

The CIE 1976 standard colour space is more linear and variations in perceived colour between different people has also been reduced. The disproportionately large green-turquoise area in CIE 1931, which cannot be generated with existing computer screens, has been reduced.

If we move from CIE 1931 to the CIE 1976 standard colour space we can see that the improvements made in the gamut for the “new” iPad screen (as compared to the “old” iPad 2) are more evident in the CIE 1976 colour space than in the CIE 1931 colour space, particularly in the blues from aqua to deep blue.

https://dot-color.com/2012/08/14/color-space-confusion/

Despite its age, CIE 1931, named for the year of its adoption, remains a well-worn and familiar shorthand throughout the display industry. CIE 1931 is the primary language of customers. When a customer says that their current display “can do 72% of NTSC,” they implicitly mean 72% of NTSC 1953 color gamut as mapped against CIE 1931.

-

Scene Referred vs Display Referred color workflows

Read more: Scene Referred vs Display Referred color workflowsDisplay Referred it is tied to the target hardware, as such it bakes color requirements into every type of media output request.

Scene Referred uses a common unified wide gamut and targeting audience through CDL and DI libraries instead.

So that color information stays untouched and only “transformed” as/when needed.Sources:

– Victor Perez – Color Management Fundamentals & ACES Workflows in Nuke

– https://z-fx.nl/ColorspACES.pdf

– Wicus

-

3D Lighting Tutorial by Amaan Kram

Read more: 3D Lighting Tutorial by Amaan Kramhttp://www.amaanakram.com/lightingT/part1.htm

The goals of lighting in 3D computer graphics are more or less the same as those of real world lighting.

Lighting serves a basic function of bringing out, or pushing back the shapes of objects visible from the camera’s view.

It gives a two-dimensional image on the monitor an illusion of the third dimension-depth.But it does not just stop there. It gives an image its personality, its character. A scene lit in different ways can give a feeling of happiness, of sorrow, of fear etc., and it can do so in dramatic or subtle ways. Along with personality and character, lighting fills a scene with emotion that is directly transmitted to the viewer.

Trying to simulate a real environment in an artificial one can be a daunting task. But even if you make your 3D rendering look absolutely photo-realistic, it doesn’t guarantee that the image carries enough emotion to elicit a “wow” from the people viewing it.

Making 3D renderings photo-realistic can be hard. Putting deep emotions in them can be even harder. However, if you plan out your lighting strategy for the mood and emotion that you want your rendering to express, you make the process easier for yourself.

Each light source can be broken down in to 4 distinct components and analyzed accordingly.

· Intensity

· Direction

· Color

· SizeThe overall thrust of this writing is to produce photo-realistic images by applying good lighting techniques.

LIGHTING

-

StudioBinder.com – Photography basics: What is Dynamic Range in Photography

Read more: StudioBinder.com – Photography basics: What is Dynamic Range in Photographyhttps://www.studiobinder.com/blog/what-is-dynamic-range-photography/

https://www.hdrsoft.com/resources/dri.html#bit-depth

The dynamic range is a ratio between the maximum and minimum values of a physical measurement. Its definition depends on what the dynamic range refers to.

For a scene: Dynamic range is the ratio between the brightest and darkest parts of the scene.

For a camera: Dynamic range is the ratio of saturation to noise. More specifically, the ratio of the intensity that just saturates the camera to the intensity that just lifts the camera response one standard deviation above camera noise.

For a display: Dynamic range is the ratio between the maximum and minimum intensities emitted from the screen.

-

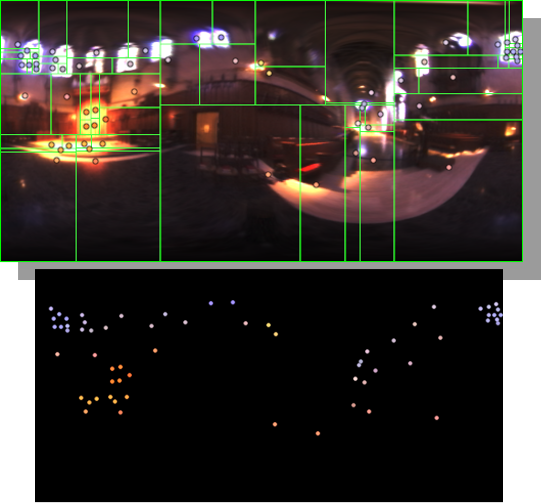

HDRI Median Cut plugin

Read more: HDRI Median Cut pluginwww.hdrlabs.com/picturenaut/plugins.html

Note. The Median Cut algorithm is typically used for color quantization, which involves reducing the number of colors in an image while preserving its visual quality. It doesn’t directly provide a way to identify the brightest areas in an image. However, if you’re interested in identifying the brightest areas, you might want to look into other methods like thresholding, histogram analysis, or edge detection, through openCV for example.

Here is an openCV example:

# bottom left coordinates = 0,0 import numpy as np import cv2 # Load the HDR or EXR image image = cv2.imread('your_image_path.exr', cv2.IMREAD_UNCHANGED) # Load as-is without modification # Calculate the luminance from the HDR channels (assuming RGB format) luminance = np.dot(image[..., :3], [0.299, 0.587, 0.114]) # Set a threshold value based on estimated EV threshold_value = 2.4 # Estimated threshold value based on 4.8 EV # Apply the threshold to identify bright areas # Theluminancearray contains the calculated luminance values for each pixel in the image. # Thethreshold_valueis a user-defined value that represents a cutoff point, separating "bright" and "dark" areas in terms of perceived luminance.thresholded = (luminance > threshold_value) * 255 # Convert the thresholded image to uint8 for contour detection thresholded = thresholded.astype(np.uint8) # Find contours of the bright areas contours, _ = cv2.findContours(thresholded, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE) # Create a list to store the bounding boxes of bright areas bright_areas = [] # Iterate through contours and extract bounding boxes for contour in contours: x, y, w, h = cv2.boundingRect(contour) # Adjust y-coordinate based on bottom-left origin y_bottom_left_origin = image.shape[0] - (y + h) bright_areas.append((x, y_bottom_left_origin, x + w, y_bottom_left_origin + h)) # Store as (x1, y1, x2, y2) # Print the identified bright areas print("Bright Areas (x1, y1, x2, y2):") for area in bright_areas: print(area)More details

Luminance and Exposure in an EXR Image:

- An EXR (Extended Dynamic Range) image format is often used to store high dynamic range (HDR) images that contain a wide range of luminance values, capturing both dark and bright areas.

- Luminance refers to the perceived brightness of a pixel in an image. In an RGB image, luminance is often calculated using a weighted sum of the red, green, and blue channels, where different weights are assigned to each channel to account for human perception.

- In an EXR image, the pixel values can represent radiometrically accurate scene values, including actual radiance or irradiance levels. These values are directly related to the amount of light emitted or reflected by objects in the scene.

The luminance line is calculating the luminance of each pixel in the image using a weighted sum of the red, green, and blue channels. The three float values [0.299, 0.587, 0.114] are the weights used to perform this calculation.

These weights are based on the concept of luminosity, which aims to approximate the perceived brightness of a color by taking into account the human eye’s sensitivity to different colors. The values are often derived from the NTSC (National Television System Committee) standard, which is used in various color image processing operations.

Here’s the breakdown of the float values:

- 0.299: Weight for the red channel.

- 0.587: Weight for the green channel.

- 0.114: Weight for the blue channel.

The weighted sum of these channels helps create a grayscale image where the pixel values represent the perceived brightness. This technique is often used when converting a color image to grayscale or when calculating luminance for certain operations, as it takes into account the human eye’s sensitivity to different colors.

For the threshold, remember that the exact relationship between EV values and pixel values can depend on the tone-mapping or normalization applied to the HDR image, as well as the dynamic range of the image itself.

To establish a relationship between exposure and the threshold value, you can consider the relationship between linear and logarithmic scales:

- Linear and Logarithmic Scales:

- Exposure values in an EXR image are often represented in logarithmic scales, such as EV (exposure value). Each increment in EV represents a doubling or halving of the amount of light captured.

- Threshold values for luminance thresholding are usually linear, representing an actual luminance level.

- Conversion Between Scales:

- To establish a mathematical relationship, you need to convert between the logarithmic exposure scale and the linear threshold scale.

- One common method is to use a power function. For instance, you can use a power function to convert EV to a linear intensity value.

threshold_value = base_value * (2 ** EV)Here,

EVis the exposure value,base_valueis a scaling factor that determines the relationship between EV and threshold_value, and2 ** EVis used to convert the logarithmic EV to a linear intensity value. - Choosing the Base Value:

- The

base_valuefactor should be determined based on the dynamic range of your EXR image and the specific luminance values you are dealing with. - You may need to experiment with different values of

base_valueto achieve the desired separation of bright areas from the rest of the image.

- The

Let’s say you have an EXR image with a dynamic range of 12 EV, which is a common range for many high dynamic range images. In this case, you want to set a threshold value that corresponds to a certain number of EV above the middle gray level (which is often considered to be around 0.18).

Here’s an example of how you might determine a

base_valueto achieve this:# Define the dynamic range of the image in EV dynamic_range = 12 # Choose the desired number of EV above middle gray for thresholding desired_ev_above_middle_gray = 2 # Calculate the threshold value based on the desired EV above middle gray threshold_value = 0.18 * (2 ** (desired_ev_above_middle_gray / dynamic_range)) print("Threshold Value:", threshold_value)

Collections

| Explore posts

| Design And Composition

| Featured AI

Popular Searches

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.