COMPOSITION

DESIGN

COLOR

-

Image rendering bit depth

Read more: Image rendering bit depthThe terms 16-bit, 16-bit float, and 32-bit refer to different data formats used to store and represent image information, as bits per pixel.

https://en.wikipedia.org/wiki/Color_depth

In color technology, color depth also known as bit depth, is either the number of bits used to indicate the color of a single pixel, OR the number of bits used for each color component of a single pixel.

When referring to a pixel, the concept can be defined as bits per pixel (bpp).

When referring to a color component, the concept can be defined as bits per component, bits per channel, bits per color (all three abbreviated bpc), and also bits per pixel component, bits per color channel or bits per sample (bps). Modern standards tend to use bits per component, but historical lower-depth systems used bits per pixel more often.

Color depth is only one aspect of color representation, expressing the precision with which the amount of each primary can be expressed; the other aspect is how broad a range of colors can be expressed (the gamut). The definition of both color precision and gamut is accomplished with a color encoding specification which assigns a digital code value to a location in a color space.

-

mmColorTarget – Nuke Gizmo for color matching a MacBeth chart

Read more: mmColorTarget – Nuke Gizmo for color matching a MacBeth charthttps://www.marcomeyer-vfx.de/posts/2014-04-11-mmcolortarget-nuke-gizmo/

https://www.marcomeyer-vfx.de/posts/mmcolortarget-nuke-gizmo/

https://vimeo.com/9.1652466e+07

https://www.nukepedia.com/gizmos/colour/mmcolortarget

-

What is a Gamut or Color Space and why do I need to know about CIE

Read more: What is a Gamut or Color Space and why do I need to know about CIEhttp://www.xdcam-user.com/2014/05/what-is-a-gamut-or-color-space-and-why-do-i-need-to-know-about-it/

In video terms gamut is normally related to as the full range of colours and brightness that can be either captured or displayed.

Generally speaking all color gamuts recommendations are trying to define a reasonable level of color representation based on available technology and hardware. REC-601 represents the old TVs. REC-709 is currently the most distributed solution. P3 is mainly available in movie theaters and is now being adopted in some of the best new 4K HDR TVs. Rec2020 (a wider space than P3 that improves on visibke color representation) and ACES (the full coverage of visible color) are other common standards which see major hardware development these days.

To compare and visualize different solution (across video and printing solutions), most developers use the CIE color model chart as a reference.

The CIE color model is a color space model created by the International Commission on Illumination known as the Commission Internationale de l’Elcairage (CIE) in 1931. It is also known as the CIE XYZ color space or the CIE 1931 XYZ color space.

This chart represents the first defined quantitative link between distributions of wavelengths in the electromagnetic visible spectrum, and physiologically perceived colors in human color vision. Or basically, the range of color a typical human eye can perceive through visible light.Note that while the human perception is quite wide, and generally speaking biased towards greens (we are apes after all), the amount of colors available through nature, generated through light reflection, tend to be a much smaller section. This is defined by the Pointer’s Chart.

In short. Color gamut is a representation of color coverage, used to describe data stored in images against available hardware and viewer technologies.

Camera color encoding from

https://www.slideshare.net/hpduiker/acescg-a-common-color-encoding-for-visual-effects-applicationsCIE 1976

http://bernardsmith.eu/computatrum/scan_and_restore_archive_and_print/scanning/

https://store.yujiintl.com/blogs/high-cri-led/understanding-cie1931-and-cie-1976

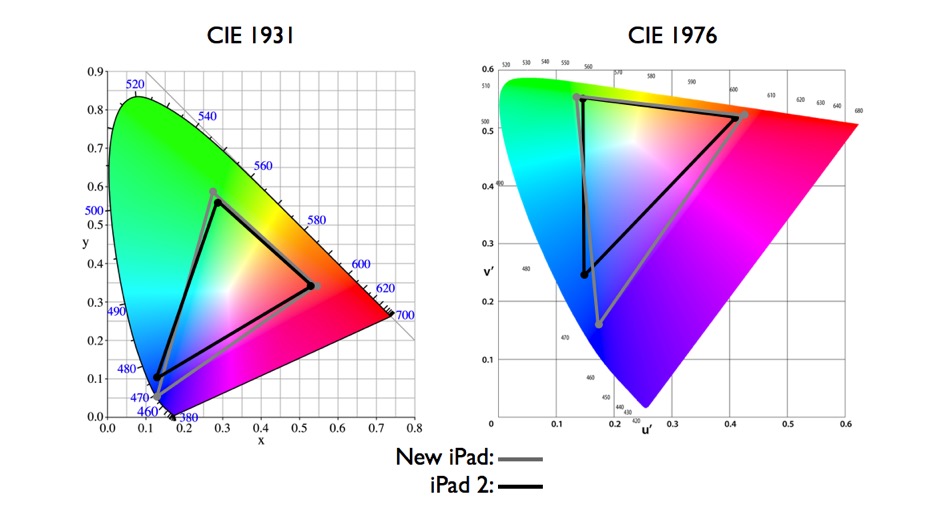

The CIE 1931 standard has been replaced by a CIE 1976 standard. Below we can see the significance of this.

People have observed that the biggest issue with CIE 1931 is the lack of uniformity with chromaticity, the three dimension color space in rectangular coordinates is not visually uniformed.

The CIE 1976 (also called CIELUV) was created by the CIE in 1976. It was put forward in an attempt to provide a more uniform color spacing than CIE 1931 for colors at approximately the same luminance

The CIE 1976 standard colour space is more linear and variations in perceived colour between different people has also been reduced. The disproportionately large green-turquoise area in CIE 1931, which cannot be generated with existing computer screens, has been reduced.

If we move from CIE 1931 to the CIE 1976 standard colour space we can see that the improvements made in the gamut for the “new” iPad screen (as compared to the “old” iPad 2) are more evident in the CIE 1976 colour space than in the CIE 1931 colour space, particularly in the blues from aqua to deep blue.

https://dot-color.com/2012/08/14/color-space-confusion/

Despite its age, CIE 1931, named for the year of its adoption, remains a well-worn and familiar shorthand throughout the display industry. CIE 1931 is the primary language of customers. When a customer says that their current display “can do 72% of NTSC,” they implicitly mean 72% of NTSC 1953 color gamut as mapped against CIE 1931.

-

Photography basics: Lumens vs Candelas (candle) vs Lux vs FootCandle vs Watts vs Irradiance vs Illuminance

Read more: Photography basics: Lumens vs Candelas (candle) vs Lux vs FootCandle vs Watts vs Irradiance vs Illuminancehttps://www.translatorscafe.com/unit-converter/en-US/illumination/1-11/

The power output of a light source is measured using the unit of watts W. This is a direct measure to calculate how much power the light is going to drain from your socket and it is not relatable to the light brightness itself.

The amount of energy emitted from it per second. That energy comes out in a form of photons which we can crudely represent with rays of light coming out of the source. The higher the power the more rays emitted from the source in a unit of time.

Not all energy emitted is visible to the human eye, so we often rely on photometric measurements, which takes in account the sensitivity of human eye to different wavelenghts

Details in the post

(more…)

LIGHTING

-

Photography basics: Why Use a (MacBeth) Color Chart?

Read more: Photography basics: Why Use a (MacBeth) Color Chart?Start here: https://www.pixelsham.com/2013/05/09/gretagmacbeth-color-checker-numeric-values/

https://www.studiobinder.com/blog/what-is-a-color-checker-tool/

In LightRoom

in Final Cut

in Nuke

Note: In Foundry’s Nuke, the software will map 18% gray to whatever your center f/stop is set to in the viewer settings (f/8 by default… change that to EV by following the instructions below).

You can experiment with this by attaching an Exposure node to a Constant set to 0.18, setting your viewer read-out to Spotmeter, and adjusting the stops in the node up and down. You will see that a full stop up or down will give you the respective next value on the aperture scale (f8, f11, f16 etc.).One stop doubles or halves the amount or light that hits the filmback/ccd, so everything works in powers of 2.

So starting with 0.18 in your constant, you will see that raising it by a stop will give you .36 as a floating point number (in linear space), while your f/stop will be f/11 and so on.If you set your center stop to 0 (see below) you will get a relative readout in EVs, where EV 0 again equals 18% constant gray.

In other words. Setting the center f-stop to 0 means that in a neutral plate, the middle gray in the macbeth chart will equal to exposure value 0. EV 0 corresponds to an exposure time of 1 sec and an aperture of f/1.0.

This will set the sun usually around EV12-17 and the sky EV1-4 , depending on cloud coverage.

To switch Foundry’s Nuke’s SpotMeter to return the EV of an image, click on the main viewport, and then press s, this opens the viewer’s properties. Now set the center f-stop to 0 in there. And the SpotMeter in the viewport will change from aperture and fstops to EV.

-

9 Best Hacks to Make a Cinematic Video with Any Camera

Read more: 9 Best Hacks to Make a Cinematic Video with Any Camerahttps://www.flexclip.com/learn/cinematic-video.html

- Frame Your Shots to Create Depth

- Create Shallow Depth of Field

- Avoid Shaky Footage and Use Flexible Camera Movements

- Properly Use Slow Motion

- Use Cinematic Lighting Techniques

- Apply Color Grading

- Use Cinematic Music and SFX

- Add Cinematic Fonts and Text Effects

- Create the Cinematic Bar at the Top and the Bottom

Collections

| Explore posts

| Design And Composition

| Featured AI

Popular Searches

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.