COMPOSITION

-

Composition and The Expressive Nature Of Light

Read more: Composition and The Expressive Nature Of Lighthttp://www.huffingtonpost.com/bill-danskin/post_12457_b_10777222.html

George Sand once said “ The artist vocation is to send light into the human heart.”

-

StudioBinder – Roger Deakins on How to Choose a Camera Lens — Cinematography Composition Techniques

Read more: StudioBinder – Roger Deakins on How to Choose a Camera Lens — Cinematography Composition Techniqueshttps://www.studiobinder.com/blog/camera-lens-buying-guide/

https://www.studiobinder.com/blog/e-books/camera-lenses-explained-volume-1-ebook

DESIGN

COLOR

-

Brett Jones / Phil Reyneri (Lightform) / Philipp7pc: The study of Projection Mapping through Projectors

Read more: Brett Jones / Phil Reyneri (Lightform) / Philipp7pc: The study of Projection Mapping through ProjectorsVideo Projection Tool Software

https://hcgilje.wordpress.com/vpt/https://www.projectorpoint.co.uk/news/how-bright-should-my-projector-be/

http://www.adwindowscreens.com/the_calculator/

heavym

https://heavym.net/en/MadMapper

https://madmapper.com/ -

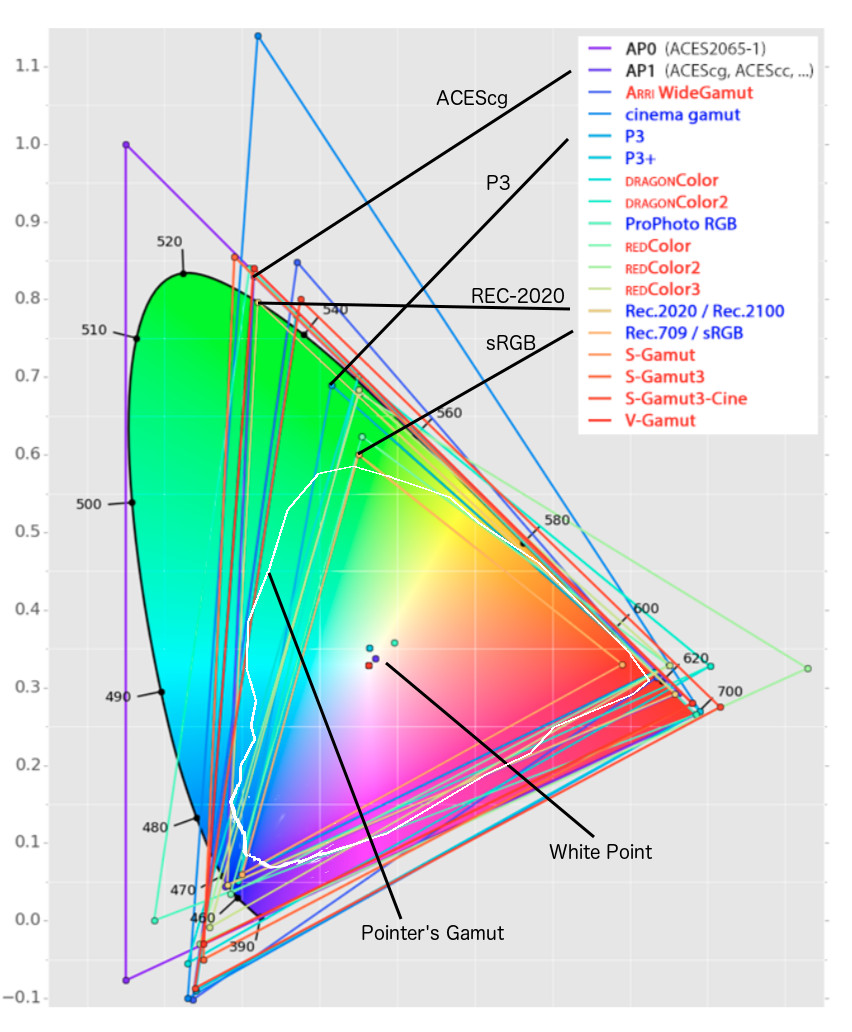

Rec-2020 – TVs new color gamut standard used by Dolby Vision?

Read more: Rec-2020 – TVs new color gamut standard used by Dolby Vision?https://www.hdrsoft.com/resources/dri.html#bit-depth

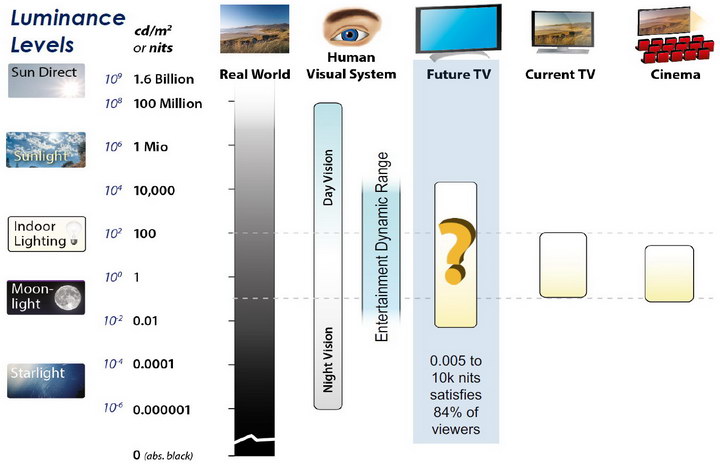

The dynamic range is a ratio between the maximum and minimum values of a physical measurement. Its definition depends on what the dynamic range refers to.

For a scene: Dynamic range is the ratio between the brightest and darkest parts of the scene.

For a camera: Dynamic range is the ratio of saturation to noise. More specifically, the ratio of the intensity that just saturates the camera to the intensity that just lifts the camera response one standard deviation above camera noise.

For a display: Dynamic range is the ratio between the maximum and minimum intensities emitted from the screen.

The Dynamic Range of real-world scenes can be quite high — ratios of 100,000:1 are common in the natural world. An HDR (High Dynamic Range) image stores pixel values that span the whole tonal range of real-world scenes. Therefore, an HDR image is encoded in a format that allows the largest range of values, e.g. floating-point values stored with 32 bits per color channel. Another characteristics of an HDR image is that it stores linear values. This means that the value of a pixel from an HDR image is proportional to the amount of light measured by the camera.

For TVs HDR is great, but it’s not the only new TV feature worth discussing.

Wide color gamut, or WCG, is often lumped in with HDR. While they’re often found together, they’re not intrinsically linked. Where HDR is an increase in the dynamic range of the picture (with contrast and brighter highlights in particular), a TV’s wide color gamut coverage refers to how much of the new, larger color gamuts a TV can display.

Wide color gamuts only really matter for HDR video sources like UHD Blu-rays and some streaming video, as only HDR sources are meant to take advantage of the ability to display more colors.

www.cnet.com/how-to/what-is-wide-color-gamut-wcg/

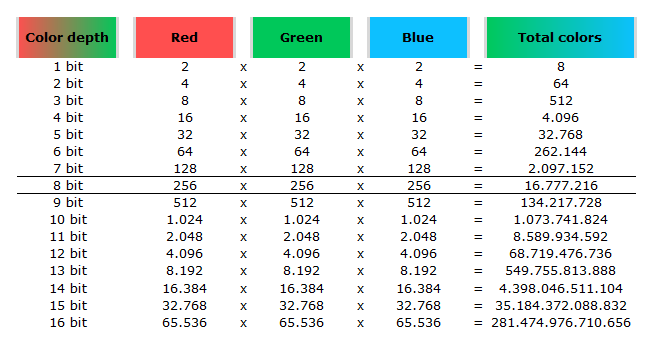

Color depth is only one aspect of color representation, expressing the precision with which the amount of each primary can be expressed through a pixel; the other aspect is how broad a range of colors can be expressed (the gamut)

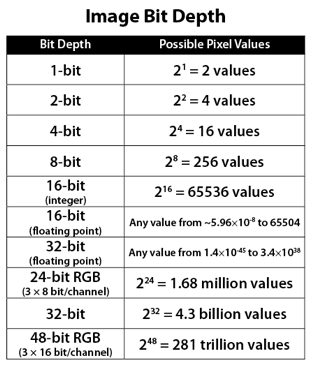

Image rendering bit depth

Wide color gamuts include a greater number of colors than what most current TVs can display, so the greater a TV’s coverage of a wide color gamut, the more colors a TV will be able to reproduce.

When we talk about a color space or color gamut we refer to the range of color values stored in an image. The perception of these color also requires a display that has been tuned with to resolve these color profiles at best. This is often referred to as a ‘viewer lut’.

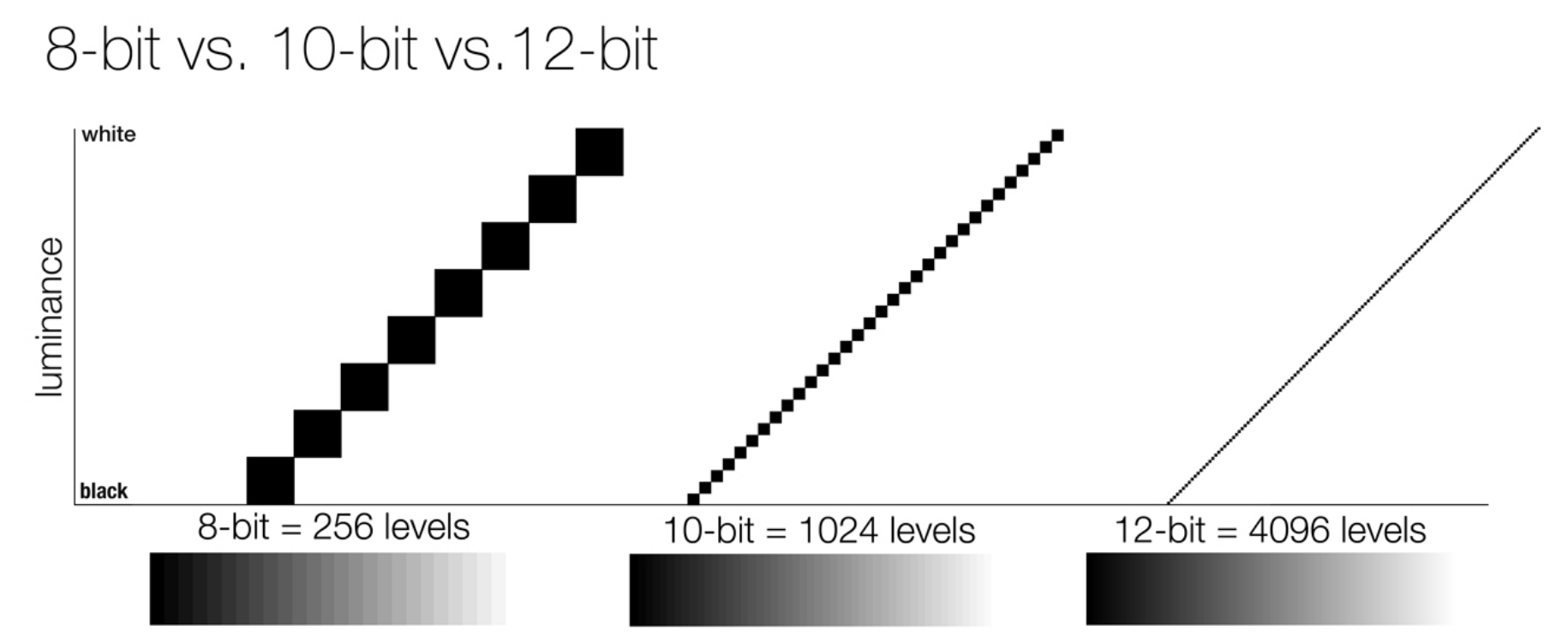

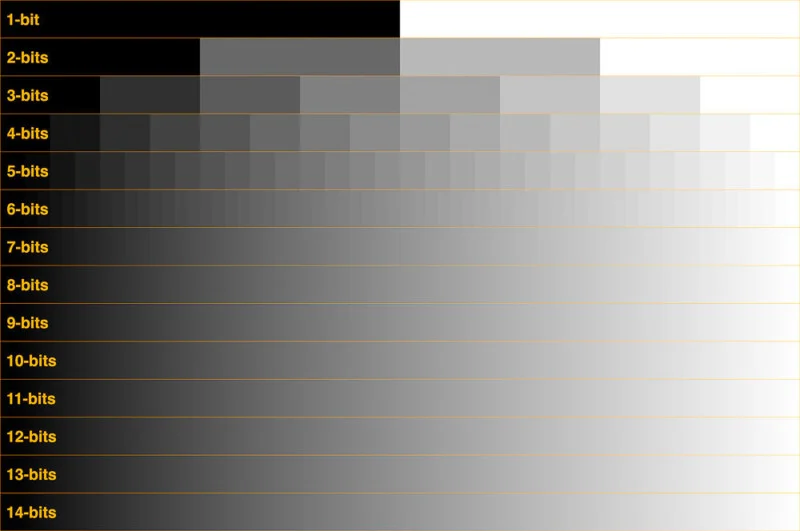

So this comes also usually paired with an increase in bit depth, going from the old 8 bit system (256 shades per color, with the potential of over 16.7 million colors: 256 green x 256 blue x 256 red) to 10 (1024+ shades per color, with access to over a billion colors) or higher bits, like 12 bit (4096 shades per RGB for 68 billion colors).

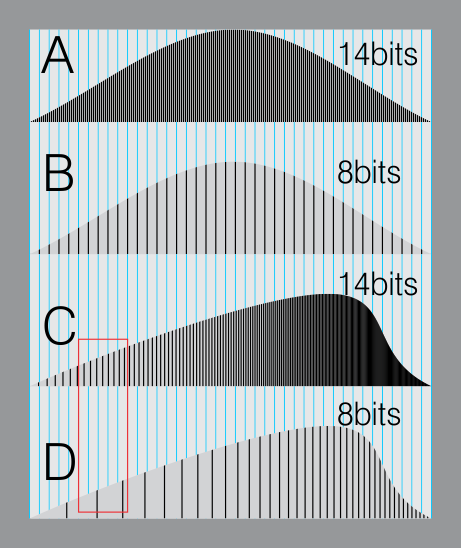

The advantage of higher bit depth is in the ability to bias color with the minimum loss.

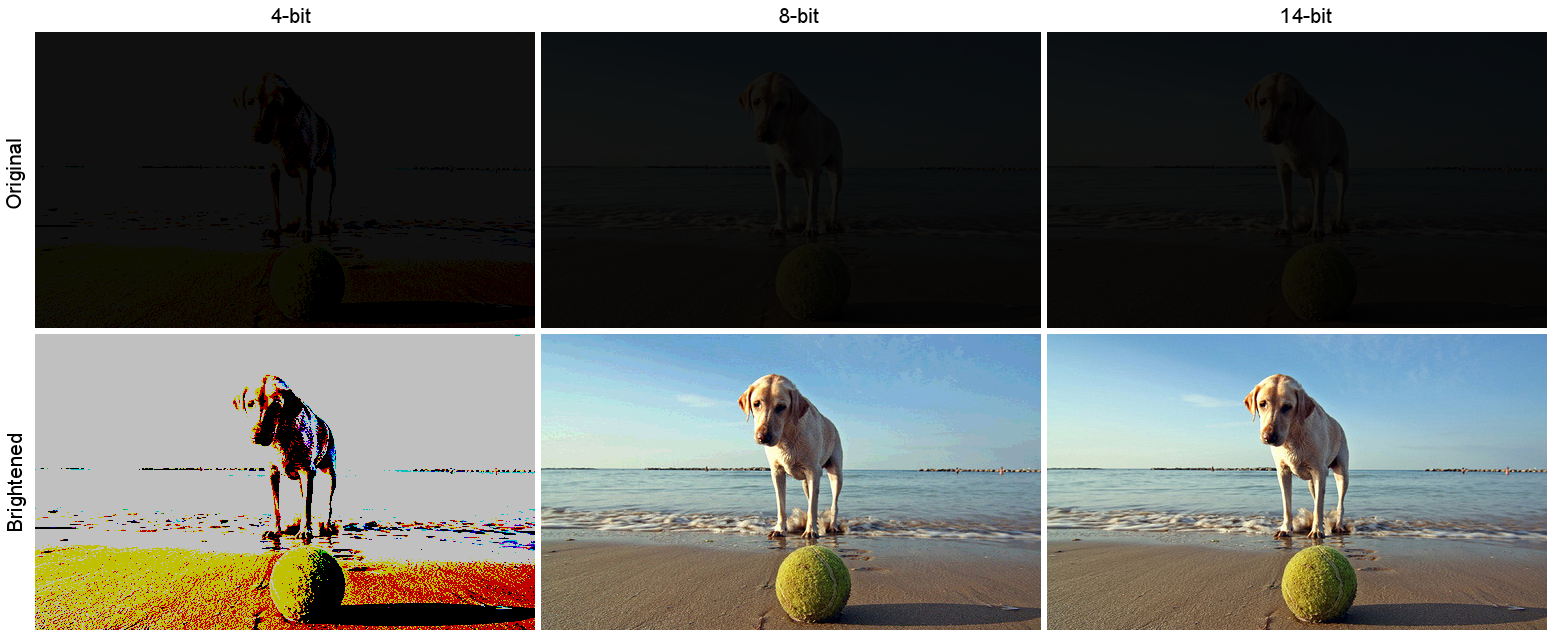

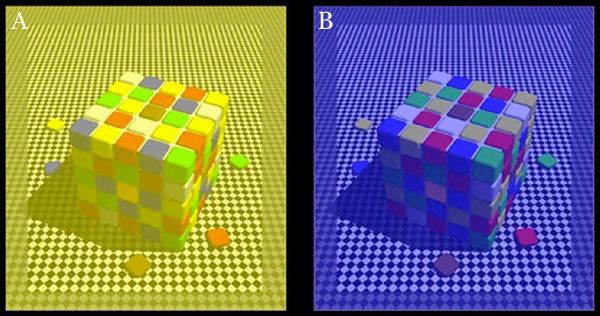

For an extreme example, raising the brightness from a completely dark image allows for better reproduction, independently on the reproduction medium, due to the amount of data available at editing time:

For reference, 8-bit images (i.e. 24 bits per pixel for a color image) are considered Low Dynamic Range.

They can store around 5 stops of light and each pixel carry a value from 0 (black) to 255 (white).

As a comparison, DSLR cameras can capture ~12-15 stops of light and they use RAW files to store the information.

https://www.cambridgeincolour.com/tutorials/dynamic-range.htm

https://www.hdrsoft.com/resources/dri.html#bit-depth

Note that the number of bits itself may be a misleading indication of the real dynamic range that the image reproduces — converting a Low Dynamic Range image to a higher bit depth does not change its dynamic range, of course.

- 8-bit images (i.e. 24 bits per pixel for a color image) are considered Low Dynamic Range.

- 16-bit images (i.e. 48 bits per pixel for a color image) resulting from RAW conversion are still considered Low Dynamic Range, even though the range of values they can encode is significantly higher than for 8-bit images (65536 versus 256). Note that converting a RAW file involves applying a tonal curve that compresses the dynamic range of the RAW data so that the converted image shows correctly on low dynamic range monitors. The need to adapt the output image file to the dynamic range of the display is the factor that dictates how much the dynamic range is compressed, not the output bit-depth. By using 16 instead of 8 bits, you will gain precision but you will not gain dynamic range.

- 32-bit images (i.e. 96 bits per pixel for a color image) are considered High Dynamic Range.Unlike 8- and 16-bit images which can take a finite number of values, 32-bit images are coded using floating point numbers, which means the values they can take is unlimited.It is important to note, though, that storing an image in a 32-bit HDR format is a necessary condition for an HDR image but not a sufficient one. When an image comes from a single capture with a standard camera, it will remain a Low Dynamic Range image,

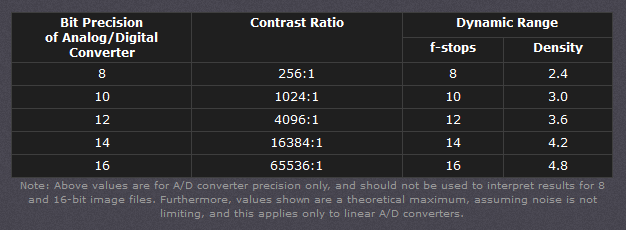

Also note that bit depth and dynamic range are often confused as one, but are indeed separate concepts and there is no direct one to one relationship between them. Bit depth is about capacity, dynamic range is about the actual ratio of data stored.

The bit depth of a capturing or displaying device gives you an indication of its dynamic range capacity. That is, the highest dynamic range that the device would be capable of reproducing if all other constraints are eliminated.https://rawpedia.rawtherapee.com/Bit_Depth

Finally, note that there are two ways to “count” bits for an image — either the number of bits per color channel (BPC) or the number of bits per pixel (BPP). A bit (0,1) is the smallest unit of data stored in a computer.

For a grayscale image, 8-bit means that each pixel can be one of 256 levels of gray (256 is 2 to the power 8).

For an RGB color image, 8-bit means that each one of the three color channels can be one of 256 levels of color.

Since each pixel is represented by 3 colors in this case, 8-bit per color channel actually means 24-bit per pixel.Similarly, 16-bit for an RGB image means 65,536 levels per color channel and 48-bit per pixel.

To complicate matters, when an image is classified as 16-bit, it just means that it can store a maximum 65,535 values. It does not necessarily mean that it actually spans that range. If the camera sensors can not capture more than 12 bits of tonal values, the actual bit depth of the image will be at best 12-bit and probably less because of noise.

The following table attempts to summarize the above for the case of an RGB color image.

Type of digital support Bit depth per color channel Bit depth per pixel FStops Theoretical maximum Dynamic Range Reality 8-bit 8 24 8 256:1 most consumer images 12-bit CCD 12 36 12 4,096:1 real maximum limited by noise 14-bit CCD 14 42 14 16,384:1 real maximum limited by noise 16-bit TIFF (integer) 16 48 16 65,536:1 bit-depth in this case is not directly related to the dynamic range captured 16-bit float EXR 16 48 30 65,536:1 values are distributed more closely in the (lower) darker tones than in the (higher) lighter ones, thus allowing for a more accurate description of the tones more significant to humans. The range of normalized 16-bit floats can represent thirty stops of information with 1024 steps per stop. We have eighteen and a half stops over middle gray, and eleven and a half below. The denormalized numbers provide an additional ten stops with decreasing precision per stop.

http://download.nvidia.com/developer/GPU_Gems/CD_Image/Image_Processing/OpenEXR/OpenEXR-1.0.6/doc/#recsHDR image (e.g. Radiance format) 32 96 “infinite” 4.3 billion:1 real maximum limited by the captured dynamic range 32-bit floats are often called “single-precision” floats, and 64-bit floats are often called “double-precision” floats. 16-bit floats therefore are called “half-precision” floats, or just “half floats”.

https://petapixel.com/2018/09/19/8-12-14-vs-16-bit-depth-what-do-you-really-need

On a separate note, even Photoshop does not handle 16bit per channel. Photoshop does actually use 16-bits per channel. However, it treats the 16th digit differently – it is simply added to the value created from the first 15-digits. This is sometimes called 15+1 bits. This means that instead of 216 possible values (which would be 65,536 possible values) there are only 215+1 possible values (which is 32,768 +1 = 32,769 possible values).

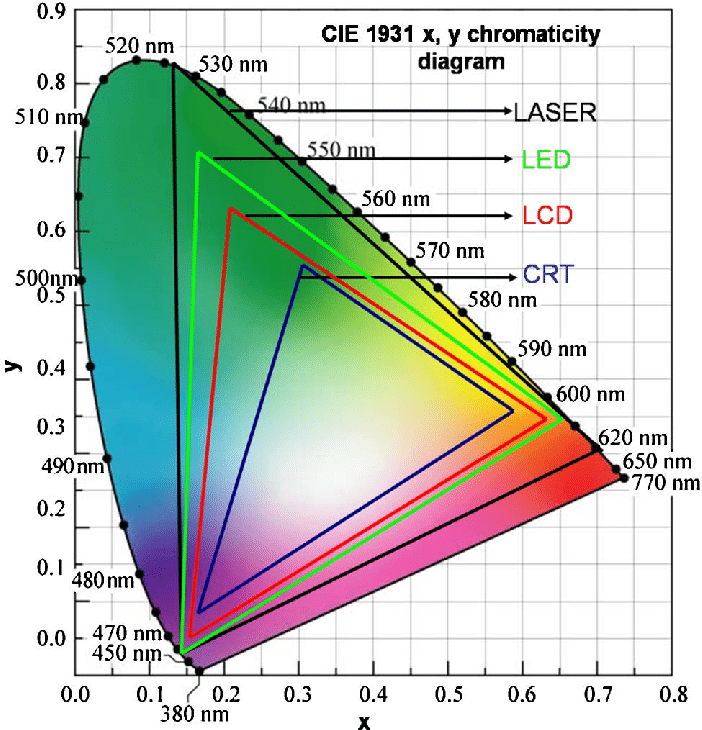

Rec-601 (for the older SDTV format, very similar to rec-709) and Rec-709 (the HDTV’s recommended set of color standards, at times also referred to sRGB, although not exactly the same) are currently the most spread color formats and hardware configurations in the world.

Following those you can find the larger P3 gamut, more commonly used in theaters and in digital production houses (with small variations and improvements to color coverage), as well as most of best 4K/WCG TVs.

And a new standard is now promoted against P3, referred to Rec-2020 and UHDTV.

It is still debatable if this is going to be adopted at consumer level beyond the P3, mainly due to lack of hardware supporting it. But initial tests do prove that it would be a future proof investment.

www.colour-science.org/anders-langlands/

Rec. 2020 is ultimately designed for television, and not cinema. Therefore, it is to be expected that its properties must behave according to current signal processing standards. In this respect, its foundation is based on current HD and SD video signal characteristics.

As far as color bit depth is concerned, it allows for a maximum of 12 bits, which is more than enough for humans.

Comparing standards, REC-709 covers 35.9% of the human visible spectrum. P3 45.5%. And REC-2020 75.8%.

https://www.avsforum.com/forum/166-lcd-flat-panel-displays/2812161-what-color-volume.htmlComparing coverage to hardware devices

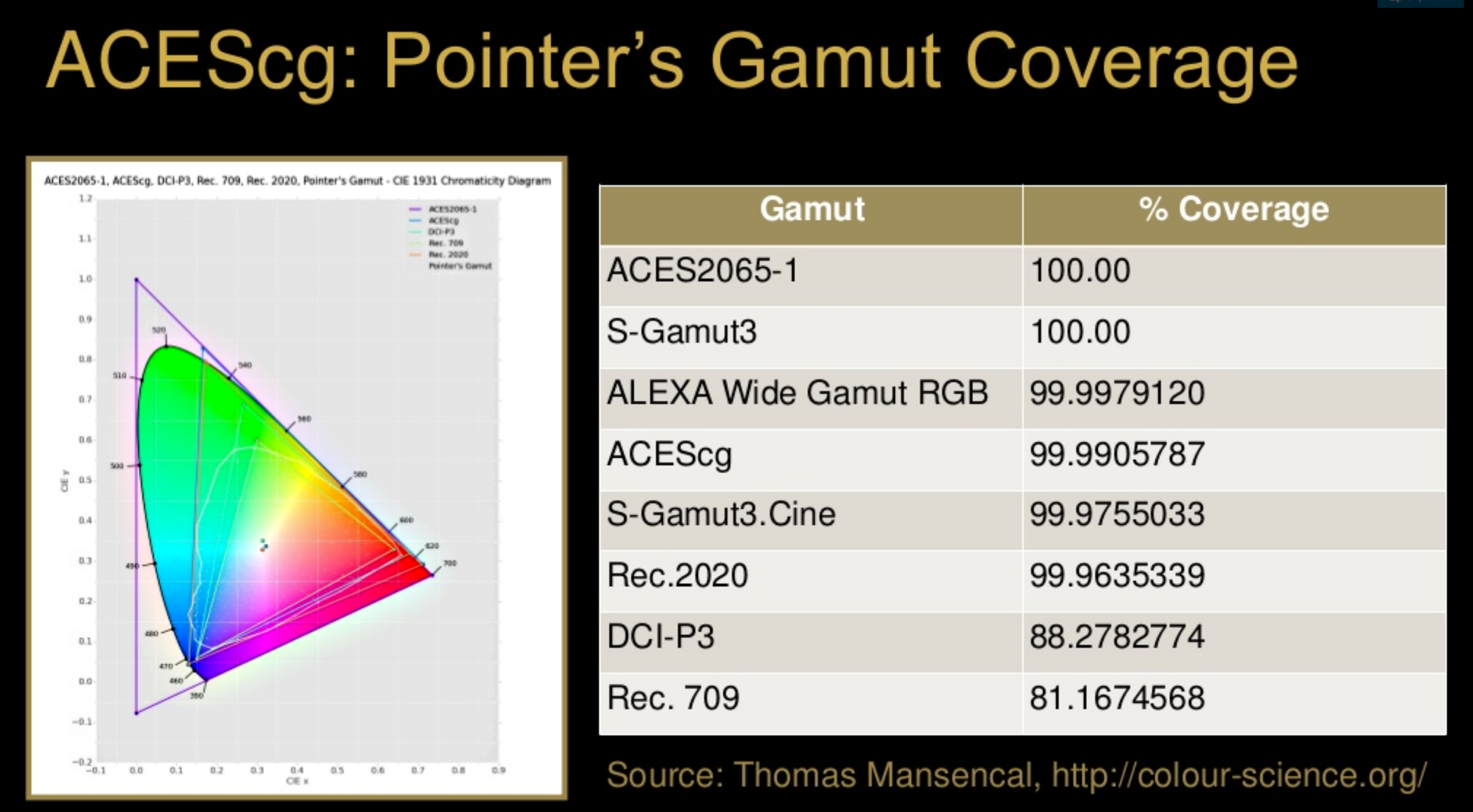

To note that all the new standards generally score very high on the Pointer’s Gamut chart. But with REC-2020 scoring 99.9% vs P3 at 88.2%.

www.tftcentral.co.uk/articles/pointers_gamut.htmhttps://www.slideshare.net/hpduiker/acescg-a-common-color-encoding-for-visual-effects-applications

The Pointer’s gamut is (an approximation of) the gamut of real surface colors as can be seen by the human eye, based on the research by Michael R. Pointer (1980). What this means is that every color that can be reflected by the surface of an object of any material is inside the Pointer’s gamut. Basically establishing a widely respected target for color reproduction. Visually, Pointers Gamut represents the colors we see about us in the natural world. Colors outside Pointers Gamut include those that do not occur naturally, such as neon lights and computer-generated colors possible in animation. Which would partially be accounted for with the new gamuts.

cinepedia.com/picture/color-gamut/

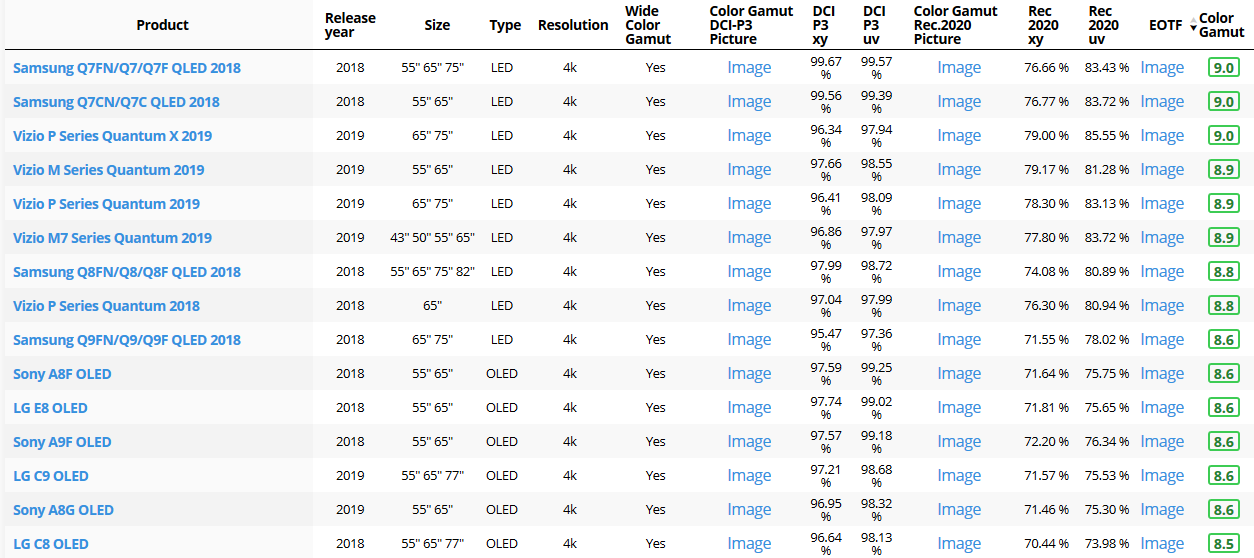

Not all current TVs can support the full spread of the new gamuts. Here is a list of modern TVs’ color coverage in percentage:

www.rtings.com/tv/tests/picture-quality/wide-color-gamut-rec-709-dci-p3-rec-2020There are no TVs that can come close to displaying all the colors within Rec.2020, and there likely won’t be for at least a few years. However, to help future-proof the technology, Rec.2020 support is already baked into the HDR spec. That means that the same genuine HDR media that fills the DCI P3 space on a compatible TV now, will in a few years also fill Rec.2020 on a TV supporting that larger space.

Rec.2020’s main gains are in the number of new tones of green that it will display, though it also offers improvements to the number of blue and red colors as well. Altogether, Rec.2020 will cover about 75% of the visual spectrum, which is a sizeable increase in coverage even over DCI P3.

Dolby Vision

https://www.highdefdigest.com/news/show/what-is-dolby-vision/39049

https://www.techhive.com/article/3237232/dolby-vision-vs-hdr10-which-is-best.html

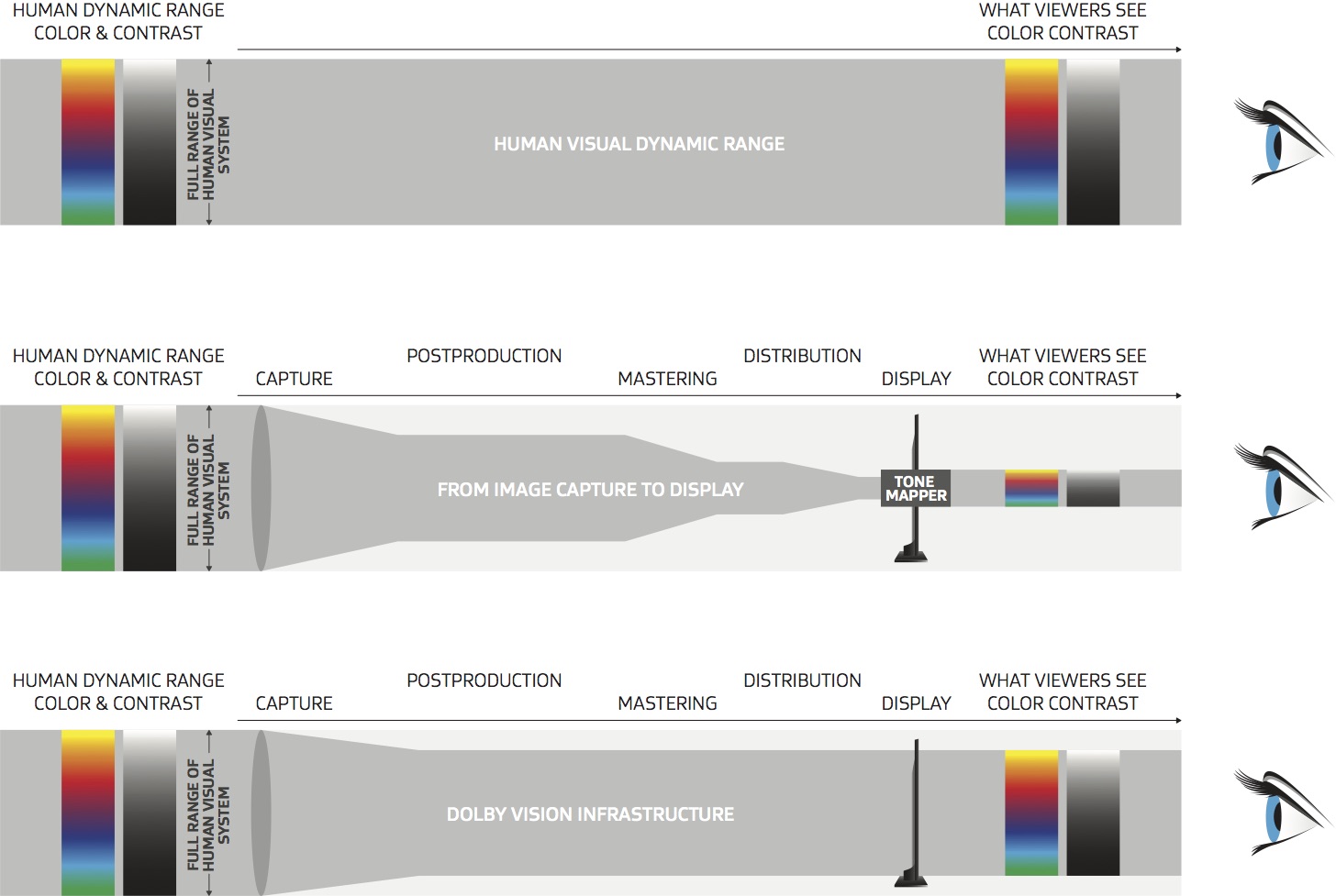

Dolby Vision is a proprietary end-to-end High Dynamic Range (HDR) format that covers content creation and playback through select cinemas, Ultra HD displays, and 4K titles. Like other HDR standards, the process uses expanded brightness to improve contrast between dark and light aspects of an image, bringing out deeper black levels and more realistic details in specular highlights — like the sun reflecting off of an ocean — in specially graded Dolby Vision material.

The iPhone 12 Pro gets the ability to record 4K 10-bit HDR video. According to Apple, it is the very first smartphone that is capable of capturing Dolby Vision HDR.

The iPhone 12 Pro takes two separate exposures and runs them through Apple’s custom image signal processor to create a histogram, which is a graph of the tonal values in each frame. The Dolby Vision metadata is then generated based on that histogram. In Laymen’s terms, it is essentially doing real-time grading while you are shooting. This is only possible due to the A14 Bionic chip.

Dolby Vision also allows for 12-bit color, as opposed to HDR10’s and HDR10+’s 10-bit color. While no retail TV we’re aware of supports 12-bit color, Dolby claims it can be down-sampled in such a way as to render 10-bit color more accurately.

Resources for more reading:

https://www.avsforum.com/forum/166-lcd-flat-panel-displays/2812161-what-color-volume.html

wolfcrow.com/say-hello-to-rec-2020-the-color-space-of-the-future/

www.cnet.com/news/ultra-hd-tv-color-part-ii-the-future/

-

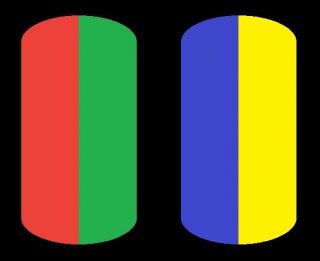

The Forbidden colors – Red-Green & Blue-Yellow: The Stunning Colors You Can’t See

Read more: The Forbidden colors – Red-Green & Blue-Yellow: The Stunning Colors You Can’t Seewww.livescience.com/17948-red-green-blue-yellow-stunning-colors.html

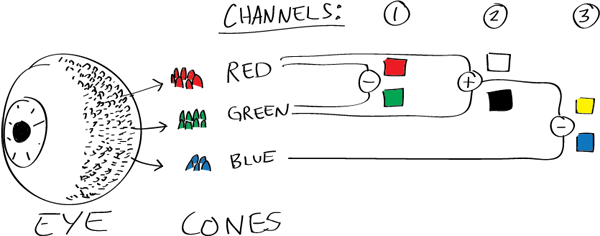

While the human eye has red, green, and blue-sensing cones, those cones are cross-wired in the retina to produce a luminance channel plus a red-green and a blue-yellow channel, and it’s data in that color space (known technically as “LAB”) that goes to the brain. That’s why we can’t perceive a reddish-green or a yellowish-blue, whereas such colors can be represented in the RGB color space used by digital cameras.

https://en.rockcontent.com/blog/the-use-of-yellow-in-data-design

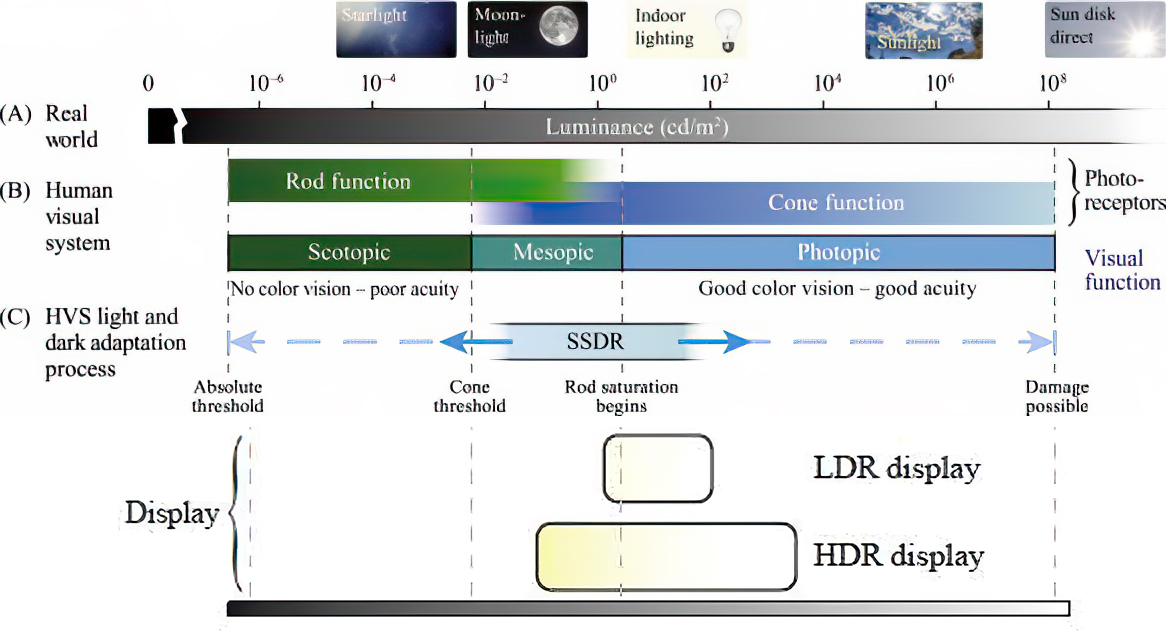

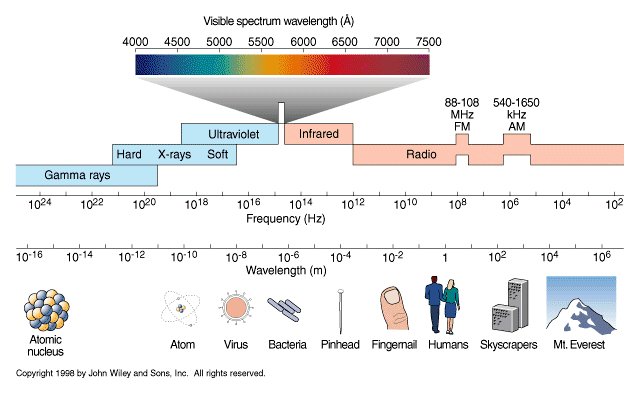

The back of the retina is covered in light-sensitive neurons known as cone cells and rod cells. There are three types of cone cells, each sensitive to different ranges of light. These ranges overlap, but for convenience the cones are referred to as blue (short-wavelength), green (medium-wavelength), and red (long-wavelength). The rod cells are primarily used in low-light situations, so we’ll ignore those for now.

When light enters the eye and hits the cone cells, the cones get excited and send signals to the brain through the visual cortex. Different wavelengths of light excite different combinations of cones to varying levels, which generates our perception of color. You can see that the red cones are most sensitive to light, and the blue cones are least sensitive. The sensitivity of green and red cones overlaps for most of the visible spectrum.

Here’s how your brain takes the signals of light intensity from the cones and turns it into color information. To see red or green, your brain finds the difference between the levels of excitement in your red and green cones. This is the red-green channel.

To get “brightness,” your brain combines the excitement of your red and green cones. This creates the luminance, or black-white, channel. To see yellow or blue, your brain then finds the difference between this luminance signal and the excitement of your blue cones. This is the yellow-blue channel.

From the calculations made in the brain along those three channels, we get four basic colors: blue, green, yellow, and red. Seeing blue is what you experience when low-wavelength light excites the blue cones more than the green and red.

Seeing green happens when light excites the green cones more than the red cones. Seeing red happens when only the red cones are excited by high-wavelength light.

Here’s where it gets interesting. Seeing yellow is what happens when BOTH the green AND red cones are highly excited near their peak sensitivity. This is the biggest collective excitement that your cones ever have, aside from seeing pure white.

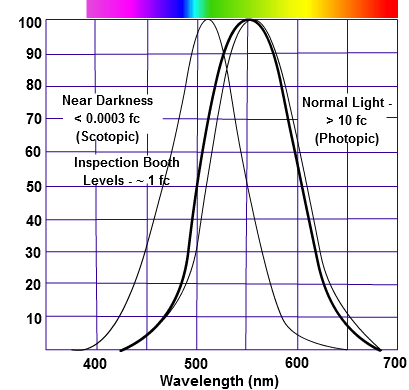

Notice that yellow occurs at peak intensity in the graph to the right. Further, the lens and cornea of the eye happen to block shorter wavelengths, reducing sensitivity to blue and violet light.

-

Sensitivity of human eye

Read more: Sensitivity of human eyehttp://www.wikilectures.eu/index.php/Spectral_sensitivity_of_the_human_eye

http://www.normankoren.com/Human_spectral_sensitivity_small.jpg

Spectral sensitivity of eye is influenced by light intensity. And the light intensity determines the level of activity of cones cell and rod cell. This is the main characteristic of human vision. Sensitivity to individual colors, in other words, wavelengths of the light spectrum, is explained by the RGB (red-green-blue) theory. This theory assumed that there are three kinds of cones. It’s selectively sensitive to red (700-630 nm), green (560-500 nm), and blue (490-450 nm) light. And their mutual interaction allow to perceive all colors of the spectrum.

http://weeklysciencequiz.blogspot.com/2013/01/violet-skies-are-for-birds.html

Sensitivity of human eye Sensitivity of human eyes to light increase with the decrease in light intensity. In day-light condition, the cones cell is responding to this condition. And the eye is most sensitive at 555 nm. In darkness condition, the rod cell is responding to this condition. And the eye is most sensitive at 507 nm.

As light intensity decreases, cone function changes more effective way. And when decrease the light intensity, it prompt to accumulation of rhodopsin. Furthermore, in activates rods, it allow to respond to stimuli of light in much lower intensity.

The three curves in the figure above shows the normalized response of an average human eye to various amounts of ambient light. The shift in sensitivity occurs because two types of photoreceptors called cones and rods are responsible for the eye’s response to light. The curve on the right shows the eye’s response under normal lighting conditions and this is called the photopic response. The cones respond to light under these conditions.

As mentioned previously, cones are composed of three different photo pigments that enable color perception. This curve peaks at 555 nanometers, which means that under normal lighting conditions, the eye is most sensitive to a yellowish-green color. When the light levels drop to near total darkness, the response of the eye changes significantly as shown by the scotopic response curve on the left. At this level of light, the rods are most active and the human eye is more sensitive to the light present, and less sensitive to the range of color. Rods are highly sensitive to light but are comprised of a single photo pigment, which accounts for the loss in ability to discriminate color. At this very low light level, sensitivity to blue, violet, and ultraviolet is increased, but sensitivity to yellow and red is reduced. The heavier curve in the middle represents the eye’s response at the ambient light level found in a typical inspection booth. This curve peaks at 550 nanometers, which means the eye is most sensitive to yellowish-green color at this light level. Fluorescent penetrant inspection materials are designed to fluoresce at around 550 nanometers to produce optimal sensitivity under dim lighting conditions.

-

Mysterious animation wins best illusion of 2011 – Motion silencing illusion

Read more: Mysterious animation wins best illusion of 2011 – Motion silencing illusionThe 2011 Best Illusion of the Year uses motion to render color changes invisible, and so reveals a quirk in our visual systems that is new to scientists.

https://en.wikipedia.org/wiki/Motion_silencing_illusion

“It is a really beautiful effect, revealing something about how our visual system works that we didn’t know before,” said Daniel Simons, a professor at the University of Illinois, Champaign-Urbana. Simons studies visual cognition, and did not work on this illusion. Before its creation, scientists didn’t know that motion had this effect on perception, Simons said.

A viewer stares at a speck at the center of a ring of colored dots, which continuously change color. When the ring begins to rotate around the speck, the color changes appear to stop. But this is an illusion. For some reason, the motion causes our visual system to ignore the color changes. (You can, however, see the color changes if you follow the rotating circles with your eyes.)

-

No one could see the colour blue until modern times

Read more: No one could see the colour blue until modern timeshttps://www.businessinsider.com/what-is-blue-and-how-do-we-see-color-2015-2

The way that humans see the world… until we have a way to describe something, even something so fundamental as a colour, we may not even notice that something it’s there.

Ancient languages didn’t have a word for blue — not Greek, not Chinese, not Japanese, not Hebrew, not Icelandic cultures. And without a word for the colour, there’s evidence that they may not have seen it at all.

https://www.wnycstudios.org/story/211119-colors

Every language first had a word for black and for white, or dark and light. The next word for a colour to come into existence — in every language studied around the world — was red, the colour of blood and wine.

After red, historically, yellow appears, and later, green (though in a couple of languages, yellow and green switch places). The last of these colours to appear in every language is blue.

The only ancient culture to develop a word for blue was the Egyptians — and as it happens, they were also the only culture that had a way to produce a blue dye.

https://mymodernmet.com/shades-of-blue-color-history/

Considered to be the first ever synthetically produced color pigment, Egyptian blue (also known as cuprorivaite) was created around 2,200 B.C. It was made from ground limestone mixed with sand and a copper-containing mineral, such as azurite or malachite, which was then heated between 1470 and 1650°F. The result was an opaque blue glass which then had to be crushed and combined with thickening agents such as egg whites to create a long-lasting paint or glaze.

If you think about it, blue doesn’t appear much in nature — there aren’t animals with blue pigments (except for one butterfly, Obrina Olivewing, all animals generate blue through light scattering), blue eyes are rare (also blue through light scattering), and blue flowers are mostly human creations. There is, of course, the sky, but is that really blue?

So before we had a word for it, did people not naturally see blue? Do you really see something if you don’t have a word for it?

A researcher named Jules Davidoff traveled to Namibia to investigate this, where he conducted an experiment with the Himba tribe, who speak a language that has no word for blue or distinction between blue and green. When shown a circle with 11 green squares and one blue, they couldn’t pick out which one was different from the others.

When looking at a circle of green squares with only one slightly different shade, they could immediately spot the different one. Can you?

Davidoff says that without a word for a colour, without a way of identifying it as different, it’s much harder for us to notice what’s unique about it — even though our eyes are physically seeing the blocks it in the same way.

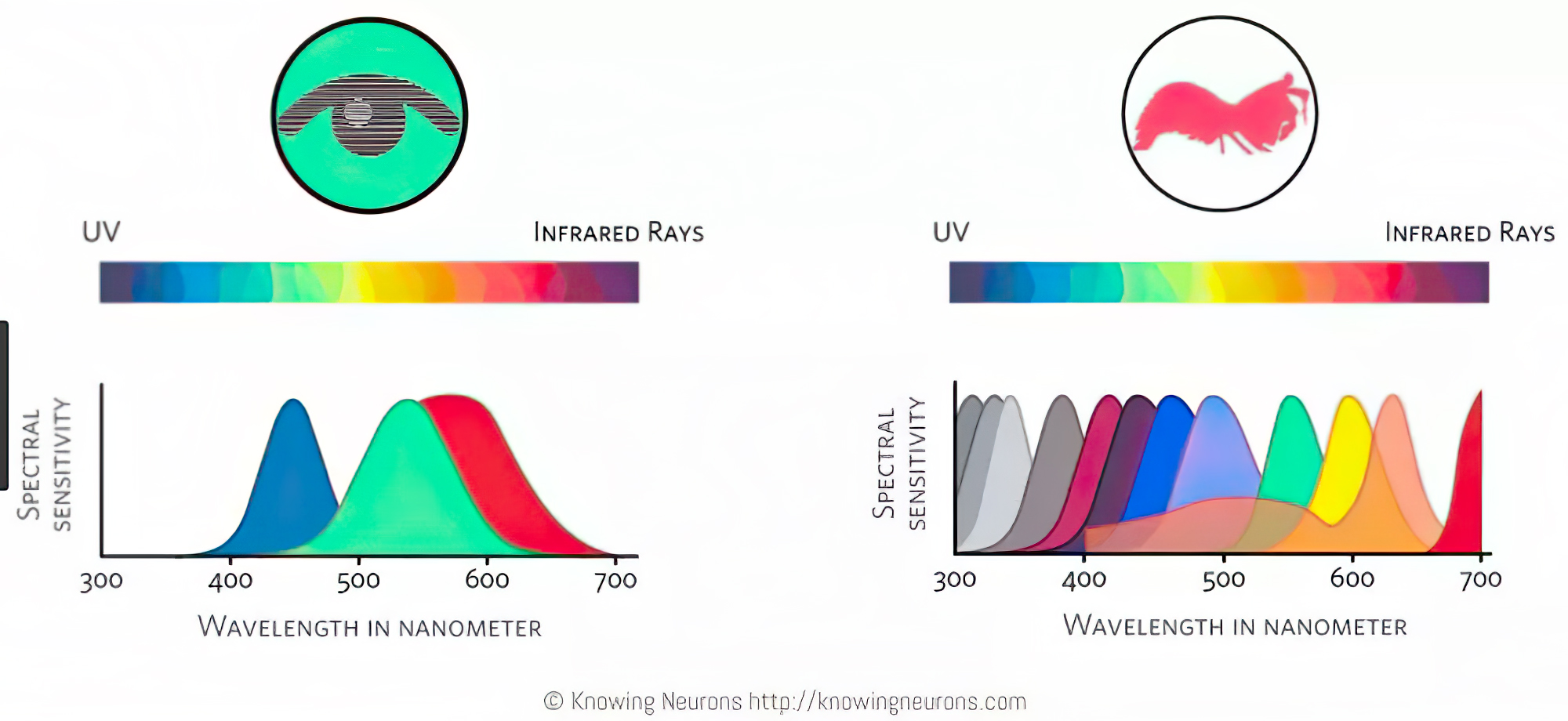

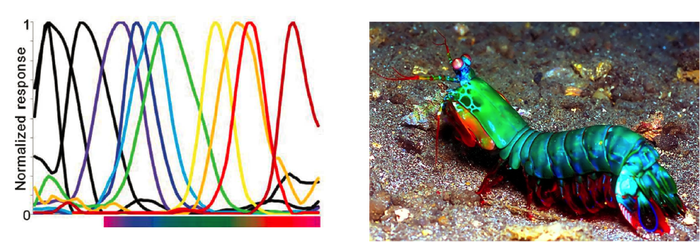

Further research brought to wider discussions about color perception in humans. Everything that we make is based on the fact that humans are trichromatic. The television only has 3 colors. Our color printers have 3 different colors. But some people, and in specific some women seemed to be more sensible to color differences… mainly because they’re just more aware or – because of the job that they do.

Eventually this brought to the discovery of a small percentage of the population, referred to as tetrachromats, which developed an extra cone sensitivity to yellow, likely due to gene modifications.

The interesting detail about these is that even between tetrachromats, only the ones that had a reason to develop, label and work with extra color sensitivity actually developed the ability to use their native skills.

So before blue became a common concept, maybe humans saw it. But it seems they didn’t know they were seeing it.

If you see something yet can’t see it, does it exist? Did colours come into existence over time? Not technically, but our ability to notice them… may have…

-

Photography basics: Why Use a (MacBeth) Color Chart?

Read more: Photography basics: Why Use a (MacBeth) Color Chart?Start here: https://www.pixelsham.com/2013/05/09/gretagmacbeth-color-checker-numeric-values/

https://www.studiobinder.com/blog/what-is-a-color-checker-tool/

In LightRoom

in Final Cut

in Nuke

Note: In Foundry’s Nuke, the software will map 18% gray to whatever your center f/stop is set to in the viewer settings (f/8 by default… change that to EV by following the instructions below).

You can experiment with this by attaching an Exposure node to a Constant set to 0.18, setting your viewer read-out to Spotmeter, and adjusting the stops in the node up and down. You will see that a full stop up or down will give you the respective next value on the aperture scale (f8, f11, f16 etc.).One stop doubles or halves the amount or light that hits the filmback/ccd, so everything works in powers of 2.

So starting with 0.18 in your constant, you will see that raising it by a stop will give you .36 as a floating point number (in linear space), while your f/stop will be f/11 and so on.If you set your center stop to 0 (see below) you will get a relative readout in EVs, where EV 0 again equals 18% constant gray.

In other words. Setting the center f-stop to 0 means that in a neutral plate, the middle gray in the macbeth chart will equal to exposure value 0. EV 0 corresponds to an exposure time of 1 sec and an aperture of f/1.0.

This will set the sun usually around EV12-17 and the sky EV1-4 , depending on cloud coverage.

To switch Foundry’s Nuke’s SpotMeter to return the EV of an image, click on the main viewport, and then press s, this opens the viewer’s properties. Now set the center f-stop to 0 in there. And the SpotMeter in the viewport will change from aperture and fstops to EV.

-

Image rendering bit depth

Read more: Image rendering bit depthThe terms 8-bit, 16-bit, 16-bit float, and 32-bit refer to different data formats used to store and represent image information, as bits per pixel.

https://en.wikipedia.org/wiki/Color_depth

In color technology, color depth also known as bit depth, is either the number of bits used to indicate the color of a single pixel, OR the number of bits used for each color component of a single pixel.

When referring to a pixel, the concept can be defined as bits per pixel (bpp).

When referring to a color component, the concept can be defined as bits per component, bits per channel, bits per color (all three abbreviated bpc), and also bits per pixel component, bits per color channel or bits per sample (bps). Modern standards tend to use bits per component, but historical lower-depth systems used bits per pixel more often.

Color depth is only one aspect of color representation, expressing the precision with which the amount of each primary can be expressed; the other aspect is how broad a range of colors can be expressed (the gamut). The definition of both color precision and gamut is accomplished with a color encoding specification which assigns a digital code value to a location in a color space.

Here’s a simple explanation of each.

8-bit images (i.e. 24 bits per pixel for a color image) are considered Low Dynamic Range.

They can store around 5 stops of light and each pixel carry a value from 0 (black) to 255 (white).

As a comparison, DSLR cameras can capture ~12-15 stops of light and they use RAW files to store the information.16-bit: This format is commonly referred to as “half-precision.” It uses 16 bits of data to represent color values for each pixel. With 16 bits, you can have 65,536 discrete levels of color, allowing for relatively high precision and smooth gradients. However, it has a limited dynamic range, meaning it cannot accurately represent extremely bright or dark values. It is commonly used for regular images and textures.

16-bit float: This format is an extension of the 16-bit format but uses floating-point numbers instead of fixed integers. Floating-point numbers allow for more precise calculations and a larger dynamic range. In this case, the 16 bits are used to store both the color value and the exponent, which controls the range of values that can be represented. The 16-bit float format provides better accuracy and a wider dynamic range than regular 16-bit, making it useful for high-dynamic-range imaging (HDRI) and computations that require more precision.

32-bit: (i.e. 96 bits per pixel for a color image) are considered High Dynamic Range. This format, also known as “full-precision” or “float,” uses 32 bits to represent color values and offers the highest precision and dynamic range among the three options. With 32 bits, you have a significantly larger number of discrete levels, allowing for extremely accurate color representation, smooth gradients, and a wide range of brightness values. It is commonly used for professional rendering, visual effects, and scientific applications where maximum precision is required.

Bits and HDR coverage

High Dynamic Range (HDR) images are designed to capture a wide range of luminance values, from the darkest shadows to the brightest highlights, in order to reproduce a scene with more accuracy and detail. The bit depth of an image refers to the number of bits used to represent each pixel’s color information. When comparing 32-bit float and 16-bit float HDR images, the drop in accuracy primarily relates to the precision of the color information.

A 32-bit float HDR image offers a higher level of precision compared to a 16-bit float HDR image. In a 32-bit float format, each color channel (red, green, and blue) is represented by 32 bits, allowing for a larger range of values to be stored. This increased precision enables the image to retain more details and subtleties in color and luminance.

On the other hand, a 16-bit float HDR image utilizes 16 bits per color channel, resulting in a reduced range of values that can be represented. This lower precision leads to a loss of fine details and color nuances, especially in highly contrasted areas of the image where there are significant differences in luminance.

The drop in accuracy between 32-bit and 16-bit float HDR images becomes more noticeable as the exposure range of the scene increases. Exposure range refers to the span between the darkest and brightest areas of an image. In scenes with a limited exposure range, where the luminance differences are relatively small, the loss of accuracy may not be as prominent or perceptible. These images usually are around 8-10 exposure levels.

However, in scenes with a wide exposure range, such as a landscape with deep shadows and bright highlights, the reduced precision of a 16-bit float HDR image can result in visible artifacts like color banding, posterization, and loss of detail in both shadows and highlights. The image may exhibit abrupt transitions between tones or colors, which can appear unnatural and less realistic.

To provide a rough estimate, it is often observed that exposure values beyond approximately ±6 to ±8 stops from the middle gray (18% reflectance) may be more prone to accuracy issues in a 16-bit float format. This range may vary depending on the specific implementation and encoding scheme used.

To summarize, the drop in accuracy between 32-bit and 16-bit float HDR images is mainly related to the reduced precision of color information. This decrease in precision becomes more apparent in scenes with a wide exposure range, affecting the representation of fine details and leading to visible artifacts in the image.

In practice, this means that exposure values beyond a certain range will experience a loss of accuracy and detail when stored in a 16-bit float format. The exact range at which this loss occurs depends on the encoding scheme and the specific implementation. However, in general, extremely bright or extremely dark values that fall outside the representable range may be subject to quantization errors, resulting in loss of detail, banding, or other artifacts.

HDRs used for lighting purposes are usually slightly convolved to improve on sampling speed and removing specular artefacts. To that extent, 16 bit float HDRIs tend to me most used in CG cycles.

LIGHTING

-

What is the Light Field?

Read more: What is the Light Field?http://lightfield-forum.com/what-is-the-lightfield/

The light field consists of the total of all light rays in 3D space, flowing through every point and in every direction.

How to Record a Light Field

- a single, robotically controlled camera

- a rotating arc of cameras

- an array of cameras or camera modules

- a single camera or camera lens fitted with a microlens array

-

Terminators and Iron Men: HDRI, Image-based lighting and physical shading at ILM – Siggraph 2010

Read more: Terminators and Iron Men: HDRI, Image-based lighting and physical shading at ILM – Siggraph 2010

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacy

-

Photography basics: Exposure Value vs Photographic Exposure vs Il/Luminance vs Pixel luminance measurements

-

VFX pipeline – Render Wall management topics

-

Ross Pettit on The Agile Manager – How tech firms went for prioritizing cash flow instead of talent

-

Scene Referred vs Display Referred color workflows

-

Ethan Roffler interviews CG Supervisor Daniele Tosti

-

Cinematographers Blueprint 300dpi poster

-

AI Search – Find The Best AI Tools & Apps

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.