COMPOSITION

-

Composition – cinematography Cheat Sheet

Where is our eye attracted first? Why?

Size. Focus. Lighting. Color.

Size. Mr. White (Harvey Keitel) on the right.

Focus. He’s one of the two objects in focus.

Lighting. Mr. White is large and in focus and Mr. Pink (Steve Buscemi) is highlighted by a shaft of light.

Color. Both are black and white but the red on Mr. White’s shirt now really stands out.

What type of lighting?

-> High key lighting.

Features bright, even illumination and few conspicuous shadows. This lighting key is often used in musicals and comedies.

Low key lighting.

Features diffused shadows and atmospheric pools of light. This lighting key is often used in mysteries and thrillers.

High contrast lighting.

Features harsh shafts of light and dramatic streaks of blackness. This type of lighting is often used in tragedies and melodramas.

What type of shot?

Extreme long shot

Taken from a great distance, showing much of the locale. If people are included in these shots, they usually appear as mere specks.

-> Long shot

Corresponds to the space between the audience and the stage in a live theater. The long shots show the characters and some of the locale.

Full shot

Range with just enough space to contain the human body in full. The full shot shows the character and a minimal amount of the locale.

Medium shot

Shows the human figure from the knees or waist up.

Close-Up

Concentrates on a relatively small object and shows very little if any locale.

Extreme close-up

Focuses on an unnaturally small portion of an object, giving that part great detail and symbolic significance.

What angle?

Bird’s-eye view.

The shot is photographed directly from above. This type of shot can be disorienting, and the people photographed seem insignificant.

High angle.

This angle reduces the size of the objects photographed. A person photographed from this angle seems harmless and insignificant, but to a lesser extent than with the bird’s-eye view.

-> Eye-level shot.

The clearest view of an object, but seldom intrinsically dramatic, because it tends to be the norm.

Low angle.

This angle increases height and a sense of verticality, heightening the importance of the object photographed. A person shot from this angle is given a sense of power and respect.

Oblique angle.

For this angle, the camera is tilted laterally, giving the image a slanted appearance. Oblique angles suggest tension, transition, and impending movement. They are also called canted or Dutch angles.

What is the dominant color?

The use of color in this shot is symbolic. The scene is set in a warehouse. Both the set and the characters are in blues, blacks and whites.

This was intentional, allowing the scenes and shots with blood to have a high level of contrast.

What is the Lens/Filter/Stock?

Telephoto lens.

A lens that draws objects closer but also diminishes the illusion of depth.

Wide-angle lens.

A lens that takes in a broad area and increases the illusion of depth but sometimes distorts the edges of the image.

Fast film stock.

Highly sensitive to light, it can register an image with little illumination. However, the final product tends to be grainy.

Slow film stock.

Relatively insensitive to light, it requires a great deal of illumination. The final product tends to look polished.

The lens is not wide-angle because there isn’t a great sense of depth, nor are several planes in focus. The lens is probably long but not necessarily a telephoto lens because the depth isn’t inordinately compressed.

The stock is fast because of the grainy quality of the image.

Subsidiary Contrast; where does the eye go next?

The two guns.

How much visual information is packed into the image? Is the texture stark, moderate, or highly detailed?

Minimal clutter in the warehouse keeps the focus on a character-driven thriller.

What is the Composition?

Horizontal.

Compositions based on horizontal lines seem visually at rest and suggest placidity or peacefulness.

Vertical.

Compositions based on vertical lines seem visually at rest and suggest strength.

-> Diagonal.

Compositions based on diagonal, or oblique, lines seem dynamic and suggest tension or anxiety.

-> Binary.

Binary structures emphasize parallelism.

Triangle.

Triadic compositions stress the dynamic interplay among three main elements.

Circle.

Circular compositions suggest security and enclosure.

Is the form open or closed? Does the image suggest a window that arbitrarily isolates a fragment of the scene? Or a proscenium arch, in which the visual elements are carefully arranged and held in balance?

The most nebulous of all the categories of mise en scene, the type of form is determined by how consciously structured the mise en scene is. Open forms stress apparently simple techniques, because with these unself-conscious methods the filmmaker is able to emphasize the immediate, the familiar, the intimate aspects of reality. In open-form images, the frame tends to be deemphasized. In closed form images, all the necessary information is carefully structured within the confines of the frame. Space seems enclosed and self-contained rather than continuous.

One could argue this is a proscenium arch, because it is such a classic shot with parallels and juxtapositions.

Is the framing tight or loose? Do the characters have no room to move around, or can they move freely without impediments?

Shots where the characters are placed at the edges of the frame and have little room to move around within the frame are considered tight.

Longer shots, in which characters have room to move around within the frame, are considered loose and tend to suggest freedom.

Center-framed, giving us the entire scene and showing isolation, place and struggle.

Depth of Field. On how many planes is the image composed (how many are in focus)? Does the background or foreground comment in any way on the mid-ground?

Standard DOF, one background and clearly defined foreground.

Which way do the characters look vis-a-vis the camera?

An actor can be photographed in any of five basic positions, each conveying different psychological overtones.

Full-front (facing the camera):

The position with the most intimacy. The character is looking in our direction, inviting our complicity.

Quarter Turn:

The favored position of most filmmakers. This position offers a high degree of intimacy but with less emotional involvement than the full-front.

-> Profile (looking off frame left or right):

More remote than the quarter turn, the character in profile seems unaware of being observed, lost in his or her own thoughts.

Three-quarter Turn:

More anonymous than the profile, this position is useful for conveying a character’s unfriendly or antisocial feelings, for in effect, the character is partially turning his or her back on us, rejecting our interest.

Back to Camera:

The most anonymous of all positions, this position is often used to suggest a character’s alienation from the world. When a character has his or her back to the camera, we can only guess what’s taking place internally, conveying a sense of concealment, or mystery.

How much space is there between the characters?

Extremely close, for a gunfight.

The way people use space can be divided into four proxemic patterns.

Intimate distances.

The intimate distance ranges from skin contact to about eighteen inches away. This is the distance of physical involvement–of love, comfort, and tenderness between individuals.

-> Personal distances.

The personal distance ranges roughly from eighteen inches away to about four feet away. These distances tend to be reserved for friends and acquaintances. Personal distances preserve the privacy between individuals, yet these ranges don’t necessarily suggest exclusion, as intimate distances often do.

Social distances.

The social distance ranges from four feet to about twelve feet. These distances are usually reserved for impersonal business and casual social gatherings. It’s a friendly range in most cases, yet somewhat more formal than the personal distance.

Public distances.

The public distance extends from twelve feet to twenty-five feet or more. This range tends to be formal and rather detached.

DESIGN

COLOR

-

StudioBinder.com – CRI color rendering index

www.studiobinder.com/blog/what-is-color-rendering-index

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun. ”

www.pixelsham.com/2021/04/28/types-of-film-lights-and-their-efficiency

-

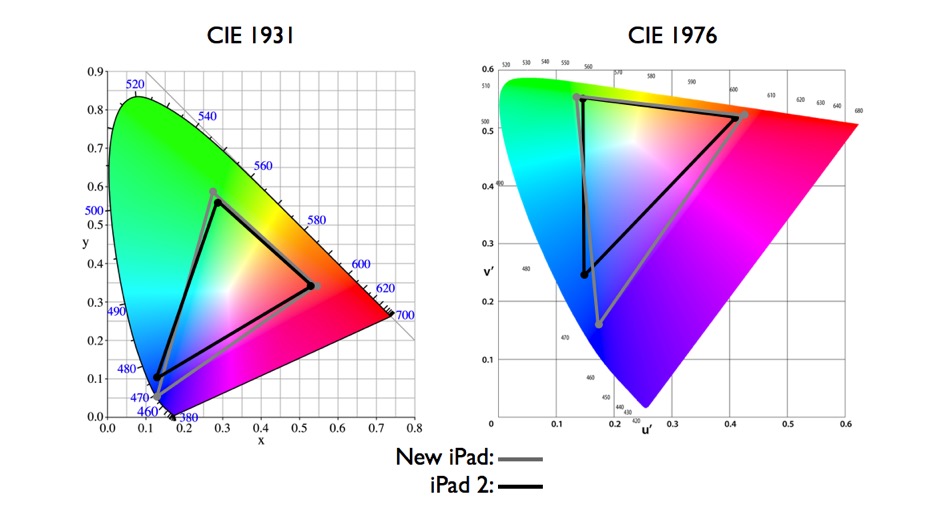

What is a Gamut or Color Space and why do I need to know about CIE

http://www.xdcam-user.com/2014/05/what-is-a-gamut-or-color-space-and-why-do-i-need-to-know-about-it/

In video terms, gamut normally refers to the full range of colours and brightness that can be either captured or displayed.

Generally speaking, all color gamut recommendations try to define a reasonable level of color representation based on available technology and hardware. Rec. 601 represents the old TVs. Rec. 709 is currently the most widely distributed solution. P3 is mainly available in movie theaters and is now being adopted in some of the best new 4K HDR TVs. Rec. 2020 (a wider space than P3 that improves on visible color representation) and ACES (the full coverage of visible color) are other common standards which see major hardware development these days.

To compare and visualize different solutions (across video and print), most developers use the CIE color model chart as a reference.

The CIE color model is a color space model created in 1931 by the International Commission on Illumination, known in French as the Commission Internationale de l’Éclairage (CIE). It is also known as the CIE XYZ color space or the CIE 1931 XYZ color space.

This chart represents the first defined quantitative link between distributions of wavelengths in the electromagnetic visible spectrum, and physiologically perceived colors in human color vision. Or basically, the range of color a typical human eye can perceive through visible light.

Note that while human perception is quite wide, and generally speaking biased towards greens (we are apes after all), the range of colors available in nature, generated through light reflection, tends to be a much smaller section. This is described by Pointer’s gamut.

In short, color gamut is a representation of color coverage, used to describe data stored in images against available hardware and viewer technologies.

Camera color encoding from

https://www.slideshare.net/hpduiker/acescg-a-common-color-encoding-for-visual-effects-applications

CIE 1976

http://bernardsmith.eu/computatrum/scan_and_restore_archive_and_print/scanning/

https://store.yujiintl.com/blogs/high-cri-led/understanding-cie1931-and-cie-1976

The CIE 1931 standard has been replaced by a CIE 1976 standard. Below we can see the significance of this.

The biggest issue observed with CIE 1931 is its lack of chromaticity uniformity: the three-dimensional color space in rectangular coordinates is not visually uniform.

The CIE 1976 space (also called CIELUV) was created by the CIE in 1976. It was put forward in an attempt to provide a more uniform color spacing than CIE 1931 for colors at approximately the same luminance.

The CIE 1976 standard colour space is more linear, and variations in perceived colour between different people have also been reduced. The disproportionately large green-turquoise area in CIE 1931, which cannot be generated with existing computer screens, has been reduced.

If we move from CIE 1931 to the CIE 1976 standard colour space we can see that the improvements made in the gamut for the “new” iPad screen (as compared to the “old” iPad 2) are more evident in the CIE 1976 colour space than in the CIE 1931 colour space, particularly in the blues from aqua to deep blue.
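As a rough illustration of the move from one diagram to the other, the sketch below converts a CIE 1931 (x, y) chromaticity into CIE 1976 (u', v') coordinates using the standard projective transform. The function name and the D65 white point sample value are illustrative choices, not something taken from the articles linked above.

```python
def xy_to_uv_prime(x, y):
    """Convert a CIE 1931 (x, y) chromaticity to CIE 1976 (u', v')."""
    denominator = -2.0 * x + 12.0 * y + 3.0
    return 4.0 * x / denominator, 9.0 * y / denominator


# D65 white point in CIE 1931 xy (assumed sample input for this sketch).
D65_XY = (0.3127, 0.3290)

u_prime, v_prime = xy_to_uv_prime(*D65_XY)
print(f"D65: u'={u_prime:.4f}, v'={v_prime:.4f}")
```

Plotting a display's primaries after this conversion is what produces the more evenly spaced 1976 diagram described above.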

https://dot-color.com/2012/08/14/color-space-confusion/

Despite its age, CIE 1931, named for the year of its adoption, remains a well-worn and familiar shorthand throughout the display industry. CIE 1931 is the primary language of customers. When a customer says that their current display “can do 72% of NTSC,” they implicitly mean 72% of NTSC 1953 color gamut as mapped against CIE 1931.
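A figure such as “72% of NTSC” is usually an area ratio between two gamut triangles on the CIE 1931 xy diagram. The sketch below shows that arithmetic under stated assumptions: the primaries are the commonly published NTSC 1953 and Rec. 709 chromaticities (they are not listed in the source article), the helper names are hypothetical, and the result is a plain area ratio, not a measure of how much the two triangles overlap.

```python
def triangle_area(primaries):
    """Area of the gamut triangle spanned by three (x, y) primaries (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = primaries
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


# Assumed CIE 1931 xy primaries (red, green, blue) for each standard.
NTSC_1953 = [(0.67, 0.33), (0.21, 0.71), (0.14, 0.08)]
REC_709 = [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)]


def percent_of_ntsc(primaries):
    """Gamut area as a percentage of the NTSC 1953 triangle area."""
    return 100.0 * triangle_area(primaries) / triangle_area(NTSC_1953)


print(f"Rec. 709 area vs NTSC 1953: {percent_of_ntsc(REC_709):.1f}%")
```

With these primaries the Rec. 709 triangle comes out at about 71% of the NTSC 1953 area, which is why figures in the low 70s are so common on display spec sheets.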

-

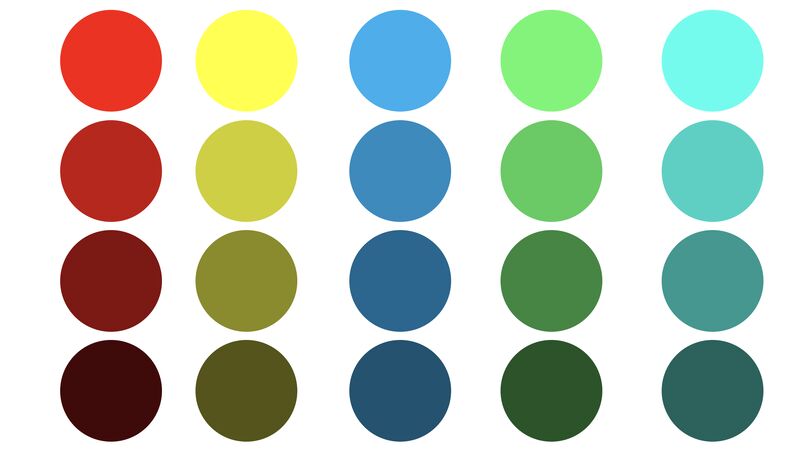

Is it possible to get a dark yellow

https://www.patreon.com/posts/102660674

https://www.linkedin.com/posts/stephenwestland_here-is-a-post-about-the-dark-yellow-problem-activity-7187131643764092929-7uCL

-

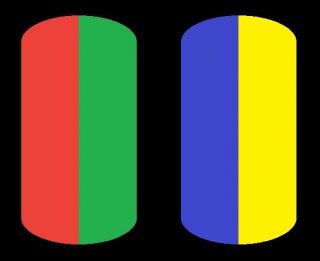

The Forbidden colors – Red-Green & Blue-Yellow: The Stunning Colors You Can’t See

www.livescience.com/17948-red-green-blue-yellow-stunning-colors.html

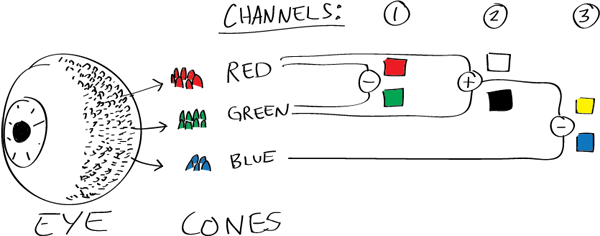

While the human eye has red, green, and blue-sensing cones, those cones are cross-wired in the retina to produce a luminance channel plus a red-green and a blue-yellow channel, and it’s data in that color space (known technically as “LAB”) that goes to the brain. That’s why we can’t perceive a reddish-green or a yellowish-blue, whereas such colors can be represented in the RGB color space used by digital cameras.

https://en.rockcontent.com/blog/the-use-of-yellow-in-data-design

The back of the retina is covered in light-sensitive neurons known as cone cells and rod cells. There are three types of cone cells, each sensitive to different ranges of light. These ranges overlap, but for convenience the cones are referred to as blue (short-wavelength), green (medium-wavelength), and red (long-wavelength). The rod cells are primarily used in low-light situations, so we’ll ignore those for now.

When light enters the eye and hits the cone cells, the cones get excited and send signals to the brain through the visual cortex. Different wavelengths of light excite different combinations of cones to varying levels, which generates our perception of color. You can see that the red cones are most sensitive to light, and the blue cones are least sensitive. The sensitivity of green and red cones overlaps for most of the visible spectrum.

Here’s how your brain takes the signals of light intensity from the cones and turns it into color information. To see red or green, your brain finds the difference between the levels of excitement in your red and green cones. This is the red-green channel.

To get “brightness,” your brain combines the excitement of your red and green cones. This creates the luminance, or black-white, channel. To see yellow or blue, your brain then finds the difference between this luminance signal and the excitement of your blue cones. This is the yellow-blue channel.
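The three channels described above can be written down as a toy calculation. This is only a sketch of the simplified model in the text, not a physiological simulation: the function name, the unweighted sums and the 0 to 1 excitation values are all assumptions made for illustration.

```python
def opponent_channels(red_cone, green_cone, blue_cone):
    """Toy opponent-channel model following the description in the text."""
    red_green = red_cone - green_cone      # positive leans red, negative leans green
    luminance = red_cone + green_cone      # the black-white (brightness) channel
    yellow_blue = luminance - blue_cone    # positive leans yellow, negative leans blue
    return red_green, luminance, yellow_blue


# Hypothetical cone excitations for a yellowish and a bluish stimulus.
print("yellowish:", opponent_channels(0.9, 0.9, 0.1))
print("bluish:   ", opponent_channels(0.1, 0.1, 0.9))
```

In this model a stimulus cannot push the red-green value both ways at once, which is the arithmetic behind the “forbidden” reddish-green.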

From the calculations made in the brain along those three channels, we get four basic colors: blue, green, yellow, and red. Seeing blue is what you experience when short-wavelength light excites the blue cones more than the green and red.

Seeing green happens when light excites the green cones more than the red cones. Seeing red happens when only the red cones are excited by long-wavelength light.

Here’s where it gets interesting. Seeing yellow is what happens when BOTH the green AND red cones are highly excited near their peak sensitivity. This is the biggest collective excitement that your cones ever have, aside from seeing pure white.

Notice that yellow occurs at peak intensity in the graph to the right. Further, the lens and cornea of the eye happen to block shorter wavelengths, reducing sensitivity to blue and violet light.

-

A Brief History of Color in Art

www.artsy.net/article/the-art-genome-project-a-brief-history-of-color-in-art

Of all the pigments that have been banned over the centuries, the color most missed by painters is likely Lead White.

This hue could capture and reflect a gleam of light like no other, though its production was anything but glamorous. The 17th-century Dutch method for manufacturing the pigment involved layering cow and horse manure over lead and vinegar. After three months in a sealed room, these materials would combine to create flakes of pure white. While scientists in the late 19th century identified lead as poisonous, it wasn’t until 1978 that the United States banned the production of lead white paint.

More reading:

www.canva.com/learn/color-meanings/

https://www.infogrades.com/history-events-infographics/bizarre-history-of-colors/

-

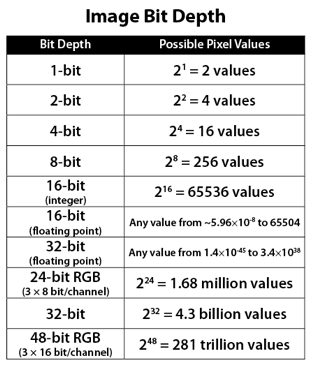

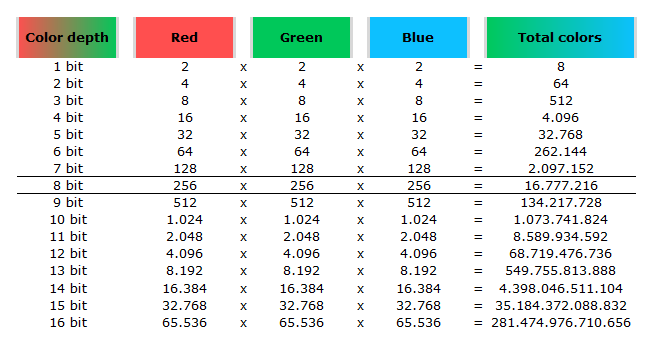

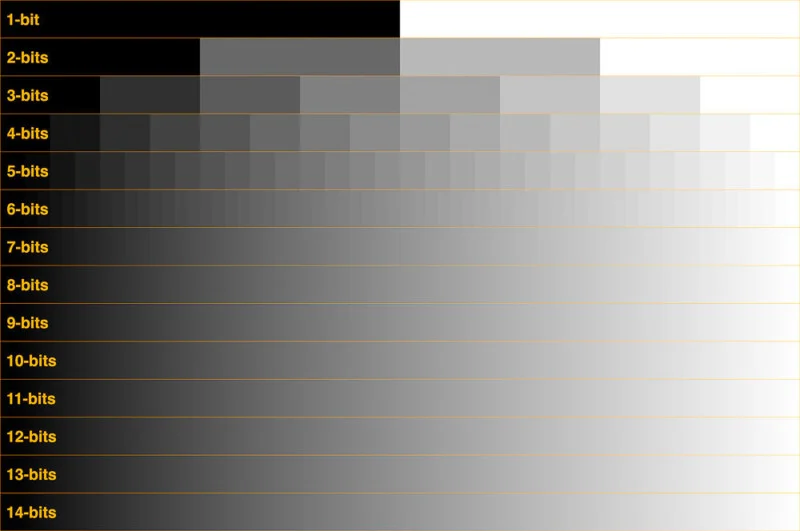

Image rendering bit depth

The terms 8-bit, 16-bit, 16-bit float, and 32-bit refer to different data formats used to store and represent image information, expressed as bits per channel.

https://en.wikipedia.org/wiki/Color_depth

In color technology, color depth, also known as bit depth, is either the number of bits used to indicate the color of a single pixel, OR the number of bits used for each color component of a single pixel.

When referring to a pixel, the concept can be defined as bits per pixel (bpp).

When referring to a color component, the concept can be defined as bits per component, bits per channel, bits per color (all three abbreviated bpc), and also bits per pixel component, bits per color channel or bits per sample (bps). Modern standards tend to use bits per component, but historical lower-depth systems used bits per pixel more often.

Color depth is only one aspect of color representation, expressing the precision with which the amount of each primary can be expressed; the other aspect is how broad a range of colors can be expressed (the gamut). The definition of both color precision and gamut is accomplished with a color encoding specification which assigns a digital code value to a location in a color space.

Here’s a simple explanation of each.

8-bit images (i.e. 24 bits per pixel for a color image) are considered Low Dynamic Range.

They can store around 5 stops of light, and each channel of a pixel carries a value from 0 (black) to 255 (white).

As a comparison, DSLR cameras can capture ~12-15 stops of light and they use RAW files to store the information.

16-bit: This integer format uses 16 bits of data to represent each color channel of a pixel. With 16 bits, you can have 65,536 discrete levels per channel, allowing for relatively high precision and smooth gradients. However, it has a limited dynamic range, meaning it cannot accurately represent extremely bright or dark values. It is commonly used for regular images and textures.

16-bit float: This format, commonly referred to as “half-precision,” uses the same 16 bits per channel but stores floating-point numbers instead of fixed integers. Floating-point numbers allow for a much larger dynamic range. In this case, the 16 bits are split between the color value and the exponent, which controls the range of values that can be represented. The 16-bit float format provides a wider dynamic range than regular 16-bit, making it useful for high-dynamic-range imaging (HDRI) and computations that require more range.

32-bit float: These images (i.e. 96 bits per pixel for a color image) are considered High Dynamic Range. This format, also known as “full-precision” or “float,” uses 32 bits per channel and offers the highest precision and dynamic range among these formats. With 32 bits, you have a significantly larger number of discrete levels, allowing for extremely accurate color representation, smooth gradients, and a wide range of brightness values. It is commonly used for professional rendering, visual effects, and scientific applications where maximum precision is required.
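The numbers quoted above follow from simple arithmetic, sketched below. The helper names are illustrative, and the three-channel (RGB, no alpha) default is an assumption.

```python
def integer_levels(bits_per_channel):
    """Number of discrete code values an integer channel can hold."""
    return 2 ** bits_per_channel


def bits_per_pixel(bits_per_channel, channels=3):
    """Total bits per pixel for an image with the given channel count (RGB assumed)."""
    return bits_per_channel * channels


for bits in (8, 16):
    print(f"{bits}-bit integer: {integer_levels(bits):,} levels per channel, "
          f"{bits_per_pixel(bits)} bits per pixel")

# Float formats are not described by a simple level count, only by their size.
print(f"32-bit float: {bits_per_pixel(32)} bits per pixel")
```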

Bits and HDR coverage

High Dynamic Range (HDR) images are designed to capture a wide range of luminance values, from the darkest shadows to the brightest highlights, in order to reproduce a scene with more accuracy and detail. The bit depth of an image refers to the number of bits used to represent each pixel’s color information. When comparing 32-bit float and 16-bit float HDR images, the drop in accuracy primarily relates to the precision of the color information.

A 32-bit float HDR image offers a higher level of precision compared to a 16-bit float HDR image. In a 32-bit float format, each color channel (red, green, and blue) is represented by 32 bits, allowing for a larger range of values to be stored. This increased precision enables the image to retain more details and subtleties in color and luminance.

On the other hand, a 16-bit float HDR image utilizes 16 bits per color channel, resulting in a reduced range of values that can be represented. This lower precision leads to a loss of fine details and color nuances, especially in highly contrasted areas of the image where there are significant differences in luminance.

The drop in accuracy between 32-bit and 16-bit float HDR images becomes more noticeable as the exposure range of the scene increases. Exposure range refers to the span between the darkest and brightest areas of an image. In scenes with a limited exposure range, where the luminance differences are relatively small, the loss of accuracy may not be as prominent or perceptible. These images usually are around 8-10 exposure levels.

However, in scenes with a wide exposure range, such as a landscape with deep shadows and bright highlights, the reduced precision of a 16-bit float HDR image can result in visible artifacts like color banding, posterization, and loss of detail in both shadows and highlights. The image may exhibit abrupt transitions between tones or colors, which can appear unnatural and less realistic.

To provide a rough estimate, it is often observed that exposure values beyond approximately ±6 to ±8 stops from the middle gray (18% reflectance) may be more prone to accuracy issues in a 16-bit float format. This range may vary depending on the specific implementation and encoding scheme used.

To summarize, the drop in accuracy between 32-bit and 16-bit float HDR images is mainly related to the reduced precision of color information. This decrease in precision becomes more apparent in scenes with a wide exposure range, affecting the representation of fine details and leading to visible artifacts in the image.

In practice, this means that exposure values beyond a certain range will experience a loss of accuracy and detail when stored in a 16-bit float format. The exact range at which this loss occurs depends on the encoding scheme and the specific implementation. However, in general, extremely bright or extremely dark values that fall outside the representable range may be subject to quantization errors, resulting in loss of detail, banding, or other artifacts.
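For reference, the hard limits of the representable range can be worked out directly. The sketch below assumes the 16-bit float format is IEEE 754 binary16 (the text does not name the encoding) and takes middle gray as a linear value of 0.18; both are assumptions, as are the helper names. It uses Python's `struct` half-precision format code `e` to round-trip a value.

```python
import math
import struct

MIDDLE_GRAY = 0.18             # assumed linear value for 18% reflectance
HALF_MAX = 65504.0             # largest finite binary16 value
HALF_MIN_NORMAL = 2.0 ** -14   # smallest normal (full-precision) binary16 value


def to_half(value):
    """Round-trip a Python float through IEEE 754 binary16 storage."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def stops_from_middle_gray(value):
    """Distance from middle gray in stops (each stop is a factor of two)."""
    return math.log2(value / MIDDLE_GRAY)


stops_above = stops_from_middle_gray(HALF_MAX)
stops_below = -stops_from_middle_gray(HALF_MIN_NORMAL)
relative_error = abs(to_half(MIDDLE_GRAY) - MIDDLE_GRAY) / MIDDLE_GRAY

print(f"Stops above middle gray before overflow: {stops_above:.1f}")
print(f"Stops below middle gray at full precision: {stops_below:.1f}")
print(f"Relative rounding error at middle gray: {relative_error:.2e}")
```

Values beyond the upper limit cannot be stored as finite numbers at all, while values below the lower limit fall into the subnormal range, where precision degrades progressively.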

HDRs used for lighting purposes are usually slightly convolved to improve sampling speed and remove specular artefacts. For that reason, 16-bit float HDRIs tend to be the most used in CG production cycles.

LIGHTING

-

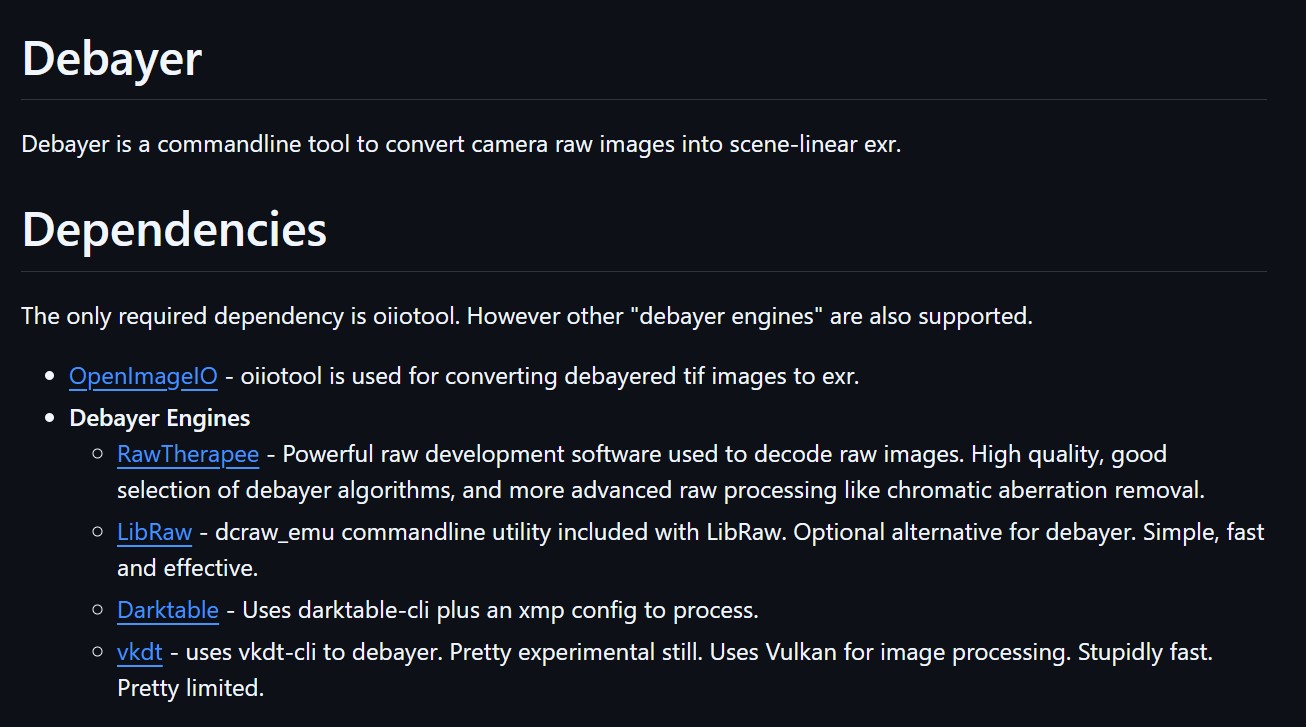

Debayer – A free command line tool to convert camera raw images into scene-linear exr


https://github.com/jedypod/debayer

The only required dependency is oiiotool. However, other “debayer engines” are also supported.

- OpenImageIO – oiiotool is used for converting debayered tif images to exr.

- Debayer Engines

  - RawTherapee – Powerful raw development software used to decode raw images. High quality, good selection of debayer algorithms, and more advanced raw processing like chromatic aberration removal.

  - LibRaw – dcraw_emu commandline utility included with LibRaw. Optional alternative for debayer. Simple, fast and effective.

  - Darktable – Uses darktable-cli plus an xmp config to process.

  - vkdt – Uses vkdt-cli to debayer. Pretty experimental still. Uses Vulkan for image processing. Stupidly fast. Pretty limited.

-

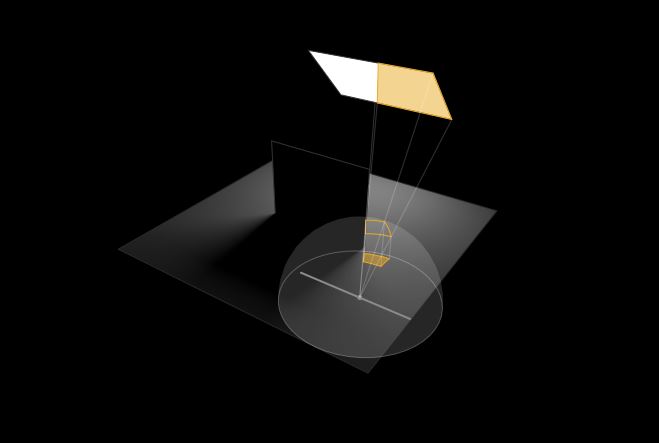

HDRI shooting and editing by Xuan Prada and Greg Zaal

www.xuanprada.com/blog/2014/11/3/hdri-shooting

http://blog.gregzaal.com/2016/03/16/make-your-own-hdri/

http://blog.hdrihaven.com/how-to-create-high-quality-hdri/

Shooting checklist

- Full coverage of the scene (fish-eye shots)

- Backplates for look-development (including ground or floor)

- Macbeth chart for white balance

- Grey ball for lighting calibration

- Chrome ball for lighting orientation

- Basic scene measurements

- Material samples

- Individual HDR artificial lighting sources if required

Methodology

- Plant the tripod where the action happens, stabilise it and level it

- Set manual focus

- Set white balance

- Set ISO

- Set raw+jpg

- Set aperture

- Meter exposure

- Set neutral exposure

- Read histogram and adjust neutral exposure if necessary

- Shoot slate (operator name, location, date, time, project code name, etc)

- Set auto bracketing

- Shoot 5 to 7 exposures, 3 stops apart, covering the whole environment

- Place the akromatic kit where the tripod was placed, and take 3 exposures. Keep half of the grey sphere hit by the sun and half in shade.

- Place the Macbeth chart 1m away from tripod on the floor and take 3 exposures

- Take backplates and ground/floor texture references

- Shoot reference materials

- Write down measurements of the scene, especially if you are shooting interiors.

- If shooting artificial lights take HDR samples of each individual lighting source.
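The bracketing step in the list above can be sketched as a small calculation. This assumes the brackets are centred on the neutral exposure and that only shutter time changes while aperture and ISO stay fixed; the function names and the 1/60 s neutral exposure are hypothetical, and a real camera will snap to its nearest nominal shutter speeds.

```python
def bracket_offsets(count, step_stops=3):
    """Exposure offsets in stops, centred on the neutral exposure (offset 0)."""
    middle = (count - 1) / 2
    return [(i - middle) * step_stops for i in range(count)]


def bracket_shutter_times(neutral_seconds, count, step_stops=3):
    """Shutter times in seconds; each stop doubles or halves the exposure time."""
    return [neutral_seconds * 2 ** offset
            for offset in bracket_offsets(count, step_stops)]


for count in (5, 7):
    offsets = bracket_offsets(count)
    span = offsets[-1] - offsets[0]
    print(f"{count} brackets: offsets {offsets}, total span {span:.0f} stops")

# Hypothetical neutral exposure of 1/60 s with 5 brackets.
print([f"{t:.5f}s" for t in bracket_shutter_times(1 / 60, 5)])
```

Five brackets at 3 stops apart span 12 stops between the darkest and brightest frame, and seven brackets span 18.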

Exposures starting point

- Day light sun visible ISO 100 F22

- Day light sun hidden ISO 100 F16

- Cloudy ISO 320 F16

- Sunrise/Sunset ISO 100 F11

- Interior well lit ISO 320 F16

- Interior ambient bright ISO 320 F10

- Interior bad light ISO 640 F10

- Interior ambient dark ISO 640 F8

- Low light situation ISO 640 F5

NOTE: The goal is to clean the initial individual brackets before or at merging time as much as possible.

This means:

- keeping original shooting metadata

- de-fringing

- removing aberration (through camera lens data or automatically)

- at 32 bit

- in ACEScg (or ACES) wherever possible

Tips for Using the Chromatic Ball in VFX Projects

https://www.linkedin.com/posts/bellrodrigo_here-are-the-tips-for-using-the-chromatic-activity-7200950595438940160-AGBp

The chromatic ball is an invaluable tool in VFX work, helping to capture lighting and reflection data crucial for integrating CGI elements seamlessly. Here are some tips to maximize its effectiveness:

1. **Positioning**:

– Place the chromatic ball in the same lighting conditions as the main subject. Ensure it is visible in the camera frame but not obstructing the main action.

– Ideally, place the ball where the CGI elements will be integrated to match the lighting and reflections accurately.

2. **Recording Reference Footage**:

– Capture reference footage of the chromatic ball at the beginning and end of each scene or lighting setup. This ensures you have consistent lighting data for the entire shoot.

3. **Consistent Angles**:

– Use consistent camera angles and heights when recording the chromatic ball. This helps in comparing and matching lighting setups across different shots.

4. **Combine with a Gray Ball**:

– Use a gray ball alongside the chromatic ball. The gray ball provides a neutral reference for exposure and color balance, complementing the chromatic ball’s reflection data.

5. **Marking Positions**:

– Mark the position of the chromatic ball on the set to ensure consistency when shooting multiple takes or different camera angles.

6. **Lighting Analysis**:

– Analyze the chromatic ball footage to understand the light sources, intensity, direction, and color temperature. This information is crucial for creating realistic CGI lighting and shadows.

7. **Reflection Analysis**:

– Use the chromatic ball to capture the environment’s reflections. This helps in accurately reflecting the CGI elements within the same scene, making them blend seamlessly.

8. **Use HDRI**:

– Capture High Dynamic Range Imagery (HDRI) of the chromatic ball. HDRI provides detailed lighting information and can be used to light CGI scenes with greater realism.

9. **Communication with VFX Team**:

– Ensure that the VFX team is aware of the chromatic ball’s data and how it was captured. Clear communication ensures that the data is used effectively in post-production.

10. **Post-Production Adjustments**:

– In post-production, use the chromatic ball data to adjust the CGI elements’ lighting and reflections. This ensures that the final output is visually cohesive and realistic.

-

7 Easy Portrait Lighting Setups

Butterfly

Loop

Rembrandt

Split

Rim

Broad

Short

-

Aputure AL-F7 – dimmable Led Video Light, CRI95+, 3200-9500K

High CRI of ≥95

256 LEDs with 45° beam angle

3200 to 9500K variable color temperature

1 to 100% Stepless Dimming, 1500 Lux Brightness at 3.3′

LCD Info Screen. Powered by an L-series battery, D-Tap, or USB-C

Because the light has a variable color range of 3200 to 9500K, when the light is set to 5500K (daylight balanced) both sets of LEDs are on at full, providing the maximum brightness from this fixture when compared to using the light at 3200 or 9500K.

The LCD screen provides information on the fixture’s output as well as the charge state of the battery. The screen also indicates whether the adjustment knob is controlling brightness or color temperature. To switch from brightness to CCT or CCT to brightness, just apply a short press to the adjustment knob.

The included cold shoe ball joint adapter enables mounting the light to your camera’s accessory shoe via the 1/4″-20 threaded hole on the fixture. In addition, the bottom of the cold shoe foot features a 3/8″-16 threaded hole, and includes a 3/8″-16 to 1/4″-20 reducing bushing.

-

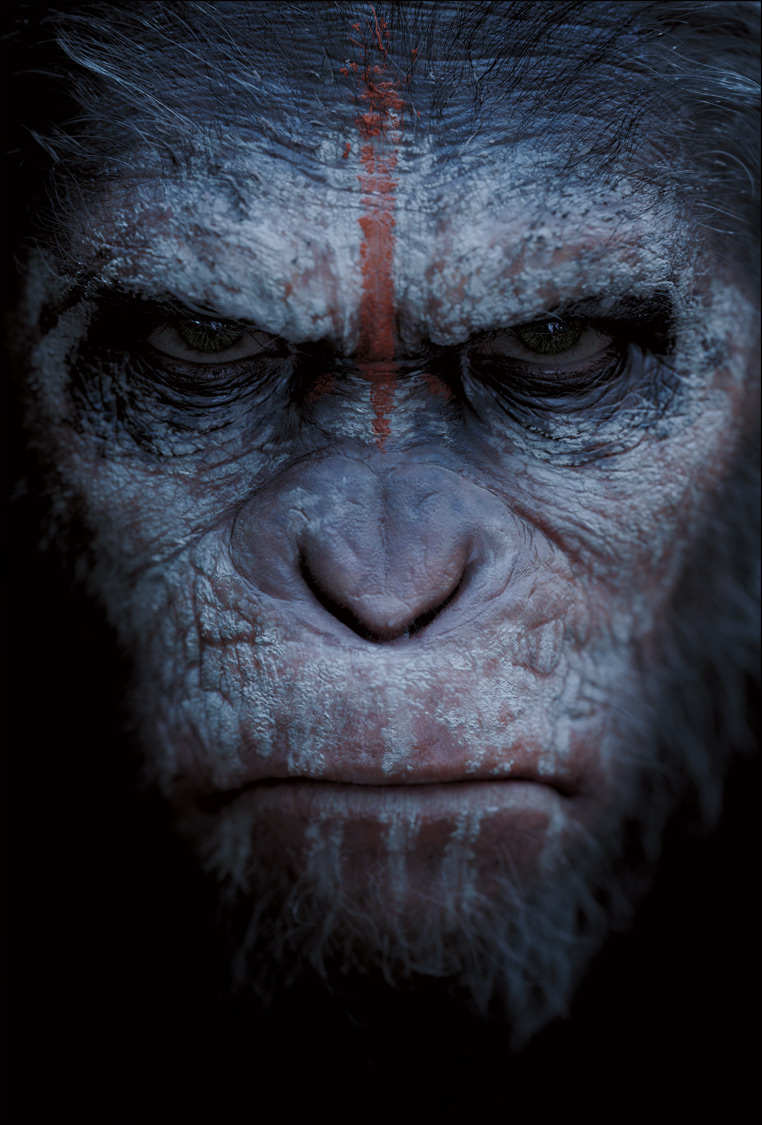

Ethan Roffler interviews CG Supervisor Daniele Tosti

Ethan Roffler

I recently had the honor of interviewing this VFX genius and gained great insight into what it takes to work in the entertainment industry. Keep in mind, these questions are coming from an artist’s perspective but can be applied to any creative individual looking for some wisdom from a professional. So grab a drink, sit back, and enjoy this fun and insightful conversation.

Ethan

To start, I just wanted to say thank you so much for taking the time for this interview!

Daniele

My pleasure.

When I started my career I struggled to find help. Even people in the industry at the time were not that helpful. Because of that, I decided very early on that I was going to do exactly the opposite. I spend most of my weekends talking or helping students. ;)

Ethan

That’s awesome! I have also come across the same struggle! Just a heads up, this will probably be the most informal interview you’ll ever have haha! Okay, so let’s start with a small introduction!

Daniele

Short introduction: I worked very hard and got lucky enough to work on great shows with great people. ;) Slightly longer version: I started working for a TV channel, very early, while I was learning about CG. Slowly made my way across the world, working alongside great people on amazing shows. I learned that to be successful in this business, you have to really love what you do as much as respecting the people around you. What you do will improve the final product; the way you work with people will make a difference in your life.

Ethan

How long have you been an artist?

Daniele

Loaded question. I believe I am still trying and craving to be one. After each production I finish I realize how much I still do not know. And how many things I would like to try. I guess in my CG Sup and generalist world, being an artist is about learning as much about the latest technologies and production cycles as I can, then putting that in practice. Having said that, I do consider myself a cinematographer first, as I have been doing that for about 25 years now.

Ethan

Words of true wisdom: the more I know, the less I know :) How did you get your start in the industry?

How did you break into such a competitive field?

Daniele

There were not many schools when I started. It was all about a few magazines, some books, and pushing software around trying to learn how to make pretty images. Opportunities opened because of that knowledge! The true break was learning to work hard to achieve a Suspension of Disbelief in my work that people would recognize as such. It’s not something everyone can do, but I was fortunate to not be scared of working hard, being a quick learner and having very good supervisors and colleagues to learn from.

Ethan

Which do you think is better, having a solid art degree or a strong portfolio?

Daniele

Very good question. A strong portfolio will get you a job now. A solid degree will likely get you a job for a longer period. Let me digress here: working as an artist is not about being an artist, it’s about making money as an artist. Most people fail to make that distinction and either have a poor career or lack the understanding to build a stable one. One should never mix art with working as an artist. You can do both only if you understand business and are fair to yourself.

Ethan

That’s probably the most helpful answer to that question I have ever heard.

What’s some advice you can offer to someone just starting out who wants to break into the industry?

Daniele

Breaking into the industry is not just about knowing your art. It’s about knowing good business practices. Prepare a good demo reel based on the skill you are applying for; research all the places where you want to apply and why; send out as many reels as you can; follow up each reel with a phone call. Business is all about right time, right place.

Ethan

A follow-up question to that is: Would you consider it a bad practice to send your demo reels out in mass quantity rather than focusing on a handful of companies to research and apply for?

Daniele

Depends how desperate you are… I would say research is a must. To improve your options, you need to know which company is working on what and what skills they are after. If you were selling vacuum cleaners you probably would not want to waste energy contacting shoemakers or cattle farmers.

Ethan

What do you think the biggest killer of creativity and productivity is for you?

Daniele

Money… If you were thinking as an artist. ;) If you were thinking about making money as an artist… then I would say “thinking that you work alone”.

Ethan

Best. Answer. Ever.

What are ways you fight complacency and maintain fresh ideas, outlooks, and perspectives?

Daniele

Two things: Challenge yourself to go outside your comfort zone. And think outside of the box.

Ethan

What are the ways/habits you have that challenge yourself to get out of your comfort zone and think outside the box?

Daniele

If you think you are a good character painter, pick up a camera and go take pictures of amazing landscapes. If you think you are good only at painting or sketching, learn how to code in Python. If you cannot solve a problem, be it a project or a person, learn to ask for help or learn to look at the problem from various perspectives. If you are an introvert, learn to be an extrovert. And vice versa. And so on…

Ethan

How do you avoid burnout?

Daniele

Oh… I wish I had learned about this earlier. I think anyone who has a passion for something is at risk of burning out. Artists more than many, because we see the world differently and our passion goes deep. You avoid burnout by remembering that you are on a long-term plan and that you have an obligation to pay or repay your talent by supporting and cherishing yourself and your family, not your paycheck. You do this by treating your art as a business: use business skills when dealing with your career, and use artistic skills only when you are dealing with a project itself.

Ethan

Looking back, what was a big defining moment for you?

Daniele

Recognizing that the people around you, whether colleagues, friends or family, come first.

It changed my career overnight.

Ethan

Who are some of your personal heroes?

Daniele

Too many to list. Most recently… James Cameron; Joe Letteri; Lawrence Krauss; Richard Dawkins. Because they all mix science, art, and poetry in their own way.

Ethan

Last question:

What’s your dream job? ;)

Daniele

Teaching artists to be better at being business people… as it will help us all improve our lives and the careers we took…

Being a VFX artist is fundamentally based on mistrust.

This is because schedules, pipelines, technology, creative calls… all have a native and naive instability to them that causes everyone to grow a genuine but beneficial lack of trust in the status quo. This is a fine balancing act to build into your character. The VFX motto, “Love everyone but trust no one”, is born from that.
