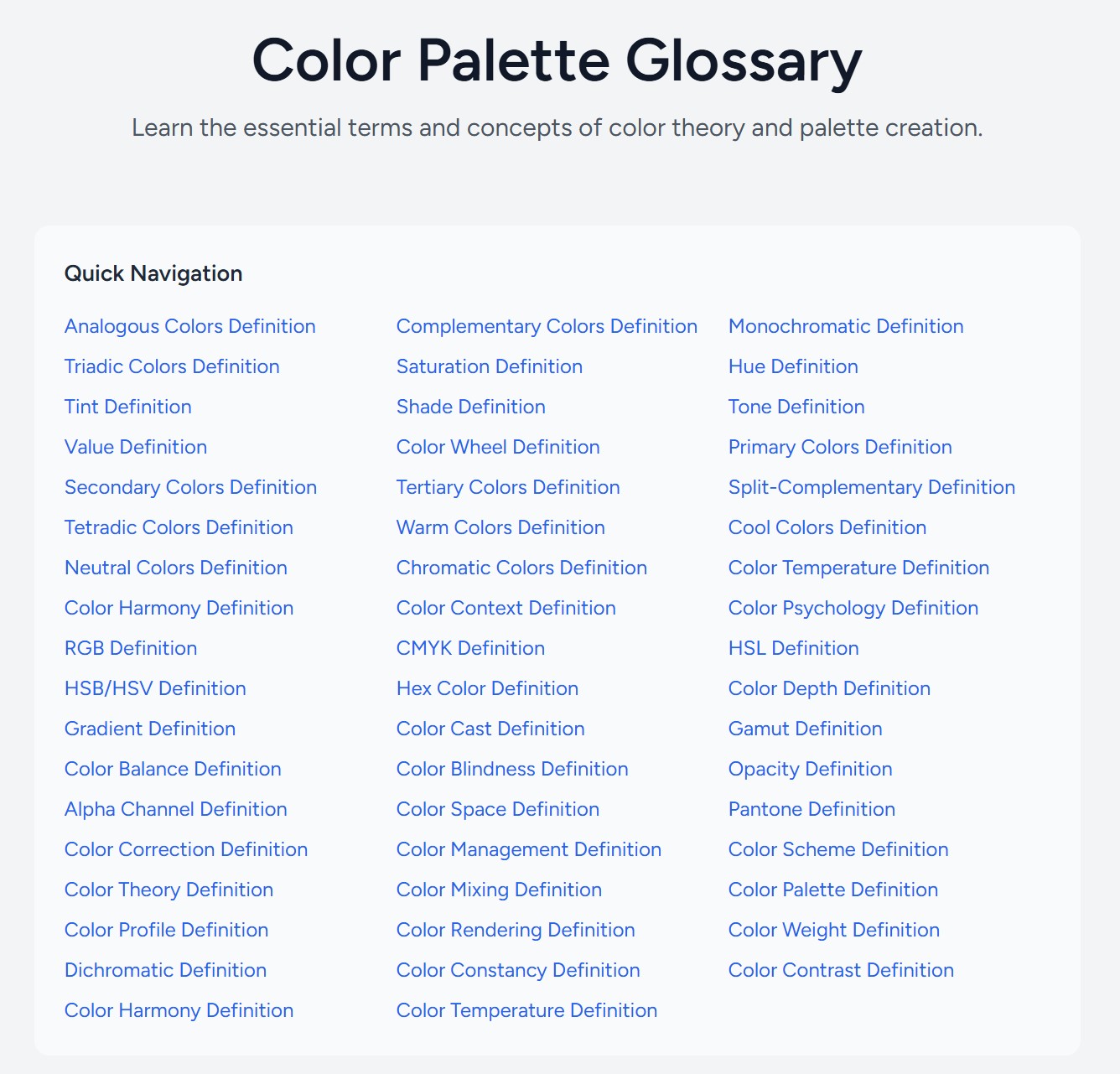

COMPOSITION

-

7 Commandments of Film Editing and composition

1. Watch every frame of raw footage twice. On the second viewing, take notes. If you skip this and start developing a scene prematurely, you do a big disservice to yourself and to the director, actors and production crew.

2. Nurture the relationship with the director. You are the secondary person in the relationship. Stay calm and continually offer solutions. Get the main intention of the film from the director as soon as possible.

3. Organize your media so that you can find any shot instantly.

4. Factor in extra time for renders, exports, errors and crashes.

5. Attempt edits and ideas that shouldn’t work. They just might. Until you try one and watch it, you won’t know. Don’t rule out ideas just because they don’t make sense in your mind.

6. Spend more time on your audio. It’s the glue of your edit. AUDIO SAVES EVERYTHING. Create fluid and seamless audio under your video.

7. Make cuts for the scene, but always in the context of the whole film. Keep both a macro and a micro view at all times.

-

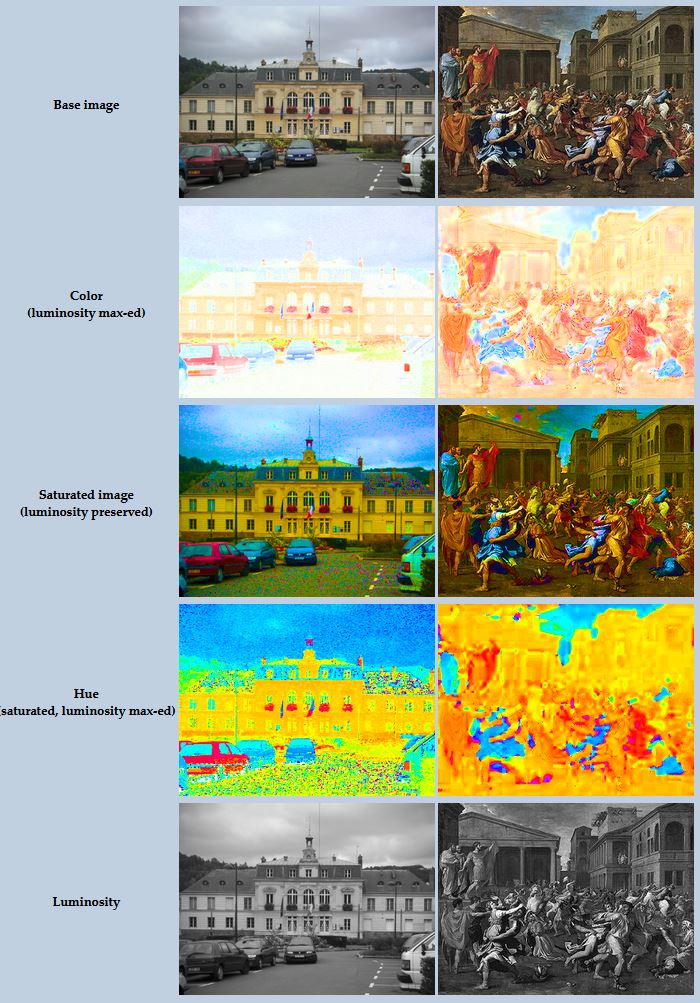

Composition – cinematography Cheat Sheet

Where is our eye attracted first? Why?

Size. Focus. Lighting. Color.

Size. Mr. White (Harvey Keitel) on the right.

Focus. He’s one of the two objects in focus.

Lighting. Mr. White is large and in focus and Mr. Pink (Steve Buscemi) is highlighted by a shaft of light.

Color. Both are black and white but the red on Mr. White’s shirt now really stands out.

What type of lighting?

-> High key lighting.

Features bright, even illumination and few conspicuous shadows. This lighting key is often used in musicals and comedies.

Low key lighting.

Features diffused shadows and atmospheric pools of light. This lighting key is often used in mysteries and thrillers.

High contrast lighting.

Features harsh shafts of lights and dramatic streaks of blackness. This type of lighting is often used in tragedies and melodramas.

What type of shot?

Extreme long shot

Taken from a great distance, showing much of the locale. If people are included in these shots, they usually appear as mere specks.

-> Long shot

Corresponds to the space between the audience and the stage in a live theater. The long shots show the characters and some of the locale.

Full shot

Range with just enough space to contain the human body in full. The full shot shows the character and a minimal amount of the locale.

Medium shot

Shows the human figure from the knees or waist up.

Close-Up

Concentrates on a relatively small object and shows very little, if any, locale.

Extreme close-up

Focuses on an unnaturally small portion of an object, giving that part great detail and symbolic significance.

What angle?

Bird’s-eye view.

The shot is photographed directly from above. This type of shot can be disorienting, and the people photographed seem insignificant.

High angle.

This angle reduces the size of the objects photographed. A person photographed from this angle seems harmless and insignificant, but to a lesser extent than with the bird’s-eye view.

-> Eye-level shot.

The clearest view of an object, but seldom intrinsically dramatic, because it tends to be the norm.

Low angle.

This angle increases height and a sense of verticality, heightening the importance of the object photographed. A person shot from this angle is given a sense of power and respect.

Oblique angle.

For this angle, the camera is tilted laterally, giving the image a slanted appearance. Oblique angles suggest tension, transition, and impending movement. They are also called canted or Dutch angles.

What is the dominant color?

The use of color in this shot is symbolic. The scene is set in a warehouse. Both the set and the characters are blues, blacks and whites.

This was intentional, allowing the scenes and shots with blood to have a great level of contrast.

What is the Lens/Filter/Stock?

Telephoto lens.

A lens that draws objects closer but also diminishes the illusion of depth.

Wide-angle lens.

A lens that takes in a broad area and increases the illusion of depth but sometimes distorts the edges of the image.

Fast film stock.

Highly sensitive to light, it can register an image with little illumination. However, the final product tends to be grainy.

Slow film stock.

Relatively insensitive to light, it requires a great deal of illumination. The final product tends to look polished.

The lens is not wide-angle because there isn’t a great sense of depth, nor are several planes in focus. The lens is probably long but not necessarily a telephoto lens because the depth isn’t inordinately compressed.

The stock is fast because of the grainy quality of the image.

Subsidiary Contrast; where does the eye go next?

The two guns.

How much visual information is packed into the image? Is the texture stark, moderate, or highly detailed?

Minimalist clutter in the warehouse allows a focus on a character driven thriller.

What is the Composition?

Horizontal.

Compositions based on horizontal lines seem visually at rest and suggest placidity or peacefulness.

Vertical.

Compositions based on vertical lines seem visually at rest and suggest strength.

-> Diagonal.

Compositions based on diagonal, or oblique, lines seem dynamic and suggest tension or anxiety.

-> Binary.

Binary structures emphasize parallelism.

Triangle.

Triadic compositions stress the dynamic interplay among three main elements.

Circle.

Circular compositions suggest security and enclosure.

Is the form open or closed? Does the image suggest a window that arbitrarily isolates a fragment of the scene? Or a proscenium arch, in which the visual elements are carefully arranged and held in balance?

The most nebulous of all the categories of mise en scene, the type of form is determined by how consciously structured the mise en scene is. Open forms stress apparently simple techniques, because with these unself-conscious methods the filmmaker is able to emphasize the immediate, the familiar, the intimate aspects of reality. In open-form images, the frame tends to be deemphasized. In closed form images, all the necessary information is carefully structured within the confines of the frame. Space seems enclosed and self-contained rather than continuous.

One could argue this is a proscenium arch, because it is such a classic shot with parallels and juxtapositions.

Is the framing tight or loose? Do the characters have no room to move around, or can they move freely without impediments?

Shots where the characters are placed at the edges of the frame and have little room to move around within the frame are considered tight.

Longer shots, in which characters have room to move around within the frame, are considered loose and tend to suggest freedom.

Center-framed giving us the entire scene showing isolation, place and struggle.

Depth of Field. On how many planes is the image composed (how many are in focus)? Does the background or foreground comment in any way on the mid-ground?

Standard DOF, one background and clearly defined foreground.

Which way do the characters look vis-a-vis the camera?

An actor can be photographed in any of five basic positions, each conveying different psychological overtones.

Full-front (facing the camera):

The position with the most intimacy. The character is looking in our direction, inviting our complicity.

Quarter Turn:

The favored position of most filmmakers. This position offers a high degree of intimacy but with less emotional involvement than the full-front.

-> Profile (looking off the frame left or right):

More remote than the quarter turn, the character in profile seems unaware of being observed, lost in his or her own thoughts.

Three-quarter Turn:

More anonymous than the profile, this position is useful for conveying a character’s unfriendly or antisocial feelings, for in effect, the character is partially turning his or her back on us, rejecting our interest.

Back to Camera:

The most anonymous of all positions, this position is often used to suggest a character’s alienation from the world. When a character has his or her back to the camera, we can only guess what’s taking place internally, conveying a sense of concealment, or mystery.

How much space is there between the characters?

Extremely close, for a gunfight.

The way people use space can be divided into four proxemic patterns.

Intimate distances.

The intimate distance ranges from skin contact to about eighteen inches away. This is the distance of physical involvement: of love, comfort, and tenderness between individuals.

-> Personal distances.

The personal distance ranges roughly from eighteen inches away to about four feet away. These distances tend to be reserved for friends and acquaintances. Personal distances preserve the privacy between individuals, yet these ranges don’t necessarily suggest exclusion, as intimate distances often do.

Social distances.

The social distance ranges from four feet to about twelve feet. These distances are usually reserved for impersonal business and casual social gatherings. It’s a friendly range in most cases, yet somewhat more formal than the personal distance.

Public distances.

The public distance extends from twelve feet to twenty-five feet or more. This range tends to be formal and rather detached.

DESIGN

-

Interactive Maps of Earthquakes around the world

https://ralucanicola.github.io/JSAPI_demos/earthquakes

https://ralucanicola.github.io/JSAPI_demos/earthquakes-depth

https://ralucanicola.github.io/JSAPI_demos/ridgecrest-earthquake

https://ralucanicola.github.io/JSAPI_demos/last-earthquakes

COLOR

-

A Brief History of Color in Art

www.artsy.net/article/the-art-genome-project-a-brief-history-of-color-in-art

Of all the pigments that have been banned over the centuries, the color most missed by painters is likely Lead White.

This hue could capture and reflect a gleam of light like no other, though its production was anything but glamorous. The 17th-century Dutch method for manufacturing the pigment involved layering cow and horse manure over lead and vinegar. After three months in a sealed room, these materials would combine to create flakes of pure white. While scientists in the late 19th century identified lead as poisonous, it wasn’t until 1978 that the United States banned the production of lead white paint.

More reading:

www.canva.com/learn/color-meanings/

https://www.infogrades.com/history-events-infographics/bizarre-history-of-colors/

-

About green screens

hackaday.com/2015/02/07/how-green-screen-worked-before-computers/

www.newtek.com/blog/tips/best-green-screen-materials/

www.chromawall.com/blog//chroma-key-green

Chroma Key Green, the color of green screens, is also known as Chroma Green and corresponds approximately to 354C in the Pantone color matching system (PMS).

Chroma Green can be broken down in many different ways. Here is green screen green as other values useful for both physical and digital production:

Green Screen as RGB Color Value: 0, 177, 64

Green Screen as CMYK Color Value: 81, 0, 92, 0

Green Screen as Hex Color Value: #00b140

Green Screen as Websafe Color Value: #009933

Chroma Key Green is reasonably close to an 18% gray reflectance.

Illuminate your green screen with a uniform source, with less than 2/3 EV of variation across the screen.
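As a quick sanity check of the values listed above, the following Python sketch derives the hex and web-safe codes from the RGB triplet and tests a set of light-meter readings against the 2/3 EV limit. The function names and the sample readings are hypothetical; the sketch also assumes that "web-safe" means snapping each channel to the nearest multiple of 51 (0x33), and that variation in EV is the base-2 logarithm of the brightest reading over the darkest.

```python
import math

CHROMA_GREEN_RGB = (0, 177, 64)  # RGB value quoted in the text


def rgb_to_hex(r, g, b):
    """Format an 8-bit RGB triplet as a lowercase hex color."""
    return "#{:02x}{:02x}{:02x}".format(r, g, b)


def nearest_websafe(r, g, b):
    """Snap each channel to the nearest multiple of 51 (assumed web-safe rule)."""
    def snap(channel):
        return int(round(channel / 51.0)) * 51
    return rgb_to_hex(snap(r), snap(g), snap(b))


def ev_spread(readings):
    """Spread of a set of positive light readings, in stops (EV)."""
    return math.log2(max(readings) / min(readings))


def is_evenly_lit(readings, max_spread_ev=2.0 / 3.0):
    """True when the readings vary by less than the given EV spread."""
    return ev_spread(readings) < max_spread_ev


if __name__ == "__main__":
    print(rgb_to_hex(*CHROMA_GREEN_RGB))
    print(nearest_websafe(*CHROMA_GREEN_RGB))
    # Hypothetical incident readings (any linear unit) across the screen.
    print(is_evenly_lit([100.0, 120.0, 150.0]))
```

The readings can be in any linear unit (lux, foot-candles, linear pixel values), since only their ratio matters.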

The level of brightness at any given f-stop should be equivalent to a 90% white card under the same lighting.

-

Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacy

nofilmschool.com/types-of-film-lights

“Not every light performs the same way. Lights and lighting are tricky to handle. You have to plan for every circumstance. But the good news is, lighting can be adjusted. Let’s look at different factors that affect lighting in every scene you shoot. ”

Use CRI, Luminous Efficacy and color temperature controls to match your needs.

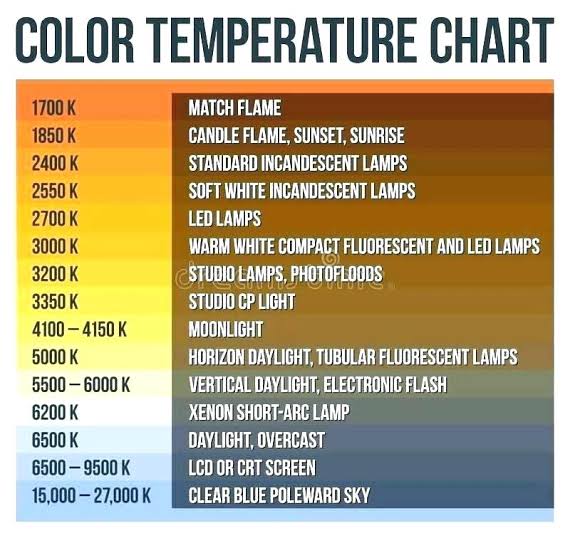

Color Temperature

Color temperature describes the “color” of white light from a source by comparing it to the light radiated by a perfect black body at a given temperature, measured in kelvins.

https://www.pixelsham.com/2019/10/18/color-temperature/

CRI

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun.”

https://www.studiobinder.com/blog/what-is-color-rendering-index/

https://en.wikipedia.org/wiki/Color_rendering_index

| Light source | CCT (K) | CRI |
| --- | --- | --- |
| Low-pressure sodium (LPS/SOX) | 1800 | −44 |
| Clear mercury-vapor | 6410 | 17 |
| High-pressure sodium (HPS/SON) | 2100 | 24 |
| Coated mercury-vapor | 3600 | 49 |
| Halophosphate warm-white fluorescent | 2940 | 51 |
| Halophosphate cool-white fluorescent | 4230 | 64 |
| Tri-phosphor warm-white fluorescent | 2940 | 73 |
| Halophosphate cool-daylight fluorescent | 6430 | 76 |
| “White” SON | 2700 | 82 |
| Standard LED Lamp | 2700–5000 | 83 |
| Quartz metal halide | 4200 | 85 |
| Tri-phosphor cool-white fluorescent | 4080 | 89 |
| High-CRI LED lamp (blue LED) | 2700–5000 | 95 |
| Ceramic discharge metal-halide lamp | 5400 | 96 |
| Ultra-high-CRI LED lamp (violet LED) | 2700–5000 | 99 |
| Incandescent/halogen bulb | 3200 | 100 |

Luminous Efficacy

Luminous efficacy is a measure of how well a light source produces visible light, watts out versus watts in, measured in lumens per watt. In other words it is a measurement that indicates the ability of a light source to emit visible light using a given amount of power. It is a ratio of the visible energy to the power that goes into the bulb.

FILM LIGHT TYPES

Consumer light types

Tungsten Lights

Light interiors and match domestic places or office locations. Daylight.

Advantages of Tungsten Lights

Almost perfect color rendition

Low cost

Does not use mercury like CFLs (fluorescent) or mercury vapor lights

Better color temperature than standard tungsten

Longer life than a conventional incandescent

Instant on to full brightness, no warm-up time, and it is dimmable

Disadvantages of Tungsten Lights

Extremely hot

High power requirement

The lamp is sensitive to oils and cannot be touched

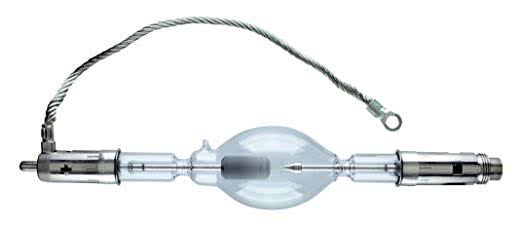

The bulb is capable of blowing and sending hot glass shards outward. A screen or layer of glass on the outside of the lamp can protect users.

Hydrargyrum medium-arc iodide lights

HMIs are used when high output is required. They are also used to recreate sun shining through windows or to fake additional sun while shooting exteriors. HMIs can light huge areas at once.

Advantages of HMI lights

High light output

Higher efficiency

High color temperature

Disadvantages of HMI lights:

High cost

High power requirement

Dims only to about 50%

The color temperature increases with dimming

HMI bulbs will explode if dropped and release toxic chemicals

Fluorescent

Fluorescent film lighting is achieved by laying multiple tubes next to each other, combining as many as you want for the desired brightness. The good news is you can choose your bulbs to either be warm or cool depending on the scenario you’re shooting. You want to get these bulbs close to the subject because they’re not great at opening up spaces. Fluorescent lighting is used to light interiors and is more compact and cooler than tungsten or HMI lighting.

Advantages of Fluorescent lights

High efficiency

Low power requirement

Low cost

Long lamp life

Cool

Capable of soft even lighting over a large area

Lightweight

Disadvantages of Fluorescent lights

Flicker

High CRI

Domestic tubes have low CRI & poor color rendition.

LED

LEDs are more and more common on film sets. You can use batteries to power them, which makes them portable and sleek, with no messy cables needed. You can also rig your own panels of LED lights to fit any space. LEDs can also power Fresnel-style lamp heads such as the Arri L-series.

Advantages of LED light

Soft, even lighting

Pure light without UV-artifacts

High efficiency

Low power consumption, can be battery powered

Excellent dimming by means of pulse width modulation control

Long lifespan

Environmentally friendly

Insensitive to shock

No risk of explosion

Disadvantages of LED light

High cost.

LEDs are currently still expensive for their total light output

-

PBR Color Reference List for Materials – by Grzegorz Baran

“The list should be helpful for every material artist who works on PBR materials as it contains over 200 color values measured with PCE-RGB2 1002 Color Spectrometer device and presented in linear and sRGB (2.2) gamma space.

All color values, HUE and Saturation in this list come from measurements taken with PCE-RGB2 1002 Color Spectrometer device and are presented in linear and sRGB (2.2) gamma space (more info at the end of this video). I calculated Relative Luminance and Luminance values based on captured color using my own equation which takes color based luminance perception into consideration. Bear in mind that there is no ‘one’ color per substance as nothing in nature is even 100% uniform and any value in +/-10% range from these should be considered as correct one. Therefore this list should be always considered as a color reference for material’s albedos, not ultimate and absolute truth.”
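To make the "linear and sRGB (2.2) gamma space" wording concrete, here is a minimal Python sketch that converts a color between the two spaces with a plain 2.2 power curve and checks a value against the ±10% range mentioned in the quote. The function names are hypothetical. The luminance weights are the standard Rec. 709 coefficients, used here only as a stand-in: the author states he uses his own equation, which is not given.

```python
GAMMA = 2.2  # simple power-law approximation of the sRGB transfer curve


def gamma22_to_linear(rgb):
    """Decode gamma-2.2 encoded channels (0..1) to linear values."""
    return tuple(c ** GAMMA for c in rgb)


def linear_to_gamma22(rgb):
    """Encode linear channels (0..1) with a 2.2 gamma."""
    return tuple(c ** (1.0 / GAMMA) for c in rgb)


def relative_luminance(linear_rgb):
    """Rec. 709 relative luminance (assumption: not the author's own equation)."""
    r, g, b = linear_rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def within_reference(value, reference, tolerance=0.10):
    """True when value lies within +/- tolerance (relative) of the reference."""
    return abs(value - reference) <= tolerance * reference


if __name__ == "__main__":
    encoded = (0.5, 0.5, 0.5)            # hypothetical mid-gray in gamma space
    linear = gamma22_to_linear(encoded)
    print(linear, relative_luminance(linear))
```

Albedo textures are usually authored in the gamma-encoded space and decoded to linear before shading, which is why a reference list gives both.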

LIGHTING

-

What light is best to illuminate gems for resale

www.palagems.com/gem-lighting2

Artificial light sources, not unlike the diverse phases of natural light, vary considerably in their properties. As a result, some lamps render an object’s color better than others do.

The most important criterion for assessing the color-rendering ability of any lamp is its spectral power distribution curve.

Natural daylight varies too much in strength and spectral composition to be taken seriously as a lighting standard for grading and dealing colored stones. For anything to be a standard, it must be constant in its properties, which natural light is not.

For dealers in particular to make the transition from natural light to an artificial light source, that source must offer:

1- A degree of illuminance at least as strong as the common phases of natural daylight.

2- Spectral properties identical or comparable to a phase of natural daylight.

A source combining these two things makes gems appear much the same as when viewed under a given phase of natural light. From the viewpoint of many dealers, this corresponds to a natural appearance.

The 6000° Kelvin xenon short-arc lamp appears closest to meeting the criteria for a standard light source. Besides the strong illuminance this lamp affords, its spectrum is very similar to CIE standard illuminants of similar color temperature.

-

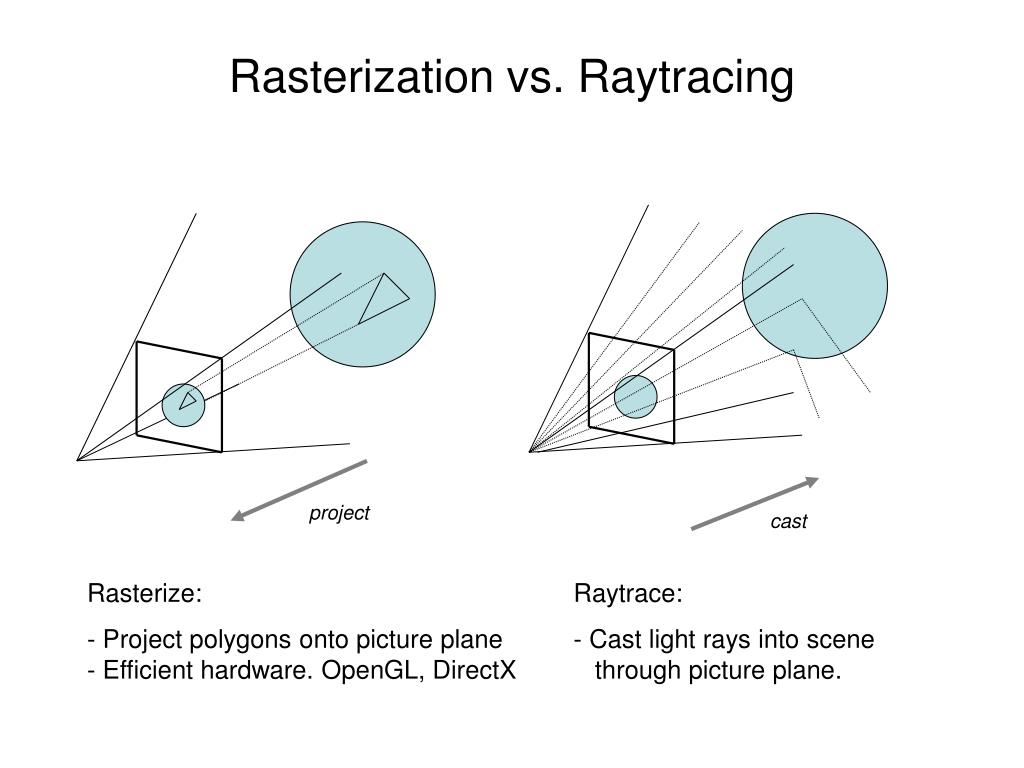

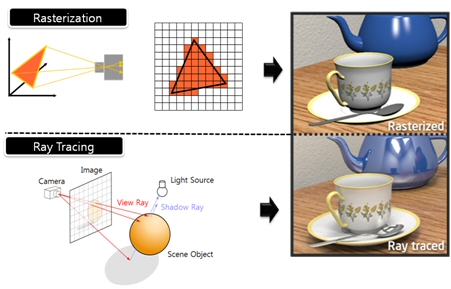

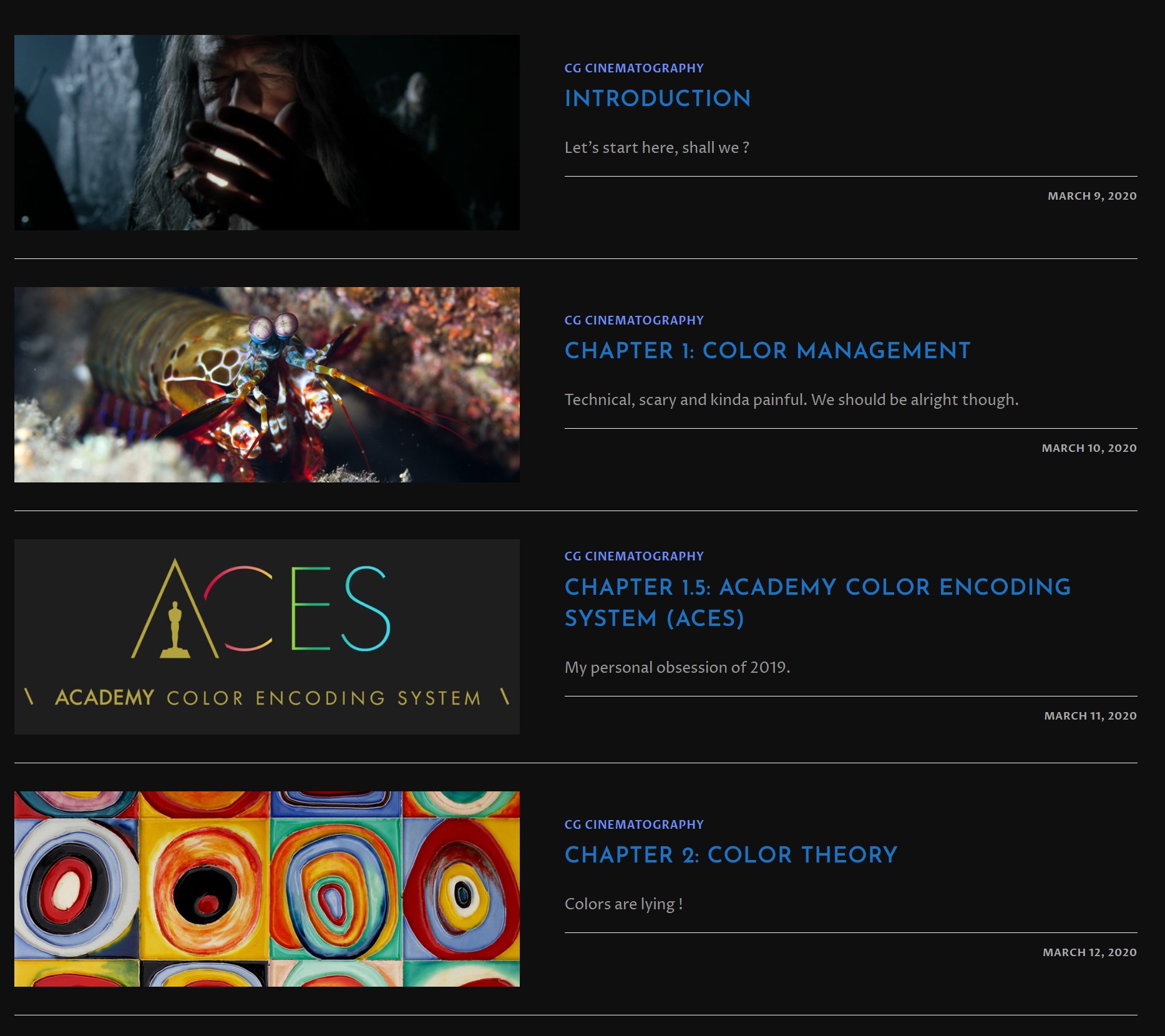

What’s the Difference Between Ray Casting, Ray Tracing, Path Tracing and Rasterization? Physical light tracing…

RASTERIZATION

Rasterisation (or rasterization) is the task of taking the information described in a vector graphics format OR the vertices of triangles making 3D shapes and converting them into a raster image (a series of pixels, dots or lines, which, when displayed together, create the image which was represented via shapes), or in other words “rasterizing” vectors or 3D models onto a 2D plane for display on a computer screen.

For each triangle of a 3D shape, you project the corners of the triangle on the virtual screen with some math (projective geometry). Then you have the position of the 3 corners of the triangle on the pixel screen. Those 3 points have texture coordinates, so you know where in the texture are the 3 corners. The cost is proportional to the number of triangles, and is only a little bit affected by the screen resolution.

In computer graphics, a raster graphics or bitmap image is a dot matrix data structure that represents a generally rectangular grid of pixels (points of color), viewable via a monitor, paper, or other display medium.

With rasterization, objects on the screen are created from a mesh of virtual triangles, or polygons, that create 3D models of objects. A lot of information is associated with each vertex, including its position in space, as well as information about color, texture and its “normal,” which is used to determine the way the surface of an object is facing.

Computers then convert the triangles of the 3D models into pixels, or dots, on a 2D screen. Each pixel can be assigned an initial color value from the data stored in the triangle vertices.

Further pixel processing or “shading,” including changing pixel color based on how lights in the scene hit the pixel, and applying one or more textures to the pixel, combine to generate the final color applied to a pixel.

The main advantage of rasterization is its speed. However, rasterization is simply the process of computing the mapping from scene geometry to pixels and does not prescribe a particular way to compute the color of those pixels. So it cannot take shading, especially the physical light, into account and it cannot promise to get a photorealistic output. That’s a big limitation of rasterization.

There are also multiple problems:

If two triangles overlap, one behind the other, you draw all of the shared pixels twice. You only keep the pixel from the triangle that is closer to you (Z-buffer), but you still do the work twice.

The borders of your triangles are jagged, as it is hard to tell whether a border pixel is inside the triangle or outside. You can smooth those borders; that is anti-aliasing.

You have to handle every triangle (including the ones behind you) and only then find that some do not touch the screen at all. (There are techniques to mitigate this by only looking at triangles that are in the field of view.)

Transparency is hard to handle (you can’t just average the colors of overlapping transparent triangles; you have to blend them in the right order).
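The projection and Z-buffer steps described above can be sketched in a few lines of Python. This is an illustrative toy, not how a GPU does it: the function names, the pinhole camera looking down +z and the flat per-triangle color are all assumptions. Depth is interpolated linearly in screen space, and triangles behind the camera are not handled.

```python
import math


def project(vertex, width, height, focal=1.0):
    """Perspective-project a camera-space point (z > 0) to pixel coordinates."""
    x, y, z = vertex
    sx = (focal * x / z * 0.5 + 0.5) * width
    sy = (0.5 - focal * y / z * 0.5) * height
    return (sx, sy, z)


def edge(a, b, p):
    """Twice the signed area of triangle (a, b, p) in screen space."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(triangles, width, height):
    """triangles: list of ((v0, v1, v2), color). Returns a 2D list of colors."""
    color = [[None] * width for _ in range(height)]
    depth = [[math.inf] * width for _ in range(height)]
    for verts, tri_color in triangles:
        p0, p1, p2 = (project(v, width, height) for v in verts)
        area = edge(p0, p1, p2)
        if area == 0:
            continue  # degenerate triangle covers no pixels
        xs = (p0[0], p1[0], p2[0])
        ys = (p0[1], p1[1], p2[1])
        x_min = max(int(math.floor(min(xs))), 0)
        x_max = min(int(math.ceil(max(xs))), width - 1)
        y_min = max(int(math.floor(min(ys))), 0)
        y_max = min(int(math.ceil(max(ys))), height - 1)
        for y in range(y_min, y_max + 1):
            for x in range(x_min, x_max + 1):
                p = (x + 0.5, y + 0.5)  # sample at the pixel center
                w0 = edge(p1, p2, p) / area
                w1 = edge(p2, p0, p) / area
                w2 = edge(p0, p1, p) / area
                if w0 < 0 or w1 < 0 or w2 < 0:
                    continue  # pixel center is outside the triangle
                z = w0 * p0[2] + w1 * p1[2] + w2 * p2[2]
                if z < depth[y][x]:  # Z-buffer: keep only the closest surface
                    depth[y][x] = z
                    color[y][x] = tri_color
    return color


if __name__ == "__main__":
    near = (((-1, -1, 2), (1, -1, 2), (0, 1, 2)), "red")
    far = (((-1, -1, 5), (1, -1, 5), (0, 1, 5)), "blue")
    image = rasterize([far, near], 16, 16)
    print(image[8][8])
```

Note that the overlapping pixels are still shaded twice; the Z-buffer only decides which result survives, which is the wasted work the list above points out.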

RAY CASTING

It is almost the exact reverse of rasterization: you start from the virtual screen instead of the vector or 3D shapes, and you project a ray from each pixel of the screen until it intersects a triangle.

The cost is directly correlated to the number of pixels on the screen, and you need a really cheap way of finding the first triangle that intersects a ray. In the end, it is more expensive than rasterization but it will, by design, ignore the triangles that are out of the field of view.

You can let the ray continue past the first triangle it hits, to take a little bit of the color of the next one, and so on. This is useful to handle the borders of triangles cleanly (less jagged) and to handle transparency correctly.
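A minimal Python sketch of the core operation, finding the first triangle a ray hits, is shown below. It uses the Möller–Trumbore intersection test, which is one common choice and not something the text above prescribes. The brute-force loop over all triangles stands in for the "really cheap way" (normally an acceleration structure) and all names are hypothetical.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin, direction, triangle, eps=1e-9):
    """Distance t along the ray to the triangle, or None if there is no hit."""
    v0, v1, v2 = triangle
    e1, e2 = sub(v1, v0), sub(v2, v0)
    h = cross(direction, e2)
    det = dot(e1, h)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle plane
    inv = 1.0 / det
    s = sub(origin, v0)
    u = inv * dot(s, h)
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = inv * dot(direction, q)
    if v < 0.0 or u + v > 1.0:
        return None
    t = inv * dot(e2, q)
    return t if t > eps else None  # ignore hits behind the ray origin


def cast(origin, direction, triangles):
    """Return (t, color) of the nearest hit among (triangle, color) pairs."""
    nearest = None
    for triangle, color in triangles:
        t = ray_triangle(origin, direction, triangle)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, color)
    return nearest


if __name__ == "__main__":
    scene = [(((-1, -1, 5), (1, -1, 5), (0, 1, 5)), "blue"),
             (((-1, -1, 2), (1, -1, 2), (0, 1, 2)), "red")]
    print(cast((0, 0, 0), (0, 0, 1), scene))
```

Casting one such ray per pixel gives the image; continuing past the first hit, as described above, would mean collecting the sorted list of hits instead of only the nearest.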

RAYTRACING

Same idea as ray casting, except that once you hit a triangle you reflect off it and continue in a different direction. The number of reflections you allow is the “depth” of your ray tracing. The color of the pixel can be calculated based on the light source and all the polygons the ray had to reflect off to get to that screen pixel.

The easiest way to think of ray tracing is to look around you, right now. The objects you’re seeing are illuminated by beams of light. Now turn that around and follow the path of those beams backwards from your eye to the objects that light interacts with. That’s ray tracing.

Ray tracing is an eye-oriented process that walks through each pixel looking for the object that should be shown there. It can also be described as a technique that follows a beam of light (in pixels) from a set point and simulates how it reacts when it encounters objects.

Compared with rasterization, ray tracing is hard to implement in real time. A single ray can be traced and processed without much trouble, but after it bounces off an object it can turn into 10 rays, and those 10 can turn into 100, 1000… The increase is exponential, and calculating all these rays is time consuming.

Historically, computer hardware hasn’t been fast enough to use these techniques in real time, such as in video games. Moviemakers can take as long as they like to render a single frame, so they do it offline in render farms. Video games have only a fraction of a second. As a result, most real-time graphics rely on another technique: rasterization.
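Two small pieces of arithmetic sit behind the description above: the mirror reflection applied at each hit, and the growth in ray count as the depth increases. The Python sketch below illustrates both; the function names are hypothetical, and the fixed branching factor of 10 simply mirrors the "10 rays, then 100, 1000" example in the text.

```python
def reflect(direction, normal):
    """Mirror a direction about a unit surface normal: d - 2 (d . n) n."""
    d_dot_n = sum(d * n for d, n in zip(direction, normal))
    return tuple(d - 2.0 * d_dot_n * n for d, n in zip(direction, normal))


def rays_traced(branching, depth):
    """Total rays for one pixel if every hit spawns `branching` new rays."""
    return sum(branching ** level for level in range(depth + 1))


if __name__ == "__main__":
    # A ray heading down and to the right bounces off a floor facing up.
    print(reflect((1.0, -1.0, 0.0), (0.0, 1.0, 0.0)))
    for depth in range(4):
        print(depth, rays_traced(10, depth))
```

Multiplying the per-pixel count by the number of pixels in a frame gives a feel for why a depth limit is needed in practice.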

PATH TRACING

Path tracing can be used to solve more complex lighting situations.

Path tracing is a type of ray tracing. When using path tracing for rendering, the rays only produce a single ray per bounce. The rays do not follow a defined line per bounce (to a light, for example), but rather shoot off in a random direction. The path tracing algorithm then takes a random sampling of all of the rays to create the final image. This results in sampling a variety of different types of lighting.

When a ray hits a surface it doesn’t trace a path to every light source; instead it bounces the ray off the surface and keeps bouncing it until it hits a light source or exhausts some bounce limit.

It then calculates the amount of light transferred all the way to the pixel, including any color information gathered from surfaces along the way.

It then averages out the values calculated from all the paths that were traced into the scene to get the final pixel color value.

It requires a ton of computing power, and if you don’t send out enough rays per pixel or don’t trace the paths far enough into the scene, you end up with a very spotty image, as many pixels fail to find any light sources from their rays. So as you increase the samples per pixel, the image quality becomes better and better.
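The bounce-until-light-or-limit loop and the final averaging can be illustrated with a deliberately abstract Python sketch. There is no geometry here: each bounce is assumed to reach a light with a fixed probability, and otherwise to hit a surface that reflects a fixed fraction of the light. These parameters and all names are hypothetical stand-ins for a real scene.

```python
import random


def trace_path(rng, p_light=0.2, albedo=0.7, max_bounces=8):
    """Follow one random path; return the light it carries back to the pixel."""
    throughput = 1.0
    for _ in range(max_bounces):
        if rng.random() < p_light:
            return throughput  # reached a light of unit brightness
        throughput *= albedo   # surface absorbs part of the light, then bounce
    return 0.0                 # bounce limit exhausted: no light found


def render_pixel(samples, seed=0, **scene):
    """Average many random paths to estimate the pixel value."""
    rng = random.Random(seed)
    total = sum(trace_path(rng, **scene) for _ in range(samples))
    return total / samples


if __name__ == "__main__":
    for samples in (4, 64, 1024, 16384):
        print(samples, render_pixel(samples))
```

With only a few samples the estimate jumps around from pixel to pixel, which is the "spotty" noise described above; more samples per pixel pull it toward a stable value.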

Ray tracing tends to be more efficient than path tracing. Basically, the render time of a ray tracer depends on the number of polygons in the scene. The more polygons you have, the longer it will take.

Meanwhile, the rendering time of a path tracer can be indifferent to the number of polygons, but it is related to light situation: If you add a light, transparency, translucence, or other shader effects, the path tracer will slow down considerably.

blogs.nvidia.com/blog/2018/03/19/whats-difference-between-ray-tracing-rasterization/

https://en.wikipedia.org/wiki/Rasterisation

https://www.quora.com/Whats-the-difference-between-ray-tracing-and-path-tracing

-

RawTherapee – a free, open source, cross-platform raw image and HDRi processing program

Version 5.10 of this tool includes excellent tools to clean up CR2 and CR3 files shot on set to support HDRI processing.

Converting raw to AcesCG 32 bit tiffs with metadata.

-

domeble – Hi-Resolution CGI Backplates and 360° HDRI

When collecting HDRIs, make sure the data supports basic metadata, such as:

- ISO

- Aperture

- Exposure time or shutter time

- Color temperature

- Color space

- Exposure value (what the sensor receives of the sun intensity in lux)

- 7+ brackets (with 5 or 6 being the perceived balanced exposure)
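Several of the fields in this checklist combine into a single exposure value, which makes it easy to compare brackets. The Python sketch below checks a metadata record for missing fields and computes an ISO-100-referenced exposure value with the standard definition EV = log2(N²/t) − log2(ISO/100), where N is the f-number and t the shutter time in seconds. The field names and the sample record are hypothetical, not a domeble or camera-vendor schema.

```python
import math

# Hypothetical field names for the checklist above.
REQUIRED_FIELDS = ("iso", "aperture", "shutter_s",
                   "color_temperature_k", "color_space")


def missing_fields(metadata):
    """List the required metadata keys that a bracket is missing."""
    return [name for name in REQUIRED_FIELDS if name not in metadata]


def exposure_value(aperture, shutter_s, iso=100):
    """Exposure value of the camera settings, referenced to ISO 100."""
    return math.log2(aperture ** 2 / shutter_s) - math.log2(iso / 100.0)


if __name__ == "__main__":
    bracket = {"iso": 100, "aperture": 8.0, "shutter_s": 1.0 / 125.0,
               "color_temperature_k": 5600, "color_space": "ACEScg"}
    print(missing_fields(bracket))
    print(exposure_value(bracket["aperture"], bracket["shutter_s"],
                         bracket["iso"]))
```

Computing this value for every bracket in a set shows at a glance how many stops the capture spans and how evenly the brackets are spaced.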

In image processing, computer graphics, and photography, high dynamic range imaging (HDRI or just HDR) is a set of techniques that allow a greater dynamic range of luminances (a Photometry measure of the luminous intensity per unit area of light travelling in a given direction. It describes the amount of light that passes through or is emitted from a particular area, and falls within a given solid angle) between the lightest and darkest areas of an image than standard digital imaging techniques or photographic methods. This wider dynamic range allows HDR images to represent more accurately the wide range of intensity levels found in real scenes ranging from direct sunlight to faint starlight and to the deepest shadows.

The two main sources of HDR imagery are computer renderings and merging of multiple photographs, which in turn are known as low dynamic range (LDR) or standard dynamic range (SDR) images. Tone Mapping (Look-up) techniques, which reduce overall contrast to facilitate display of HDR images on devices with lower dynamic range, can be applied to produce images with preserved or exaggerated local contrast for artistic effect.

Photography

In photography, dynamic range is measured in Exposure Values (in photography, exposure value denotes all combinations of camera shutter speed and relative aperture that give the same exposure. The concept was developed in Germany in the 1950s) differences or stops, between the brightest and darkest parts of the image that show detail. An increase of one EV or one stop is a doubling of the amount of light.
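Because each stop is a doubling of light, the dynamic range in stops is the base-2 logarithm of the ratio between the brightest and darkest values that still show detail. A minimal Python sketch, with hypothetical function names and sample values:

```python
import math


def stops_between(darkest, brightest):
    """Dynamic range in stops (EV) between two positive linear light values."""
    return math.log2(brightest / darkest)


def light_ratio(stops):
    """How many times more light a given number of stops represents."""
    return 2.0 ** stops


if __name__ == "__main__":
    print(stops_between(1.0, 1024.0))   # a 1024:1 scene
    print(light_ratio(1))               # one stop
    print(light_ratio(30))              # the 30-stop figure quoted for HDR
```

The inputs can be in any linear unit, as long as both values use the same one.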

The human response to brightness is well approximated by Stevens’ power law, which over a reasonable range is close to logarithmic, as described by the Weber–Fechner law. This is one reason that logarithmic measures of light intensity are often used as well.

HDR is short for High Dynamic Range. It’s a term used to describe an image which contains a greater exposure range than the “black” to “white” that 8 or 16-bit integer formats (JPEG, TIFF, PNG) can describe. Whereas these Low Dynamic Range images (LDR) can hold perhaps 8 to 10 f-stops of image information, HDR images can describe beyond 30 stops and are stored in 32-bit images.


DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.

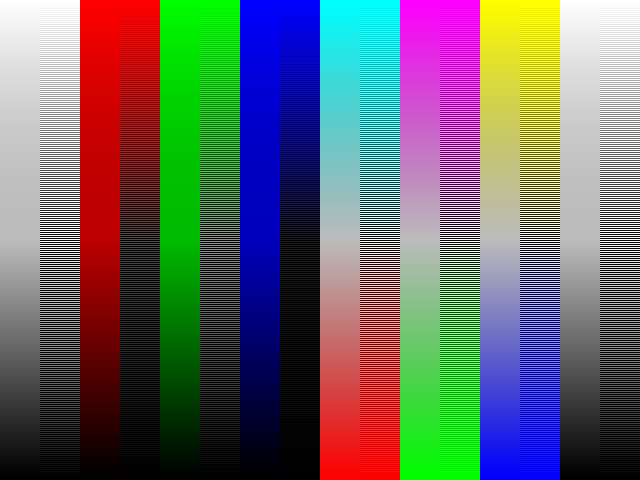

![sRGB gamma correction test [gamma correction test]](http://www.madore.org/~david/misc/color/gammatest.png)