COMPOSITION

-

Composition – These are the basic lighting techniques you need to know for photography and film

Read more: Composition – These are the basic lighting techniques you need to know for photography and filmhttp://www.diyphotography.net/basic-lighting-techniques-need-know-photography-film/

Amongst the basic techniques, there’s…

1- Side lighting – Literally how it sounds, lighting a subject from the side when they’re faced toward you

2- Rembrandt lighting – Here the light is at around 45 degrees over from the front of the subject, raised and pointing down at 45 degrees

3- Back lighting – Again, how it sounds, lighting a subject from behind. This can help to add drama with silouettes

4- Rim lighting – This produces a light glowing outline around your subject

5- Key light – The main light source, and it’s not necessarily always the brightest light source

6- Fill light – This is used to fill in the shadows and provide detail that would otherwise be blackness

7- Cross lighting – Using two lights placed opposite from each other to light two subjects

DESIGN

COLOR

-

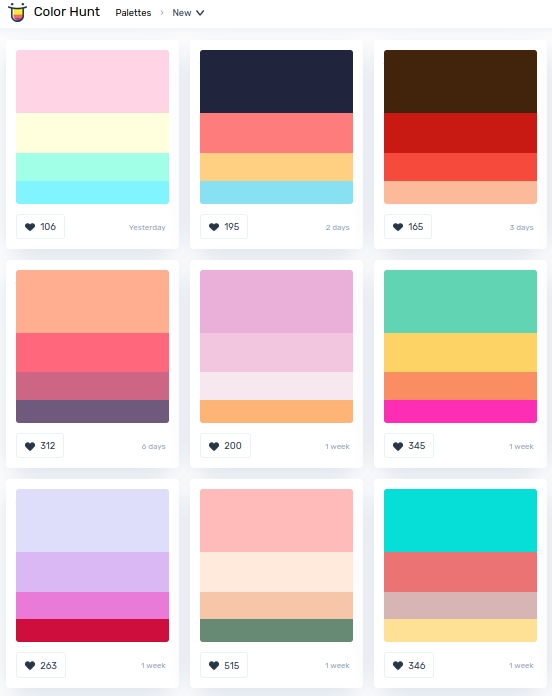

colorhunt.co

Read more: colorhunt.coColor Hunt is a free and open platform for color inspiration with thousands of trendy hand-picked color palettes.

-

Victor Perez – ACES Color Management in DaVinci Resolve

Read more: Victor Perez – ACES Color Management in DaVinci Resolvehttpv://www.youtube.com/watch?v=i–TS88-6xA

-

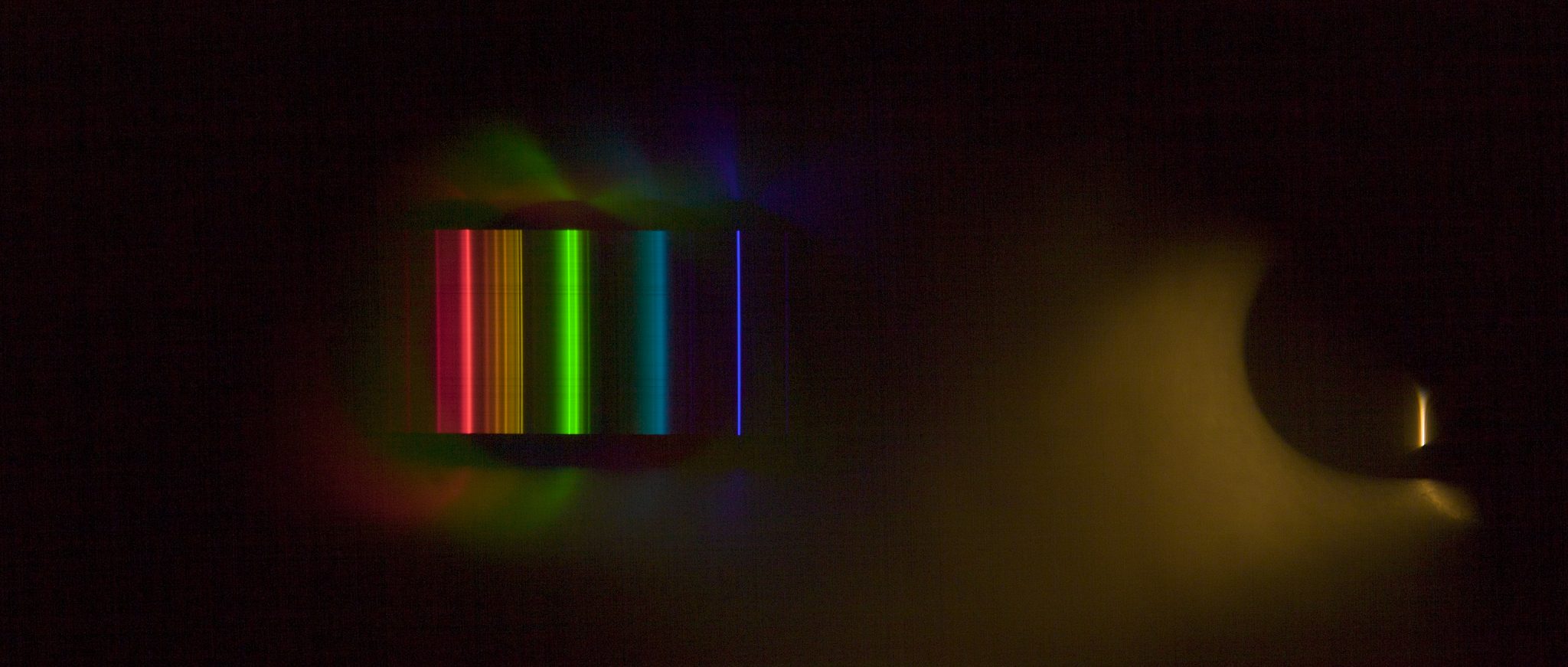

Scientists claim to have discovered ‘new colour’ no one has seen before: Olo

Read more: Scientists claim to have discovered ‘new colour’ no one has seen before: Olohttps://www.bbc.com/news/articles/clyq0n3em41o

By stimulating specific cells in the retina, the participants claim to have witnessed a blue-green colour that scientists have called “olo”, but some experts have said the existence of a new colour is “open to argument”.

The findings, published in the journal Science Advances on Friday, have been described by the study’s co-author, Prof Ren Ng from the University of California, as “remarkable”.

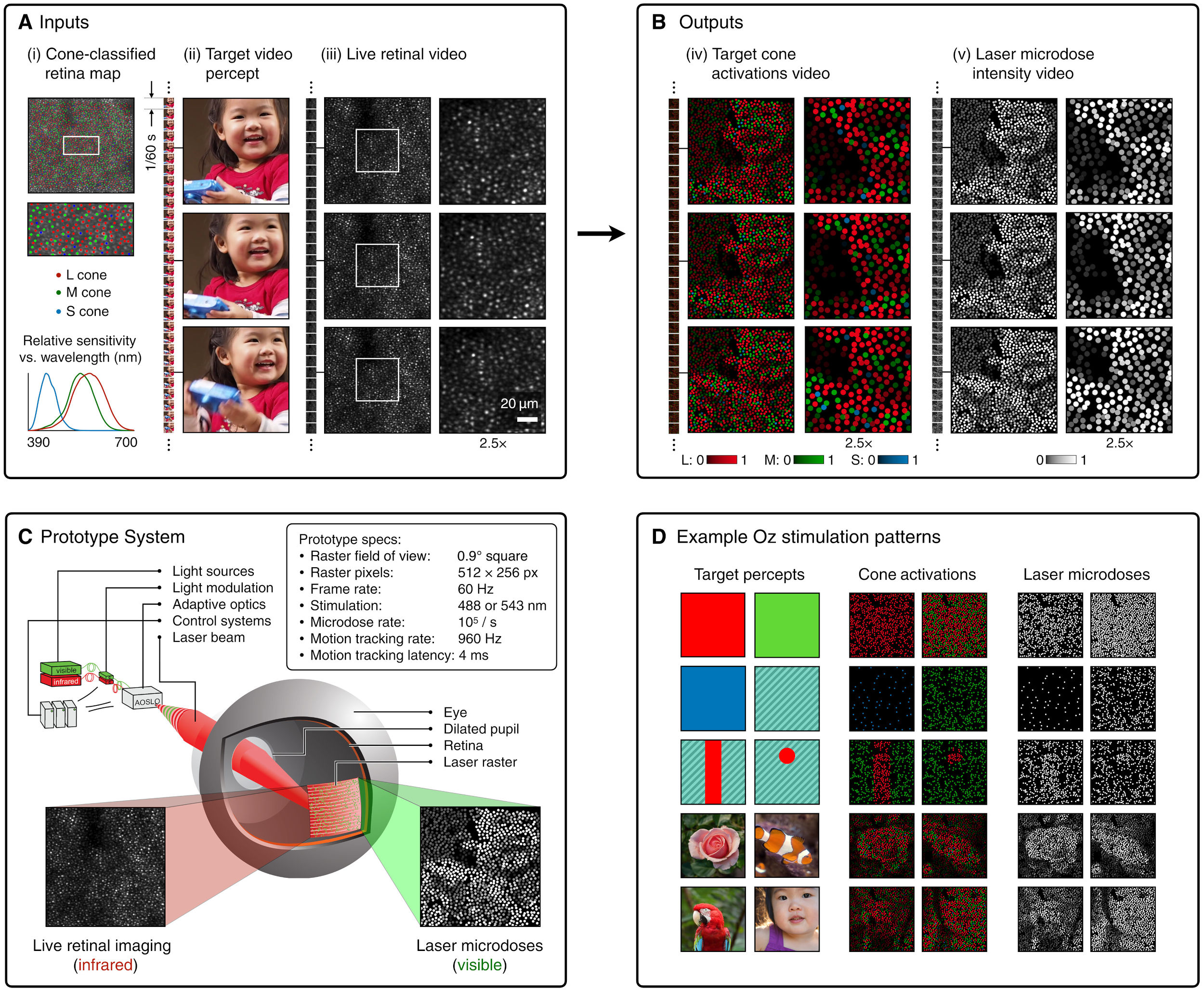

(A) System inputs. (i) Retina map of 103 cone cells preclassified by spectral type (7). (ii) Target visual percept (here, a video of a child, see movie S1 at 1:04). (iii) Infrared cellular-scale imaging of the retina with 60-frames-per-second rolling shutter. Fixational eye movement is visible over the three frames shown.

(B) System outputs. (iv) Real-time per-cone target activation levels to reproduce the target percept, computed by: extracting eye motion from the input video relative to the retina map; identifying the spectral type of every cone in the field of view; computing the per-cone activation the target percept would have produced. (v) Intensities of visible-wavelength 488-nm laser microdoses at each cone required to achieve its target activation level.

(C) Infrared imaging and visible-wavelength stimulation are physically accomplished in a raster scan across the retinal region using AOSLO. By modulating the visible-wavelength beam’s intensity, the laser microdoses shown in (v) are delivered. Drawing adapted with permission [Harmening and Sincich (54)].

(D) Examples of target percepts with corresponding cone activations and laser microdoses, ranging from colored squares to complex imagery. Teal-striped regions represent the color “olo” of stimulating only M cones.

-

Anders Langlands – Render Color Spaces

Read more: Anders Langlands – Render Color Spaceshttps://www.colour-science.org/anders-langlands/

This page compares images rendered in Arnold using spectral rendering and different sets of colourspace primaries: Rec.709, Rec.2020, ACES and DCI-P3. The SPD data for the GretagMacbeth Color Checker are the measurements of Noburu Ohta, taken from Mansencal, Mauderer and Parsons (2014) colour-science.org.

-

Photography basics: Lumens vs Candelas (candle) vs Lux vs FootCandle vs Watts vs Irradiance vs Illuminance

Read more: Photography basics: Lumens vs Candelas (candle) vs Lux vs FootCandle vs Watts vs Irradiance vs Illuminancehttps://www.translatorscafe.com/unit-converter/en-US/illumination/1-11/

The power output of a light source is measured using the unit of watts W. This is a direct measure to calculate how much power the light is going to drain from your socket and it is not relatable to the light brightness itself.

The amount of energy emitted from it per second. That energy comes out in a form of photons which we can crudely represent with rays of light coming out of the source. The higher the power the more rays emitted from the source in a unit of time.

Not all energy emitted is visible to the human eye, so we often rely on photometric measurements, which takes in account the sensitivity of human eye to different wavelenghts

Details in the post

(more…)

LIGHTING

-

Disney’s Moana Island Scene – Free data set

Read more: Disney’s Moana Island Scene – Free data sethttps://www.disneyanimation.com/resources/moana-island-scene/

This data set contains everything necessary to render a version of the Motunui island featured in the 2016 film Moana.

-

Romain Chauliac – LightIt a lighting script for Maya and Arnold

Read more: Romain Chauliac – LightIt a lighting script for Maya and ArnoldLightIt is a script for Maya and Arnold that will help you and improve your lighting workflow.

Thanks to preset studio lighting components (lights, backdrop…), high quality studio scenes and HDRI library manager.https://www.artstation.com/artwork/393emJ

-

Narcis Calin’s Galaxy Engine – A free, open source simulation software

Read more: Narcis Calin’s Galaxy Engine – A free, open source simulation softwareThis 2025 I decided to start learning how to code, so I installed Visual Studio and I started looking into C++. After days of watching tutorials and guides about the basics of C++ and programming, I decided to make something physics-related. I started with a dot that fell to the ground and then I wanted to simulate gravitational attraction, so I made 2 circles attracting each other. I thought it was really cool to see something I made with code actually work, so I kept building on top of that small, basic program. And here we are after roughly 8 months of learning programming. This is Galaxy Engine, and it is a simulation software I have been making ever since I started my learning journey. It currently can simulate gravity, dark matter, galaxies, the Big Bang, temperature, fluid dynamics, breakable solids, planetary interactions, etc. The program can run many tens of thousands of particles in real time on the CPU thanks to the Barnes-Hut algorithm, mixed with Morton curves. It also includes its own PBR 2D path tracer with BVH optimizations. The path tracer can simulate a bunch of stuff like diffuse lighting, specular reflections, refraction, internal reflection, fresnel, emission, dispersion, roughness, IOR, nested IOR and more! I tried to make the path tracer closer to traditional 3D render engines like V-Ray. I honestly never imagined I would go this far with programming, and it has been an amazing learning experience so far. I think that mixing this knowledge with my 3D knowledge can unlock countless new possibilities. In case you are curious about Galaxy Engine, I made it completely free and Open-Source so that anyone can build and compile it locally! You can find the source code in GitHub

https://github.com/NarcisCalin/Galaxy-Engine

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

GretagMacbeth Color Checker Numeric Values and Middle Gray

-

HDRI Median Cut plugin

-

Alejandro Villabón and Rafał Kaniewski – Recover Highlights With 8-Bit to High Dynamic Range Half Float Copycat – Nuke

-

Matt Hallett – WAN 2.1 VACE Total Video Control in ComfyUI

-

Key/Fill ratios and scene composition using false colors and Nuke node

-

AI Data Laundering: How Academic and Nonprofit Researchers Shield Tech Companies from Accountability

-

WhatDreamsCost Spline-Path-Control – Create motion controls for ComfyUI

-

Emmanuel Tsekleves – Writing Research Papers

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.