Category: software

-

RawTherapee – a free, open-source, cross-platform raw image and HDRI processing program

Version 5.10 of RawTherapee includes excellent tools for cleaning up the CR2 and CR3 raw files shot on set for HDRI processing.
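Converting to ACEScg means moving linear RGB values into the ACES AP1 primaries, which comes down to a 3x3 matrix multiply. Below is a minimal Python sketch of that step. It assumes the pixels are already linear (not gamma-encoded) and expressed in Rec.709/sRGB primaries. The matrix uses commonly published values for this conversion (Bradford adaptation from D65 to the ACES white point), rounded to six decimals. `rec709_to_acescg` and `convert_pixels` are hypothetical names. This is not how RawTherapee implements its own colour management.

```python
# Linear Rec.709/sRGB primaries (D65) -> ACEScg / AP1 (ACES white point),
# Bradford chromatic adaptation. Commonly published values, rounded to 6 decimals.
REC709_TO_ACESCG = (
    (0.613097, 0.339523, 0.047379),
    (0.070194, 0.916354, 0.013452),
    (0.020616, 0.109570, 0.869815),
)


def rec709_to_acescg(rgb):
    """Convert one linear (not gamma-encoded) RGB triplet to ACEScg.

    Hypothetical helper for illustration; feed it scene-linear values only.
    """
    r, g, b = rgb
    return tuple(row[0] * r + row[1] * g + row[2] * b for row in REC709_TO_ACESCG)


def convert_pixels(pixels):
    """Apply the conversion to a list of (r, g, b) tuples, e.g. one scanline."""
    return [rec709_to_acescg(p) for p in pixels]


if __name__ == "__main__":
    # A mid grey stays neutral (about 0.18 per channel) after the conversion.
    print(rec709_to_acescg((0.18, 0.18, 0.18)))
```

Each matrix row sums to 1 (within rounding), so neutral values stay neutral. Saturated primaries are what shift, because AP1 is a wider gamut than Rec.709.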

Converting raw files to ACEScg 32-bit TIFFs with metadata.
-

Intel Open Image Denoise in Blender – High-Performance Denoising Library for Ray Tracing

https://www.openimagedenoise.org/

https://github.com/OpenImageDenoise/oidn/releases/tag/v1.3.0

-

Python for beginners

https://www.freecodecamp.org/news/python-code-examples-simple-python-program-example/

If the text does not load well, please download the PDF to your machine.

The PDF plugin may not work well under Linux.
-

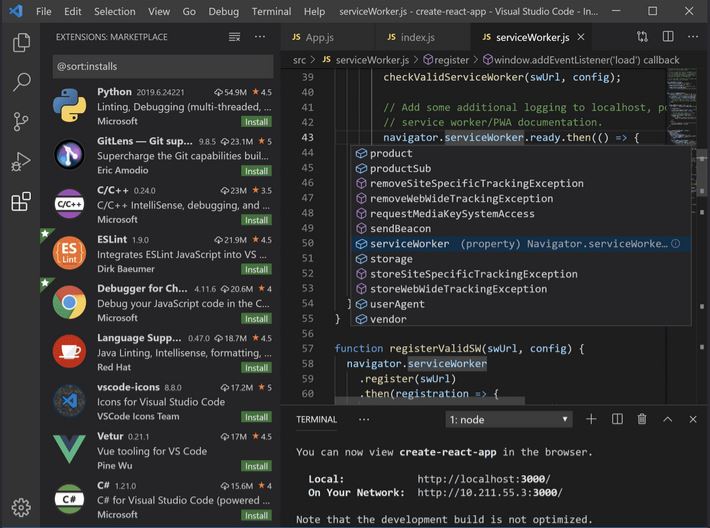

Visual Studio Code – a free code editor. Built on open source. Runs everywhere.

https://www.freecodecamp.org/news/how-to-set-up-vs-code-for-web-development

Visual Studio Code is a lightweight but powerful source code editor which runs on your desktop and is available for Windows, macOS and Linux. It comes with built-in support for JavaScript, TypeScript and Node.js and has a rich ecosystem of extensions for other languages (such as C++, C#, Java, Python, PHP, Go) and runtimes (such as .NET and Unity).

-

Learn Low Poly Modeling in Blender 2.9 / 2.8

TIPS

Loop cut a selected face

1- Select the face.

2- Press Shift+H to hide everything except the selection. Loop cut ignores hidden faces, so the cut stays confined to the visible selection.

3- Loop cut (Ctrl+R).

4- Press Alt+H to unhide everything when you're done.
-

Python and TCL: Tips and Tricks for Foundry Nuke

http://www.andreageremia.it/tutorial_python_tcl.html

https://www.gatimedia.co.uk/list-of-knobs-2

https://learn.foundry.com/nuke/developers/63/ndkdevguide/knobs-and-handles/knobtypes.html

http://thoughtvfx.blogspot.com/2012/12/nuke-tcl-tips.html

Check final image quality
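The tech check linked below is a Nuke script. To illustrate the kind of per-pixel tests a final quality check commonly runs (NaNs, infinities, negative values, values above a chosen ceiling), here is a plain-Python sketch. It assumes the image has already been read into a list of (r, g, b) float tuples. `tech_check` and its `max_expected` parameter are hypothetical names. They are not part of Nuke's API or the linked script.

```python
import math


def tech_check(pixels, max_expected=None):
    """Count problem values in an image given as (r, g, b) float tuples.

    Hypothetical helper. NaN and +/-inf are counted separately from
    negatives; max_expected is an optional ceiling (None disables it).
    """
    report = {"nan": 0, "inf": 0, "negative": 0, "above_max": 0}
    for pixel in pixels:
        for value in pixel:
            if math.isnan(value):
                report["nan"] += 1
            elif math.isinf(value):
                report["inf"] += 1
            else:
                if value < 0.0:
                    report["negative"] += 1
                if max_expected is not None and value > max_expected:
                    report["above_max"] += 1
    return report


if __name__ == "__main__":
    sample = [(0.5, 0.2, 0.1), (float("nan"), 0.0, -0.01), (float("inf"), 1.5, 0.3)]
    print(tech_check(sample, max_expected=1.0))
```

Negative values and values above 1.0 are not always errors in a linear, scene-referred comp. The sketch therefore only counts them and leaves the decision to the artist.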

https://www.compositingpro.com/tech-check-compositing-shot-in-nuke/

Local copy:

http://pixelsham.com/wp-content/uploads/2023/03/compositing_pro_tech_check_nuke_script.nk

Nuke TCL procedures

https://www.gatimedia.co.uk/nuke-tcl-procedures

Knobs

https://learn.foundry.com/nuke/developers/63/ndkdevguide/knobs-and-handles/knobtypes.html

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.