https://grisoon.github.io/DreamActor-M1


Category: A.I.

-

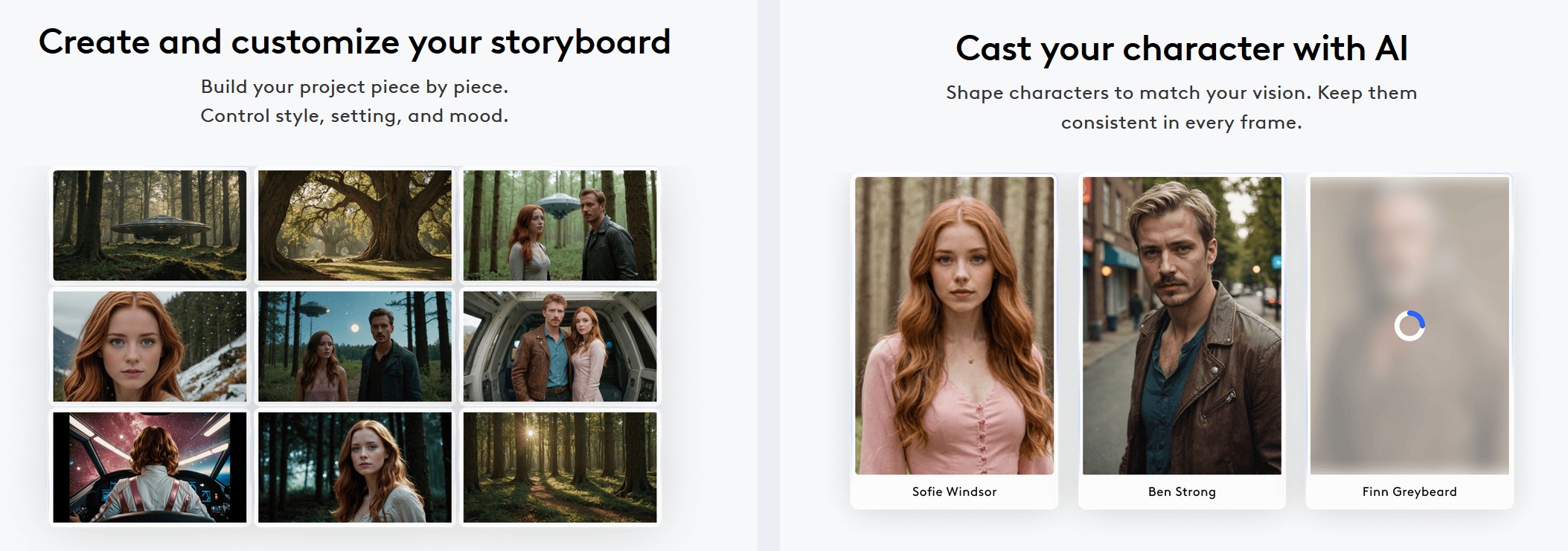

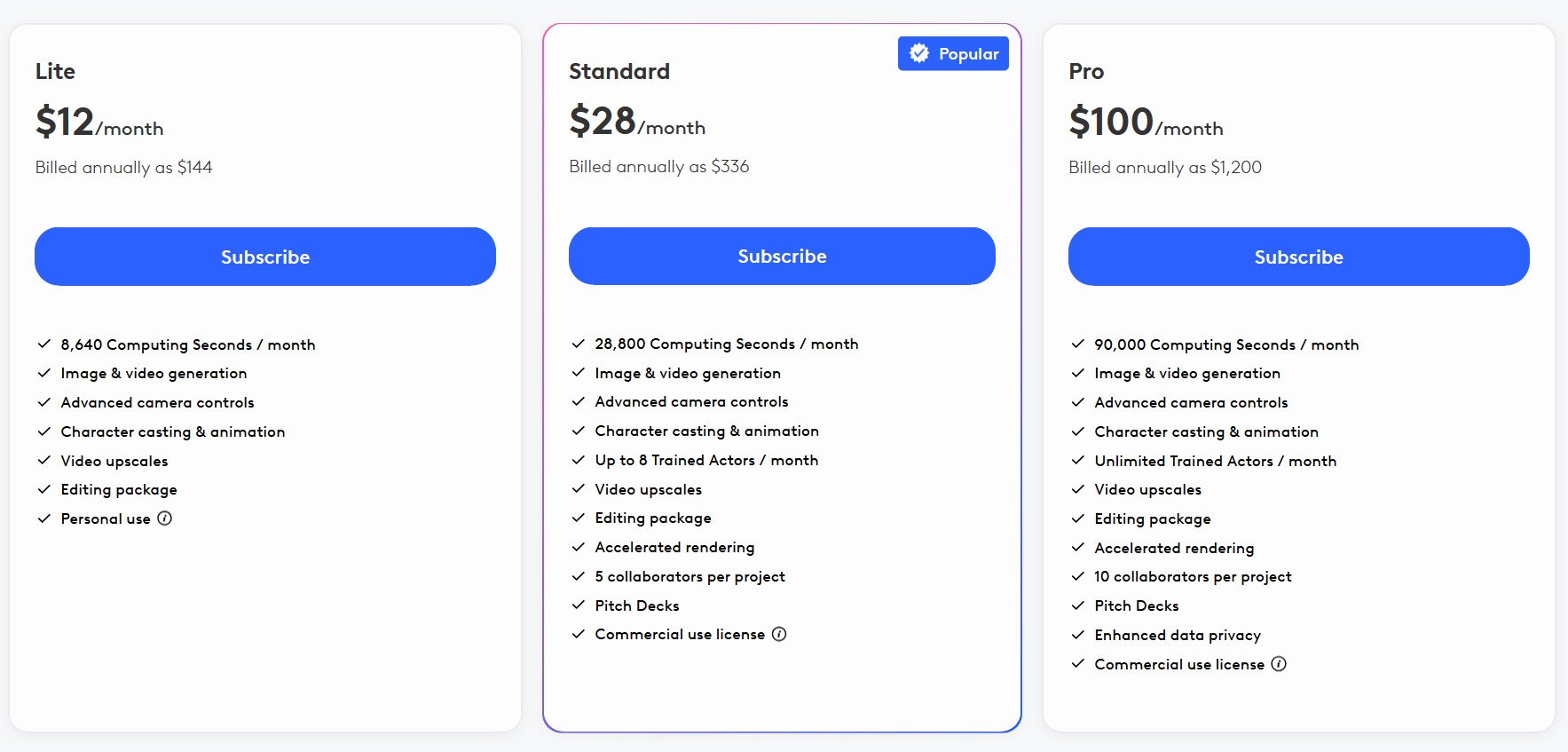

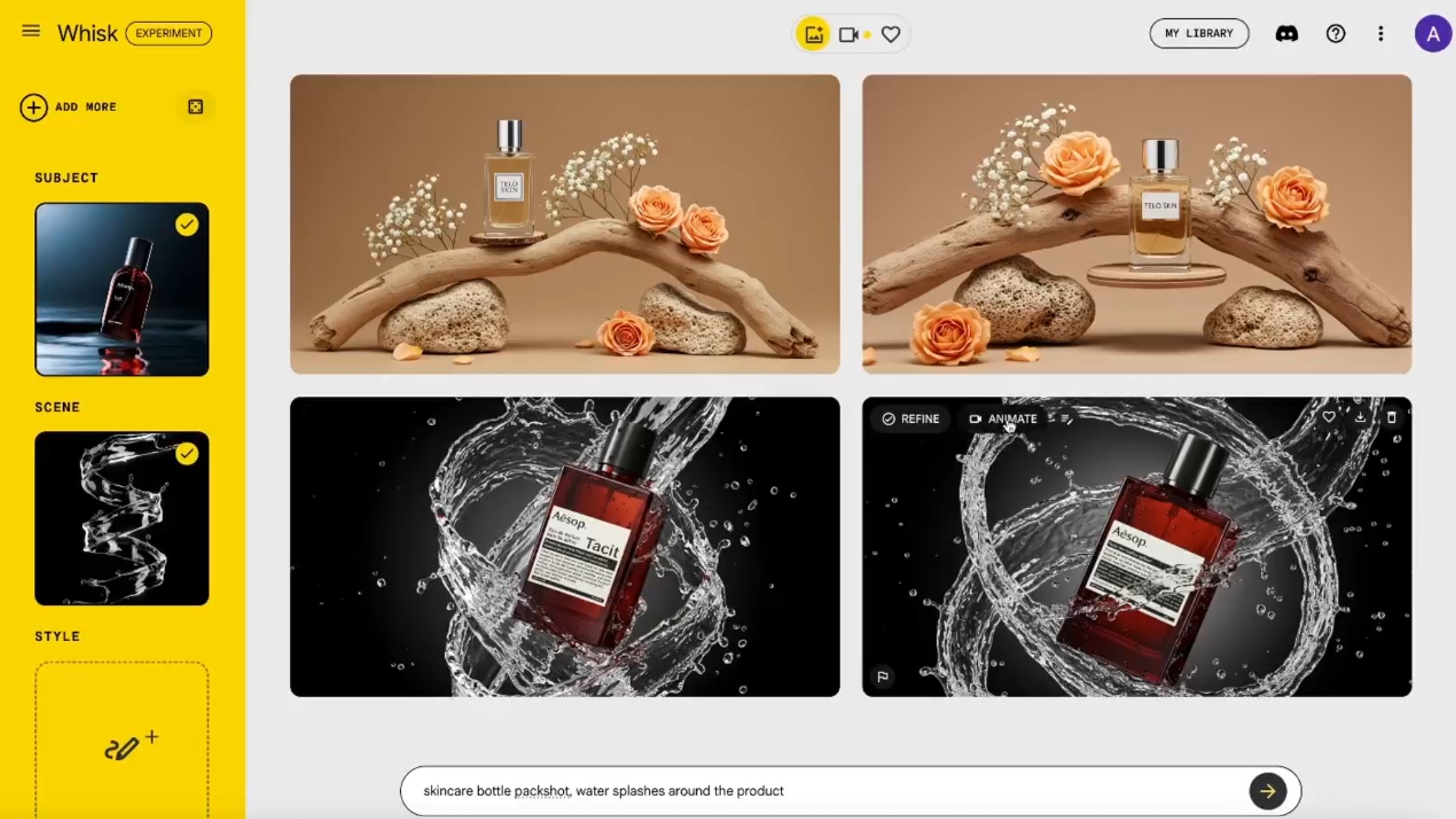

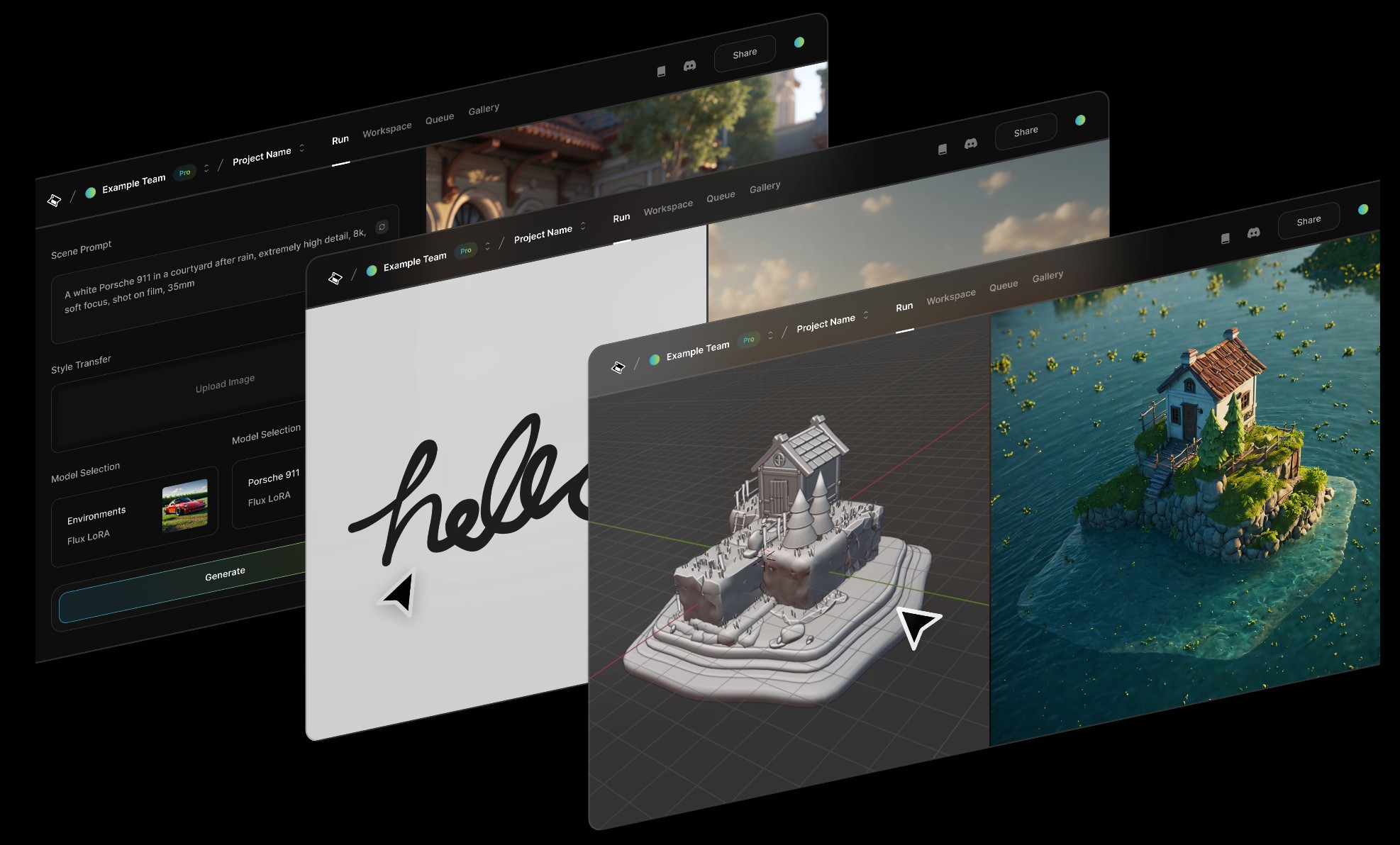

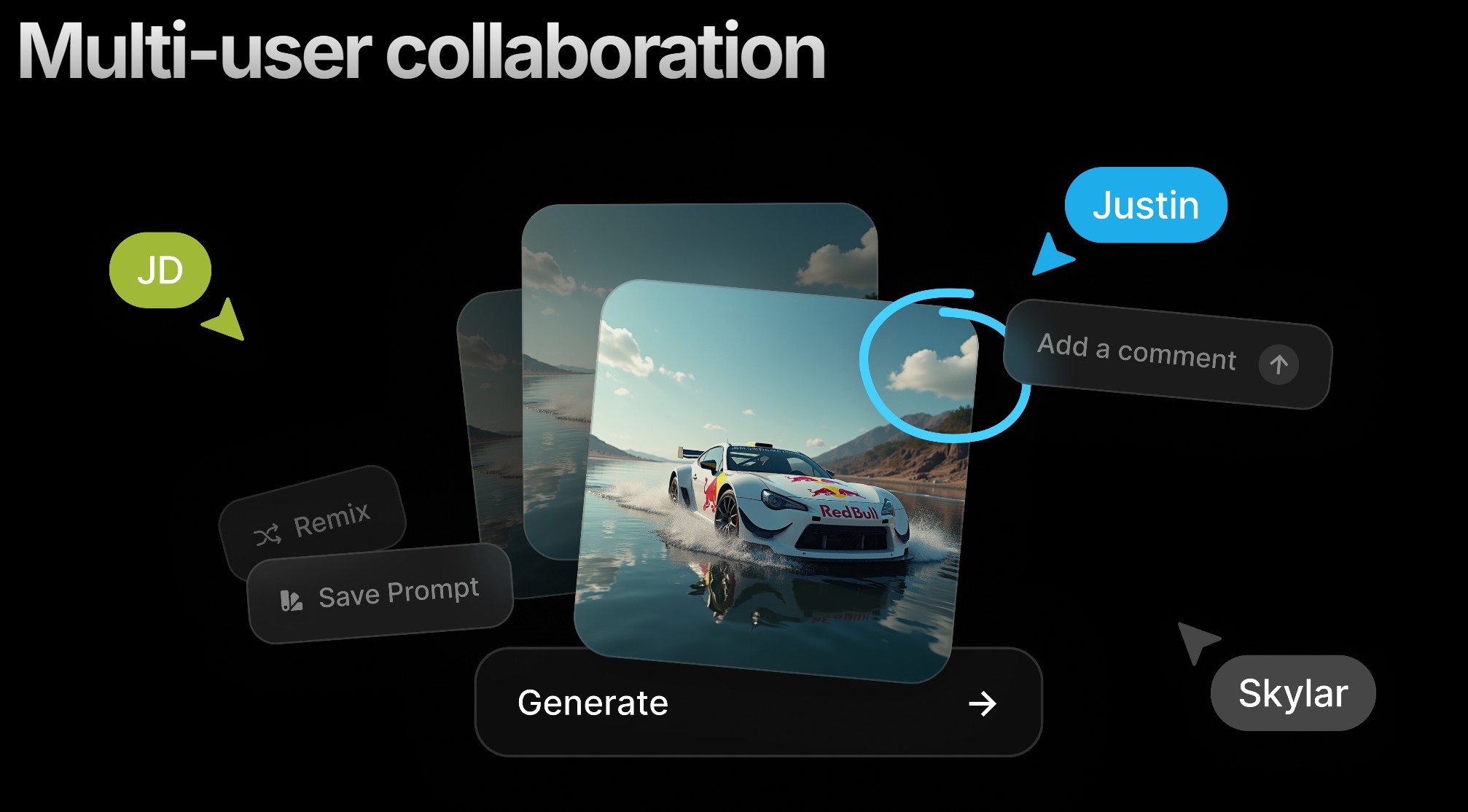

PlayBook3D – Creative controls for all media formats

Playbook3d.com is a diffusion-based render engine that reduces the time to final image with AI. It is accessible via web editor and API with support for scene segmentation and re-lighting, integration with production pipelines and frame-to-frame consistency for image, video, and real-time 3D formats.

-

AI and the Law – AI Creativity – Genius or Gimmick?

7:59-9:50 Justine Bateman:

“I mean first I want to give people, help people have a little bit of a definition of what generative AI is—

think of it as like a blender and if you have a blender at home and you turn it on, what does it do? It depends on what I put into it, so it cannot function unless it’s fed things.

Then you turn on the blender and you give it a prompt, which is your little spoon, and you get a little spoonful—little Frankenstein spoonful—out of what you asked for.

So what is going into the blender? Every but a hundred years of film and television or many, many years of, you know, doctor’s reports or students’ essays or whatever it is.

In the film business, in particular, that’s what we call theft; it’s the biggest violation. And the term that continues to be used is “all we did.” I think the CTO of OpenAI—believe that’s her position; I forget her name—when she was asked in an interview recently what she had to say about the fact that they didn’t ask permission to take it in, she said, “Well, it was all publicly available.”

And I will say this: if you own a car—I know we’re in New York City, so it’s not going to be as applicable—but if I see a car in the street, it’s publicly available, but somehow it’s illegal for me to take it. That’s what we have the copyright office for, and I don’t know how well staffed they are to handle something like this, but this is the biggest copyright violation in the history of that office and the US government”

-

Aze Alter – What If Humans and AI Unite? | AGE OF BEYOND

https://www.patreon.com/AzeAlter

Voices & Sound Effects: https://elevenlabs.io/

Video Created mainly with Luma: https://lumalabs.ai/

LUMA LABS

KLING

RUNWAY

ELEVEN LABS

MINIMAX

MIDJOURNEY

Music By Scott Buckley

-

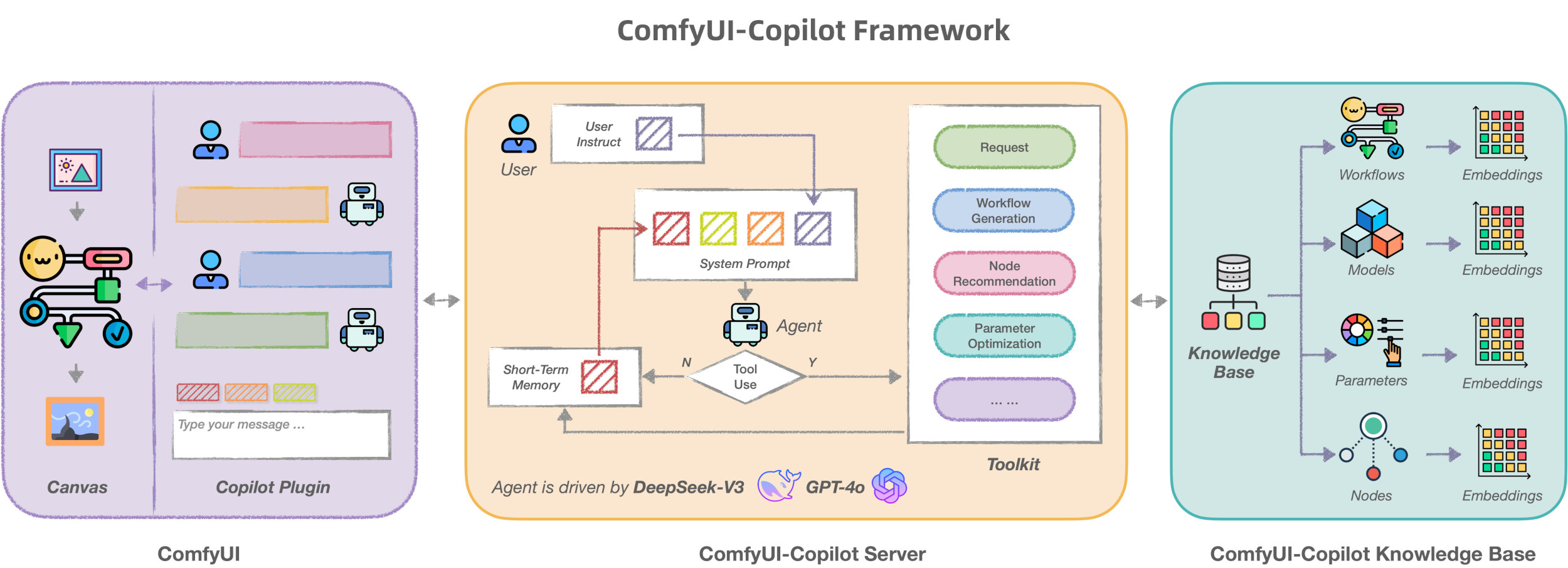

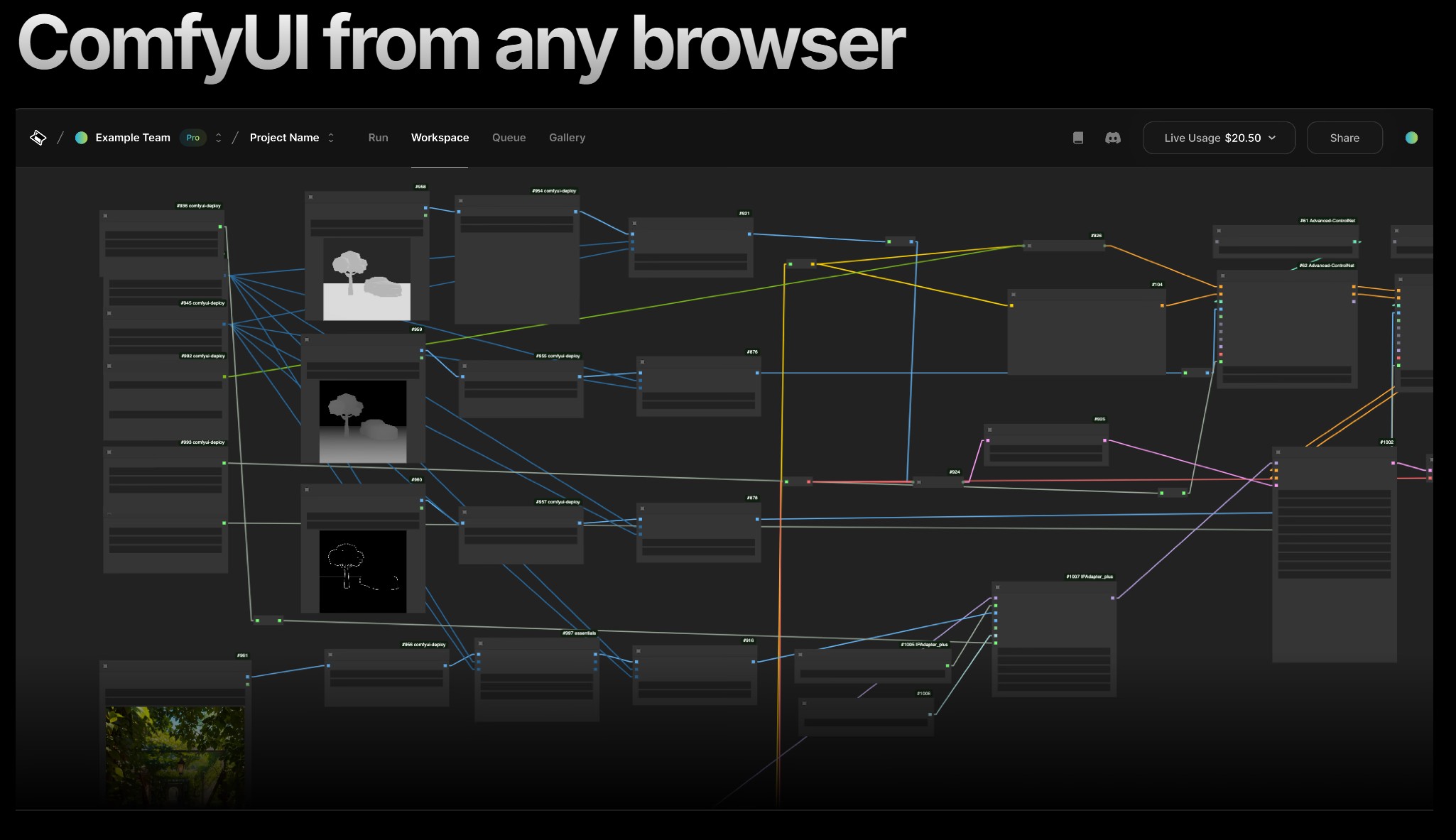

ComfyUI-Manager Joins Comfy-Org

https://blog.comfy.org/p/comfyui-manager-joins-comfy-org

On March 28, ComfyUI-Manager will be moving to the Comfy-Org GitHub organization as Comfy-Org/ComfyUI-Manager. This represents a natural evolution as they continue working to improve the custom node experience for all ComfyUI users.

What This Means For You

This change is primarily about improving support and development velocity. There are a few practical considerations:

- Automatic GitHub redirects will ensure all existing links, git commands, and references to the repository will continue to work seamlessly without any action needed

- For developers: Any existing PRs and issues will be transferred to the new repository location

- For users: ComfyUI-Manager will continue to function exactly as before—no action needed

- For workflow authors: Resources that reference ComfyUI-Manager will continue to work without interruption

-

AccVideo – Accelerating Video Diffusion Model with Synthetic Dataset

https://aejion.github.io/accvideo

https://github.com/aejion/AccVideo

https://huggingface.co/aejion/AccVideo

AccVideo is a novel, efficient distillation method that accelerates video diffusion models using a synthetic dataset. This method is 8.5x faster than HunyuanVideo.

-

Bennett Waisbren – ChatGPT 4 video generation

1. Rankin/Bass – That nostalgic stop-motion look like Rudolph the Red-Nosed Reindeer. Cozy and janky.

2. Don Bluth – Lavish hand-drawn fantasy. Lush lighting, expressive eyes, dramatic weight.

3. Fleischer Studios – 1930s rubber-hose style, like Betty Boop and Popeye. Surreal, bouncy, jazz-age energy.

4. Pixar – Clean, subtle facial animation, warm lighting, and impeccable shot composition.

5. Toei Animation (Classic Era) – Foundation of mainstream anime. Big eyes, clean lines, iconic nostalgia.

6. Cow and Chicken / Cartoon Network Gross-Out – Elastic, grotesque, hyper-exaggerated. Ugly-cute characters, zoom-ins on feet and meat, lowbrow chaos.

7. Max Fleischer’s Superman – Retro-futurist noir from the ’40s, bold shadows and heroic lighting.

8. Sylvain Chomet – French surrealist like The Triplets of Belleville. Slender, elongated, moody weirdness.

-

Reve Image 1.0 Halfmoon – A new model trained from the ground up to excel at prompt adherence, aesthetics, and typography

A little-known AI image generator called Reve Image 1.0 is trying to make a name in the text-to-image space, potentially outperforming established tools like Midjourney, Flux, and Ideogram. Users receive 100 free credits to test the service after signing up, with additional credits available at $5 for 500 generations. That is cheap compared to options like Midjourney or Ideogram, which start at $8 per month and can reach $120 per month depending on usage. It also offers 20 free generations per day.

-

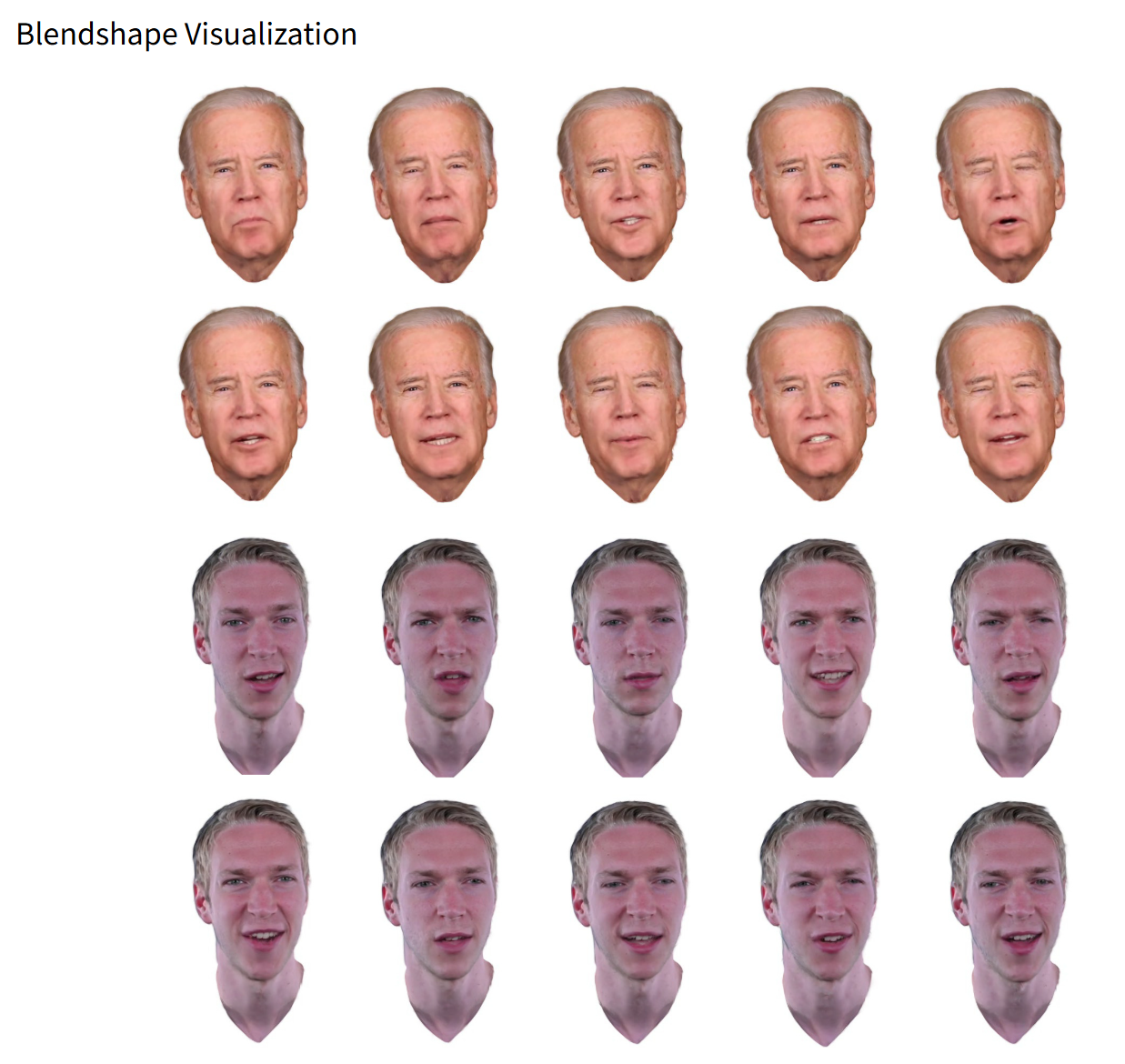

RGBAvatar – Reduced Gaussian Blendshapes for Online Modeling of Head Avatars

https://gapszju.github.io/RGBAvatar

A method for reconstructing photorealistic, animatable head avatars at speeds sufficient for on-the-fly reconstruction. Unlike prior approaches that utilize linear bases from 3D morphable models (3DMM) to model Gaussian blendshapes, our method maps tracked 3DMM parameters into reduced blendshape weights with an MLP, leading to a compact set of blendshape bases.

https://github.com/gapszju/RGBAvatar
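
The idea described above (an MLP turns tracked 3DMM parameters into a small set of blendshape weights, which then linearly combine a compact set of bases) can be sketched roughly as follows. This is a toy illustration, not the authors' implementation: the layer sizes, the function names `mlp` and `blend`, the flattened attribute vector, and the random untrained weights are all assumptions made for the example.

```python
import random


def mlp(params, w1, b1, w2, b2):
    """Tiny two-layer MLP with ReLU: tracked 3DMM params -> reduced blendshape weights."""
    hidden = [
        max(0.0, sum(w * p for w, p in zip(row, params)) + b)
        for row, b in zip(w1, b1)
    ]
    return [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(w2, b2)]


def blend(neutral, bases, weights):
    """Linear blendshape model: neutral + sum_k weights[k] * bases[k]."""
    out = list(neutral)
    for w, basis in zip(weights, bases):
        for i, delta in enumerate(basis):
            out[i] += w * delta
    return out


def random_matrix(rows, cols, rng):
    """Random placeholder values standing in for trained parameters."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


# All sizes below are hypothetical, chosen only to keep the example small.
N_3DMM = 50     # tracked 3DMM parameters per frame
N_HIDDEN = 32   # hidden units in the MLP
N_REDUCED = 8   # reduced blendshape weights (far fewer than N_3DMM)
N_ATTR = 12     # flattened Gaussian attributes (toy stand-in)

rng = random.Random(0)
w1, b1 = random_matrix(N_HIDDEN, N_3DMM, rng), [0.0] * N_HIDDEN
w2, b2 = random_matrix(N_REDUCED, N_HIDDEN, rng), [0.0] * N_REDUCED
neutral = [0.0] * N_ATTR
bases = random_matrix(N_REDUCED, N_ATTR, rng)

tracked = [rng.uniform(-1.0, 1.0) for _ in range(N_3DMM)]
weights = mlp(tracked, w1, b1, w2, b2)
avatar = blend(neutral, bases, weights)
print(len(weights), len(avatar))
```

The point of the sketch is the shape of the computation: the number of bases follows the MLP's output size rather than the size of the 3DMM parameter vector, which is what allows the set of bases to stay compact.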

-

Robert Legato joins Stability AI as Chief Pipeline Architect

https://stability.ai/news/introducing-our-new-chief-pipeline-architect-rob-legato

“Joining Stability AI is an incredible opportunity, and I couldn’t be more excited to help shape the next era of filmmaking,” said Legato. “With dynamic leaders like Prem Akkaraju and James Cameron driving the vision, the potential here is limitless. What excites me most is Stability AI’s commitment to filmmakers—building a tool that is as intuitive as it is powerful, designed to elevate creativity rather than replace it. It’s an artist-first approach to AI, and I’m thrilled to be part of it.”

-

Google Gemini Robotics

For safety considerations, Google mentions a “layered, holistic approach” that maintains traditional robot safety measures like collision avoidance and force limitations. The company describes developing a “Robot Constitution” framework inspired by Isaac Asimov’s Three Laws of Robotics and releasing a dataset unsurprisingly called “ASIMOV” to help researchers evaluate safety implications of robotic actions.

This new ASIMOV dataset represents Google’s attempt to create standardized ways to assess robot safety beyond physical harm prevention. The dataset appears designed to help researchers test how well AI models understand the potential consequences of actions a robot might take in various scenarios. According to Google’s announcement, the dataset will “help researchers to rigorously measure the safety implications of robotic actions in real-world scenarios.”

-

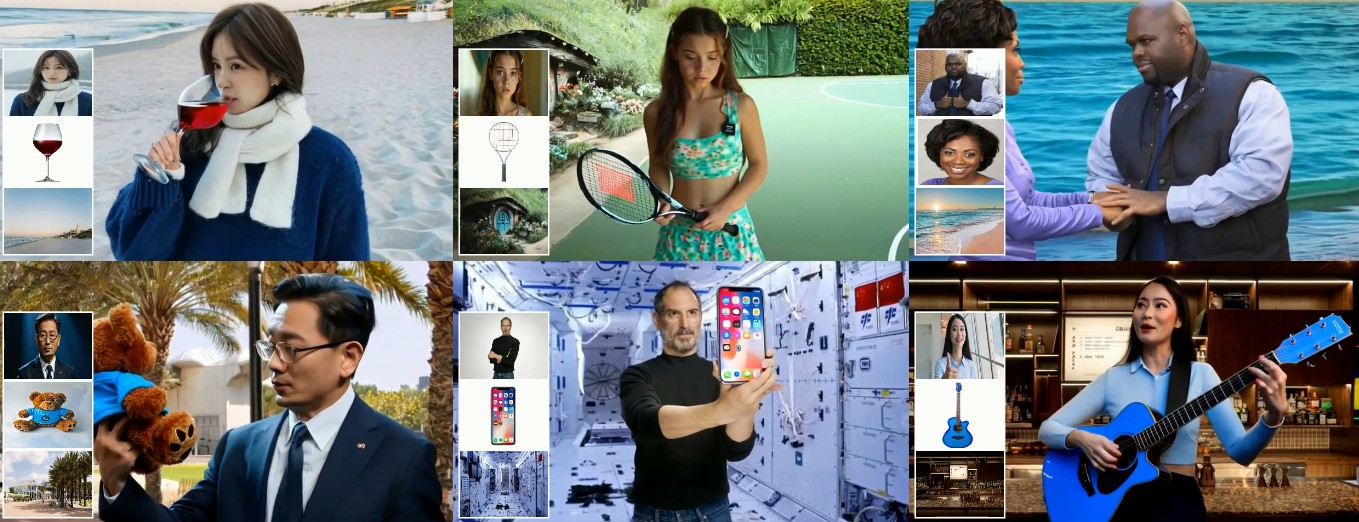

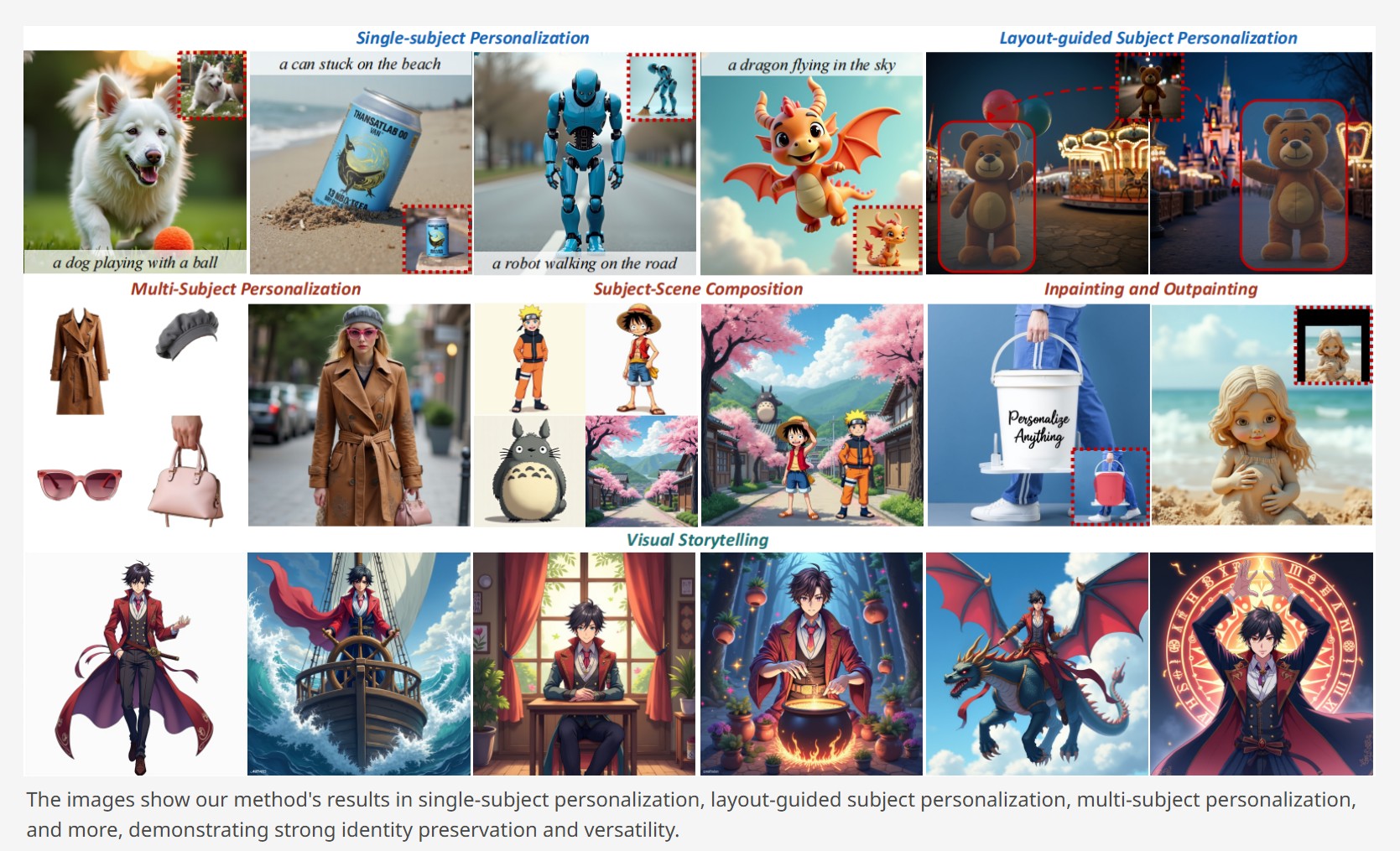

Personalize Anything – For Free with Diffusion Transformer

https://fenghora.github.io/Personalize-Anything-Page

Customize any subject with advanced DiT without additional fine-tuning.

