
Category: A.I.

-

MidJourney – Terms Of Service item 10

https://midjourney.gitbook.io/docs/terms-of-service

10. Limitation of Liability and Indemnity

We provide the service as is, and we make no promises or guarantees about it.

You understand and agree that we will not be liable to you or any third party for any loss of profits, use, goodwill, or data, or for any incidental, indirect, special, consequential or exemplary damages, however they arise.

You are responsible for your use of the service. If you harm someone else or get into a dispute with someone else, we will not be involved.

If you knowingly infringe someone else’s intellectual property, and that costs us money, we’re going to come find you and collect that money from you. We might also do other stuff, like try to get a court to make you pay our attorney’s fees. Don’t do it.

-

Mohsen Tabasi – Stable Diffusion for Houdini through DreamStudio

https://github.com/proceduralit/StableDiffusion_Houdini

https://github.com/proceduralit/StableDiffusion_Houdini/wiki/

This is a Houdini HDA that submits the render output as the init_image and, with help from PDG, lets artists easily define variations on Stable Diffusion parameters such as Sampling Method, Steps, Prompt Strength, and Noise Strength.
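In PDG, variations like these are usually expressed as wedges, where each parameter combination becomes its own work item. As a rough illustration only, the Python sketch below enumerates every combination of a few Stable Diffusion settings. The parameter names, sampler names, and values are hypothetical placeholders, not the HDA's actual interface, and nothing here talks to Houdini or DreamStudio.

```python
from itertools import product

# Hypothetical wedge: names and values are placeholders, not the HDA's real parameters.
WEDGE = {
    "sampler": ["k_euler", "k_lms"],
    "steps": [30, 50],
    "prompt_strength": [7.0, 12.0],
    "noise_strength": [0.4, 0.6],
}

def wedge_variations(wedge: dict) -> list:
    """Return one parameter dict per combination (the full Cartesian product)."""
    keys = list(wedge)
    return [dict(zip(keys, combo)) for combo in product(*(wedge[k] for k in keys))]

if __name__ == "__main__":
    for i, params in enumerate(wedge_variations(WEDGE)):
        # In a PDG-style setup each dict would map to one work item / one generation request.
        print(f"work item {i}: {params}")
```

Four parameters with two values each already give 2 × 2 × 2 × 2 = 16 variations, so a wedge grid grows quickly when every item is a remote generation request.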

Right now, DreamStudio is the only public server the HDA supports, so you need an account there and must connect the HDA to your account.

DreamStudio: https://beta.dreamstudio.ai/membership

-

AI Dresses by MaryAnna

https://www.linkedin.com/feed/update/urn:li:activity:7015985798567067648

Created by Discord user: @MaryAnna

-

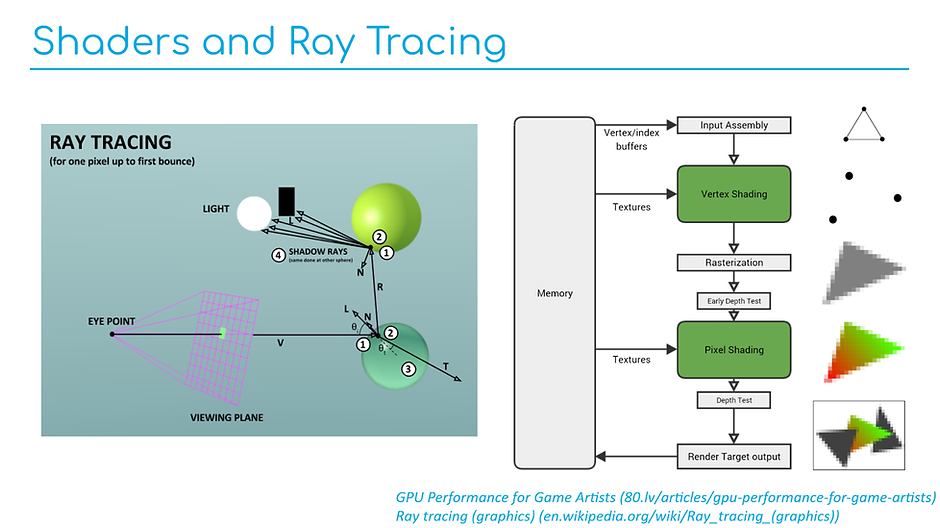

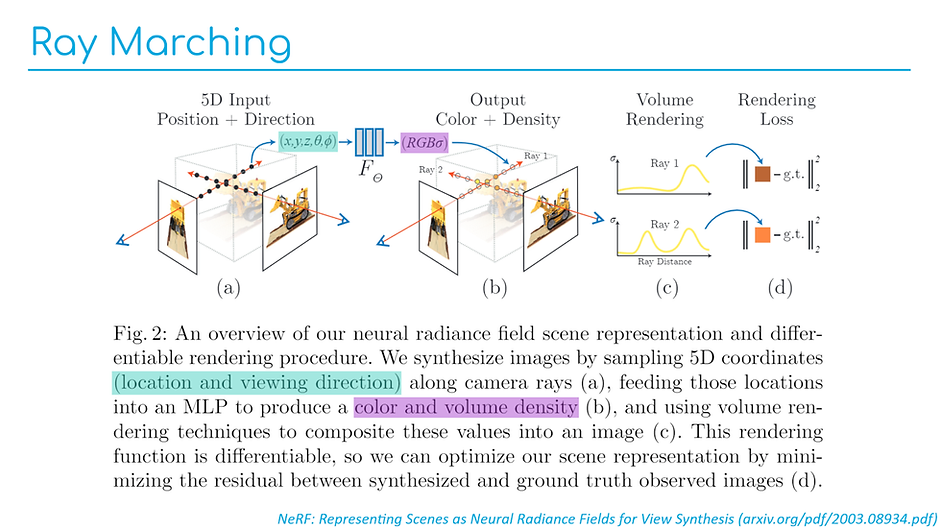

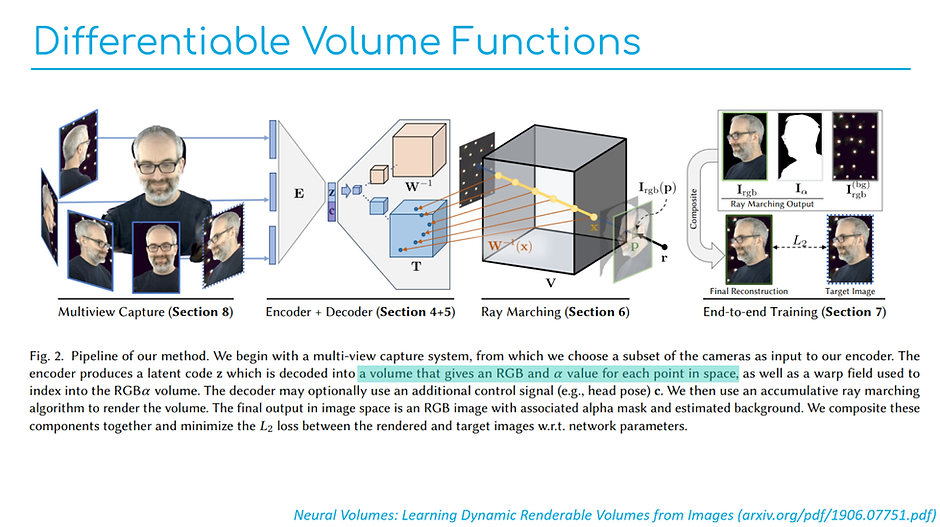

What is Neural Rendering?

https://www.zumolabs.ai/post/what-is-neural-rendering

“The key concept behind neural rendering approaches is that they are differentiable. A differentiable function is one whose derivative exists at each point in the domain. This is important because machine learning is basically the chain rule with extra steps: a differentiable rendering function can be learned with data, one gradient descent step at a time. Learning a rendering function statistically through data is fundamentally different from the classic rendering methods we described above, which calculate and extrapolate from the known laws of physics.”
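To make the "one gradient descent step at a time" idea concrete, here is a deliberately tiny Python sketch (not from the article): a one-pixel Lambertian "renderer" whose output is differentiable with respect to its albedo, so the albedo can be recovered from observed pixel values by gradient descent. The shading model, the true albedo of 0.8, and the sample lighting angles are illustrative assumptions; real neural renderers learn many network weights rather than a single scalar.

```python
# Toy differentiable "renderer": Lambertian shading of a single surface point.
# pixel = albedo * max(0, cos_theta), where cos_theta = dot(normal, light_dir).
def render(albedo: float, cos_theta: float) -> float:
    return albedo * max(0.0, cos_theta)

def d_render_d_albedo(cos_theta: float) -> float:
    # The pixel is linear in albedo, so its derivative is just the clamped cosine.
    return max(0.0, cos_theta)

def fit_albedo(observations, lr: float = 0.5, steps: int = 200, init: float = 0.1) -> float:
    """Recover albedo by gradient descent on the mean squared pixel error."""
    albedo = init
    n = len(observations)
    for _ in range(steps):
        grad = 0.0
        for cos_theta, target in observations:
            err = render(albedo, cos_theta) - target
            # Chain rule: d(err**2)/d(albedo) = 2 * err * d(render)/d(albedo)
            grad += 2.0 * err * d_render_d_albedo(cos_theta)
        albedo -= lr * grad / n  # one gradient descent step
    return albedo

# Synthetic "photos": the same point seen under four lighting angles (illustrative values).
TRUE_ALBEDO = 0.8
COS_THETAS = [1.0, 0.7, 0.3, -0.2]  # the last sample is in shadow and contributes no gradient
observations = [(c, render(TRUE_ALBEDO, c)) for c in COS_THETAS]

if __name__ == "__main__":
    print(f"recovered albedo: {fit_albedo(observations):.4f}")  # close to 0.8
```

Here the chain rule is applied by hand; frameworks such as PyTorch or JAX automate exactly this step for much larger rendering functions.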

-

Foundry Nuke Cattery – A library of open source machine learning models

The Cattery is a library of free third-party machine learning models converted to .cat files so that they run natively in Nuke. It is designed to bridge the gap between academia and production by giving all communities access to a range of ML models that run in Nuke. Users have access to state-of-the-art models for segmentation, depth estimation, optical flow, upscaling, denoising, and style transfer, and Foundry plans to expand the set of hosted models in the future.

https://www.foundry.com/insights/machine-learning/the-artists-guide-to-cattery

https://community.foundry.com/cattery

-

A.J. Ghergich – Using MJ (Midjourney) and Stable Diffusion 1.5 to imagine Polaroids of superheroes in dive bars, circa 1982

Prompt: Captain Marvel at dive-bar in 1982, polaroid, flash photo, ultra-detailed --q 2 --no hands --v 4
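Midjourney reads trailing parameters such as `--q` (quality), `--no` (negative prompt), and `--v` (model version) from the end of the prompt text. The hypothetical Python helper below only assembles such a prompt string from keyword arguments; it does not submit anything to Midjourney, and its name and behavior are our own illustration.

```python
def build_mj_prompt(text: str, **params) -> str:
    """Append Midjourney-style '--name value' parameters to a prompt (hypothetical helper)."""
    parts = [text.strip()]
    for name, value in params.items():
        if value is True:
            parts.append(f"--{name}")  # bare flag, e.g. --tile
        else:
            parts.append(f"--{name} {value}")
    return " ".join(parts)

prompt = build_mj_prompt(
    "Captain Marvel at dive-bar in 1982, polaroid, flash photo, ultra-detailed",
    q=2, no="hands", v=4,
)
print(prompt)
```

Keyword arguments keep their insertion order in Python 3.7+, so the parameters appear in the order they are passed.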

-

chat.openai – A conversational AI system that listens, learns, and challenges

https://openai.com/blog/chatgpt/

Tell me about when Christopher Columbus came to the US in 2015

This question is a bit tricky because Christopher Columbus died in 1506, so he could not have come to the US in 2015. But let’s pretend for a moment that he did!

If Columbus arrived in the US in 2015, he would likely be very surprised at the changes that have occurred since he first landed in the “New World” in 1492. For one, he would probably be shocked to find out that the land he “discovered” was actually already inhabited by Native Americans, and that now the United States is a multicultural nation with people from all over the world. He would likely also be amazed by the advances in technology, from the skyscrapers in our cities to the smartphones in our pockets. Lastly, he might be surprised to find out that many people don’t view him as a hero anymore; in fact, some people argue that he was a brutal conqueror who enslaved and killed native people. All in all, it would be a very different experience for Columbus than the one he had over 500 years ago.

-

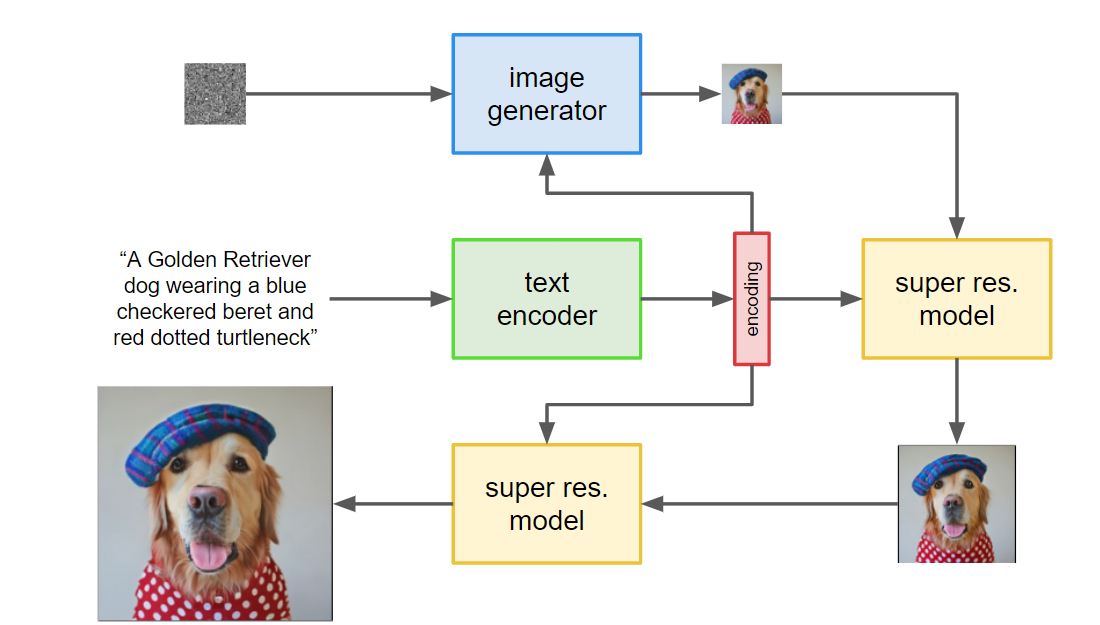

Stability.AI – Stable Diffusion 2.0 open source release

https://stability.ai/blog/stable-diffusion-v2-release

- New Text-to-Image Diffusion Models

- Super-resolution Upscaler Diffusion Models

- Depth-to-Image Diffusion Model

- Updated Inpainting Diffusion Model
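For readers who want to try these components locally, the checkpoints were published on Hugging Face and can be loaded with the `diffusers` library. The sketch below assumes `diffusers` and PyTorch are installed; the repo IDs and pipeline class names reflect the Hugging Face releases as we understand them and may change, and the component labels are our own grouping, not Stability AI's.

```python
# Hugging Face repo IDs and diffusers pipeline classes for the SD 2.0 release components.
SD2_COMPONENTS = {
    "text-to-image": ("stabilityai/stable-diffusion-2", "StableDiffusionPipeline"),  # 768x768
    "text-to-image-512": ("stabilityai/stable-diffusion-2-base", "StableDiffusionPipeline"),
    "super-resolution": ("stabilityai/stable-diffusion-x4-upscaler", "StableDiffusionUpscalePipeline"),
    "depth-to-image": ("stabilityai/stable-diffusion-2-depth", "StableDiffusionDepth2ImgPipeline"),
    "inpainting": ("stabilityai/stable-diffusion-2-inpainting", "StableDiffusionInpaintPipeline"),
}

def load_pipeline(component: str):
    """Download (on first use) and return the diffusers pipeline for one component."""
    # Imported lazily so the mapping can be inspected without diffusers installed.
    import diffusers

    repo_id, class_name = SD2_COMPONENTS[component]
    pipeline_cls = getattr(diffusers, class_name)
    return pipeline_cls.from_pretrained(repo_id)
```

For example, `pipe = load_pipeline("text-to-image").to("cuda")` followed by `pipe("a photo of an astronaut riding a horse").images[0]` should return a PIL image, assuming a CUDA GPU with enough memory for the 768×768 model.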

-

AI Data Laundering: How Academic and Nonprofit Researchers Shield Tech Companies from Accountability

“Simon Willison created a Datasette browser to explore WebVid-10M, one of the two datasets used to train the video generation model, and quickly learned that all 10.7 million video clips were scraped from Shutterstock, watermarks and all.”

“In addition to the Shutterstock clips, Meta also used 10 million video clips from this 100M video dataset from Microsoft Research Asia. It’s not mentioned on their GitHub, but if you dig into the paper, you learn that every clip came from over 3 million YouTube videos.”

“It’s become standard practice for technology companies working with AI to commercially use datasets and models collected and trained by non-commercial research entities like universities or non-profits.”

“Like with the artists, photographers, and other creators found in the 2.3 billion images that trained Stable Diffusion, I can’t help but wonder how the creators of those 3 million YouTube videos feel about Meta using their work to train their new model.”


DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.