https://www.theverge.com/2023/2/22/23611278/midjourney-ai-copyright-office-kristina-kashtanova


Author: pIXELsHAM.com

-

Every Political Ideology and Government

https://youtu.be/9cz4ikFcwMY?si=OSeQk8k6i8yO4r6f

https://youtu.be/rtUg_itWEz8?si=_A2I_ECHzjQDZ3x4

-

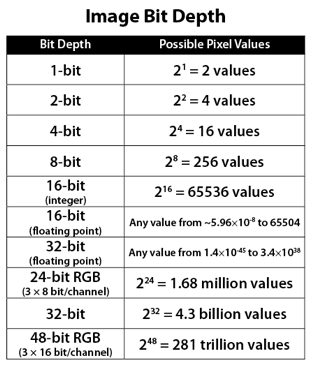

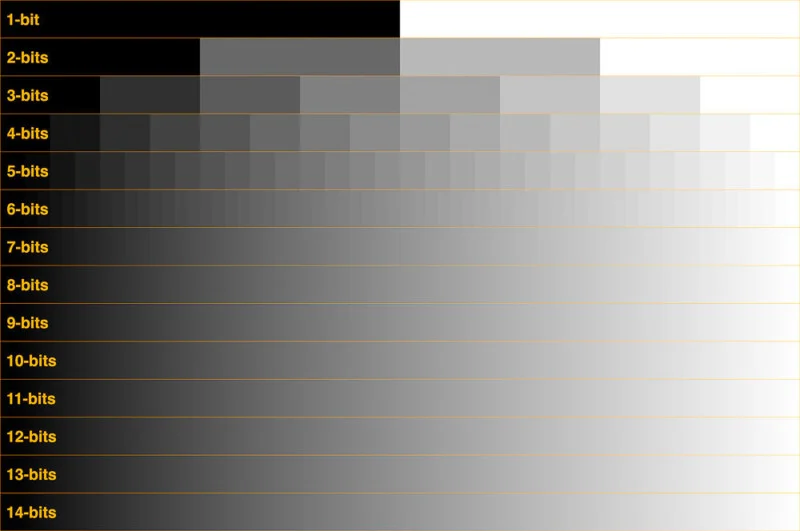

Image rendering bit depth

The terms 8-bit, 16-bit, 16-bit float, and 32-bit refer to different data formats used to store and represent image information, expressed as bits per color channel.

https://en.wikipedia.org/wiki/Color_depth

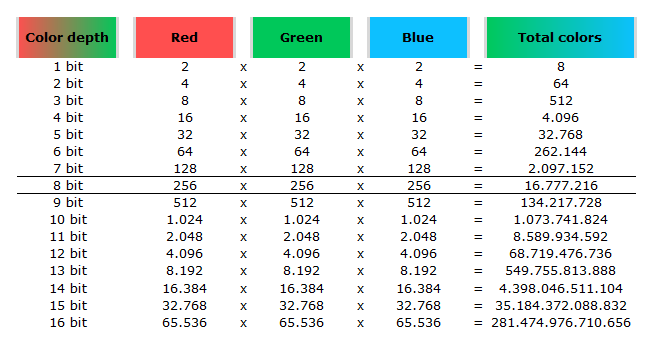

In color technology, color depth, also known as bit depth, is either the number of bits used to indicate the color of a single pixel, OR the number of bits used for each color component of a single pixel.

When referring to a pixel, the concept can be defined as bits per pixel (bpp).

When referring to a color component, the concept can be defined as bits per component, bits per channel, bits per color (all three abbreviated bpc), and also bits per pixel component, bits per color channel or bits per sample (bps). Modern standards tend to use bits per component, but historical lower-depth systems used bits per pixel more often.

Color depth is only one aspect of color representation, expressing the precision with which the amount of each primary can be expressed; the other aspect is how broad a range of colors can be expressed (the gamut). The definition of both color precision and gamut is accomplished with a color encoding specification which assigns a digital code value to a location in a color space.

Here’s a simple explanation of each.

8-bit images (i.e. 24 bits per pixel for a color image) are considered Low Dynamic Range.

They can store around 5 stops of light, and each channel of a pixel carries a value from 0 (black) to 255 (white).

As a comparison, DSLR cameras can capture ~12-15 stops of light and they use RAW files to store the information.

16-bit: This format uses 16 bits of integer data to represent the color value of each channel. With 16 bits, you can have 65,536 discrete levels per channel, allowing for relatively high precision and smooth gradients. However, because the values are fixed integers, it has a limited dynamic range, meaning it cannot accurately represent extremely bright or dark values. It is commonly used for regular images and textures.

16-bit float: This format, commonly referred to as “half-precision” or “half float,” uses floating-point numbers instead of fixed integers. Floating-point numbers allow for a much larger dynamic range. In this case, the 16 bits are split between a sign bit, an exponent, which controls the range of values that can be represented, and a mantissa, which stores the significant digits of the color value. The 16-bit float format provides a wider dynamic range than regular 16-bit, making it useful for high-dynamic-range imaging (HDRI) and for computations that need values above 1.0.

32-bit: Images in this format (i.e. 96 bits per pixel for a color image) are considered High Dynamic Range. This format, also known as “full-precision” or “float,” uses 32 bits per channel to represent color values and offers the highest precision and dynamic range among the four options. With 32 bits, you have a significantly larger number of discrete levels, allowing for extremely accurate color representation, smooth gradients, and a wide range of brightness values. It is commonly used for professional rendering, visual effects, and scientific applications where maximum precision is required.
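The level counts and per-pixel sizes quoted above follow from simple arithmetic. A minimal sketch, assuming three color channels (RGB, no alpha) and the IEEE 754 bit layouts for half and single floats (5 or 8 exponent bits, 10 or 23 mantissa bits); the function names are illustrative only:

```python
def integer_levels(bits_per_channel):
    """Number of discrete code values an integer channel can hold."""
    return 2 ** bits_per_channel


def bits_per_pixel(bits_per_channel, channels=3):
    """Total bits per pixel, assuming RGB with no alpha channel."""
    return bits_per_channel * channels


def max_finite_float(exponent_bits, mantissa_bits):
    """Largest finite value of an IEEE 754-style binary float."""
    bias = 2 ** (exponent_bits - 1) - 1
    return (2 - 2.0 ** -mantissa_bits) * 2.0 ** bias


if __name__ == "__main__":
    for bits in (8, 16):
        print(f"{bits}-bit integer: {integer_levels(bits)} levels per channel, "
              f"{bits_per_pixel(bits)} bits per pixel")
    print("16-bit float max:", max_finite_float(5, 10))
    print("32-bit float max:", max_finite_float(8, 23))
```

Integer formats spread their levels evenly between black and white, while float formats trade some of their bits for an exponent, which is where the extra range comes from.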

Bits and HDR coverage

High Dynamic Range (HDR) images are designed to capture a wide range of luminance values, from the darkest shadows to the brightest highlights, in order to reproduce a scene with more accuracy and detail. The bit depth of an image refers to the number of bits used to represent each pixel’s color information. When comparing 32-bit float and 16-bit float HDR images, the drop in accuracy primarily relates to the precision of the color information.

A 32-bit float HDR image offers a higher level of precision compared to a 16-bit float HDR image. In a 32-bit float format, each color channel (red, green, and blue) is represented by 32 bits, allowing for a larger range of values to be stored. This increased precision enables the image to retain more details and subtleties in color and luminance.

On the other hand, a 16-bit float HDR image utilizes 16 bits per color channel, resulting in a reduced range of values that can be represented. This lower precision leads to a loss of fine details and color nuances, especially in highly contrasted areas of the image where there are significant differences in luminance.
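To see the precision difference concretely, one can round-trip a value through each format and measure the error. A minimal sketch using Python's standard `struct` module, whose `e` and `f` format codes pack IEEE 754 half and single floats; the helper names and the sample value 0.18 (middle gray) are chosen for illustration:

```python
import struct


def round_trip(value, fmt):
    """Store value in the given struct float format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def relative_error(value, fmt):
    """Relative error introduced by storing value in the given format."""
    return abs(round_trip(value, fmt) - value) / abs(value)


if __name__ == "__main__":
    sample = 0.18  # middle gray in scene-linear values
    print("16-bit float:", round_trip(sample, "<e"), relative_error(sample, "<e"))
    print("32-bit float:", round_trip(sample, "<f"), relative_error(sample, "<f"))
```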

The drop in accuracy between 32-bit and 16-bit float HDR images becomes more noticeable as the exposure range of the scene increases. Exposure range refers to the span between the darkest and brightest areas of an image. In scenes with a limited exposure range, where the luminance differences are relatively small, the loss of accuracy may not be as prominent or perceptible. Such images usually span around 8-10 stops.

However, in scenes with a wide exposure range, such as a landscape with deep shadows and bright highlights, the reduced precision of a 16-bit float HDR image can result in visible artifacts like color banding, posterization, and loss of detail in both shadows and highlights. The image may exhibit abrupt transitions between tones or colors, which can appear unnatural and less realistic.

To provide a rough estimate, it is often observed that exposure values beyond approximately ±6 to ±8 stops from the middle gray (18% reflectance) may be more prone to accuracy issues in a 16-bit float format. This range may vary depending on the specific implementation and encoding scheme used.
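How the error behaves across stops can be probed directly. The sketch below assumes the IEEE 754 half layout (as packed by `struct`'s `e` code), scene-linear values where middle gray is 0.18, and one stop as a factor of two; other encodings may behave differently. Under those assumptions the relative error stays roughly constant over the normal range, grows for very dark values that fall into the subnormal range, and very bright values overflow. The helper names are illustrative.

```python
import math
import struct

MIDDLE_GRAY = 0.18


def to_half(value):
    """Round value to the nearest half float; overflow becomes infinity."""
    try:
        return struct.unpack("<e", struct.pack("<e", value))[0]
    except (OverflowError, struct.error):
        return math.copysign(math.inf, value)


def stop_error(stops):
    """Relative error of a value `stops` away from middle gray."""
    value = MIDDLE_GRAY * 2.0 ** stops
    return abs(to_half(value) - value) / value


if __name__ == "__main__":
    for stops in range(-24, 21, 4):
        print(f"{stops:+3d} stops: relative error {stop_error(stops):.6f}")
```

Running the sweep on your own value range is a quick way to check whether a given HDR would survive conversion to 16-bit float.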

To summarize, the drop in accuracy between 32-bit and 16-bit float HDR images is mainly related to the reduced precision of color information. This decrease in precision becomes more apparent in scenes with a wide exposure range, affecting the representation of fine details and leading to visible artifacts in the image.

In practice, this means that exposure values beyond a certain range will experience a loss of accuracy and detail when stored in a 16-bit float format. The exact range at which this loss occurs depends on the encoding scheme and the specific implementation. However, in general, extremely bright or extremely dark values that fall outside the representable range may be subject to quantization errors, resulting in loss of detail, banding, or other artifacts.

HDRs used for lighting purposes are usually slightly convolved (blurred) to improve sampling speed and remove specular artefacts. For that reason, 16-bit float HDRIs tend to be the most used in CG cycles.

-

Victor Perez – The Color Management Handbook for Visual Effects Artists

Digital Color Principles, Color Management Fundamentals & ACES Workflows

-

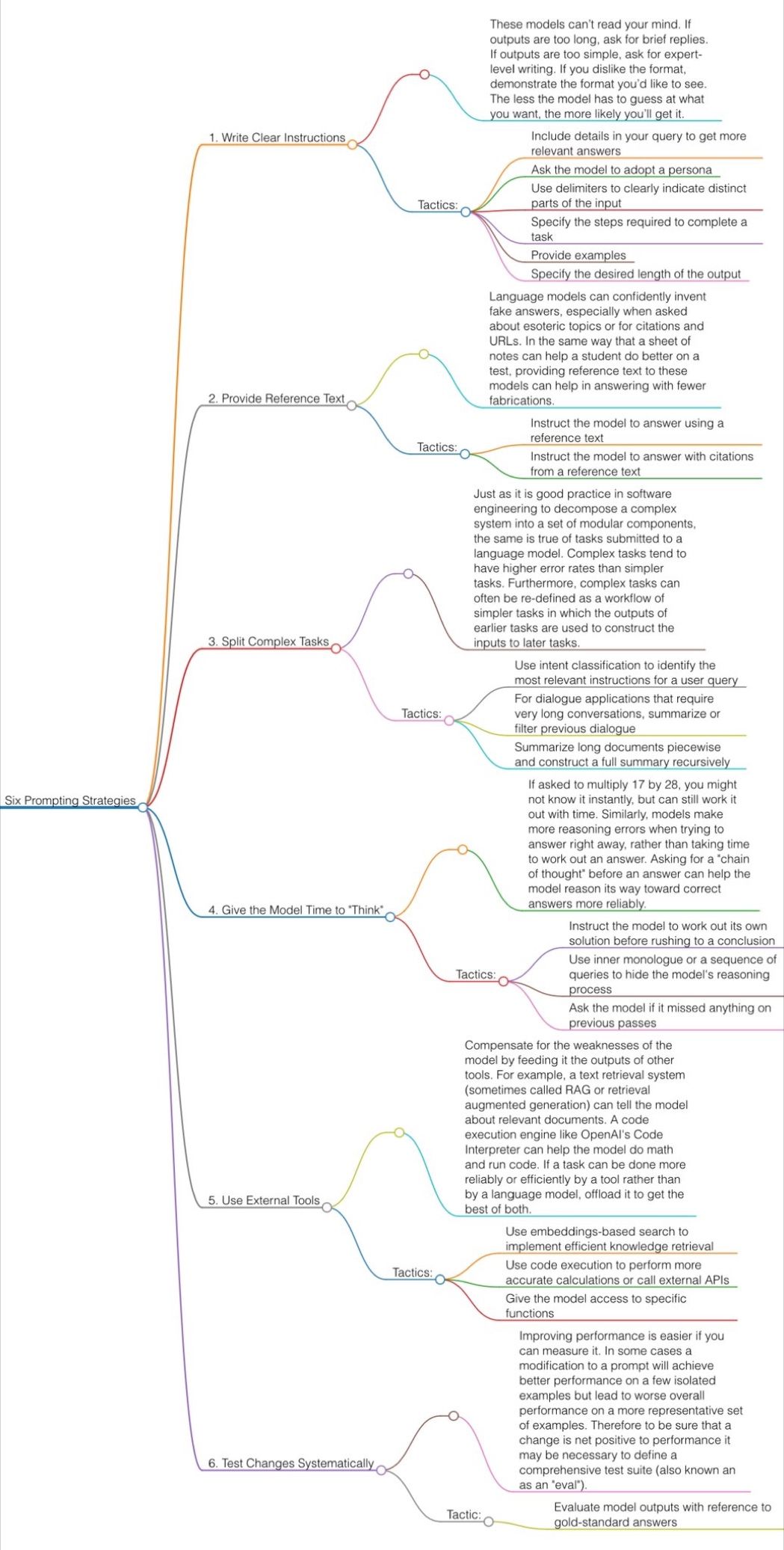

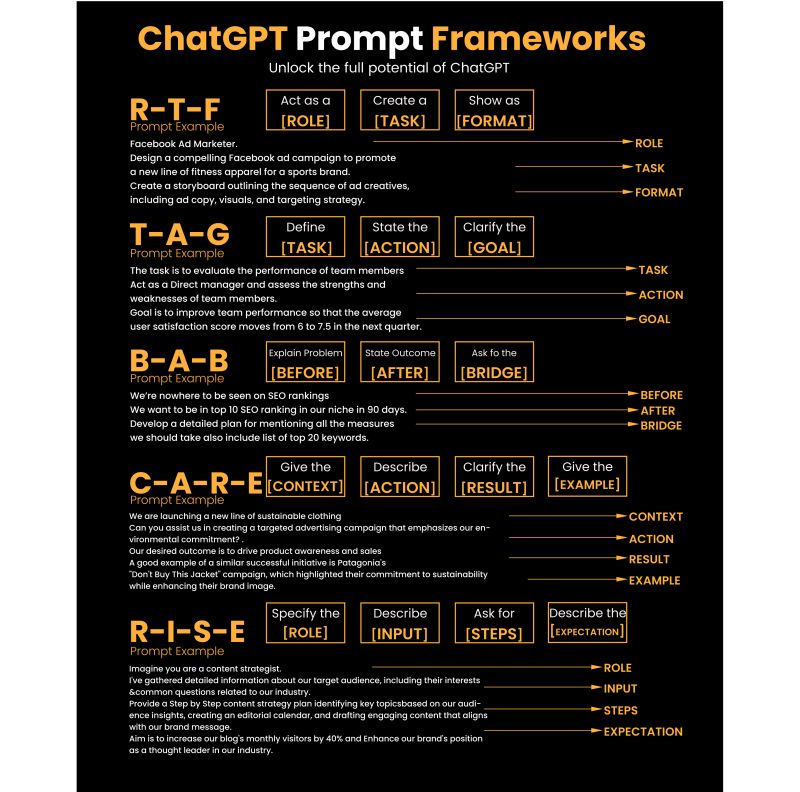

Guide to Prompt Engineering

Quick prompting scheme:

1- pass an image to JoyCaption

https://www.pixelsham.com/2024/12/23/joy-caption-alpha-two-free-automatic-caption-of-images/

2- tune the caption with ChatGPT as suggested by Pixaroma:

Craft detailed prompts for AI (image/video) generation, avoiding quotation marks. When I provide a description or image, translate it into a prompt that captures a cinematic, movie-like quality, focusing on elements like scene, style, mood, lighting, and specific visual details. Ensure that the prompt evokes a rich, immersive atmosphere, emphasizing textures, depth, and realism. Always incorporate (static/slow) camera or cinematic movement to enhance the feeling of fluidity and visual storytelling. Keep the wording precise yet descriptive, directly usable, and designed to achieve a high-quality, film-inspired result.

https://www.reddit.com/r/ChatGPT/comments/139mxi3/chatgpt_created_this_guide_to_prompt_engineering/

1. Use the 80/20 principle to learn faster

Prompt: “I want to learn about [insert topic]. Identify and share the most important 20% of learnings from this topic that will help me understand 80% of it.”

2. Learn and develop any new skill

Prompt: “I want to learn/get better at [insert desired skill]. I am a complete beginner. Create a 30-day learning plan that will help a beginner like me learn and improve this skill.”

3. Summarize long documents and articles

Prompt: “Summarize the text below and give me a list of bullet points with key insights and the most important facts.” [Insert text]

4. Train ChatGPT to generate prompts for you

Prompt: “You are an AI designed to help [insert profession]. Generate a list of the 10 best prompts for yourself. The prompts should be about [insert topic].”

5. Master any new skill

Prompt: “I have 3 free days a week and 2 months. Design a crash study plan to master [insert desired skill].”

6. Simplify complex information

Prompt: “Break down [insert topic] into smaller, easier-to-understand parts. Use analogies and real-life examples to simplify the concept and make it more relatable.”
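The bracketed placeholders in these prompts lend themselves to simple templating. A minimal sketch, assuming you want to fill them programmatically before pasting the prompt into a chatbot; the `fill_prompt` helper is hypothetical and not part of any tool mentioned here:

```python
import re


def fill_prompt(template, value):
    """Replace every [insert ...] placeholder in template with value."""
    # A function replacement keeps backslashes in value from being
    # interpreted as regex escapes.
    return re.sub(r"\[insert [^\]]*\]", lambda _match: value, template,
                  flags=re.IGNORECASE)


if __name__ == "__main__":
    template = ("I want to learn about [insert topic]. Identify and share the "
                "most important 20% of learnings from this topic that will "
                "help me understand 80% of it.")
    print(fill_prompt(template, "color management"))
```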

More suggestions under the post…

-

Blockade Labs – Sketch-a-skybox free 360° image generator

Skybox AI is a free 360° image generator. Use the power of AI to imagine stunning worlds in seconds and fine tune them for use in immersive VR, XR, or games.

https://skybox.blockadelabs.com/

-

Tobia Montanari – Memory Colors: an essential tool for Colorists

https://www.tobiamontanari.com/memory-colors-an-essential-tool-for-colorists/

“Memory colors are colors that are universally associated with specific objects, elements or scenes in our environment. They are the colors that we expect to see in specific situations: these colors are based on our expectation of how certain objects should look based on our past experiences and memories.

For instance, we associate specific hues, saturation and brightness values with human skintones and a slight variation can significantly affect the way we perceive a scene.

Similarly, we expect blue skies to have a particular hue, green trees to be a specific shade and so on.

Memory colors live inside of our brains and we often impose them onto what we see. By considering them during the grading process, the resulting image will be more visually appealing and won’t distract the viewer from the intended message of the story. Even a slight deviation from memory colors in a movie can create a sense of discordance, ultimately detracting from the viewer’s experience.”

-

Laurence Van Elegem – The era of gigantic AI models like GPT-4 is coming to an end

https://www.linkedin.com/feed/update/urn:li:activity:7061987804548870144

Sam Altman, CEO of OpenAI, dropped a 💣 at a recent MIT event, declaring that the era of gigantic AI models like GPT-4 is coming to an end. He believes that future progress in AI needs new ideas, not just bigger models.

So why is that revolutionary? Well, this is how OpenAI’s LLMs (the models that ‘feed’ chatbots like ChatGPT & Google Bard) grew exponentially over the years:

➡️GPT-2 (2019): 1.5 billion parameters

➡️GPT-3 (2020): 175 billion parameters

➡️GPT-4 (2023): amount undisclosed – but likely trillions of parameters

That kind of parameter growth is no longer tenable, feels Altman.

Why?:

➡️RETURNS: scaling up model size comes with diminishing returns.

➡️PHYSICAL LIMITS: there’s a limit to how many & how quickly data centers can be built.

➡️COST: ChatGPT cost over 100 million dollars to develop.

What is he NOT saying? That access to data is becoming damned hard & expensive. So if you have a model that keeps needing more data to become better, that’s a problem.

Why is it becoming harder and more expensive to access data?

🎨Copyright conundrums: Getty Images and individual artists like Sarah Andersen, Kelly McKernan & Karla Ortiz are suing AI companies over unauthorized use of their content. Universal Music asked Spotify & Apple Music to stop AI companies from accessing their songs for training.

🔐Privacy matters & regulation: Italy banned ChatGPT over privacy concerns (now back after changes). Germany, France, Ireland, Canada, and Spain remain suspicious. Samsung even warned employees not to use AI tools like ChatGPT for security reasons.

💸Data monetization: Twitter, Reddit, Stack Overflow & others want AI companies to pay up for training on their data. Contrary to most artists, Grimes is allowing anyone to use her voice for AI-generated songs … for a 50% profit share.

🕸️Web3’s impact: If Web3 fulfills its promise, users could store data in personal vaults or cryptocurrency wallets, making it harder for LLMs to access the data they crave.

🌎Geopolitics: it’s increasingly difficult for data to cross country borders. Just think about China and TikTok.

😷Data contamination: We have this huge amount of ‘new’ – and sometimes hallucinated – data that is being generated by generative AI chatbots. What will happen if we feed that data back into their LLMs?

No wonder that people like Sam Altman are looking for ways to make the models better without having to use more data. If you want to know more, check our brand new Radar podcast episode (link in the comments), where I talked about this & more with Steven Van Belleghem, Peter Hinssen, Pascal Coppens & Julie Vens – De Vos. We also discussed Twitter, TikTok, Walmart, Amazon, Schmidt Futures, our Never Normal Tour with Mediafin in New York (link in the comments), the human energy crisis, Apple’s new high-yield savings account, the return of China, BYD, AI investment strategies, the power of proximity, the end of Buzzfeed news & much more.

-

ChatGPT’s watermarks can help Google detect AI generated text

https://www.binance.com/en/feed/post/144141

OpenAI, the corporation behind ChatGPT, has announced plans to introduce a new watermarking feature to help Google detect AI generated text. Watermarked text in ChatGPT will carry a cryptographic secret code embedded as a pattern of words, letters, and punctuation.
