https://app.pixverse.ai/onboard

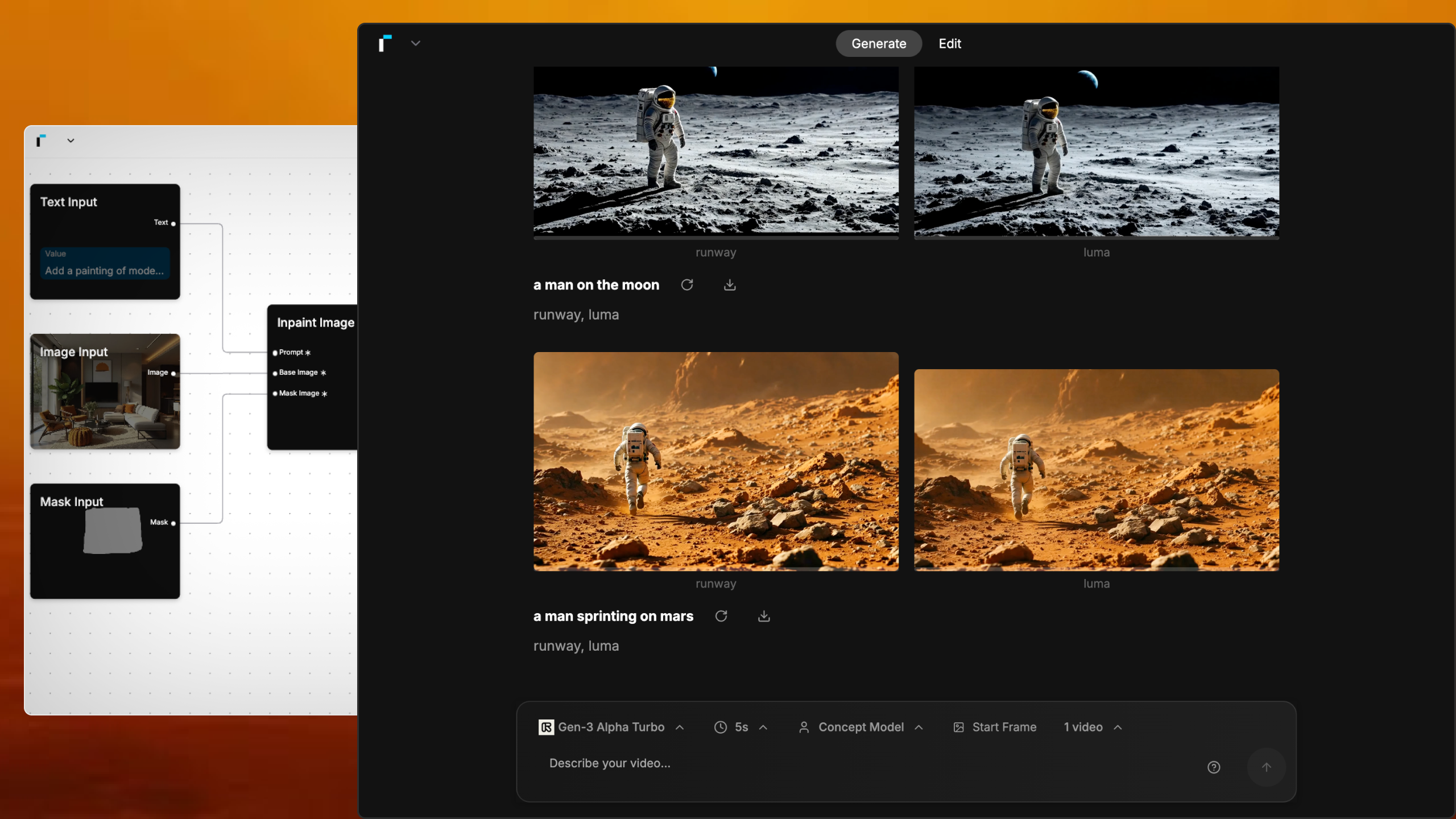

PixVerse now has 3 main features:

– Text to video ➡️ How To Generate Videos With Text Prompts

– Image to video ➡️ How To Animate Your Images And Bring Them To Life

– Upscale ➡️ How to Upscale Your Video

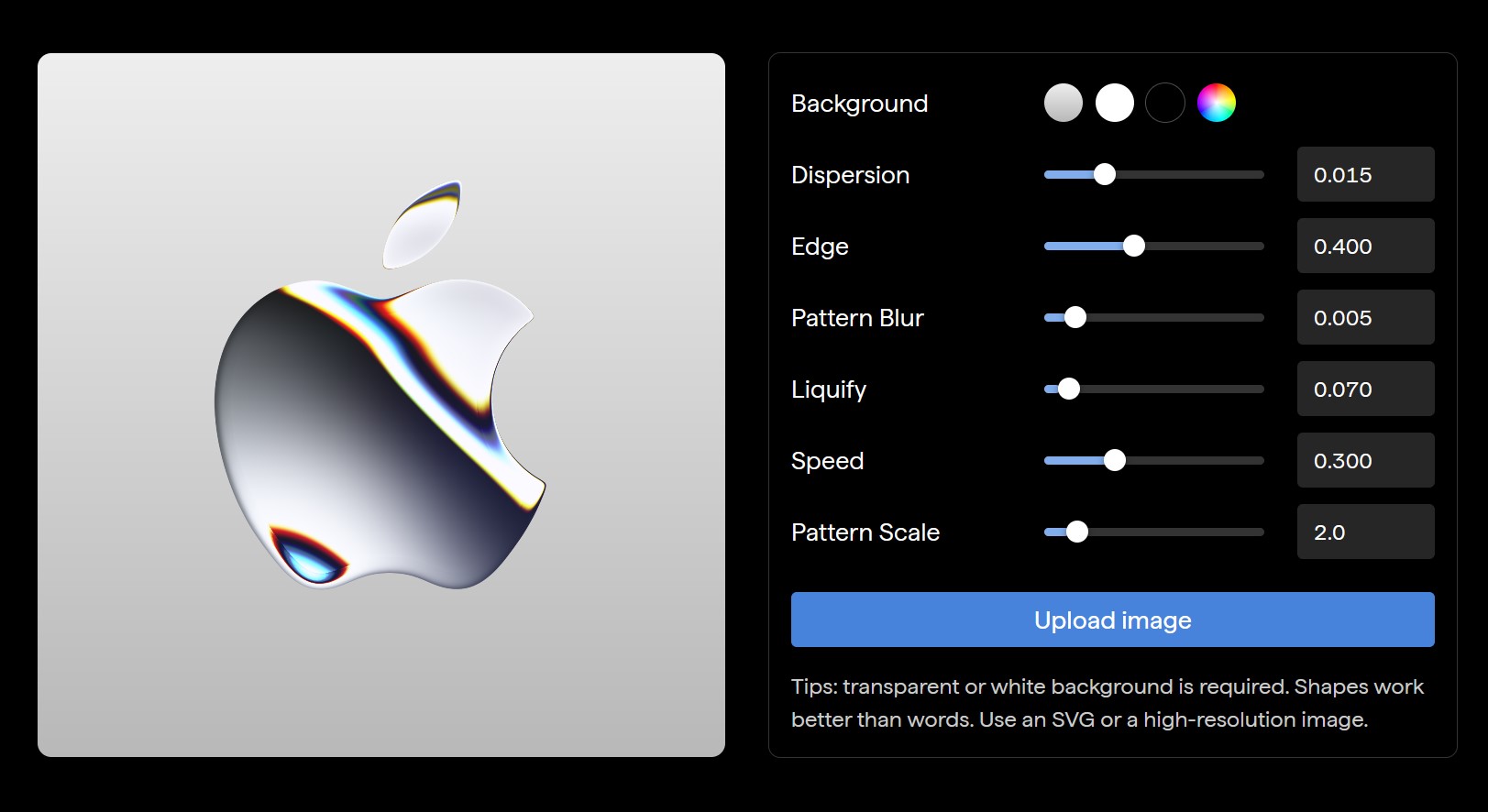

Enhanced Capabilities

– Improved Prompt Understanding: Achieve more accurate prompt interpretation and stunning video dynamics.

– Supports Various Video Ratios: Choose from 16:9, 9:16, 3:4, 4:3, and 1:1 ratios.

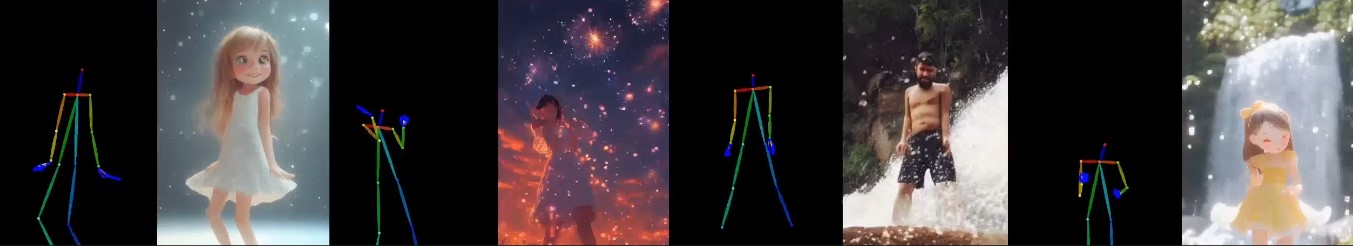

– Upgraded Styles: Style functionality returns with options like Anime, Realistic, Clay, and 3D. It supports both text-to-video and image-to-video stylization.

New Features

– Lipsync: Enter text or upload audio, and PixVerse automatically syncs the characters’ lip movements in the generated video to it.

– Effect: Offers 8 one-click creative effects, including Halloween-themed options such as Zombie Transformation, Wizard Hat, and Monster Invasion.

– Extend: Extend the generated video by an additional 5-8 seconds, with control over the content of the extended segment.