3Dprinting (178) A.I. (833) animation (348) blender (206) colour (233) commercials (52) composition (152) cool (361) design (646) Featured (79) hardware (311) IOS (109) jokes (138) lighting (288) modeling (144) music (186) photogrammetry (189) photography (754) production (1287) python (91) quotes (496) reference (314) software (1350) trailers (305) ves (549) VR (221)

Search results for: “hdr”

-

Lighting Every Darkness with 3DGS: Fast Training and Real-Time Rendering and Denoising for HDR View Synthesis

https://srameo.github.io/projects/le3d/

LE3D is a method for real-time HDR view synthesis from RAW images. It is particularly effective for nighttime scenes.

https://github.com/Srameo/LE3D

-

Vahan Sosoyan MakeHDR – an OpenFX open source plug-in for merging multiple LDR images into a single HDRI

https://github.com/Sosoyan/make-hdr

Feature notes

- Merge up to 16 inputs with 8, 10 or 12 bit depth processing

- User friendly logarithmic Tone Mapping controls within the tool

- Advanced controls such as Sampling rate and Smoothness

Available at cross platform on Linux, MacOS and Windows Works consistent in compositing applications like Nuke, Fusion, Natron.

NOTE: The goal is to clean the initial individual brackets before or at merging time as much as possible.

This means:- keeping original shooting metadata

- de-fringing

- removing aberration (through camera lens data or automatically)

- at 32 bit

- in ACEScg (or ACES) wherever possible

-

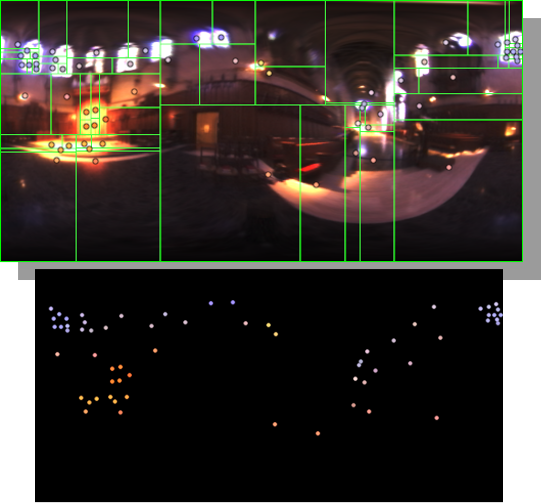

Paul d’Herbermont – Nuke HDRI model detection with TorchScript

A tool that detects, crops, and presents reference & cg spheres

https://www.patreon.com/posts/nuke-auto-ai-96524139

-

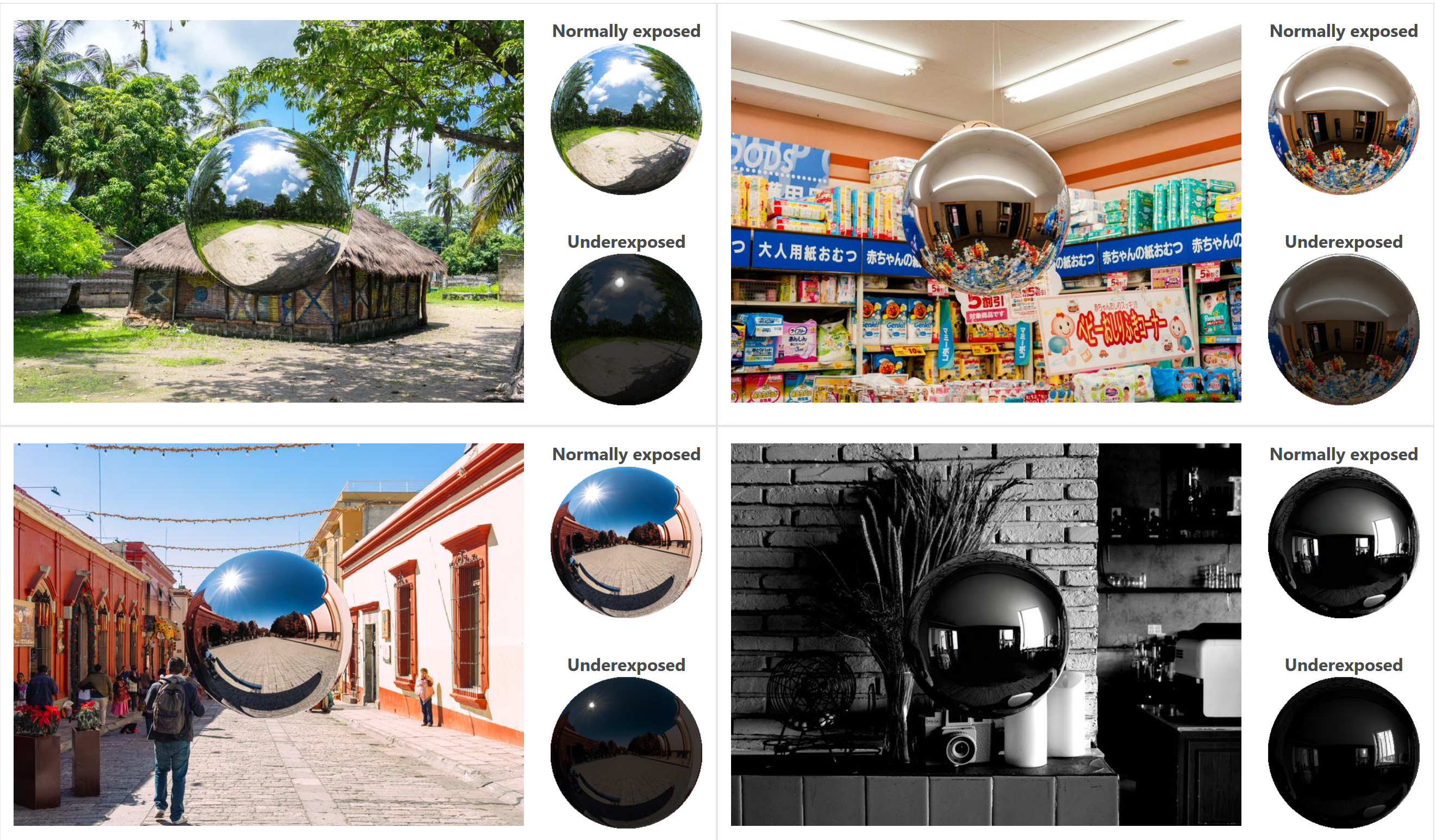

DiffusionLight: HDRI Light Probes for Free by Painting a Chrome Ball

https://diffusionlight.github.io/

https://github.com/DiffusionLight/DiffusionLight

https://github.com/DiffusionLight/DiffusionLight?tab=MIT-1-ov-file#readme

https://colab.research.google.com/drive/15pC4qb9mEtRYsW3utXkk-jnaeVxUy-0S

“a simple yet effective technique to estimate lighting in a single input image. Current techniques rely heavily on HDR panorama datasets to train neural networks to regress an input with limited field-of-view to a full environment map. However, these approaches often struggle with real-world, uncontrolled settings due to the limited diversity and size of their datasets. To address this problem, we leverage diffusion models trained on billions of standard images to render a chrome ball into the input image. Despite its simplicity, this task remains challenging: the diffusion models often insert incorrect or inconsistent objects and cannot readily generate images in HDR format. Our research uncovers a surprising relationship between the appearance of chrome balls and the initial diffusion noise map, which we utilize to consistently generate high-quality chrome balls. We further fine-tune an LDR difusion model (Stable Diffusion XL) with LoRA, enabling it to perform exposure bracketing for HDR light estimation. Our method produces convincing light estimates across diverse settings and demonstrates superior generalization to in-the-wild scenarios.”

-

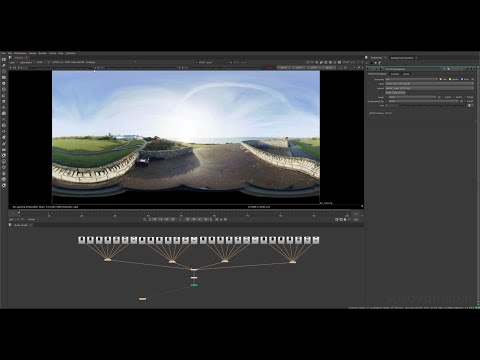

Simulon – a Hollywood production studio app in the hands of an independent creator with access to consumer hardware, LDRi to HDRi through ML

Divesh Naidoo: The video below was made with a live in-camera preview and auto-exposure matching, no camera solve, no HDRI capture and no manual compositing setup. Using the new Simulon phone app.

LDR to HDR through ML

https://simulon.typeform.com/betatest

Process example

-

Fast, optimized ‘for’ pixel loops with OpenCV and Python to create tone mapped HDR images

https://pyimagesearch.com/2017/08/28/fast-optimized-for-pixel-loops-with-opencv-and-python/

https://learnopencv.com/exposure-fusion-using-opencv-cpp-python/

Exposure Fusion is a method for combining images taken with different exposure settings into one image that looks like a tone mapped High Dynamic Range (HDR) image.

-

HDRI Resources

Text2Light

- https://www.cgtrader.com/free-3d-models/exterior/other/10-free-hdr-panoramas-created-with-text2light-zero-shot

- https://frozenburning.github.io/projects/text2light/

- https://github.com/FrozenBurning/Text2Light

Royalty free links

- https://locationtextures.com/panoramas/

- http://www.noahwitchell.com/freebies

- https://polyhaven.com/hdris

- https://hdrmaps.com/

- https://www.ihdri.com/

- https://hdrihaven.com/

- https://www.domeble.com/

- http://www.hdrlabs.com/sibl/archive.html

- https://www.hdri-hub.com/hdrishop/hdri

- http://noemotionhdrs.net/hdrevening.html

- https://www.openfootage.net/hdri-panorama/

- https://www.zwischendrin.com/en/browse/hdri

Nvidia GauGAN360

-

Unity – HDRI processing

The goal is to clean the initial individual brackets before or at merging time as much as possible.

This means:- keeping original shooting metadata

- de-fringing

- removing aberration (through camera lens data or automatically)

- at 32 bit

- in ACEScg (or ACES) wherever possible

Local copy

-

NVIDIA GauGAN360 – AI driven latlong HDRI creation tool

https://blogs.nvidia.com/blog/2022/08/09/neural-graphics-sdk-metaverse-content/

Unfortunately, png output only at the moment:

http://imaginaire.cc/gaugan360/

-

Erik Winquist – The Definitive Weta Digital Guide to IBL hdri capture

www.fxguide.com/fxfeatured/the-definitive-weta-digital-guide-to-ibl

Notes:

- Camera type: full frame with exposure bracketing and an 8mm circular fish eye lens.

- Bracketing: 7 exposures at 2 stops increments.

- Tripod: supporting 120 degrees locked offsets

- Camera angle: should point up 7.5 degrees for better sky or upper dome coverage.

- Camera focus: set and tape locked to manual

- Start shooting looking towards the sun direction with and without the ND3 filter; The other angles will not require the ND3 filter.

- Documenting shooting with a slate (measure distance to slate, day, location, camera info, camera temperature, camera position)

NOTE: The goal is to clean the initial individual brackets before or at merging time as much as possible.

This means:- keeping original shooting metadata

- de-fringing

- removing aberration (through camera lens data or automatically)

- at 32 bit

- in ACEScg (or ACES) wherever possible

-

RawTherapee – a free, open source, cross-platform raw image and HDRi processing program

5.10 of this tool includes excellent tools to clean up cr2 and cr3 used on set to support HDRI processing.

Converting raw to AcesCG 32 bit tiffs with metadata. -

HDRI shooting and editing by Xuan Prada and Greg Zaal

www.xuanprada.com/blog/2014/11/3/hdri-shooting

http://blog.gregzaal.com/2016/03/16/make-your-own-hdri/

http://blog.hdrihaven.com/how-to-create-high-quality-hdri/

Shooting checklist

- Full coverage of the scene (fish-eye shots)

- Backplates for look-development (including ground or floor)

- Macbeth chart for white balance

- Grey ball for lighting calibration

- Chrome ball for lighting orientation

- Basic scene measurements

- Material samples

- Individual HDR artificial lighting sources if required

Methodology

- Plant the tripod where the action happens, stabilise it and level it

- Set manual focus

- Set white balance

- Set ISO

- Set raw+jpg

- Set apperture

- Metering exposure

- Set neutral exposure

- Read histogram and adjust neutral exposure if necessary

- Shot slate (operator name, location, date, time, project code name, etc)

- Set auto bracketing

- Shot 5 to 7 exposures with 3 stops difference covering the whole environment

- Place the aromatic kit where the tripod was placed, and take 3 exposures. Keep half of the grey sphere hit by the sun and half in shade.

- Place the Macbeth chart 1m away from tripod on the floor and take 3 exposures

- Take backplates and ground/floor texture references

- Shoot reference materials

- Write down measurements of the scene, specially if you are shooting interiors.

- If shooting artificial lights take HDR samples of each individual lighting source.

Exposures starting point

- Day light sun visible ISO 100 F22

- Day light sun hidden ISO 100 F16

- Cloudy ISO 320 F16

- Sunrise/Sunset ISO 100 F11

- Interior well lit ISO 320 F16

- Interior ambient bright ISO 320 F10

- Interior bad light ISO 640 F10

- Interior ambient dark ISO 640 F8

- Low light situation ISO 640 F5

NOTE: The goal is to clean the initial individual brackets before or at merging time as much as possible.

This means:- keeping original shooting metadata

- de-fringing

- removing aberration (through camera lens data or automatically)

- at 32 bit

- in ACEScg (or ACES) wherever possible

Here are the tips for using the chromatic ball in VFX projects, written in English:

https://www.linkedin.com/posts/bellrodrigo_here-are-the-tips-for-using-the-chromatic-activity-7200950595438940160-AGBpTips for Using the Chromatic Ball in VFX Projects**

The chromatic ball is an invaluable tool in VFX work, helping to capture lighting and reflection data crucial for integrating CGI elements seamlessly. Here are some tips to maximize its effectiveness:

1. **Positioning**:

– Place the chromatic ball in the same lighting conditions as the main subject. Ensure it is visible in the camera frame but not obstructing the main action.

– Ideally, place the ball where the CGI elements will be integrated to match the lighting and reflections accurately.2. **Recording Reference Footage**:

– Capture reference footage of the chromatic ball at the beginning and end of each scene or lighting setup. This ensures you have consistent lighting data for the entire shoot.3. **Consistent Angles**:

– Use consistent camera angles and heights when recording the chromatic ball. This helps in comparing and matching lighting setups across different shots.4. **Combine with a Gray Ball**:

– Use a gray ball alongside the chromatic ball. The gray ball provides a neutral reference for exposure and color balance, complementing the chromatic ball’s reflection data.5. **Marking Positions**:

– Mark the position of the chromatic ball on the set to ensure consistency when shooting multiple takes or different camera angles.6. **Lighting Analysis**:

– Analyze the chromatic ball footage to understand the light sources, intensity, direction, and color temperature. This information is crucial for creating realistic CGI lighting and shadows.7. **Reflection Analysis**:

– Use the chromatic ball to capture the environment’s reflections. This helps in accurately reflecting the CGI elements within the same scene, making them blend seamlessly.8. **Use HDRI**:

– Capture High Dynamic Range Imagery (HDRI) of the chromatic ball. HDRI provides detailed lighting information and can be used to light CGI scenes with greater realism.9. **Communication with VFX Team**:

– Ensure that the VFX team is aware of the chromatic ball’s data and how it was captured. Clear communication ensures that the data is used effectively in post-production.10. **Post-Production Adjustments**:

– In post-production, use the chromatic ball data to adjust the CGI elements’ lighting and reflections. This ensures that the final output is visually cohesive and realistic. -

Free HDRI libraries

noahwitchell.com

http://www.noahwitchell.com/freebieslocationtextures.com

https://locationtextures.com/panoramas/maxroz.com

https://www.maxroz.com/hdri/listHDRI Haven

https://hdrihaven.com/Poly Haven

https://polyhaven.com/hdrisDomeble

https://www.domeble.com/IHDRI

https://www.ihdri.com/HDRMaps

https://hdrmaps.com/NoEmotionHdrs.net

http://noemotionhdrs.net/hdrday.htmlOpenFootage.net

https://www.openfootage.net/hdri-panorama/HDRI-hub

https://www.hdri-hub.com/hdrishop/hdri.zwischendrin

https://www.zwischendrin.com/en/browse/hdriLonger list here:

https://cgtricks.com/list-sites-free-hdri/

-

HDRI Median Cut plugin

www.hdrlabs.com/picturenaut/plugins.html

Note. The Median Cut algorithm is typically used for color quantization, which involves reducing the number of colors in an image while preserving its visual quality. It doesn’t directly provide a way to identify the brightest areas in an image. However, if you’re interested in identifying the brightest areas, you might want to look into other methods like thresholding, histogram analysis, or edge detection, through openCV for example.

Here is an openCV example:

# bottom left coordinates = 0,0 import numpy as np import cv2 # Load the HDR or EXR image image = cv2.imread('your_image_path.exr', cv2.IMREAD_UNCHANGED) # Load as-is without modification # Calculate the luminance from the HDR channels (assuming RGB format) luminance = np.dot(image[..., :3], [0.299, 0.587, 0.114]) # Set a threshold value based on estimated EV threshold_value = 2.4 # Estimated threshold value based on 4.8 EV # Apply the threshold to identify bright areas # Theluminancearray contains the calculated luminance values for each pixel in the image. # Thethreshold_valueis a user-defined value that represents a cutoff point, separating "bright" and "dark" areas in terms of perceived luminance.thresholded = (luminance > threshold_value) * 255 # Convert the thresholded image to uint8 for contour detection thresholded = thresholded.astype(np.uint8) # Find contours of the bright areas contours, _ = cv2.findContours(thresholded, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE) # Create a list to store the bounding boxes of bright areas bright_areas = [] # Iterate through contours and extract bounding boxes for contour in contours: x, y, w, h = cv2.boundingRect(contour) # Adjust y-coordinate based on bottom-left origin y_bottom_left_origin = image.shape[0] - (y + h) bright_areas.append((x, y_bottom_left_origin, x + w, y_bottom_left_origin + h)) # Store as (x1, y1, x2, y2) # Print the identified bright areas print("Bright Areas (x1, y1, x2, y2):") for area in bright_areas: print(area)More details

Luminance and Exposure in an EXR Image:

- An EXR (Extended Dynamic Range) image format is often used to store high dynamic range (HDR) images that contain a wide range of luminance values, capturing both dark and bright areas.

- Luminance refers to the perceived brightness of a pixel in an image. In an RGB image, luminance is often calculated using a weighted sum of the red, green, and blue channels, where different weights are assigned to each channel to account for human perception.

- In an EXR image, the pixel values can represent radiometrically accurate scene values, including actual radiance or irradiance levels. These values are directly related to the amount of light emitted or reflected by objects in the scene.

The luminance line is calculating the luminance of each pixel in the image using a weighted sum of the red, green, and blue channels. The three float values [0.299, 0.587, 0.114] are the weights used to perform this calculation.

These weights are based on the concept of luminosity, which aims to approximate the perceived brightness of a color by taking into account the human eye’s sensitivity to different colors. The values are often derived from the NTSC (National Television System Committee) standard, which is used in various color image processing operations.

Here’s the breakdown of the float values:

- 0.299: Weight for the red channel.

- 0.587: Weight for the green channel.

- 0.114: Weight for the blue channel.

The weighted sum of these channels helps create a grayscale image where the pixel values represent the perceived brightness. This technique is often used when converting a color image to grayscale or when calculating luminance for certain operations, as it takes into account the human eye’s sensitivity to different colors.

For the threshold, remember that the exact relationship between EV values and pixel values can depend on the tone-mapping or normalization applied to the HDR image, as well as the dynamic range of the image itself.

To establish a relationship between exposure and the threshold value, you can consider the relationship between linear and logarithmic scales:

- Linear and Logarithmic Scales:

- Exposure values in an EXR image are often represented in logarithmic scales, such as EV (exposure value). Each increment in EV represents a doubling or halving of the amount of light captured.

- Threshold values for luminance thresholding are usually linear, representing an actual luminance level.

- Conversion Between Scales:

- To establish a mathematical relationship, you need to convert between the logarithmic exposure scale and the linear threshold scale.

- One common method is to use a power function. For instance, you can use a power function to convert EV to a linear intensity value.

threshold_value = base_value * (2 ** EV)Here,

EVis the exposure value,base_valueis a scaling factor that determines the relationship between EV and threshold_value, and2 ** EVis used to convert the logarithmic EV to a linear intensity value. - Choosing the Base Value:

- The

base_valuefactor should be determined based on the dynamic range of your EXR image and the specific luminance values you are dealing with. - You may need to experiment with different values of

base_valueto achieve the desired separation of bright areas from the rest of the image.

- The

Let’s say you have an EXR image with a dynamic range of 12 EV, which is a common range for many high dynamic range images. In this case, you want to set a threshold value that corresponds to a certain number of EV above the middle gray level (which is often considered to be around 0.18).

Here’s an example of how you might determine a

base_valueto achieve this:# Define the dynamic range of the image in EV dynamic_range = 12 # Choose the desired number of EV above middle gray for thresholding desired_ev_above_middle_gray = 2 # Calculate the threshold value based on the desired EV above middle gray threshold_value = 0.18 * (2 ** (desired_ev_above_middle_gray / dynamic_range)) print("Threshold Value:", threshold_value) -

-

Capturing the world in HDR for real time projects – Call of Duty: Advanced Warfare

Real-World Measurements for Call of Duty: Advanced Warfare

www.activision.com/cdn/research/Real_World_Measurements_for_Call_of_Duty_Advanced_Warfare.pdf

Local version

Real_World_Measurements_for_Call_of_Duty_Advanced_Warfare.pdf

-

domeble – Hi-Resolution CGI Backplates and 360° HDRI

When collecting hdri make sure the data supports basic metadata, such as:

- Iso

- Aperture

- Exposure time or shutter time

- Color temperature

- Color space Exposure value (what the sensor receives of the sun intensity in lux)

- 7+ brackets (with 5 or 6 being the perceived balanced exposure)

In image processing, computer graphics, and photography, high dynamic range imaging (HDRI or just HDR) is a set of techniques that allow a greater dynamic range of luminances (a Photometry measure of the luminous intensity per unit area of light travelling in a given direction. It describes the amount of light that passes through or is emitted from a particular area, and falls within a given solid angle) between the lightest and darkest areas of an image than standard digital imaging techniques or photographic methods. This wider dynamic range allows HDR images to represent more accurately the wide range of intensity levels found in real scenes ranging from direct sunlight to faint starlight and to the deepest shadows.

The two main sources of HDR imagery are computer renderings and merging of multiple photographs, which in turn are known as low dynamic range (LDR) or standard dynamic range (SDR) images. Tone Mapping (Look-up) techniques, which reduce overall contrast to facilitate display of HDR images on devices with lower dynamic range, can be applied to produce images with preserved or exaggerated local contrast for artistic effect. Photography

In photography, dynamic range is measured in Exposure Values (in photography, exposure value denotes all combinations of camera shutter speed and relative aperture that give the same exposure. The concept was developed in Germany in the 1950s) differences or stops, between the brightest and darkest parts of the image that show detail. An increase of one EV or one stop is a doubling of the amount of light.

The human response to brightness is well approximated by a Steven’s power law, which over a reasonable range is close to logarithmic, as described by the Weber�Fechner law, which is one reason that logarithmic measures of light intensity are often used as well.

HDR is short for High Dynamic Range. It’s a term used to describe an image which contains a greater exposure range than the “black” to “white” that 8 or 16-bit integer formats (JPEG, TIFF, PNG) can describe. Whereas these Low Dynamic Range images (LDR) can hold perhaps 8 to 10 f-stops of image information, HDR images can describe beyond 30 stops and stored in 32 bit images.

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

Photography basics: Shutter angle and shutter speed and motion blur

-

The Perils of Technical Debt – Understanding Its Impact on Security, Usability, and Stability

-

Glossary of Lighting Terms – cheat sheet

-

Mastering The Art Of Photography – PixelSham.com Photography Basics

-

Convert 2D Images or Text to 3D Models

-

How do LLMs like ChatGPT (Generative Pre-Trained Transformer) work? Explained by Deep-Fake Ryan Gosling

-

ComfyUI FLOAT – A container for FLOAT Generative Motion Latent Flow Matching for Audio-driven Talking Portrait – lip sync

-

Scene Referred vs Display Referred color workflows

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.