-

CupixVista – reconstruct environments from off-the-shelf 360 cameras

CupixVista is a new AI that can convert 360° video footage into a 3D map and virtual tour.

-

Blockade Labs – Sketch-a-skybox free 360° image generator

Skybox AI is a free 360° image generator. Use the power of AI to imagine stunning worlds in seconds and fine tune them for use in immersive VR, XR, or games.

https://skybox.blockadelabs.com/

-

RICOH THETA Z1 51GB camera – 360° images in RAW format

https://theta360.com/en/about/theta/z1.html

- 23MP (6720 x 3360, 7K)

- superior noise reduction performance

- F2.1, F3.5 and F5.6

- 4K videos (3840 x 1920, 29.97fps)

- RAW (DNG) image format

- 360° live streaming in 4K

- record sound from 4 different directions when shooting video

- editing of 360° images in Adobe Photoshop Lightroom Classic CC

- Android™ base system for the OS. Use plug-ins to customize your own THETA.

- Wireless 2.4 GHz: 1 to 11ch or 1 to 13ch

- Wireless 5 GHz: W52 (36 to 48ch, channel bandwidth 20/40/80 MHz supported)

Theta Z1 is Ricoh’s flagship 360 camera that features 1-inch sensors, which are the largest available for dual lens 360 cameras. It has been a highly regarded camera among 360 photographers because of its excellent image quality, color accuracy, and its ability to shoot Raw DNG photos with exceptional exposure latitude.

Bracketing mode 2022

Requirement: Basic app iOS ver.2.20.0, Android ver.2.5.0, Camera firmware ver.2.10.3

https://community.theta360.guide/t/new-feature-ae-bracket-added-in-the-shooting-mode-z1-only/8247

HDRi for VFX

https://community.theta360.guide/t/create-high-quality-hdri-for-vfx-using-ricoh-theta-z1/4789/4

ND filtering

https://community.theta360.guide/t/neutral-density-solution-for-most-theta-cameras/7331

https://community.theta360.guide/t/long-exposure-nd-filter-for-ricoh-theta/1100

-

NVIDIA GauGAN360 – AI driven latlong HDRI creation tool

https://blogs.nvidia.com/blog/2022/08/09/neural-graphics-sdk-metaverse-content/

Unfortunately, it only outputs PNG at the moment:

http://imaginaire.cc/gaugan360/

-

360-degree panorama of Mars from NASA's Perseverance rover

https://www.indiatimes.com/technology/news/360-degree-panorama-of-mars-nasa-perseverance-rover-535052.html

-

domeble – Hi-Resolution CGI Backplates and 360° HDRI

When collecting HDRI, make sure the data supports basic metadata, such as:

- ISO

- Aperture

- Exposure time or shutter time

- Color temperature

- Color space

- Exposure value (what the sensor receives of the sun intensity in lux)

- 7+ brackets (with 5 or 6 being the perceived balanced exposure)

In image processing, computer graphics, and photography, high dynamic range imaging (HDRI or just HDR) is a set of techniques that allow a greater dynamic range of luminance between the lightest and darkest areas of an image than standard digital imaging techniques or photographic methods. Luminance is a photometric measure of the luminous intensity per unit area of light travelling in a given direction; it describes the amount of light that passes through or is emitted from a particular area and falls within a given solid angle. This wider dynamic range allows HDR images to represent more accurately the wide range of intensity levels found in real scenes, ranging from direct sunlight to faint starlight and the deepest shadows.

The two main sources of HDR imagery are computer renderings and merging of multiple photographs, which in turn are known as low dynamic range (LDR) or standard dynamic range (SDR) images. Tone Mapping (Look-up) techniques, which reduce overall contrast to facilitate display of HDR images on devices with lower dynamic range, can be applied to produce images with preserved or exaggerated local contrast for artistic effect.

Photography

In photography, dynamic range is measured in exposure value (EV) differences, or stops, between the brightest and darkest parts of the image that show detail. Exposure value denotes all combinations of camera shutter speed and relative aperture that give the same exposure; the concept was developed in Germany in the 1950s. An increase of one EV or one stop is a doubling of the amount of light.
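The stop arithmetic above can be sketched in a few lines. This is only an illustration: it assumes the common definition EV = log2(N² / t) for f-number N and shutter time t in seconds, and the function names are hypothetical.

```python
import math


def exposure_value(f_number: float, shutter_s: float) -> float:
    """EV = log2(N^2 / t), with N the f-number and t the shutter time in seconds."""
    return math.log2(f_number ** 2 / shutter_s)


def stops_between(brightest: float, darkest: float) -> float:
    """Dynamic range in stops: every stop is a doubling of the amount of light."""
    return math.log2(brightest / darkest)


# f/1 at 1 second is EV 0 under the assumed definition.
print(exposure_value(1.0, 1.0))
# A scene whose brightest detail is 1024 times its darkest detail spans 10 stops.
print(stops_between(1024.0, 1.0))
```

Halving the shutter time at a fixed aperture raises the result of `exposure_value` by one, which is the "one stop" step used when spacing exposure brackets.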

The human response to brightness is well approximated by Stevens' power law, which over a reasonable range is close to logarithmic, as described by the Weber-Fechner law. This is one reason that logarithmic measures of light intensity are often used as well.

HDR is short for High Dynamic Range. It’s a term used to describe an image which contains a greater exposure range than the “black” to “white” that 8 or 16-bit integer formats (JPEG, TIFF, PNG) can describe. Whereas these Low Dynamic Range (LDR) images can hold perhaps 8 to 10 f-stops of image information, HDR images can describe beyond 30 stops and are stored in 32-bit images.

-

Equirectangular 360 videos/photos to Unity3D to VR

SUMMARY

- A lot of 360 technology is natively supported in Unity3D. Examples here: https://assetstore.unity.com/packages/essentials/tutorial-projects/vr-samples-51519

- Use the Google Cardboard VR API to export for Android or iOS. https://developers.google.com/vr/?hl=en https://developers.google.com/vr/develop/unity/get-started-ios

- Images and videos are for the most part equirectangular 2:1 360 captures, mapped onto a skybox (stills) or an inverted sphere (videos). Panoramas are also supported.

- Stereo is achieved in different formats, but mostly with a 2:1 over-under layout.

- Videos can be streamed from a server.

- You can export 360 mono/stereo stills/videos from Unity3D with VR Panorama.

- 4K is probably the best average resolution size for mobiles.

- Interaction can be driven through the Google API gaze scripts/plugins or through Google Cloud Speech Recognition (paid service, https://assetstore.unity.com/packages/add-ons/machinelearning/google-cloud-speech-recognition-vr-ar-desktop-desktop-72625 )
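The equirectangular 2:1 layout mentioned in the summary above maps longitude linearly to the horizontal axis and latitude linearly to the vertical axis. A minimal sketch of that mapping follows. The conventions are assumptions for illustration (longitude 0 at the image centre, latitude +90 on the top row, pixel origin at the top-left), and the function name is hypothetical.

```python
def direction_to_pixel(lon_deg: float, lat_deg: float, width: int, height: int):
    """Map a viewing direction to pixel coordinates in a 2:1 equirectangular image.

    Assumed convention: lon_deg in [-180, 180] with 0 at the image centre,
    lat_deg in [-90, 90] with +90 on the top row, pixel origin at top-left.
    """
    u = (lon_deg + 180.0) / 360.0   # 0..1 across the full 360 degrees
    v = (90.0 - lat_deg) / 180.0    # 0..1 from zenith down to nadir
    # Clamp so the far edges (u == 1 or v == 1) stay inside the image.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return x, y


# Looking straight ahead at the horizon lands in the middle of a 4K frame.
print(direction_to_pixel(0.0, 0.0, 3840, 1920))
```

A skybox or inverted-sphere shader performs the inverse lookup per fragment: it turns the view direction into longitude and latitude and samples the texture at the matching position.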

DETAILS

- Google VR game to iOS in 15 minutes

- Step by Step Google VR and responding to events with Unity3D 2017.x

https://boostlog.io/@mohammedalsayedomar/create-cardboard-apps-in-unity-5ac8f81e47018500491f38c8

https://www.sitepoint.com/building-a-google-cardboard-vr-app-in-unity/

- Gaze interaction examples

https://assetstore.unity.com/packages/tools/gui/gaze-ui-for-canvas-70881

https://s3.amazonaws.com/xrcommunity/tutorials/vrgazecontrol/VRGazeControl.unitypackage

https://assetstore.unity.com/packages/tools/gui/cardboard-vr-touchless-menu-trigger-58897

- Basic details about equirectangular 2:1 360 images and videos.

- Skybox cubemap texturing, shading and camera component for stills.

- Video player component on a sphere with a flipped-normals shader.

- Note that you can also use a pre-modeled sphere with inverted normals.

- Note that for audio you will need an audio component on the sphere model.

- Set up full 360 stereoscopic video playback using an over-under layout split onto two cameras.

- Note that you cannot generate a stereoscopic image from two 360 captures; it has to be done through a dedicated consumer rig.

http://bernieroehl.com/360stereoinunity/
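For the over-under layout described above, each eye's camera samples one half of the video frame. The sketch below only computes the two sub-rectangles. It assumes the left eye is stored in the top half (some encoders swap this, hence the flag) and a pixel origin at the top-left; the function name is hypothetical.

```python
def over_under_rects(width: int, height: int, left_eye_on_top: bool = True):
    """Return per-eye rectangles (x, y, w, h) for an over-under stereo frame.

    Assumption: pixel origin at top-left. Which eye sits on top varies between
    sources, so it is exposed as a parameter rather than hard-coded.
    """
    half = height // 2
    top = (0, 0, width, half)
    bottom = (0, half, width, height - half)
    if left_eye_on_top:
        return {"left": top, "right": bottom}
    return {"left": bottom, "right": top}


# Two stacked 2:1 equirectangular images in one square frame.
print(over_under_rects(3840, 3840))
```

In an engine, these rectangles would typically become texture tiling and offset values on the material seen by each eye's camera.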

VR Actions for Playmaker

https://assetstore.unity.com/packages/tools/vr-actions-for-playmaker-52109

100 Best Unity3d VR Assets

http://meta-guide.com/embodiment/100-best-unity3d-vr-assets

…find more tutorials/reference under this blog page

-

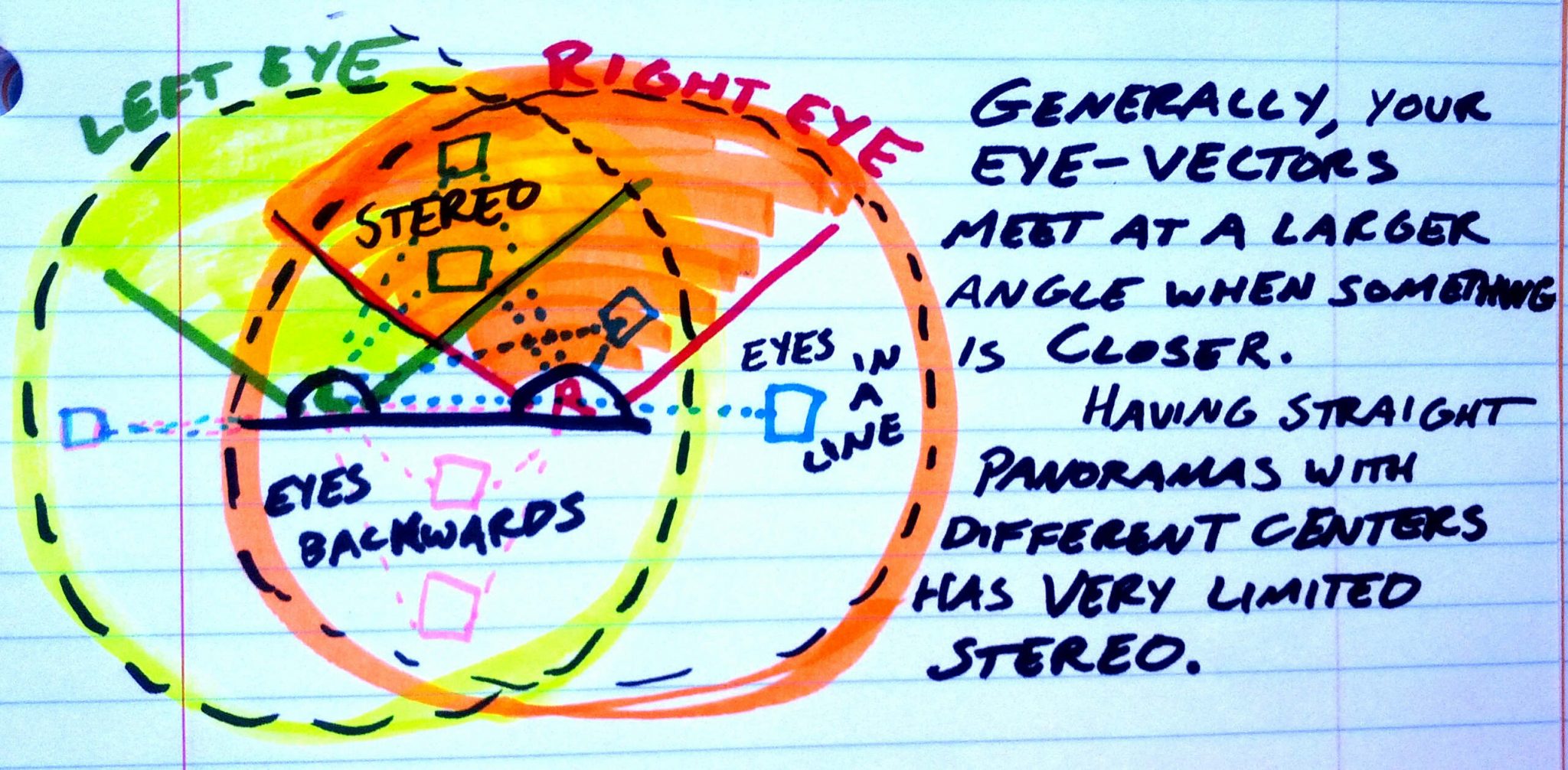

Why you cannot generate a stereoscopic image from just two 360 captures

elevr.com/elevrant-panoramic-twist/

Today we discuss panoramic 3d video capture and how understanding its geometry leads to some new potential focus techniques.

With ordinary 2-camera stereoscopy, like you see at a 3d movie, each camera captures its own partial panorama of video, so the two partial circles of video are part of two side-by-side panoramas, each centering on a different point (where the cameras are).

This is great if you want to stare straight ahead from a fixed position. The eyes can measure the depth of any object in the middle of this Venn diagram of overlap. I think of the line of sight as being vectors shooting out of your eyeballs, and when those vectors hit an object from different angles, you get 3d information. When something’s closer, the vectors hit at a wider angle, and when an object is really far away, the vectors approach being parallel.

But even if both these cameras captured spherically, you’d have problems once you turn your head. Your ability to measure depth lessens and lessens, with generally smaller vector angles, until when you’re staring directly to the right they overlap entirely, zero angle no matter how close or far something is. And when you turn to face behind you, the panoramas are backwards, in a way that makes it impossible to focus your eyes on anything.

So a setup with two separate 360 panoramas captured an eye-width apart is no good for actual panoramas.
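The geometry behind that conclusion can be checked numerically. The sketch below places two panorama centres an eye-width apart and computes the signed angle between the two lines of sight to a point, for several head directions. The 0.064 m baseline and the coordinate convention are assumptions for illustration, and the function name is hypothetical.

```python
import math


def signed_parallax(view_deg: float, distance_m: float, baseline_m: float = 0.064) -> float:
    """Signed angle (radians) between the left and right rays to one point.

    The cameras sit at (-b/2, 0) and (+b/2, 0). A view angle of 0 degrees is
    straight ahead (+y) and grows towards the right. The distance is measured
    from the midpoint between the cameras.
    """
    theta = math.radians(view_deg)
    px = distance_m * math.sin(theta)
    py = distance_m * math.cos(theta)
    half = baseline_m / 2.0
    bearing_left = math.atan2(px + half, py)    # bearing seen from the left camera
    bearing_right = math.atan2(px - half, py)   # bearing seen from the right camera
    diff = bearing_left - bearing_right
    # Wrap into [-pi, pi) so that facing backwards yields a negative angle.
    return (diff + math.pi) % (2.0 * math.pi) - math.pi


for angle in (0, 45, 90, 135, 180):
    print(angle, math.degrees(signed_parallax(angle, 2.0)))
```

The angle is largest straight ahead, shrinks to essentially zero when looking directly to the right, and changes sign when facing backwards, which corresponds to the left and right views being swapped.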

But you can stitch together a panorama using pairs of cameras an eye-width apart, where the center of the panorama is not on any one camera but at the center of a ball of cameras. Depending on the field of view that gets captured and how it’s stitched together, a four-cameras-per-eye setup might produce something with more or less twist, and more or less twist-reduction between cameras. Ideally, you’d have a many camera setup that lets you get a fully symmetric twist around each panorama. Or, for a circle of lots of cameras facing directly outward, you could crop the footage for each camera: stitch together the right parts of each camera’s capture for the left eye, and the left parts of each camera’s capture for the right eye.

-

Photogrammetry from 360 cameras

Agisoft PhotoScan is one of the most common tools used, but you will need the professional version to work with panos.

These do not support 360 cameras:

– Autodesk Recap

– Reality Capture

– MeshLab

medium.com/@smitty/spherical-and-panoramic-photogrammetry-resources-2edbaeac13ac

www.nctechimaging.com//downloads-files/PhotoScan_Application_Note_v1.1.pdf

360rumors.com/2017/11/software-institut-pascal-converts-360-video-3d-model-vr.html

WalkAboutWorlds

https://sketchfab.com/models/9bc44ba457104b57943c29a79e4103bd

-

Spatial Media Metadata Injector – for 360 videos

The Spatial Media Metadata Injector adds metadata to a video file indicating that the file contains 360 video. Use the metadata injector to prepare 360 videos for upload to YouTube.

github.com/google/spatial-media/releases/tag/v2.1

The Windows release requires a 64-bit version of Windows. If you’re using a 32-bit version of Windows, you can still run the metadata injector from the Python source code as follows:

- Install Python.

- Download and extract the metadata injector source code.

- From the “spatialmedia” directory in Windows Explorer, double click on “gui”. Alternatively, from the command prompt, change to the “spatialmedia” directory, and run “python gui.py”.
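Besides the GUI, the source checkout can also be driven from the command line. The sketch below only builds the argument list. It assumes the options documented in the project's README (`-i` to inject and `--stereo` with `none`, `top-bottom` or `left-right`), which should be checked against the release you download. The helper name and file names are hypothetical.

```python
import sys


def injector_command(src: str, dst: str, stereo: str = "none", python_exe: str = sys.executable):
    """Build the argument list for the injector's command-line mode.

    Assumption: the command runs from the directory that contains the
    "spatialmedia" package, with the flags described in the project's README.
    """
    if stereo not in ("none", "top-bottom", "left-right"):
        raise ValueError(f"unsupported stereo mode: {stereo}")
    if src == dst:
        raise ValueError("input and output must be different files")
    return [python_exe, "spatialmedia", "-i", f"--stereo={stereo}", src, dst]


# Hypothetical file names, for illustration only.
print(injector_command("tour.mp4", "tour_injected.mp4", stereo="top-bottom"))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` once the source code has been extracted.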

360.Video.Metadata.Tool.mac.zip

360.Video.Metadata.Tool.win.zip
