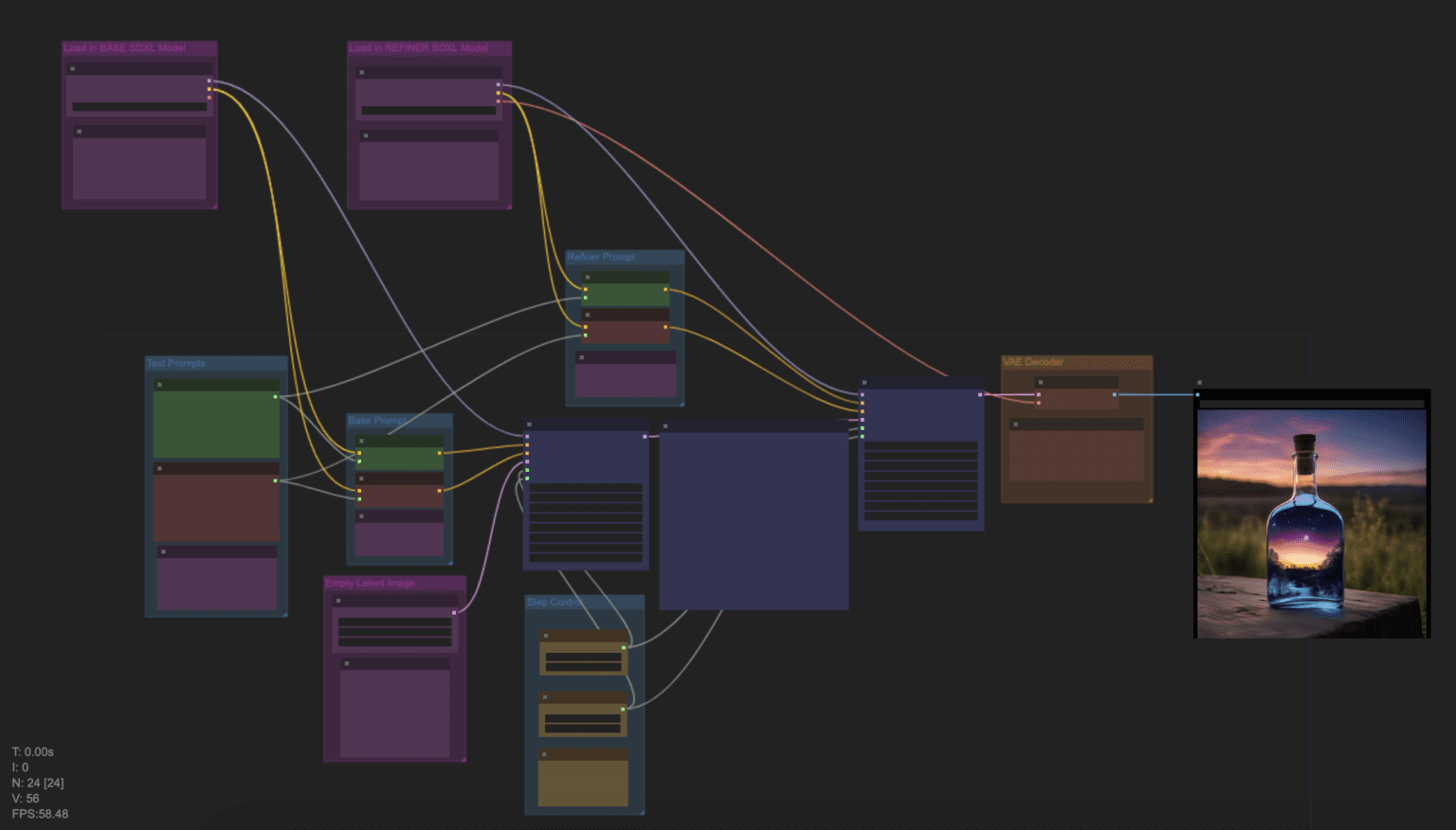

ComfyUI Tutorial Series Ep 25 – LTX Video – Fast AI Video Generator Model

https://comfyanonymous.github.io/ComfyUI_examples/ltxv

LTX-Video 2B v0.9.1 Checkpoint model

https://huggingface.co/Lightricks/LTX-Video/tree/main

-

The AI-Copyright Trap document by Carys Craig

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4905118

“There are many good reasons to be concerned about the rise of generative AI(…). Unfortunately, there are also many good reasons to be concerned about copyright’s growing prevalence in the policy discourse around AI’s regulation. Insisting that copyright protects an exclusive right to use materials for text and data mining practices (whether for informational analysis or machine learning to train generative AI models) is likely to do more harm than good. As many others have explained, imposing copyright constraints will certainly limit competition in the AI industry, creating cost-prohibitive barriers to quality data and ensuring that only the most powerful players have the means to build the best AI tools (provoking all of the usual monopoly concerns that accompany this kind of market reality but arguably on a greater scale than ever before). It will not, however, prevent the continued development and widespread use of generative AI.”

…

“(…) As Michal Shur-Ofry has explained, the technical traits of generative AI already mean that its outputs will tend towards the dominant, likely reflecting ‘a relatively narrow, mainstream view, prioritizing the popular and conventional over diverse contents and narratives.’ Perhaps, then, if the political goal is to push for equality, participation, and representation in the AI age, critics’ demands should focus not on exclusivity but inclusivity. If we want to encourage the development of ethical and responsible AI, maybe we should be asking what kind of material and training data must be included in the inputs and outputs of AI to advance that goal. Certainly, relying on copyright and the market to dictate what is in and what is out is unlikely to advance a public interest or equality-oriented agenda.”

…

“If copyright is not the solution, however, it might reasonably be asked: what is? The first step to answering that question, to producing a purposively sound prescription and evidence-based prognosis, is to correctly diagnose the problem. If, as I have argued, the problem is not that AI models are being trained on copyright works without their owners’ consent, then requiring copyright owners’ consent and/or compensation for the use of their work in AI-training datasets is not the appropriate solution. (…) If the only real copyright problem is that the outputs of generative AI may be substantially similar to specific human-authored and copyright-protected works, then copyright law as we know it already provides the solution.”

-

Newton’s Cradle – An AI Film By Jeff Synthesized

Narrative voice via Artlistai, news reporter voice via PlayAI; all other voices are V2V (voice-to-voice) in ElevenLabs.

Powered by (in order of amount) ‘HailuoAI’, ‘KlingAI’ and of course some of our special sauce. Performance capture by ‘Runway’s Act-One’.

Edited and color graded in ‘DaVinci Resolve’. Composited with ‘After Effects’.

In this film, the ‘Newton’s Cradle’ isn’t just a symbolic object; it represents the fragile balance between control and freedom in a world where time itself is being manipulated. The oscillation of the cradle reflects the constant push and pull of power in this dystopian society. By the end of the film, we discover that this seemingly innocuous object holds the potential to disrupt the system, offering a glimmer of hope that time can be reset and balance restored.

-

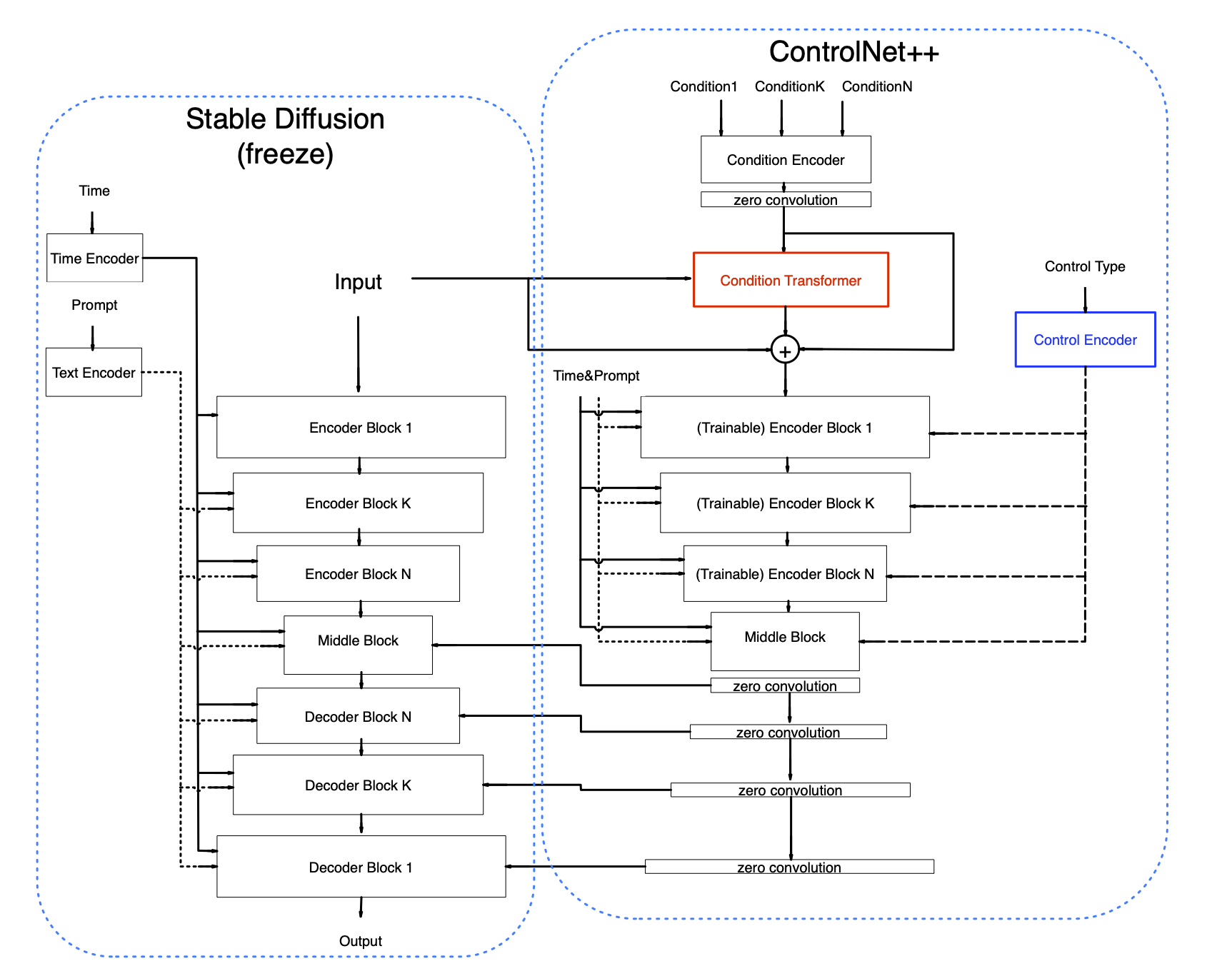

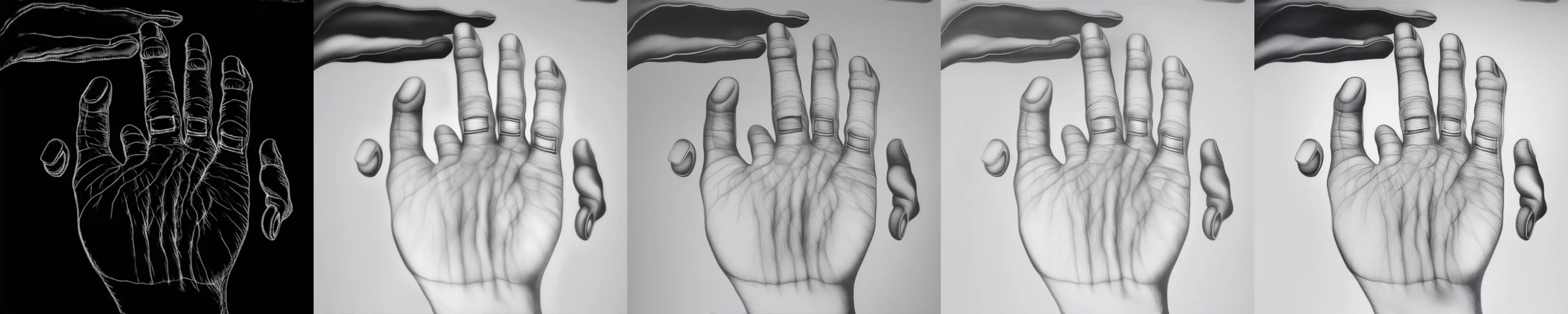

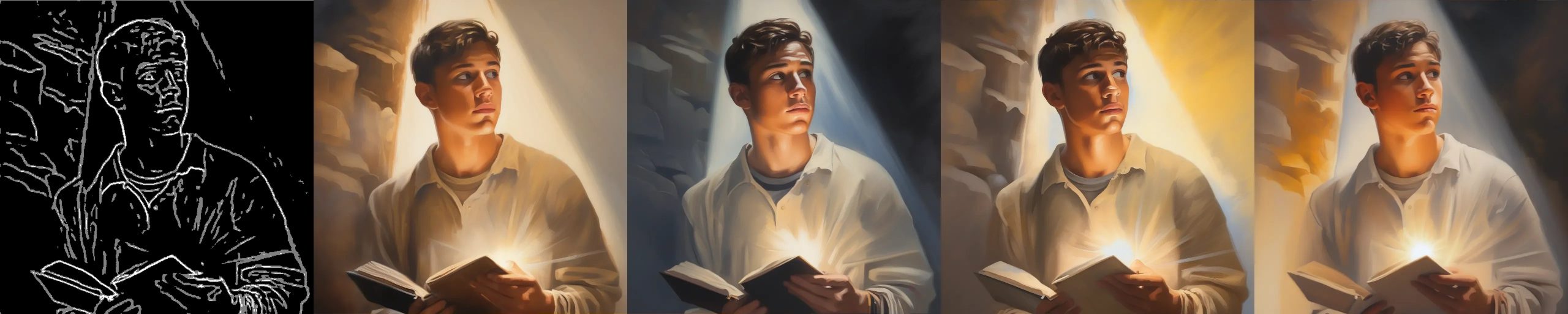

xinsir – controlnet-union-sdxl-1.0 examples

https://huggingface.co/xinsir/controlnet-union-sdxl-1.0

deblur

inpainting

outpainting

upscale

openpose

depthmap

canny

lineart

anime lineart

mlsd

scribble

hed

softedge

teed

segmentation

normals

openpose + canny

-

What is deepfake GAN (Generative Adversarial Network) technology?

https://www.techtarget.com/whatis/definition/deepfake

Deepfake technology is a type of artificial intelligence used to create convincing fake images, videos and audio recordings. The term describes both the technology and the resulting bogus content and is a portmanteau of deep learning and fake.

Deepfakes often transform existing source content where one person is swapped for another. They also create entirely original content where someone is represented doing or saying something they didn’t do or say.

Deepfakes aren’t edited or photoshopped videos or images. In fact, they’re created using specialized algorithms that blend existing and new footage. For example, subtle facial features of people in images are analyzed through machine learning (ML) to manipulate them within the context of other videos.

Deepfakes use two algorithms, a generator and a discriminator, to create and refine fake content. The generator creates the initial fake digital content based on the desired output, while the discriminator analyzes how realistic or fake that initial version is. This process is repeated, enabling the generator to improve at creating realistic content and the discriminator to become more skilled at spotting flaws for the generator to correct.

The combination of the generator and discriminator algorithms creates a generative adversarial network.
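The generator/discriminator loop described above can be sketched on toy data. This is only an illustrative sketch, not how any deepfake tool is actually implemented: it assumes one-dimensional "real" samples drawn from a Gaussian, a linear generator, a logistic discriminator, and hand-written gradients. All names (`train_toy_gan`, `real_mean`, and so on) are hypothetical, and training like this often oscillates rather than settling.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps=500, batch=16, lr=0.05,
                  real_mean=4.0, real_std=0.5, seed=0):
    """Alternate discriminator and generator updates on 1-D data.

    Generator:      G(z) = a * z + b, with z ~ N(0, 1)
    Discriminator:  D(x) = sigmoid(w * x + c), the estimated P(x is real)
    The data distribution and both model shapes are assumptions for this toy.
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.0, 0.0  # discriminator parameters

    for _ in range(steps):
        reals = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fakes = [a * z + b for z in noise]

        # Discriminator step: gradient ascent on log D(real) + log(1 - D(fake)).
        grad_w = grad_c = 0.0
        for xr, xf in zip(reals, fakes):
            d_real = sigmoid(w * xr + c)
            d_fake = sigmoid(w * xf + c)
            grad_w += (1.0 - d_real) * xr - d_fake * xf
            grad_c += (1.0 - d_real) - d_fake
        w += lr * grad_w / batch
        c += lr * grad_c / batch

        # Generator step: gradient ascent on log D(fake), against the updated D.
        grad_a = grad_b = 0.0
        for z in noise:
            d_fake = sigmoid(w * (a * z + b) + c)
            grad_a += (1.0 - d_fake) * w * z
            grad_b += (1.0 - d_fake) * w
        a += lr * grad_a / batch
        b += lr * grad_b / batch

    return {"a": a, "b": b, "w": w, "c": c}


if __name__ == "__main__":
    print(train_toy_gan())
```

The two steps pull in opposite directions: the discriminator is rewarded for telling real from fake, and the generator is rewarded when the discriminator scores its output as real.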

A GAN uses deep learning to recognize patterns in real images and then uses those patterns to create the fakes.

When creating a deepfake photograph, a GAN system views photographs of the target from an array of angles to capture all the details and perspectives.

When creating a deepfake video, the GAN views the video from various angles and analyzes behavior, movement and speech patterns.

This information is then run through the discriminator multiple times to fine-tune the realism of the final image or video.

-

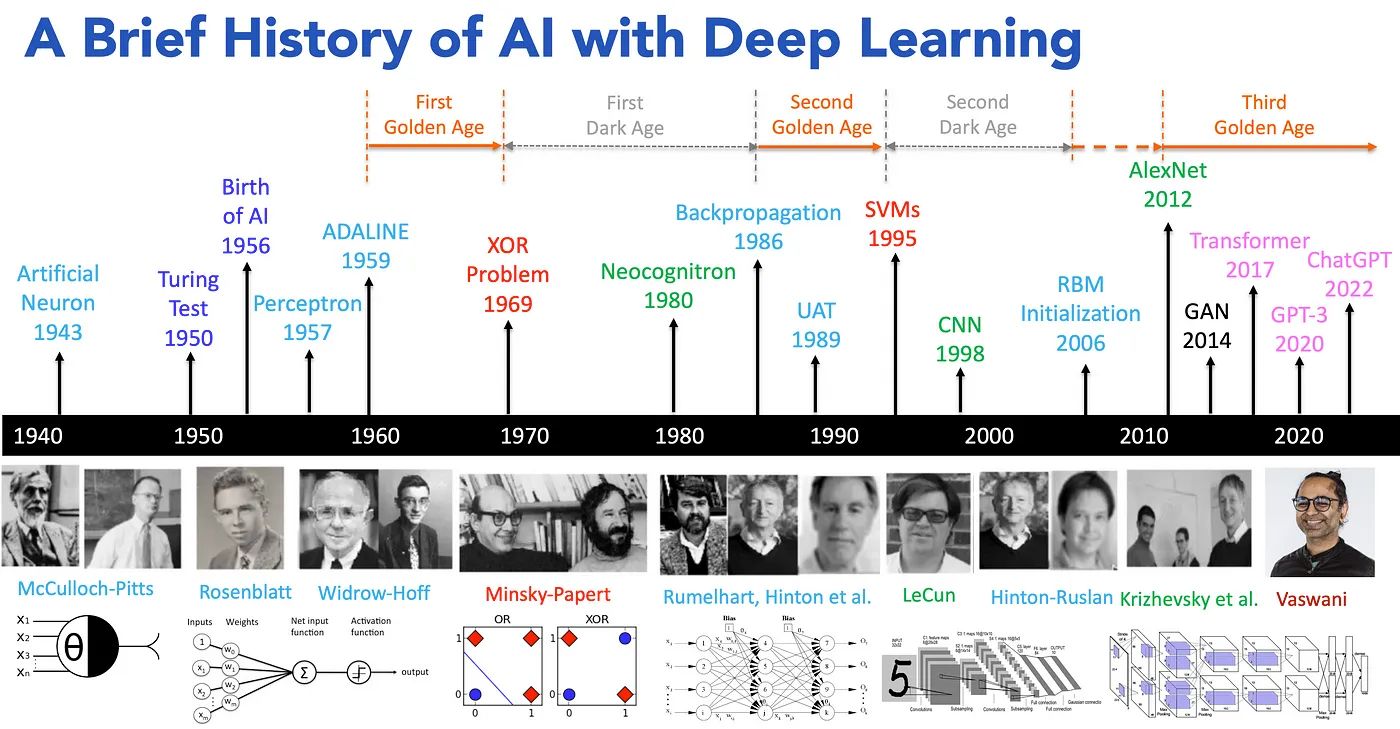

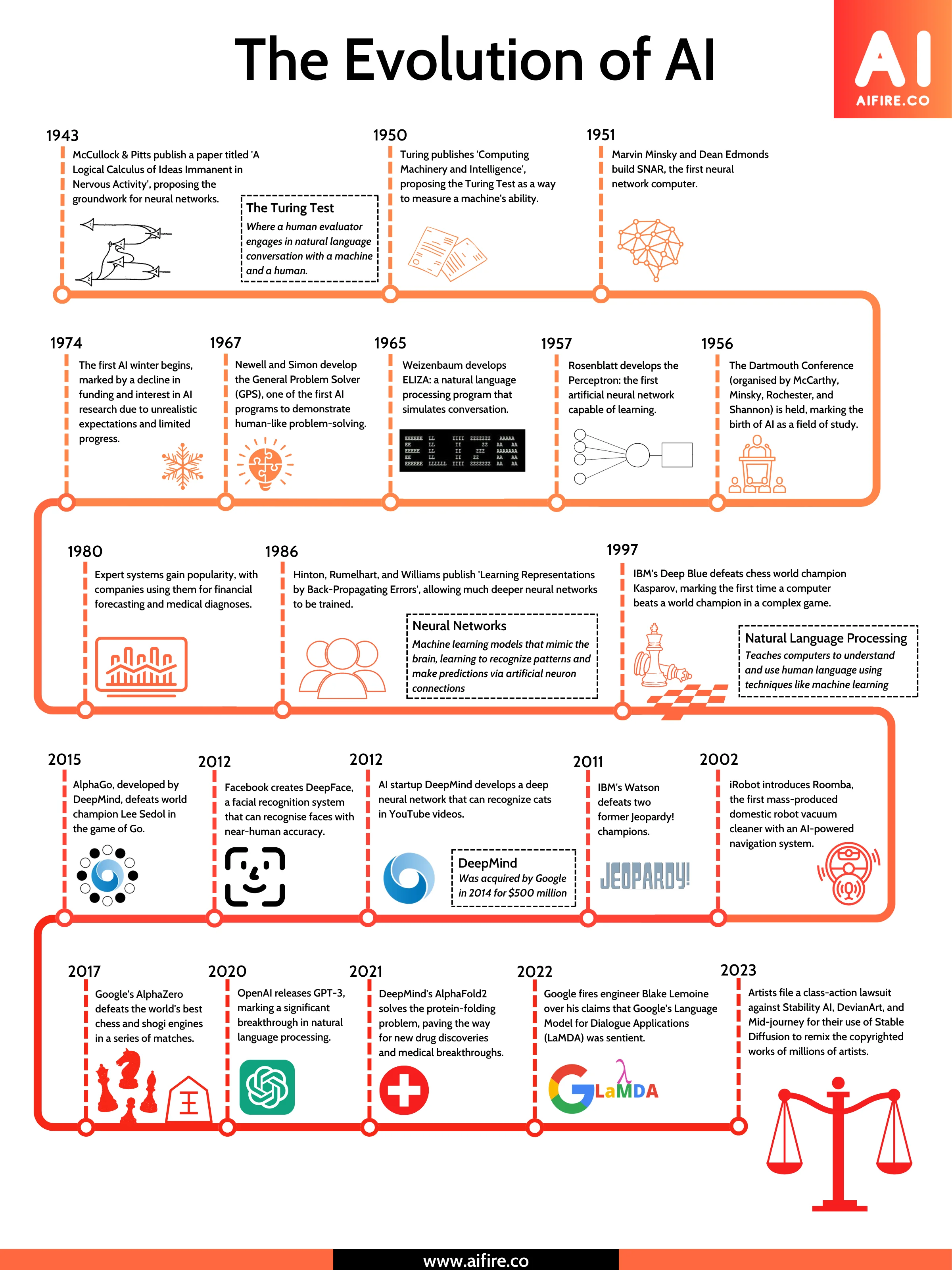

The History, Evolution and Rise of AI

https://medium.com/@lmpo/a-brief-history-of-ai-with-deep-learning-26f7948bc87b

🔹 1943: 𝗠𝗰𝗖𝘂𝗹𝗹𝗼𝗰𝗵 & 𝗣𝗶𝘁𝘁𝘀 create the first artificial neuron.

🔹 1950: 𝗔𝗹𝗮𝗻 𝗧𝘂𝗿𝗶𝗻𝗴 introduces the Turing Test, forever changing the way we view intelligence.

🔹 1956: 𝗝𝗼𝗵𝗻 𝗠𝗰𝗖𝗮𝗿𝘁𝗵𝘆 coins the term “Artificial Intelligence,” marking the official birth of the field.

🔹 1957: 𝗙𝗿𝗮𝗻𝗸 𝗥𝗼𝘀𝗲𝗻𝗯𝗹𝗮𝘁𝘁 invents the Perceptron, one of the first neural networks.

🔹 1959: 𝗕𝗲𝗿𝗻𝗮𝗿𝗱 𝗪𝗶𝗱𝗿𝗼𝘄 and 𝗧𝗲𝗱 𝗛𝗼𝗳𝗳 create ADALINE, a model that would shape neural networks.

🔹 1969: 𝗠𝗶𝗻𝘀𝗸𝘆 & 𝗣𝗮𝗽𝗲𝗿𝘁 show that single-layer perceptrons cannot solve the XOR problem, contributing to the onset of the “first AI winter.”

🔹 1980: 𝗞𝘂𝗻𝗶𝗵𝗶𝗸𝗼 𝗙𝘂𝗸𝘂𝘀𝗵𝗶𝗺𝗮 introduces Neocognitron, laying the groundwork for deep learning.

🔹 1986: 𝗚𝗲𝗼𝗳𝗳𝗿𝗲𝘆 𝗛𝗶𝗻𝘁𝗼𝗻 and 𝗗𝗮𝘃𝗶𝗱 𝗥𝘂𝗺𝗲𝗹𝗵𝗮𝗿𝘁 popularize backpropagation, making neural networks viable again.

🔹 1989: 𝗚𝗲𝗼𝗿𝗴𝗲 𝗖𝘆𝗯𝗲𝗻𝗸𝗼 proves the Universal Approximation Theorem (UAT), showing that a neural network with a single hidden layer can approximate continuous functions on bounded domains.

🔹 1995: 𝗩𝗹𝗮𝗱𝗶𝗺𝗶𝗿 𝗩𝗮𝗽𝗻𝗶𝗸 and 𝗖𝗼𝗿𝗶𝗻𝗻𝗮 𝗖𝗼𝗿𝘁𝗲𝘀 develop Support Vector Machines (SVMs), a breakthrough in machine learning.

🔹 1998: 𝗬𝗮𝗻𝗻 𝗟𝗲𝗖𝘂𝗻 popularizes Convolutional Neural Networks (CNNs), revolutionizing image recognition.

🔹 2006: 𝗚𝗲𝗼𝗳𝗳𝗿𝗲𝘆 𝗛𝗶𝗻𝘁𝗼𝗻 and 𝗥𝘂𝘀𝗹𝗮𝗻 𝗦𝗮𝗹𝗮𝗸𝗵𝘂𝘁𝗱𝗶𝗻𝗼𝘃 introduce deep belief networks, reigniting interest in deep learning.

🔹 2012: 𝗔𝗹𝗲𝘅 𝗞𝗿𝗶𝘇𝗵𝗲𝘃𝘀𝗸𝘆 and 𝗚𝗲𝗼𝗳𝗳𝗿𝗲𝘆 𝗛𝗶𝗻𝘁𝗼𝗻 launch AlexNet, sparking the modern AI revolution in deep learning.

🔹 2014: 𝗜𝗮𝗻 𝗚𝗼𝗼𝗱𝗳𝗲𝗹𝗹𝗼𝘄 introduces Generative Adversarial Networks (GANs), opening new doors for AI creativity.

🔹 2017: 𝗔𝘀𝗵𝗶𝘀𝗵 𝗩𝗮𝘀𝘄𝗮𝗻𝗶 and team introduce Transformers, redefining natural language processing (NLP).

🔹 2020: OpenAI unveils GPT-3, setting a new standard for language models and AI’s capabilities.

🔹 2022: OpenAI releases ChatGPT, democratizing conversational AI and bringing it to the masses.

-

Eddie Yoon – There’s a big misconception about AI creative

You’re being tricked into believing that AI can produce Hollywood-level videos…

We’re far from it.

Yes, we’ve made huge progress.

A video sample like this, created using Kling 1.6, is light-years ahead of what was possible a year ago. But there’s still a significant limitation: visual continuity beyond 5 seconds.

Right now, no AI model can maintain consistency beyond a few seconds. That’s why most AI-generated concepts you’re seeing on your feed rely on 2–5 second cuts – it’s all the tech can handle before things start to fall apart.

This isn’t necessarily a problem for creating movie trailers or spec ads. Trailers, for instance, are designed for quick, attention-grabbing rapid cuts, and AI excels at this style of visual storytelling.

But, making a popular, full-length movie with nothing but 5-second shots? That’s absurd.

There are very few exceptions to this rule in modern cinema (e.g., the Bourne franchise).

To bridge the gap between trailers and full-length cinema, AI creative needs to reach 2 key milestones:

– 5-12 sec average shot length (ASL): typical for slower, non-action scenes in contemporary films – think conversations, emotional moments, or establishing shots

– 30+ sec sequences: Longer, uninterrupted takes are essential for genres that require immersion – drama, romance, thrillers, or any scene that builds tension or atmosphere
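To put the two milestones above in numbers, here is a small sketch of the arithmetic behind average shot length. The 100-minute runtime and the 8.5-second midpoint are assumptions chosen for illustration, and the function names are hypothetical.

```python
import math


def average_shot_length(cut_times, duration):
    """ASL in seconds: running time divided by the number of shots.

    `cut_times` lists the timestamps (in seconds) of the cuts;
    n cuts split the footage into n + 1 shots.
    """
    return duration / (len(cut_times) + 1)


def shots_needed(runtime_minutes, asl_seconds):
    """Shots required to fill a runtime at a given ASL, rounded up."""
    return math.ceil(runtime_minutes * 60 / asl_seconds)


if __name__ == "__main__":
    print(average_shot_length([5, 10, 15], 20))  # 5.0
    print(shots_needed(100, 5))    # 1200 shots at today's 5-second ceiling
    print(shots_needed(100, 8.5))  # 706 shots at the middle of the 5-12 sec range
    print(shots_needed(100, 30))   # 200 shots if every take ran 30 seconds
```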

Mastering longer cuts is crucial.

30-second continuous shots require a higher level of craftsmanship and visual consistency – you need that 20-30 seconds of breathing room to piece together a variety of scenes and create a compelling movie.

So, where does AI creative stand now?

AI is already transforming industries like auto, fashion, and CPG. These brands can use AI today because short, 2–5 second cuts work perfectly in their visual language. Consumers are accustomed to it, and it simply works. This psychological dynamic is unlikely to change anytime soon.

But for AI to produce true cinema (not just flashy concepts) it needs to extend its visual consistency. And every GenAI company is racing to get there.

The timeline?

Next year, expect breakthroughs in AI-generated content holding consistency for 10+ seconds. By then, full-length commercials, shows, and movies (in that order) will start to feel more crafted, immersive, and intentional, not just stitched together.

If you’re following AI’s impact on creativity, this is the development to watch. The companies that solve continuity will redefine what’s possible in film.

-

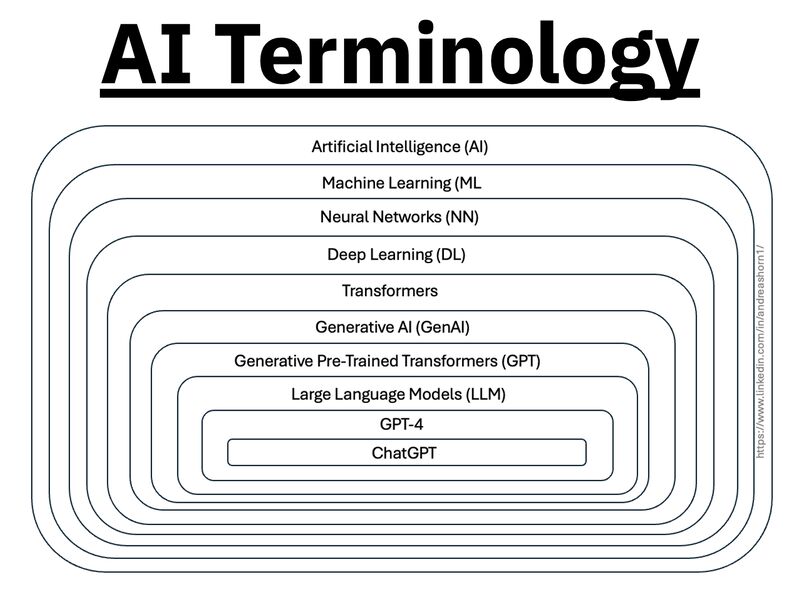

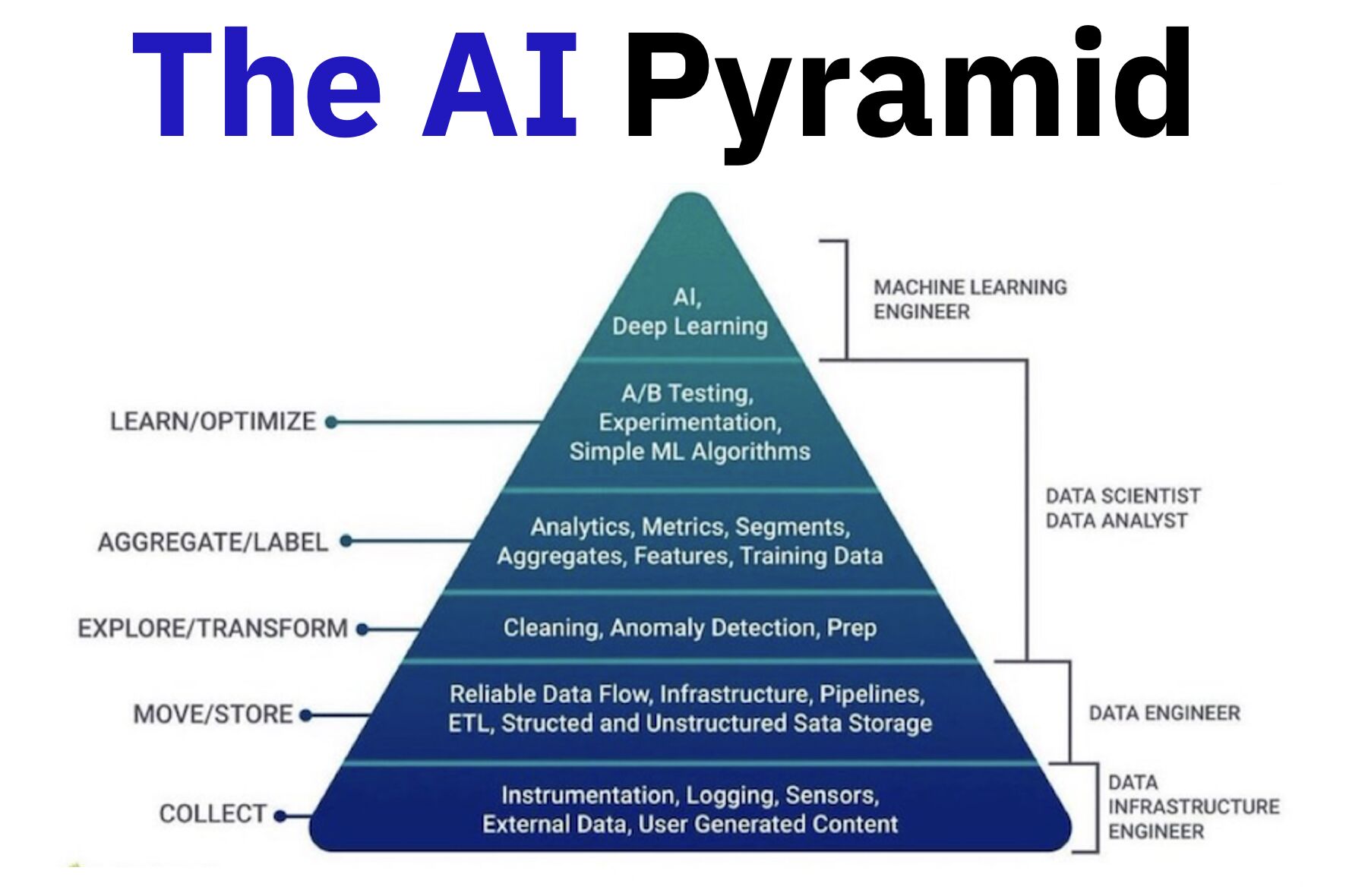

Andreas Horn – Want cutting edge AI?

𝗧𝗵𝗲 𝗯𝘂𝗶𝗹𝗱𝗶𝗻𝗴 𝗯𝗹𝗼𝗰𝗸𝘀 𝗼𝗳 𝗔𝗜 𝗮𝗻𝗱 𝗲𝘀𝘀𝗲𝗻𝘁𝗶𝗮𝗹 𝗽𝗿𝗼𝗰𝗲𝘀𝘀𝗲𝘀:

– Collect: Data from sensors, logs, and user input.

– Move/Store: Build infrastructure, pipelines, and reliable data flow.

– Explore/Transform: Clean, prep, and detect anomalies to make the data usable.

– Aggregate/Label: Add analytics, metrics, and labels to create training data.

– Learn/Optimize: Experiment, test, and train AI models.

𝗧𝗵𝗲 𝗹𝗮𝘆𝗲𝗿𝘀 𝗼𝗳 𝗱𝗮𝘁𝗮 𝗮𝗻𝗱 𝗵𝗼𝘄 𝘁𝗵𝗲𝘆 𝗯𝗲𝗰𝗼𝗺𝗲 𝗶𝗻𝘁𝗲𝗹𝗹𝗶𝗴𝗲𝗻𝘁:

– Instrumentation and logging: Sensors, logs, and external data capture the raw inputs.

– Data flow and storage: Pipelines and infrastructure ensure smooth movement and reliable storage.

– Exploration and transformation: Data is cleaned and prepped, and anomalies are detected.

– Aggregation and labeling: Analytics, metrics, and labels create structured, usable datasets.

– Experimenting/AI/ML: Models are trained and optimized using the prepared data.

– AI insights and actions: Advanced AI generates predictions, insights, and decisions at the top.

𝗪𝗵𝗼 𝗺𝗮𝗸𝗲𝘀 𝗶𝘁 𝗵𝗮𝗽𝗽𝗲𝗻 𝗮𝗻𝗱 𝗸𝗲𝘆 𝗿𝗼𝗹𝗲𝘀:

– Data Infrastructure Engineers: Build the foundation — collect, move, and store data.

– Data Engineers: Prep and transform the data into usable formats.

– Data Analysts & Scientists: Aggregate, label, and generate insights.

– Machine Learning Engineers: Optimize and deploy AI models.

𝗧𝗵𝗲 𝗺𝗮𝗴𝗶𝗰 𝗼𝗳 𝗔𝗜 𝗶𝘀 𝗶𝗻 𝗵𝗼𝘄 𝘁𝗵𝗲𝘀𝗲 𝗹𝗮𝘆𝗲𝗿𝘀 𝗮𝗻𝗱 𝗿𝗼𝗹𝗲𝘀 𝘄𝗼𝗿𝗸 𝘁𝗼𝗴𝗲𝘁𝗵𝗲𝗿. 𝗧𝗵𝗲 𝘀𝘁𝗿𝗼𝗻𝗴𝗲𝗿 𝘆𝗼𝘂𝗿 𝗳𝗼𝘂𝗻𝗱𝗮𝘁𝗶𝗼𝗻, 𝘁𝗵𝗲 𝘀𝗺𝗮𝗿𝘁𝗲𝗿 𝘆𝗼𝘂𝗿 𝗔𝗜.
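The five building blocks at the top of this post (Collect, Move/Store, Explore/Transform, Aggregate/Label, Learn/Optimize) can be sketched as one function per stage. Everything here is a stand-in assumed for illustration: the sensor readings are made up, a plain list plays the role of storage, the outlier rule and the labeling threshold are arbitrary, and the "model" is a single learned threshold.

```python
import statistics


def collect():
    """Collect: hypothetical raw sensor readings; None marks a dropped sample."""
    return [20.1, 19.8, None, 20.4, 95.0, 35.2, 36.1, None, 34.8, 20.0]


def store(raw, warehouse):
    """Move/Store: append readings to a list standing in for real storage."""
    warehouse.extend(raw)
    return warehouse


def transform(rows, max_dev=5.0):
    """Explore/Transform: drop missing values and outliers.

    A reading counts as an outlier when it lies more than `max_dev`
    median absolute deviations from the median (an assumed rule).
    """
    present = [r for r in rows if r is not None]
    med = statistics.median(present)
    mad = statistics.median([abs(r - med) for r in present])
    return [r for r in present if abs(r - med) <= max_dev * mad]


def label(rows, hot_above=30.0):
    """Aggregate/Label: attach a 0/1 label (stand-in for human annotation)."""
    return [(r, 1 if r > hot_above else 0) for r in rows]


def learn(labeled):
    """Learn/Optimize: fit a threshold halfway between the two class means."""
    cold = statistics.mean([r for r, y in labeled if y == 0])
    hot = statistics.mean([r for r, y in labeled if y == 1])
    threshold = (cold + hot) / 2
    return lambda reading: 1 if reading > threshold else 0


if __name__ == "__main__":
    warehouse = store(collect(), [])
    clean = transform(warehouse)
    model = learn(label(clean))
    print(model(22.0), model(33.0))  # prints "0 1"
```

Each stage only consumes what the previous one produced, which is the point of the layering: a gap in collection or cleaning shows up directly in what the final model can learn.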


DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.