-

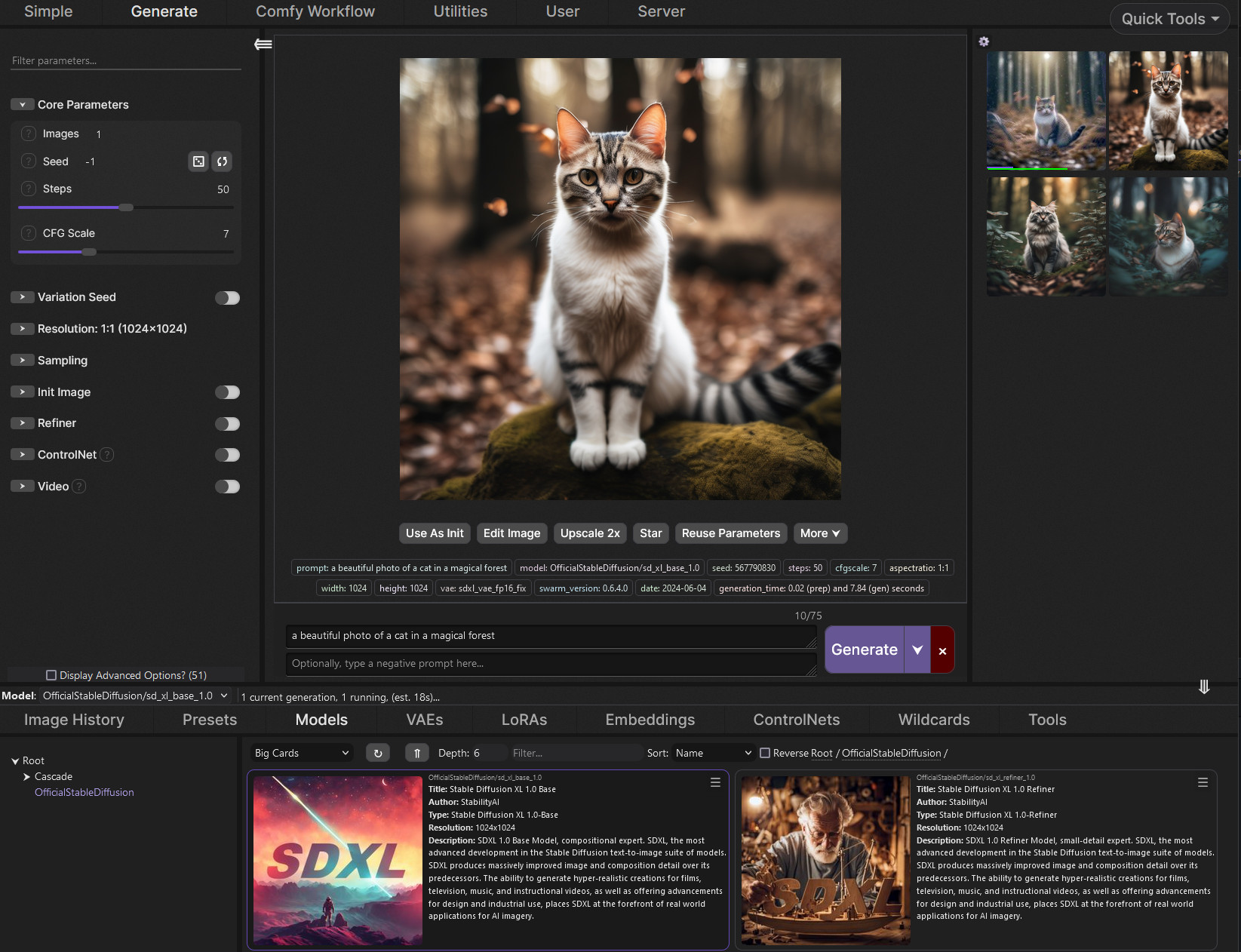

SwarmUI.net – A free, open source, modular AI image generation Web-User-Interface

https://github.com/mcmonkeyprojects/SwarmUI

A Modular AI Image Generation Web-User-Interface, with an emphasis on making powertools easily accessible, high performance, and extensibility. Supports AI image models (Stable Diffusion, Flux, etc.) and AI video models (LTX-V, Hunyuan Video, Cosmos, Wan, etc.), with plans to support e.g. audio and more in the future.

SwarmUI by default runs entirely locally on your own computer. It does not collect any data from you.

SwarmUI is 100% Free-and-Open-Source software, under the MIT License. You can do whatever you want with it.

-

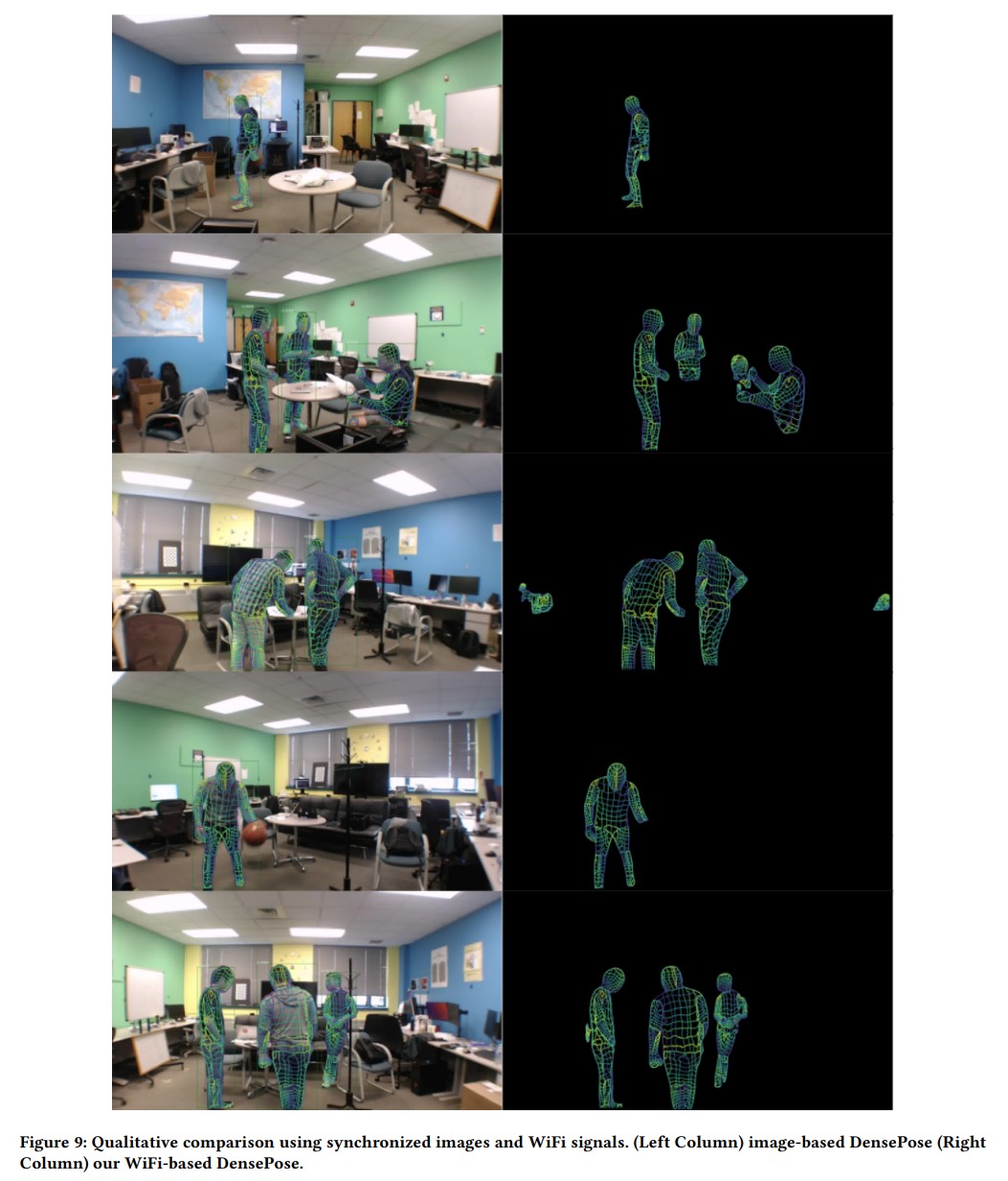

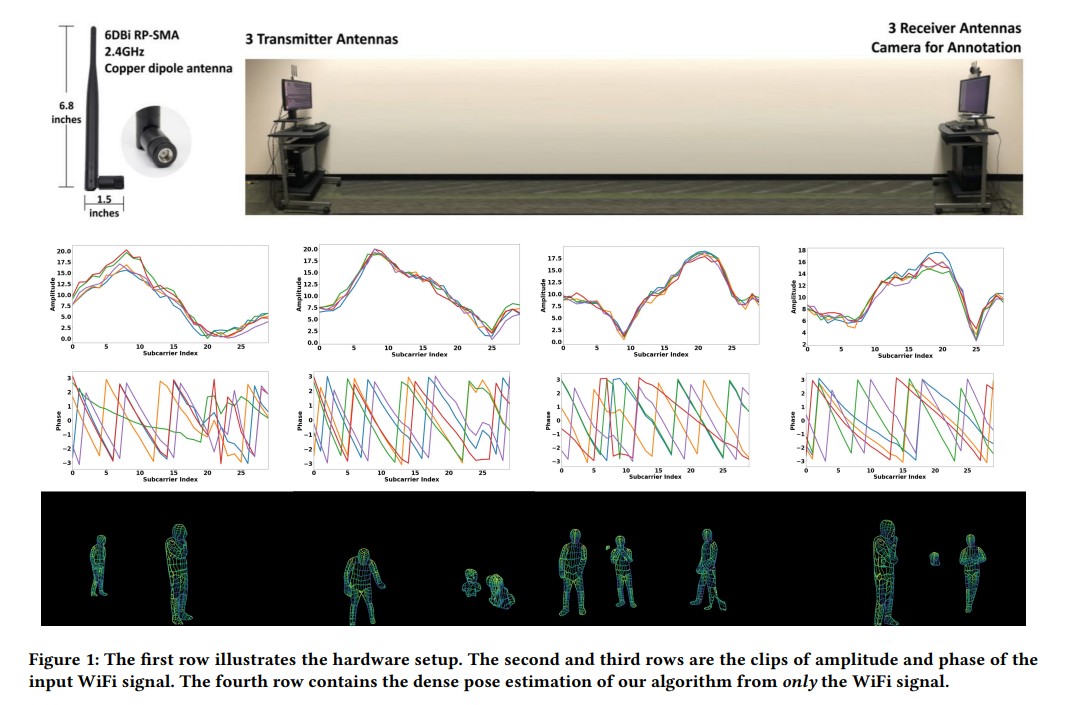

DensePose From WiFi using ML

https://arxiv.org/pdf/2301.00250

https://www.xrstager.com/en/ai-based-motion-detection-without-cameras-using-wifi

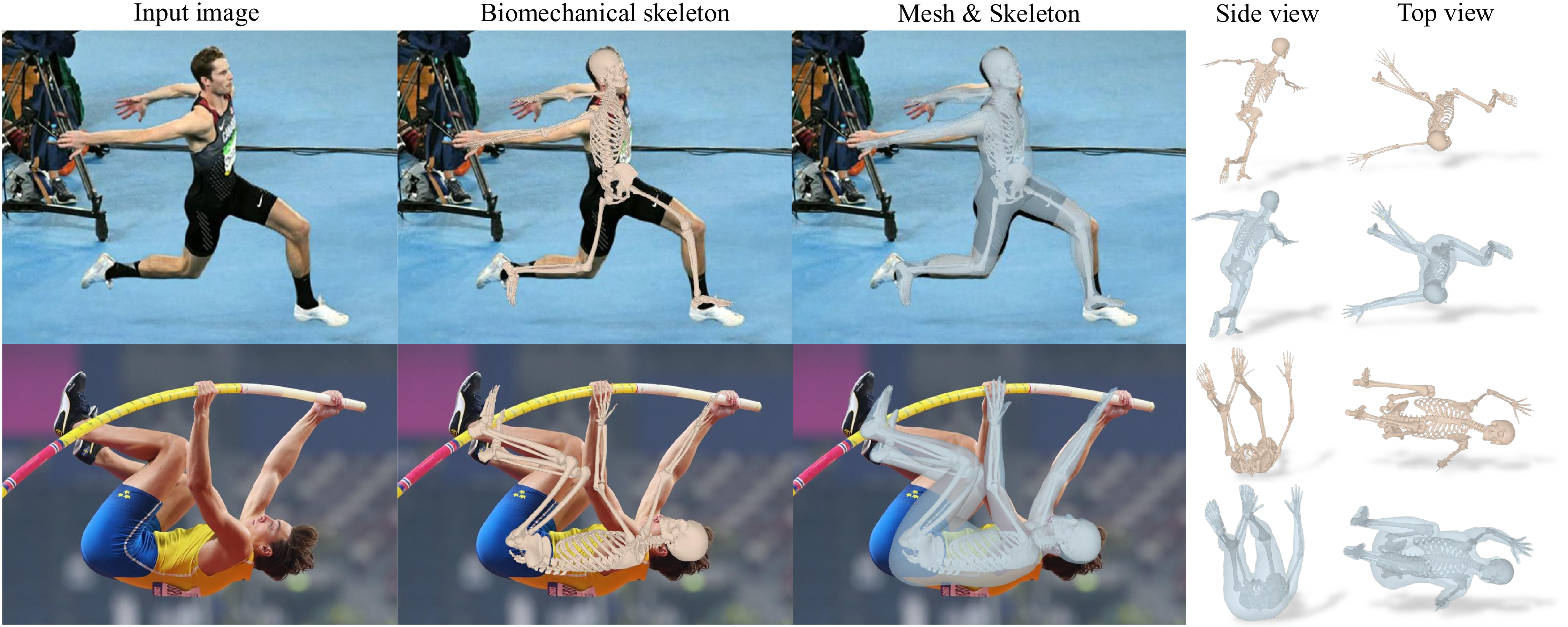

Advances in computer vision and machine learning techniques have led to significant development in 2D and 3D human pose estimation using RGB cameras, LiDAR, and radars. However, human pose estimation from images is adversely affected by common issues such as occlusion and lighting, which can significantly hinder performance in various scenarios.

Radar and LiDAR technologies, while useful, require specialized hardware that is both expensive and power-intensive. Moreover, deploying these sensors in non-public areas raises important privacy concerns, further limiting their practical applications.

To overcome these limitations, recent research has explored the use of WiFi antennas, which are one-dimensional sensors, for tasks like body segmentation and key-point body detection. Building on this idea, the current study expands the use of WiFi signals in combination with deep learning architectures—techniques typically used in computer vision—to estimate dense human pose correspondence.

In this work, a deep neural network was developed to map the phase and amplitude of WiFi signals to UV coordinates across 24 human regions. The results demonstrate that the model is capable of estimating the dense pose of multiple subjects with performance comparable to traditional image-based approaches, despite relying solely on WiFi signals. This breakthrough paves the way for developing low-cost, widely accessible, and privacy-preserving algorithms for human sensing.
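As a rough illustration of what "phase and amplitude of WiFi signals" means in practice, the sketch below splits complex channel measurements into the two quantities. It assumes the signal is available as complex channel state information (CSI) samples; the sample values and the helper name `amplitude_and_phase` are made up for illustration and are not taken from the paper's code.

```python
import cmath

def amplitude_and_phase(csi_samples):
    """Split complex CSI samples into parallel amplitude and phase lists."""
    amplitudes = [abs(z) for z in csi_samples]           # magnitude of each sample
    phases = [cmath.phase(z) for z in csi_samples]       # angle in radians, in (-pi, pi]
    return amplitudes, phases

# Hypothetical samples, one complex value per subcarrier (illustrative only).
samples = [complex(3, 4), complex(0, 2), complex(-1, 0)]
amps, phases = amplitude_and_phase(samples)
print(amps)
print(phases)
```

In a real pipeline these two arrays, rather than camera pixels, would form the input that the network maps to UV coordinates.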

-

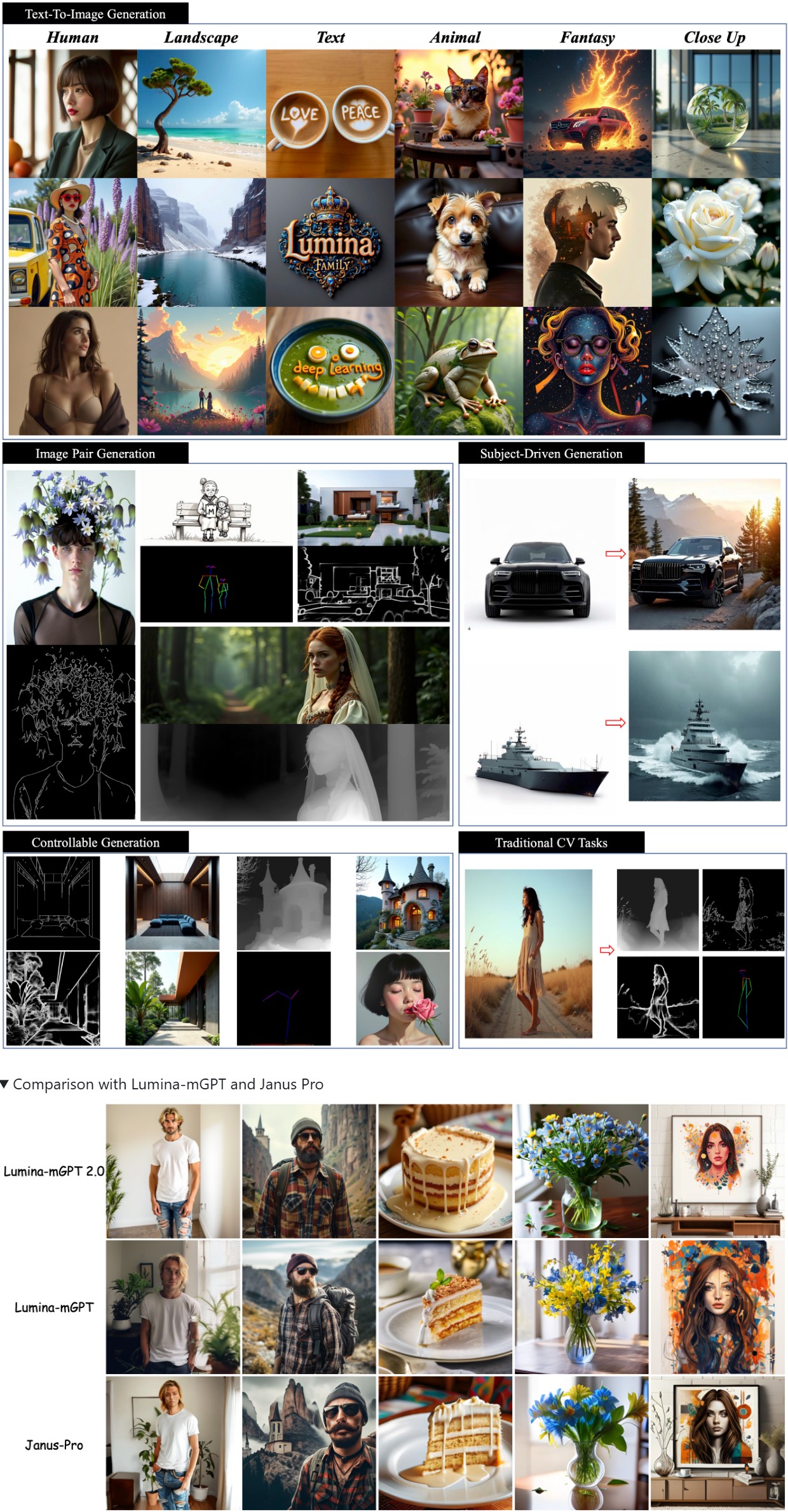

Lumina-mGPT 2.0 – Stand-alone Autoregressive Image Modeling

A stand-alone, decoder-only autoregressive model, trained from scratch, that unifies a broad spectrum of image generation tasks, including text-to-image generation, image pair generation, subject-driven generation, multi-turn image editing, controllable generation, and dense prediction.

https://github.com/Alpha-VLLM/Lumina-mGPT-2.0

-

Mamba and MicroMamba – Free, open source, general-purpose package managers for any kind of software and all operating systems

https://mamba.readthedocs.io/en/latest/user_guide/micromamba.html

https://mamba.readthedocs.io/en/latest/installation/micromamba-installation.html

https://micro.mamba.pm/api/micromamba/win-64/latest

https://prefix.dev/docs/mamba/overview

With mamba, it’s easy to set up software environments. A software environment is simply a set of different libraries, applications and their dependencies. The power of environments is that they can co-exist: you can easily have an environment called py27 for Python 2.7 and one called py310 for Python 3.10, so that multiple projects with different requirements each have a dedicated environment. This is similar to “containers” and images. However, mamba makes it easy to add, update or remove software from the environments.
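The idea of one environment per project can be sketched in a few lines of Python. This is only an illustration: the `ENVIRONMENTS` mapping and the helper names are hypothetical, and the script defaults to a dry run that prints the `micromamba create -n NAME python=VERSION` commands instead of executing them.

```python
import shutil
import subprocess

# Hypothetical mapping of environment name to Python version (py27 / py310 example).
ENVIRONMENTS = {"py27": "2.7", "py310": "3.10"}

def build_create_command(env_name, python_version):
    """Return the argument list for `micromamba create -n <env> python=<version>`."""
    return ["micromamba", "create", "-n", env_name, f"python={python_version}"]

def create_all(environments, dry_run=True):
    """Build one create command per environment and run it only when asked to."""
    commands = [build_create_command(name, ver) for name, ver in environments.items()]
    for cmd in commands:
        print(" ".join(cmd))
        # Only execute when explicitly requested and micromamba is on the PATH.
        # micromamba may ask for confirmation on the terminal before installing.
        if not dry_run and shutil.which("micromamba"):
            subprocess.run(cmd, check=True)
    return commands

if __name__ == "__main__":
    create_all(ENVIRONMENTS)
```

Calling `create_all(ENVIRONMENTS, dry_run=False)` would attempt to create both environments, which can then live side by side without interfering with each other.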

To create a Python environment under Windows:

micromamba create -n myenv python=3.10

This will create the myenv environment under:

C:\Users\<USERNAME>\AppData\Roaming\mamba\envs\myenv

Once the environment is created, activate it with:

micromamba activate myenv

Or to execute a single command in this environment, use:

micromamba run -n myenv mycommand

To add a Windows shortcut that launches the micromamba environment:

cmd.exe /K micromamba activate myenv

-

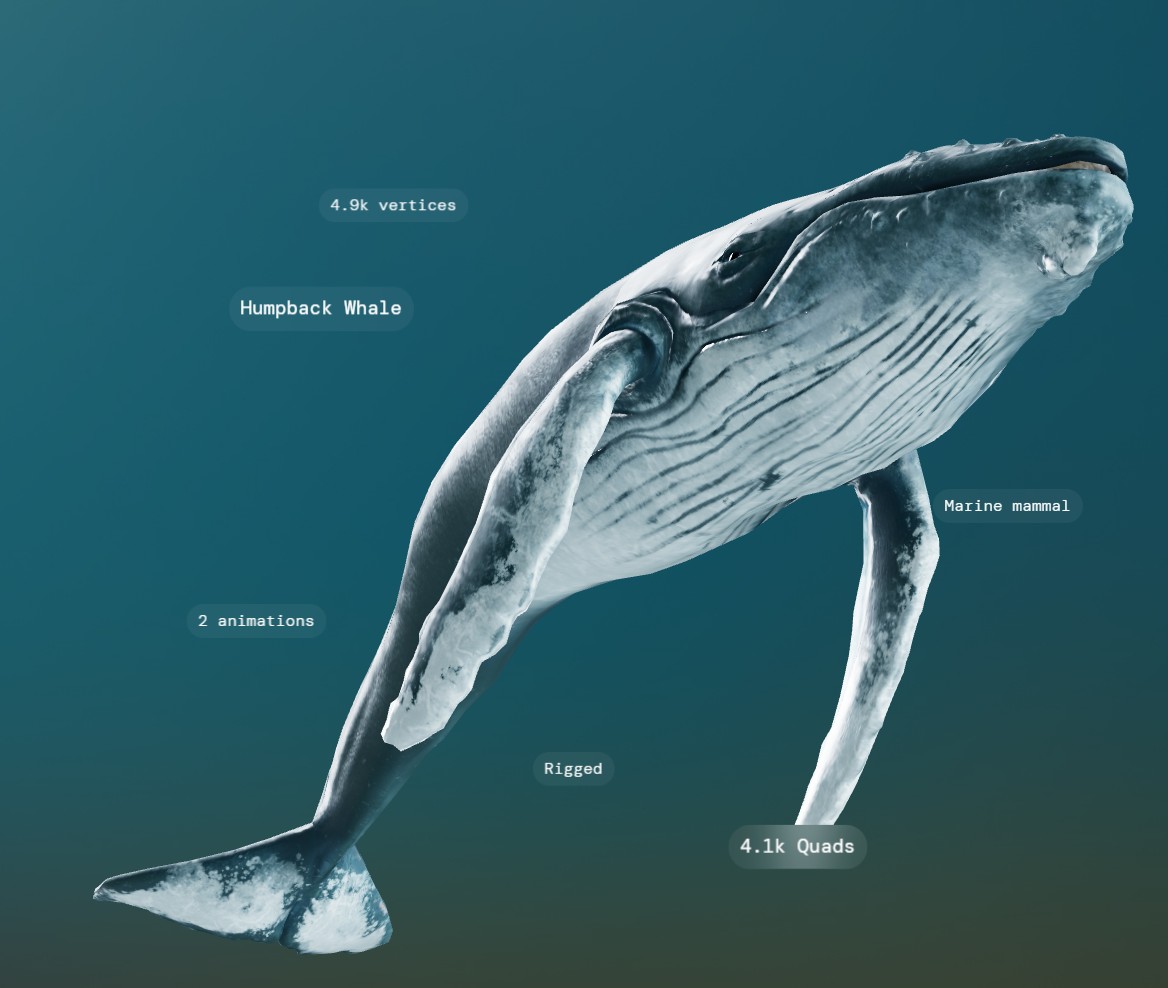

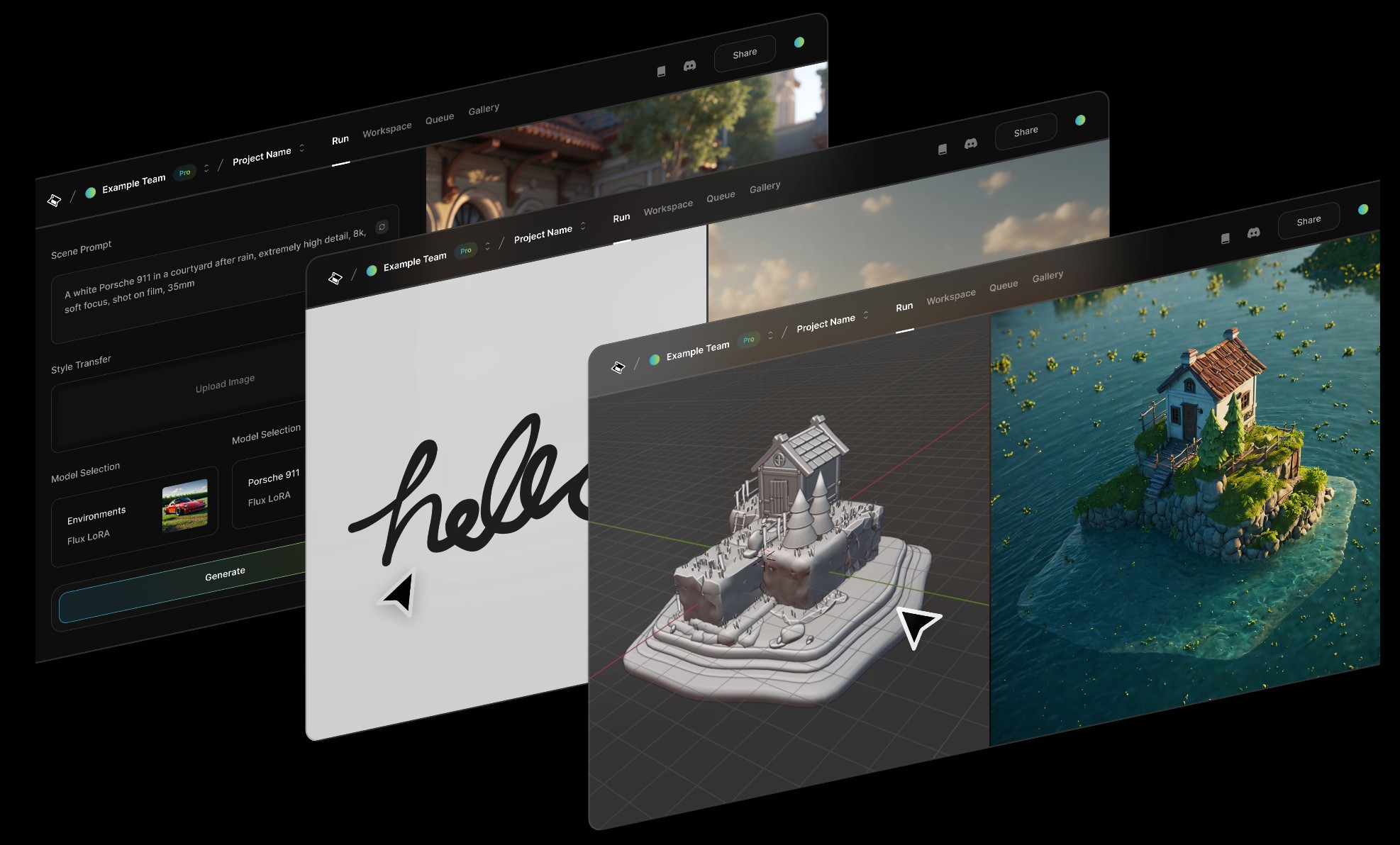

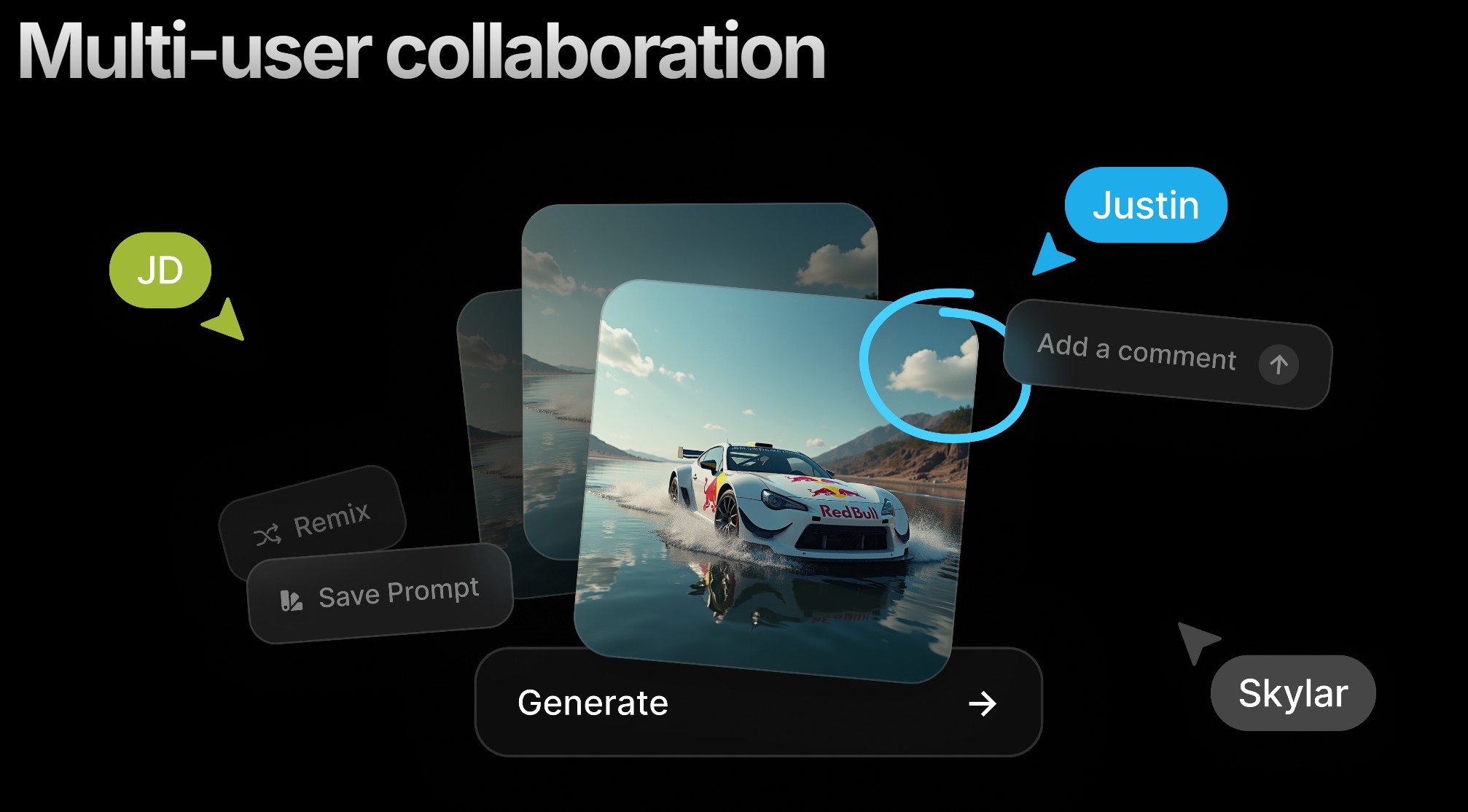

PlayBook3D – Creative controls for all media formats

Playbook3d.com is a diffusion-based render engine that reduces the time to final image with AI. It is accessible via web editor and API with support for scene segmentation and re-lighting, integration with production pipelines and frame-to-frame consistency for image, video, and real-time 3D formats.

-

AI Creativity – Genius or Gimmick?

7:59-9:50 Justine Bateman:

“I mean first I want to give people, help people have a little bit of a definition of what generative AI is—

think of it as like a blender and if you have a blender at home and you turn it on, what does it do? It depends on what I put into it, so it cannot function unless it’s fed things.

Then you turn on the blender and you give it a prompt, which is your little spoon, and you get a little spoonful—little Frankenstein spoonful—out of what you asked for.

So what is going into the blender? Every bit of a hundred years of film and television or many, many years of, you know, doctor’s reports or students’ essays or whatever it is.

In the film business, in particular, that’s what we call theft; it’s the biggest violation. And the term that continues to be used is “all we did.” I think the CTO of OpenAI—believe that’s her position; I forget her name—when she was asked in an interview recently what she had to say about the fact that they didn’t ask permission to take it in, she said, “Well, it was all publicly available.”

And I will say this: if you own a car (I know we’re in New York City, so it’s not going to be as applicable), but if I see a car in the street, it’s publicly available, but somehow it’s illegal for me to take it. That’s what we have the copyright office for, and I don’t know how well staffed they are to handle something like this, but this is the biggest copyright violation in the history of that office and the US government.”

-

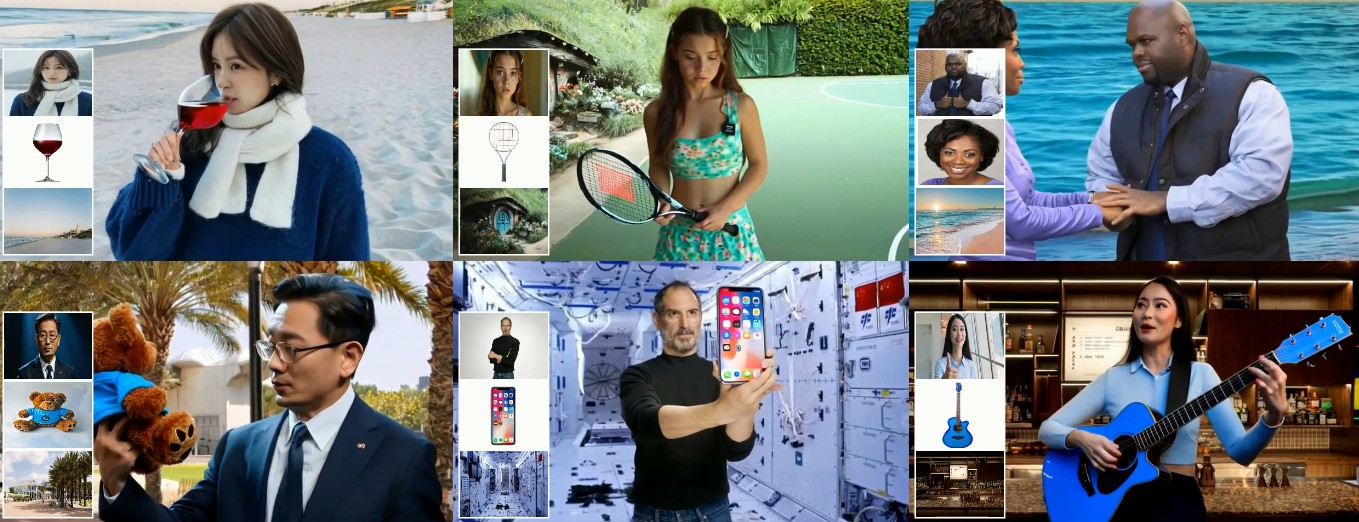

Aze Alter – What If Humans and AI Unite? | AGE OF BEYOND

https://www.patreon.com/AzeAlter

Voices & Sound Effects: https://elevenlabs.io/

Video Created mainly with Luma: https://lumalabs.ai/

LUMA LABS

KLING

RUNWAY

ELEVEN LABS

MINIMAX

MIDJOURNEY

Music By Scott Buckley

-

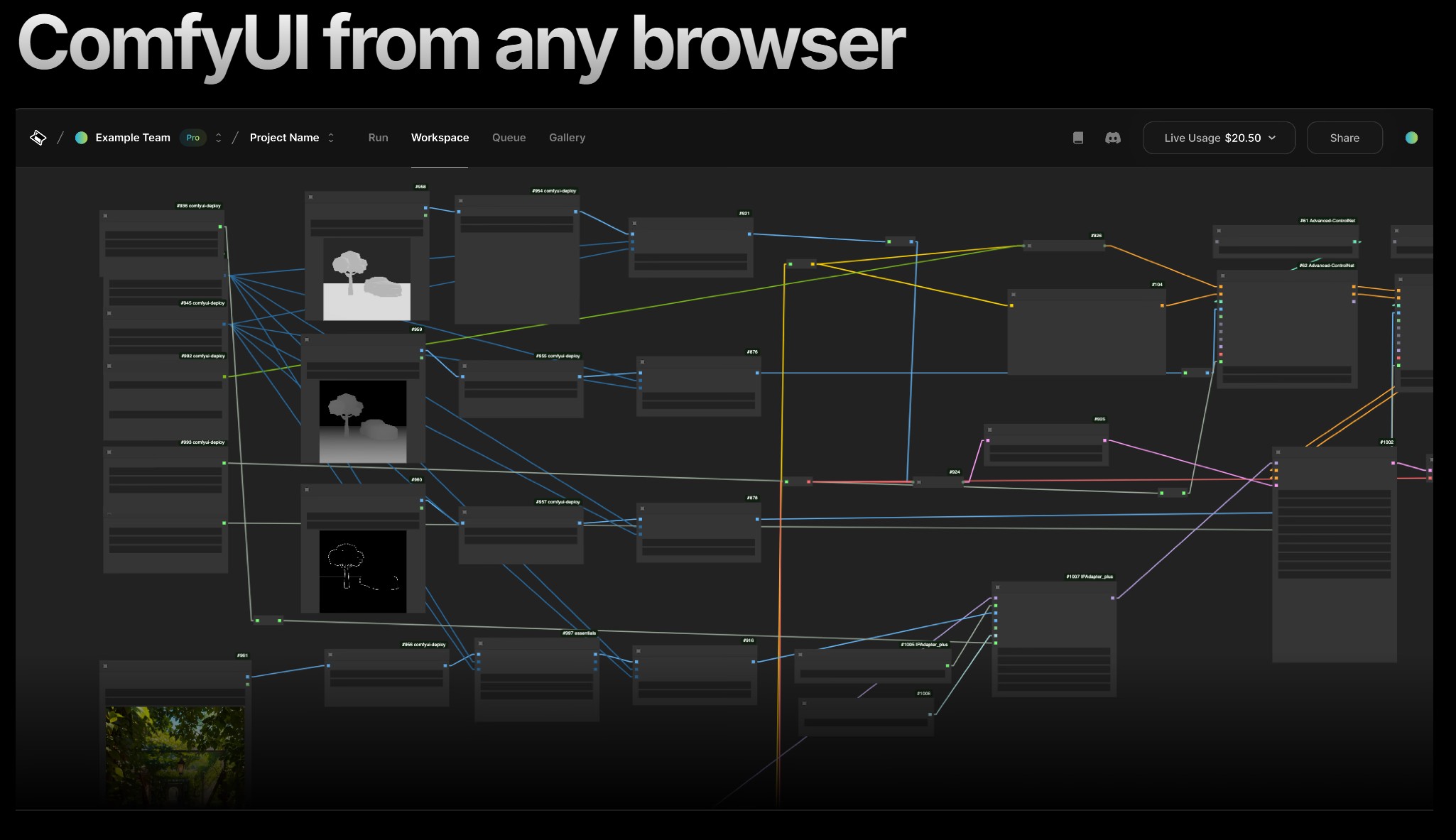

ComfyUI-Manager Joins Comfy-Org

https://blog.comfy.org/p/comfyui-manager-joins-comfy-org

On March 28, ComfyUI-Manager will be moving to the Comfy-Org GitHub organization as Comfy-Org/ComfyUI-Manager. This represents a natural evolution as they continue working to improve the custom node experience for all ComfyUI users.

What This Means For You

This change is primarily about improving support and development velocity. There are a few practical considerations:

- Automatic GitHub redirects will ensure all existing links, git commands, and references to the repository will continue to work seamlessly without any action needed

- For developers: Any existing PRs and issues will be transferred to the new repository location

- For users: ComfyUI-Manager will continue to function exactly as before—no action needed

- For workflow authors: Resources that reference ComfyUI-Manager will continue to work without interruption
