https://nielscautaerts.xyz/python-dependency-management-is-a-dumpster-fire.html

For many modern programming languages, the associated tooling has lock-file-based dependency management baked in. Rust’s Cargo is a great example.

Not so with Python.

The default package manager for Python is pip, and the default instruction for installing a package is to run pip install package. Unfortunately, this imperative approach to creating your environment is entirely divorced from the versioning of your code. You very quickly end up with hundreds of installed packages. You no longer know which packages you explicitly asked for and which were pulled in as transitive dependencies. You no longer know which version of the code worked in which environment, and there is no way to roll back to an earlier version of your environment. Installing any new package could break your environment.
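pip does not record which packages you asked for, but you can approximate it: a package that no other installed package depends on was probably requested explicitly. pip’s own pip list --not-required reports exactly this. The sketch below reproduces the idea with the standard-library importlib.metadata module so the logic is visible. It is only a heuristic: a package you installed on purpose that is also a dependency of something else will not show up, and the regex-based requirement parsing is a simplification of the full PEP 508 grammar (the packaging library handles that properly).

```python
import re
from importlib import metadata


def normalize(name: str) -> str:
    """PEP 503 name normalization, e.g. 'Charset_Normalizer' -> 'charset-normalizer'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def requirement_name(req: str) -> str:
    """Pull the bare project name out of a requirement string such as 'idna<4,>=2.5'."""
    match = re.match(r"\s*([A-Za-z0-9][A-Za-z0-9._-]*)", req)
    return normalize(match.group(1)) if match else ""


def installed_graph() -> dict[str, list[str]]:
    """Map every distribution installed in this interpreter to its declared requirements."""
    graph: dict[str, list[str]] = {}
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if name:  # skip broken installs without a name in their metadata
            graph[normalize(name)] = list(dist.requires or [])
    return graph


def top_level(graph: dict[str, list[str]]) -> list[str]:
    """Packages that nothing else in `graph` depends on (a rough guess at what you asked for)."""
    required = {
        requirement_name(req)
        for reqs in graph.values()
        for req in reqs
        if not re.search(r"extra\s*==", req)  # ignore optional extras
    }
    return sorted(name for name in graph if name not in required)


if __name__ == "__main__":
    for name in top_level(installed_graph()):
        print(name)
```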

…

…

Because Python is mostly a “glue” language, you often end up in a situation where you need non-Python dependencies in your environment. Unfortunately, those are usually not installable through pip, so you need additional tooling to bootstrap your environment.
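Since pip cannot install these non-Python pieces, a project can at least check for them before it runs. A minimal sketch, assuming a hypothetical project that needs the OpenSSL and SQLite shared libraries plus the git and ffmpeg executables; it relies only on the standard library’s ctypes.util.find_library and shutil.which, and it merely reports what is visible on this machine without installing anything.

```python
import ctypes.util
import shutil
from typing import Optional


def check_system_deps(libraries: list[str], executables: list[str]) -> dict[str, Optional[str]]:
    """Report which non-Python dependencies are visible; None means 'not found'."""
    found: dict[str, Optional[str]] = {}
    for lib in libraries:
        # find_library expects the bare name: "ssl" for libssl.so / libssl.dylib
        found[f"lib:{lib}"] = ctypes.util.find_library(lib)
    for exe in executables:
        # which() searches PATH the same way a shell would
        found[f"exe:{exe}"] = shutil.which(exe)
    return found


if __name__ == "__main__":
    # Hypothetical requirements of an imaginary project
    report = check_system_deps(["ssl", "sqlite3"], ["git", "ffmpeg"])
    missing = [name for name, path in report.items() if path is None]
    print("missing:", missing or "nothing")
```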

PIP

Pip is the default package manager that ships with Python, which is a big advantage: you don’t need to install anything else. By default it installs packages from the Python Package Index (PyPI, pypi.org), where a huge number of packages are available (500K+).

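Until fairly recently (late 2020), pip did not have a robust dependency resolver, which made it very easy to break an environment: depending on the order in which you installed packages, you could end up with a different, or even inconsistent, environment. Since pip v20.3, all requirements passed to a single pip install command are resolved together, which largely fixes this. Separate pip install runs can still leave an environment inconsistent, but pip now warns about the conflicting dependencies when that happens.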
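The order dependence is easy to reproduce with a toy model. The sketch below is not pip’s code (pip 20.3+ uses the resolvelib backtracking resolver); it uses a hypothetical three-package index in which the newest “a” needs c==2 while “b” needs c==1. The naive installer mimics the old behavior of taking the newest allowed version and overwriting whatever is already installed, while resolve searches for one version per package that satisfies every constraint and backtracks to an older “a” when it hits a conflict.

```python
# Hypothetical toy index: package -> version -> {dependency: allowed versions}
INDEX = {
    "a": {2: {"c": {2}}, 1: {"c": {1, 2}}},
    "b": {1: {"c": {1}}},
    "c": {2: {}, 1: {}},
}


def naive_install(requests, index):
    """Old-style behavior: newest allowed version wins, earlier installs get overwritten."""
    installed = {}

    def install(pkg, allowed=None):
        version = max(v for v in index[pkg] if allowed is None or v in allowed)
        installed[pkg] = version  # may silently break a previously installed package
        for dep, dep_allowed in index[pkg][version].items():
            install(dep, dep_allowed)

    for pkg in requests:
        install(pkg)
    return installed


def is_consistent(installed, index):
    """True if every installed package's requirements are satisfied."""
    return all(
        installed.get(dep) in allowed
        for pkg, version in installed.items()
        for dep, allowed in index[pkg][version].items()
    )


def resolve(requests, index):
    """Backtracking search for one version per package that satisfies all constraints."""

    def search(pending, chosen, constraints):
        if not pending:
            return chosen
        pkg, rest = pending[0], pending[1:]
        allowed = constraints.get(pkg, set(index[pkg]))
        if pkg in chosen:
            return search(rest, chosen, constraints) if chosen[pkg] in allowed else None
        for version in sorted(allowed, reverse=True):  # try newest first
            deps = index[pkg][version]
            new_constraints = dict(constraints)
            for dep, dep_allowed in deps.items():
                merged = new_constraints.get(dep, set(index[dep])) & dep_allowed
                if not merged or (dep in chosen and chosen[dep] not in merged):
                    break  # this version conflicts; try an older one
                new_constraints[dep] = merged
            else:  # no conflict: commit to this version and continue with its deps
                result = search(rest + list(deps), {**chosen, pkg: version}, new_constraints)
                if result is not None:
                    return result
        return None  # nothing works here: the caller backtracks

    return search(list(requests), {}, {})


if __name__ == "__main__":
    print(naive_install(["a", "b"], INDEX))  # {'a': 2, 'c': 1, 'b': 1}
    print(is_consistent(naive_install(["a", "b"], INDEX), INDEX))  # False
    print(resolve(["a", "b"], INDEX))  # {'a': 1, 'b': 1, 'c': 1}
```

Swapping the request order to ["b", "a"] changes what the naive installer produces, while the resolver returns the same consistent set either way.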

The downside of pip is that it’s a Python tool. You first need a Python installation in order to use it, and pip is confined to that Python installation. Pip cannot manage Python itself, nor any other non-Python package.

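Before version 22.2, pip had no built-in “dry run” mode that simulates an installation without making any changes. Adding the --verbose (or -v) flag shows more detail about what pip is doing, but pip still goes ahead and performs the installation. Since pip 22.2, pip install accepts a --dry-run flag that resolves the dependencies and reports what would be installed without changing the environment. On older versions, the workarounds below still apply.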

Alternatives and Workarounds to Preview Changes First

- Temporary Virtual Environment: One common workaround is to create a temporary virtual environment, run the installation command there, and then inspect the installed packages. Once you’re done, you can simply remove the environment.

- pip-tools (pip-compile): Tools like pip-tools resolve and list the dependencies in a requirements file without installing them. Install pip-tools, then compile the requirements into a separate, fully pinned file to get an overview of the dependency tree:

    python.exe -m pip install pip-tools
    python.exe -m piptools compile --verbose requirements.txt -o compiled_requirements.txt

- pip download: Another option is to run

    pip download -r requirements.txt

  to fetch the packages (and their dependencies) without installing them. Nothing is installed into your environment, but you end up with the package files (wheels or source archives) to inspect.

Each of these approaches can help you inspect or collect the packages that would be installed without modifying your current environment.
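With pip 22.2 or newer, the --dry-run flag can be combined with --report to get a machine-readable preview. A minimal sketch that shells out to the current interpreter’s pip and lists the (name, version) pairs it would install; it assumes the report’s install entries carry metadata.name and metadata.version as described in pip’s installation-report documentation, and preview_install needs network access to reach the package index.

```python
import json
import subprocess
import sys


def summarize_report(report: dict) -> list[tuple[str, str]]:
    """Extract sorted (name, version) pairs from a pip installation report."""
    return sorted(
        (item["metadata"]["name"], item["metadata"]["version"])
        for item in report.get("install", [])
    )


def preview_install(*requirements: str) -> list[tuple[str, str]]:
    """Ask pip what it would install; nothing is installed (needs pip >= 22.2)."""
    completed = subprocess.run(
        [
            sys.executable, "-m", "pip", "install",
            "--dry-run",           # resolve only, do not install
            "--ignore-installed",  # list everything, even packages already present
            "--quiet",             # keep stdout clean for the JSON report
            "--report", "-",       # write the JSON report to stdout
            *requirements,
        ],
        check=True,
        capture_output=True,
        text=True,
    )
    return summarize_report(json.loads(completed.stdout))


if __name__ == "__main__":
    for name, version in preview_install("requests"):
        print(f"{name}=={version}")
```

Dropping --ignore-installed would instead show only what would change relative to the current environment.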

VENV

One could say that venv is the pip equivalent for virtual environments. It’s a built-in tool that serves to create virtual environments. Inside the virtual environment you can install packages with pip.

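It works by creating a folder, typically within your project directory, that holds a link to (or copy of) the Python interpreter, a pyvenv.cfg file, and a site-packages directory for the packages you install. A small shell script inside this folder “activates” the environment by prepending the environment’s bin directory (Scripts on Windows) to the PATH environment variable and unsetting PYTHONHOME, so that python and pip resolve to the environment’s copies, which in turn look for packages in the environment’s own site-packages.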
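Activation is a convenience rather than a requirement: calling the environment’s interpreter directly works just as well, because that interpreter finds the pyvenv.cfg file at the root of the environment and sets sys.prefix accordingly. A small sketch using the standard-library venv module; the folder name demo-env is arbitrary, and the environment is created without pip (with_pip=False) to keep the example fast and offline.

```python
import os
import subprocess
import sys
import tempfile
import venv
from pathlib import Path


def env_python(env_dir: Path) -> Path:
    """Path of the interpreter inside a venv: bin on POSIX, Scripts on Windows."""
    if sys.platform == "win32":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


def create_and_probe(env_dir: Path) -> dict[str, str]:
    """Create a bare venv and ask its interpreter where it thinks it lives."""
    # with_pip=False keeps this fast and offline; symlinks mirror `python -m venv` on POSIX
    venv.create(env_dir, with_pip=False, symlinks=(os.name != "nt"))
    # Call the environment's interpreter directly: no activation script involved
    lines = subprocess.run(
        [str(env_python(env_dir)), "-c",
         "import sys; print(sys.prefix); print(sys.base_prefix)"],
        check=True, capture_output=True, text=True,
    ).stdout.splitlines()
    return {
        "prefix": lines[0],
        "base_prefix": lines[1],
        "pyvenv.cfg": (env_dir / "pyvenv.cfg").read_text(),
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        info = create_and_probe(Path(tmp) / "demo-env")
        print(info["prefix"])       # the venv folder
        print(info["base_prefix"])  # the Python installation it was created from
        print(info["pyvenv.cfg"])   # key = value lines, including home
```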

A potential downside of this virtual environment approach is that if many projects need the same packages, each project’s environment holds its own duplicate copy of them.

UV

uv is relatively new tooling, developed by the same people who created ruff (Astral), that aims to be the all-in-one Python project and package manager. As its README puts it: “A single tool to replace pip, pip-tools, pipx, poetry, pyenv, virtualenv, and more.”

It indeed delivers on this promise and more. uv feels very much like a tool that aims to do everything, and manages to do it better.

Containers (e.g. Docker)

At my previous place of employment, Conda was a dirty word. Instead, the preferred tooling for managing system dependencies and isolating environments was containers. Inside the container, basic Python tooling like pip, venv and pip-tools was used, although some teams also used Poetry.

Containers have become the industry standard for deploying services. Very crudely, a container is a mini virtual machine that ships an application with all its dependencies in a single artifact. The only dependencies required on the host are a Linux kernel and a container runtime. Once you have a container image, running it is a breeze and fully reproducible.

The main downsides of containers are the chore of building images and shipping them around (push/pull). Containers are built based on a file with build instructions, e.g. a Dockerfile for Docker containers. Writing this file so that a container builds quickly, correctly and in a reproducible way is an art. I’ve written at length about some of the limitations of containers here.

While containers primarily serve as a deployment mechanism, some people also advocate for their use as a development tool. By isolating all dependencies from the host and developing inside a container, we can guarantee that all developers see exactly the same environment. In this way, dev containers can serve as a replacement for virtual environments or Conda environments.

CONDA

With Conda, we come to the bifurcation in the Python ecosystem. The “main” Python ecosystem centers around pip-installable packages from pypi.org, and all the tooling mentioned so far orbits this system. Conda is a completely different package manager, developed by Anaconda, a private company. It primarily installs packages from anaconda.org, a repository that has no link with pypi.org. Unlike pip, Conda can also create virtual environments.

So why have this parallel ecosystem of packages?

The main reason Conda was developed was to solve the problems faced in the fields of scientific research and data science.

Conclusion

Key Points

- From Simple Script to Catastrophe

  - The post opens with an analogy: a quick Python script built for automation eventually grows into multiple scripts, modules, and even a full library.

  - Initial success gives way to disaster when others struggle to replicate the working environment, and even minor changes can break everything.

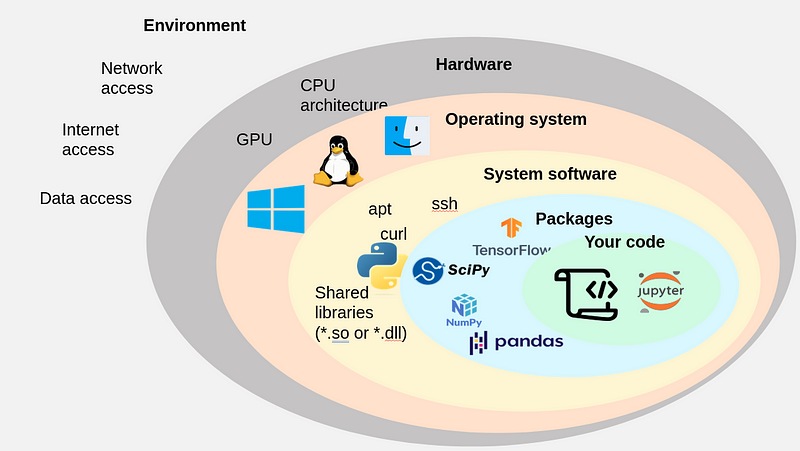

- Layers of Dependencies

  - Dependencies aren’t limited to just pip-installable Python packages. They also include:

    - System packages: Global libraries (.so/.dll files) managed by the OS.

    - Operating systems & hardware: Variations in OS behavior and CPU architectures can affect code execution.

    - External environments: Network resources or APIs that your code relies on.

  - This layered complexity means that even simple Python code is supported by a vast and intricate ecosystem.

- The Reproducibility Challenge

  - Reproducibility is crucial across development, build, and deployment stages.

  - A reproducible environment guarantees that everyone, from individual developers to large teams, runs the exact same versions of every dependency, minimizing unexpected errors.

- The Role of Definition and Lock Files

  - A definition file lists the direct dependencies (e.g., “pandas>=1.5,<2.0”), but this alone is not enough.

  - A lock file fixes the versions of all dependencies (including transitive ones) so that the environment remains consistent over time.

  - Lock files act as a safeguard, ensuring that the environment can be recreated exactly, regardless of when or where it’s set up.

- Pitfalls with Python’s Default Tools

  - The default package manager, pip, encourages an imperative “pip install” approach.

  - This method makes it easy to accumulate untracked dependencies and inadvertently break environments, particularly for beginners.

  - Pip also cannot manage non-Python components, including the Python interpreter itself, which further complicates the dependency landscape.

- Ecosystem Fragmentation and Tooling Choices

  - The Python ecosystem offers multiple tools (e.g., Conda, virtual environments, Nix) to manage dependencies and isolation, but no single tool covers all needs.

  - Developers must decide which tools best fit their project’s requirements and balance the trade-offs between ease of use and complete isolation.

- Irony in the Ecosystem

  - Many dependency management tools for Python are themselves written in Python, which creates a chicken-and-egg problem: you need a working Python environment to install the tool that manages your Python environments.

  - A recent trend is rewriting these tools in more robust languages like Rust to avoid such pitfalls.
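The distinction between definition and lock files in the key points can be made concrete with a small checker. A minimal sketch, assuming a pip-compile style requirements.txt in which every package, transitive ones included, is pinned as name==version (comment and --hash lines are skipped); it reports locked packages that are missing or installed at a different version. Version strings are compared literally, so a stricter tool would normalize them with the packaging library.

```python
import re
from importlib import metadata


def normalize(name: str) -> str:
    """PEP 503 name normalization, so 'Requests' and 'requests' compare equal."""
    return re.sub(r"[-_.]+", "-", name).lower()


# name, optional [extras], '==', version (stops at whitespace, ';' or a trailing '\')
PIN = re.compile(r"([A-Za-z0-9][A-Za-z0-9._-]*)(?:\[[^\]]*\])?==([^\s;\\]+)")


def parse_lock(text: str) -> dict[str, str]:
    """Read exact 'name==version' pins, skipping comments and '--hash' option lines."""
    pins = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        match = PIN.match(line)
        if match:
            pins[normalize(match.group(1))] = match.group(2)
    return pins


def installed_versions() -> dict[str, str]:
    """Versions of everything installed in the current interpreter."""
    return {
        normalize(dist.metadata.get("Name")): dist.version
        for dist in metadata.distributions()
        if dist.metadata.get("Name")
    }


def check_against_lock(pins: dict[str, str], installed: dict[str, str]) -> list[str]:
    """List locked packages that are missing or at a different version."""
    problems = []
    for name, wanted in sorted(pins.items()):
        have = installed.get(name)
        if have is None:
            problems.append(f"{name}: not installed (lock wants {wanted})")
        elif have != wanted:  # literal comparison: '2.0' and '2.0.0' count as different
            problems.append(f"{name}: installed {have}, lock wants {wanted}")
    return problems


if __name__ == "__main__":
    from pathlib import Path

    pins = parse_lock(Path("requirements.txt").read_text())
    for problem in check_against_lock(pins, installed_versions()):
        print(problem)
```

Packages that are installed but absent from the lock are deliberately not reported, since tools such as pip itself usually live in the environment without being locked.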

Conclusion

Niels Cautaerts paints a cautionary picture of Python dependency management. He emphasizes that without strict practices, such as using version control, ensuring proper environment isolation, and employing lock files, the seemingly benign act of installing a library can spiral into an intractable mess. The post ultimately serves as a call to arms for better tools and practices in the Python community to tame this “dumpster fire.”