https://arstechnica.com/ai/2025/01/nvidias-first-desktop-pc-can-run-local-ai-models-for-3000

https://www.nvidia.com/en-us/project-digits

Some smaller open-weights AI language models (such as Llama 3.1 70B, with 70 billion parameters) and various AI image-synthesis models like Flux.1 dev (12 billion parameters) could probably run comfortably on Project DIGITS, but larger open models like Llama 3.1 405B, with 405 billion parameters, may not. Given the recent explosion of smaller AI models, a creative developer could likely run quite a few interesting models on the unit.

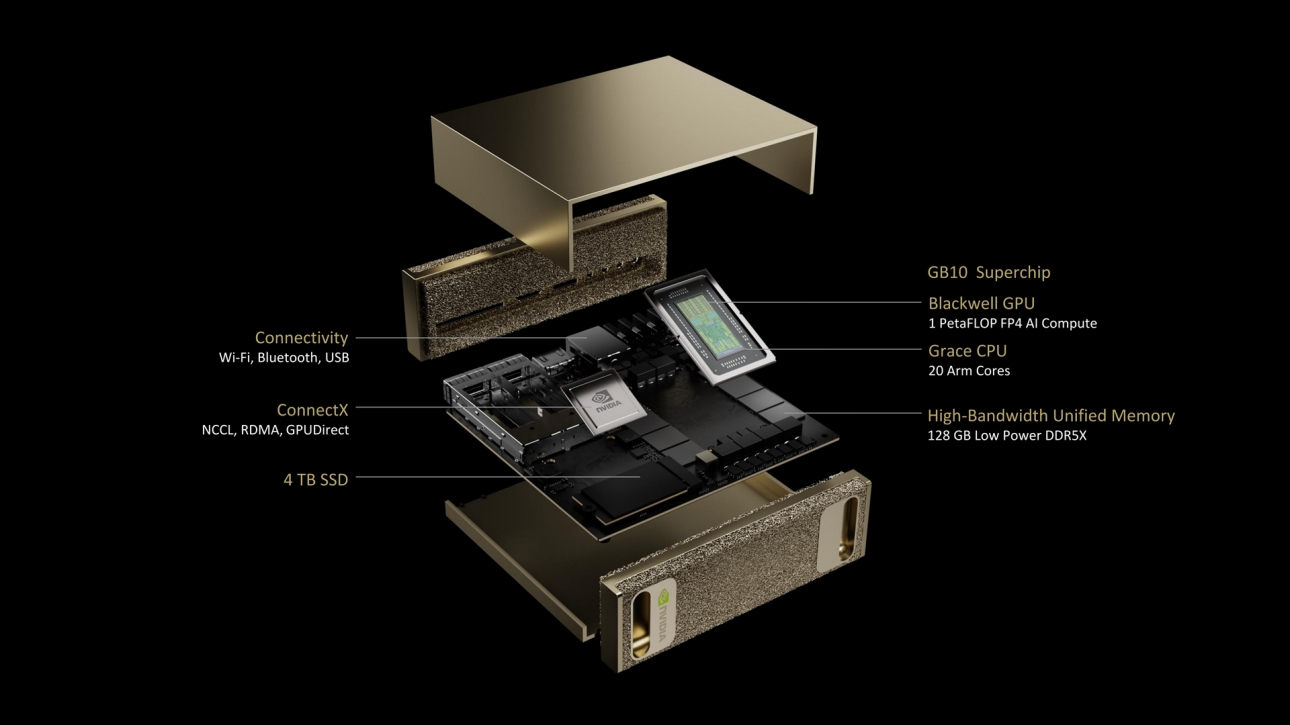

DIGITS’ 128GB of unified RAM is notable because a high-power consumer GPU like the RTX 4090 has only 24GB of VRAM. Memory capacity acts as a hard limit on how many parameters a model can have and still be loaded, so more memory makes room for running larger AI models locally.
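To make the memory limit concrete, here is a rough back-of-the-envelope sketch in Python that multiplies parameter count by bytes per parameter. The parameter counts and memory capacities are the ones quoted above. Everything else is an assumption for illustration: the three precisions (16-bit, 8-bit, 4-bit), decimal gigabytes, and the simplification that only the weights count (real workloads also need room for activations, caches, and the operating system, so actual headroom is smaller). The helper names `weight_gb` and `fits` are hypothetical.

```python
# Rough estimate of weight memory: parameters x bytes per parameter.
# Assumption: weights only; activations, caches, and OS overhead are ignored.

GB = 10**9  # assumption: decimal gigabytes

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

# Parameter counts quoted in the article.
MODELS = {
    "Llama 3.1 70B": 70e9,
    "Flux.1 dev": 12e9,
    "Llama 3.1 405B": 405e9,
}

# Memory capacities quoted in the article, in GB.
DEVICES_GB = {"Project DIGITS": 128, "RTX 4090": 24}


def weight_gb(params: float, precision: str) -> float:
    """Return the approximate size of the weights in GB."""
    return params * BYTES_PER_PARAM[precision] / GB


def fits(params: float, precision: str, capacity_gb: float) -> bool:
    """Return True if the weights alone fit within capacity_gb."""
    return weight_gb(params, precision) <= capacity_gb


if __name__ == "__main__":
    for name, params in MODELS.items():
        for precision in BYTES_PER_PARAM:
            size = weight_gb(params, precision)
            verdicts = ", ".join(
                f"{device}: {'fits' if size <= cap else 'too big'}"
                for device, cap in DEVICES_GB.items()
            )
            print(f"{name:15s} {precision:5s} {size:7.1f} GB  ({verdicts})")
```

Under these assumptions, the 70B model's weights come to about 140 GB at 16-bit, which exceeds 128GB, but about 70 GB at 8-bit and 35 GB at 4-bit. The 405B model needs about 202.5 GB even at 4-bit, which is consistent with the article's doubt that it would run on a single unit.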