
LATEST POSTS

-

ComfyUI SeC Nodes – ComfyUI custom nodes for SeC (Segment Concept) – State-of-the-art video object segmentation that outperforms SAM 2.1, utilizing the SeC-4B model developed by OpenIXCLa

https://github.com/9nate-drake/Comfyui-SecNodes

What is SeC?

SeC (Segment Concept) is a breakthrough in video object segmentation that shifts from simple feature matching to high-level conceptual understanding. Unlike SAM 2.1, which relies primarily on visual similarity, SeC uses a Large Vision-Language Model (LVLM) to understand what an object is conceptually, enabling robust tracking through:

- Semantic Understanding: Recognizes objects by concept, not just appearance

- Scene Complexity Adaptation: Automatically balances semantic reasoning vs feature matching

- Superior Robustness: Handles occlusions, appearance changes, and complex scenes better than SAM 2.1

- SOTA Performance: +11.8 points over SAM 2.1 on the SeCVOS benchmark

How SeC Works

- Visual Grounding: You provide initial prompts (points/bbox/mask) on one frame

- Concept Extraction: SeC’s LVLM analyzes the object to build a semantic understanding

- Smart Tracking: Dynamically uses both semantic reasoning and visual features

- Keyframe Bank: Maintains diverse views of the object for robust concept understanding

The result? SeC tracks objects more reliably through challenging scenarios like rapid appearance changes, occlusions, and complex multi-object scenes.
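The keyframe bank idea can be illustrated with a small data structure. This is a hypothetical sketch, not SeC's actual implementation: it assumes each frame's object appearance has already been reduced to an embedding vector, and the names `KeyframeBank` and `maybe_add`, the capacity and the similarity threshold are all made up for illustration. It keeps the initially prompted view, skips near-duplicate views, and evicts the oldest other view when full, so the stored views stay diverse.

```python
import math
from dataclasses import dataclass, field


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class KeyframeBank:
    """Hypothetical bounded bank of diverse object views (illustration only, not SeC's code)."""
    capacity: int = 4
    max_similarity: float = 0.95  # assumed threshold: views more similar than this are skipped
    frames: list[tuple[int, list[float]]] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.capacity < 2:
            raise ValueError("capacity must be at least 2 (prompted view + one other view)")

    def maybe_add(self, frame_idx: int, embedding: list[float]) -> bool:
        """Store the view only if it differs enough from every stored view."""
        if any(cosine_similarity(embedding, e) > self.max_similarity for _, e in self.frames):
            return False  # near-duplicate view adds little new information
        if len(self.frames) >= self.capacity:
            # Keep the first (user-prompted) view at position 0; evict the oldest other view.
            self.frames.pop(1)
        self.frames.append((frame_idx, embedding))
        return True


bank = KeyframeBank(capacity=3)
for idx, emb in enumerate([[1.0, 0.0], [0.99, 0.01], [0.0, 1.0], [-1.0, 0.0], [0.7, -0.7]]):
    bank.maybe_add(idx, emb)
print([i for i, _ in bank.frames])  # [0, 3, 4]
```

Frame 1 is rejected as a near-duplicate of frame 0, and frame 2 is evicted once the bank is full, while the prompted frame 0 always survives.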

-

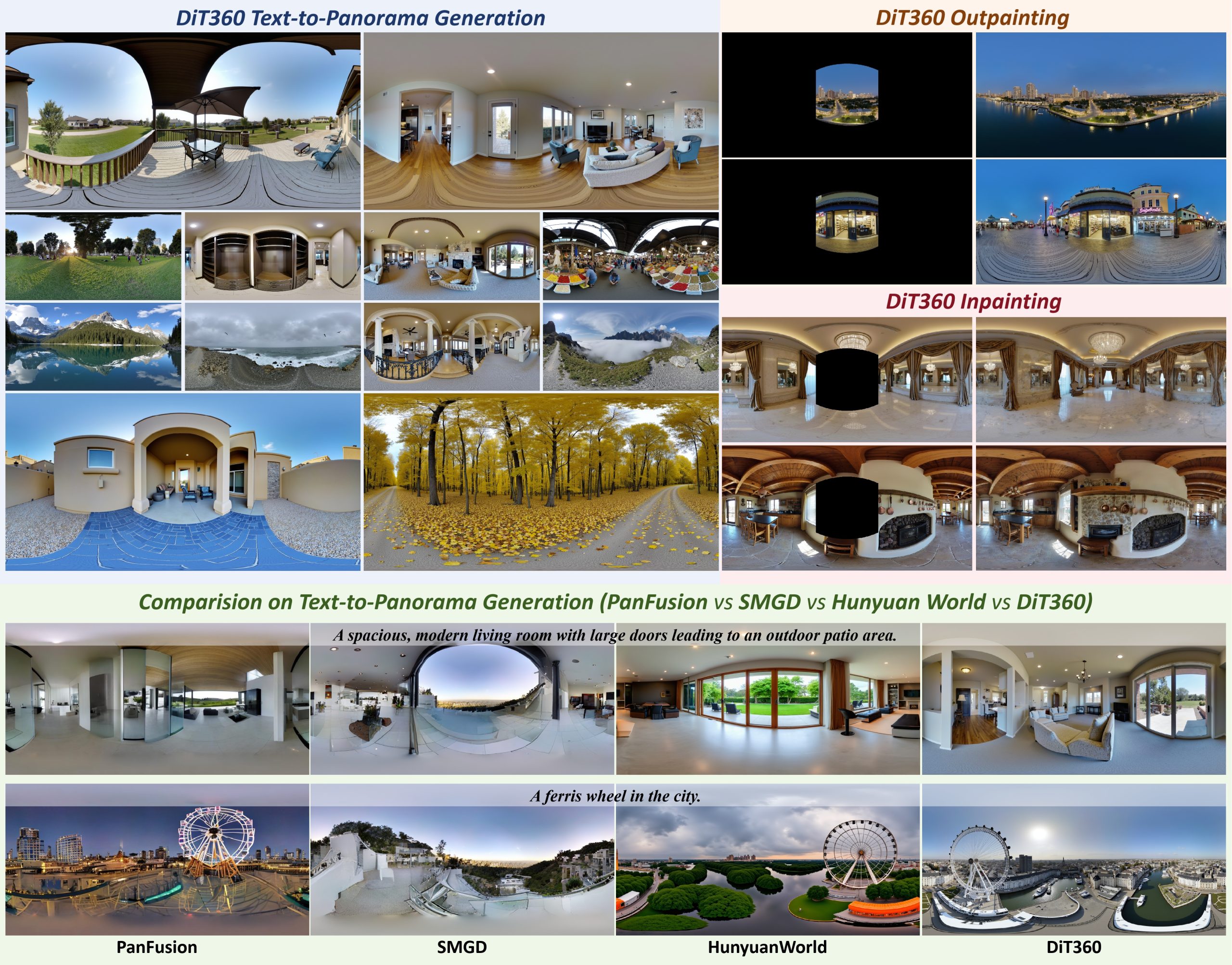

Insta360-Research-Team DiT360 – High-Fidelity Panoramic Image Generation via Hybrid Training

https://github.com/Insta360-Research-Team/DiT360

DiT360 is a framework for high-quality panoramic image generation, leveraging both perspective and panoramic data in a hybrid training scheme. It adopts a two-level strategy—image-level cross-domain guidance and token-level hybrid supervision—to enhance perceptual realism and geometric fidelity.

-

Warner Bros. Discovery puts itself up for sale, citing interest from ‘multiple’ suitors

https://www.cnn.com/2025/10/21/media/warner-bros-wbd-sale-paramount-skydance-suitors

Warner Bros. Discovery, the parent of CNN, is contemplating a sale. … …

Financial and stock performance

- Market Valuation: As of late October 2025, the market cap is approximately $45.36 to $50.37 billion.

- Profitability: The company has a low net margin of around 2% and a low operating margin of 2.5%, indicating profitability challenges.

- Valuation Ratios: The P/E ratio is high at around 61.07, while the P/S ratio (1.18) and P/B ratio (1.26) are near their historical highs.

- Growth: The 3-year revenue growth rate is negative at -4.4%, and the company’s Altman Z-Score is in the distress zone at 0.76.
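The figures above can be cross-checked with simple arithmetic: since P/E = market cap / net income and P/S = market cap / revenue, dividing P/S by P/E gives net income / revenue, which is the net margin. This sketch assumes both ratios are computed over the same trailing period. It also classifies the quoted Z-Score using the cutoffs of Altman's original 1968 model (1.81 and 2.99); that choice is an assumption, since the source does not say which Z-Score variant was used. The helper names are hypothetical.

```python
def implied_net_margin(pe_ratio: float, ps_ratio: float) -> float:
    """P/S divided by P/E equals net income / revenue, i.e. the net margin."""
    return ps_ratio / pe_ratio


def altman_zone(z_score: float) -> str:
    """Zone for the original (1968) Altman Z-Score; other variants use different cutoffs."""
    if z_score < 1.81:
        return "distress"
    if z_score <= 2.99:
        return "grey"
    return "safe"


margin = implied_net_margin(pe_ratio=61.07, ps_ratio=1.18)
print(f"Implied net margin: {margin:.1%}")  # Implied net margin: 1.9%
print(altman_zone(0.76))  # distress
```

The implied margin of about 1.9% agrees with the roughly 2% net margin quoted above, and 0.76 falls well below the 1.81 distress cutoff.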

Analyst ratings and market sentiment

- Consensus Rating: The average rating is a “Hold,” based on analyst coverage.

- Analyst Breakdown: Out of 24 analysts, 16 have a “Hold,” 7 have a “Buy,” and 1 has a “Strong Buy” rating.

- Stock Performance: The stock price has surged more than 46% since early September 2025, following reports of interest from other companies.

Strategic review and future outlook

- Strategic Review: The company is exploring “strategic alternatives,” including a potential sale, after receiving interest from potential buyers such as Paramount.

- Asset Value: The interest in Warner Bros. Discovery underscores the value of its diverse portfolio, which includes its film and TV studios, HBO Max, and cable networks.

- Separation Plan: A plan to separate the company into a streaming/studios entity and a cable counterpart is still being considered.

- No Guarantee: A sale is not guaranteed and the company has not provided a specific timeline for the review process.

-

Adobe buys InvokeAI and launches Adobe AI Foundry

https://mlq.ai/news/adobe-stock-rises-following-launch-of-adobe-ai-foundry/

https://theaieconomy.substack.com/p/adobe-ai-foundry-invoke-acquisition

Adobe is announcing the acquisition of Invoke, a generative media solution for creative production. The startup’s team will join the AI Foundry to build out AI-powered creative workflows for businesses.

“AI Foundry unites years of Adobe innovation and expertise, spanning our generative AI models and modalities, to help businesses solve today’s most complex content and media production challenges,” Hannah Elsakr, Adobe’s vice president for GenAI new business ventures, says in a release.

FEATURED POSTS

-

Composition – 5 tips for creating perfect cinematic lighting and making your work look stunning

http://www.diyphotography.net/5-tips-creating-perfect-cinematic-lighting-making-work-look-stunning/

1. Learn the rules of lighting

2. Learn when to break the rules

3. Make your key light larger

4. Reverse keying

5. Always be backlighting

-

DeepBeepMeep – AI solutions specifically optimized for low-spec GPUs

https://huggingface.co/DeepBeepMeep

https://github.com/deepbeepmeep

Wan2GP – A fast AI Video Generator for the GPU Poor. Supports Wan 2.1/2.2, Qwen Image, Hunyuan Video, LTX Video and Flux.

mmgp – Memory Management for the GPU Poor, run the latest open source frontier models on consumer Nvidia GPUs.

YuEGP – Open full-song generation foundation model that transforms lyrics into complete songs.

HunyuanVideoGP – Large video generation model optimized for low-VRAM GPUs.

FluxFillGP – Flux-based inpainting and outpainting tool for low-VRAM GPUs.

Cosmos1GP – Text-to-world and image/video-to-world generator for the GPU Poor.

Hunyuan3D-2GP – GPU-friendly version of Hunyuan3D-2 for 3D content generation.

OminiControlGP – Lightweight version of OminiControl enabling 3D, pose, and control tasks with FLUX.

SageAttention – Quantized attention achieving 2.1–3.1× and 2.7–5.1× speedups over FlashAttention2 and xformers, respectively, without losing end-to-end accuracy.

insightface – State-of-the-art 2D and 3D face analysis project for recognition, detection, and alignment.
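SageAttention's core idea, computing attention scores from low-precision Q and K, can be sketched in NumPy. This is a simplified illustration, not SageAttention's kernels or API: it uses symmetric per-tensor INT8 quantization (the real method uses finer-grained quantization and fused GPU kernels), keeps the P·V product in floating point, and the function names are hypothetical. The mean-subtraction ("smoothing") of K mirrors a step SageAttention also uses: it shifts every score in a query row by the same constant, which softmax ignores, while shrinking the range that INT8 has to cover.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    x = x - x.max(axis=-1, keepdims=True)  # numerically stable softmax
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def attention_fp(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Reference full-precision scaled dot-product attention."""
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v


def quantize_int8(x: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor quantization: x ~= scale * x_q with x_q in [-127, 127]."""
    scale = float(np.abs(x).max()) / 127.0
    if scale == 0.0:
        scale = 1.0
    x_q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return x_q, scale


def attention_int8_qk(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Attention with INT8 Q·K^T (simplified sketch); P·V stays in floating point."""
    # Smooth K: subtract the per-channel mean over tokens (softmax-invariant shift).
    k_smooth = k - k.mean(axis=0, keepdims=True)
    q_q, s_q = quantize_int8(q)
    k_q, s_k = quantize_int8(k_smooth)
    # Integer matmul accumulated in int32, then dequantized with the product of scales.
    scores = (q_q.astype(np.int32) @ k_q.astype(np.int32).T) * (s_q * s_k)
    return softmax(scores / np.sqrt(q.shape[-1])) @ v


rng = np.random.default_rng(0)
q = rng.standard_normal((8, 16))
k = rng.standard_normal((32, 16)) + 3.0  # shared offset across tokens, removed by smoothing
v = rng.standard_normal((32, 16))
print(np.abs(attention_fp(q, k, v) - attention_int8_qk(q, k, v)).max())
```

The printed maximum deviation from full-precision attention should be small; real speedups come from running the integer matmul on GPU tensor cores, which NumPy does not model.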

-

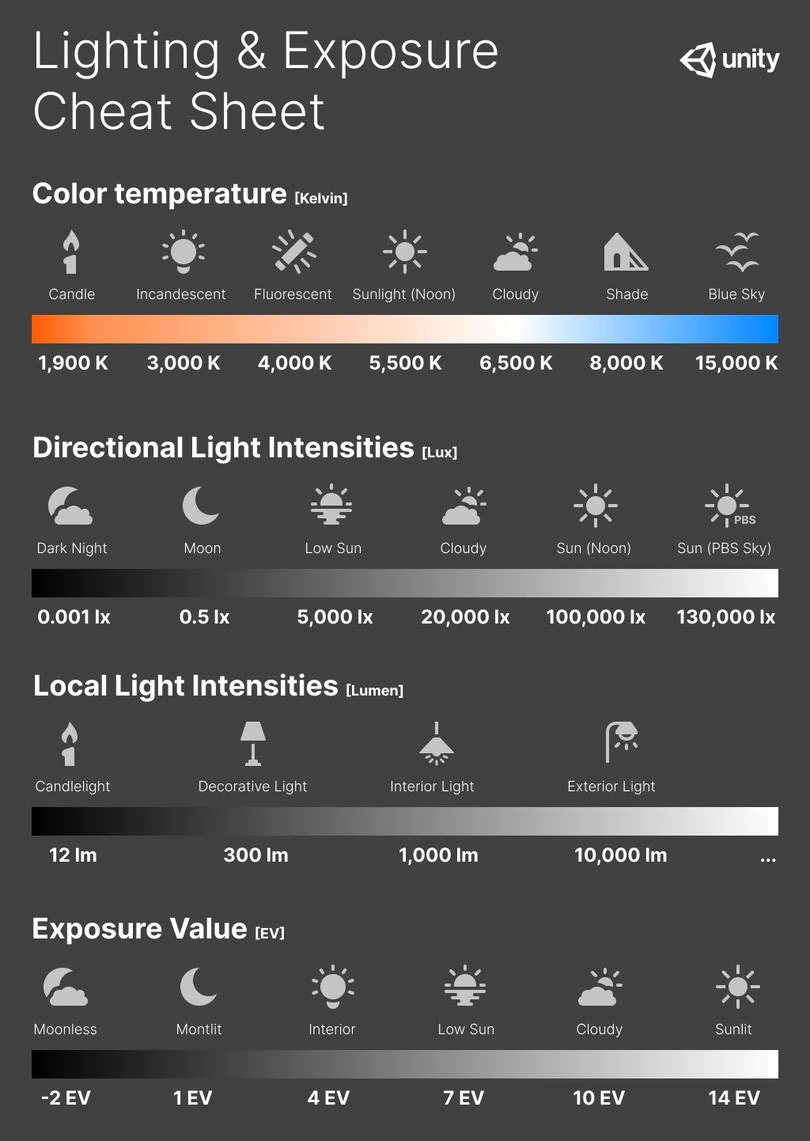

Photography basics: Exposure Value vs Photographic Exposure vs Il/Luminance vs Pixel luminance measurements

Also see: https://www.pixelsham.com/2015/05/16/how-aperture-shutter-speed-and-iso-affect-your-photos/

In photography, exposure value (EV) is a number that represents a combination of a camera’s shutter speed and f-number, such that all combinations that yield the same exposure have the same EV (for any fixed scene luminance).

The EV concept was developed in an attempt to simplify choosing among combinations of equivalent camera settings. Although all camera settings with the same EV nominally give the same exposure, they do not necessarily give the same picture. EV is also used to indicate an interval on the photographic exposure scale: 1 EV corresponds to a standard power-of-2 exposure step, commonly referred to as a stop.

EV 0 corresponds to an exposure time of 1 sec and a relative aperture of f/1.0. If the EV is known, it can be used to select combinations of exposure time and f-number. Note that EV is not the same as photographic exposure. Photographic exposure is defined as how much light hits the camera’s sensor; it depends mainly on the camera’s aperture and shutter speed settings. Exposure value (EV) is a number that represents the exposure setting of the camera.

Thus, strictly, EV is not a measure of luminance (indirect or reflected exposure) or illuminance (incident exposure); rather, an EV corresponds to a luminance (or illuminance) for which a camera with a given ISO speed would use the indicated EV to obtain the nominally correct exposure. Nonetheless, it is common practice among photographic equipment manufacturers to express luminance in EV for ISO 100 speed, as when specifying metering range or autofocus sensitivity.
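Expressing luminance in EV at ISO 100 rests on the reflected-light meter equation EV = log2(L·S/K), where L is scene luminance in cd/m², S is the ISO speed and K is the meter calibration constant. This sketch assumes K = 12.5, a value commonly used by meter and camera makers (some use values around 14), so the results are nominal; the helper names are hypothetical.

```python
import math

K_REFLECTED = 12.5  # assumed reflected-light meter calibration constant (cd/m^2)


def ev_to_luminance(ev: float, iso: float = 100.0, k: float = K_REFLECTED) -> float:
    """Scene luminance (cd/m^2) for which a meter at `iso` indicates `ev`."""
    return k * 2.0 ** ev / iso


def luminance_to_ev(luminance: float, iso: float = 100.0, k: float = K_REFLECTED) -> float:
    """Inverse relation: EV = log2(L * S / K)."""
    return math.log2(luminance * iso / k)


print(ev_to_luminance(0))       # 0.125
print(ev_to_luminance(10))      # 128.0
print(luminance_to_ev(128.0))   # 10.0
```

Each additional EV doubles the corresponding luminance, which is why a metering range such as "EV 0 to EV 20 at ISO 100" spans a very wide range of scene brightness.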

The exposure depends on two things: how much light gets through the lens to the camera’s sensor and for how long the sensor is exposed. The former is a function of the aperture value, while the latter is a function of the shutter speed. Exposure value is a number that represents this potential amount of light that could hit the sensor. It is important to understand that exposure value is a measure of how exposed the sensor is to light, not a measure of how much light actually hits the sensor. The exposure value is independent of how brightly the scene is lit. For example, a given pair of aperture value and shutter speed represents the same exposure value whether the camera is used on a very bright day or during a dark night.

Each exposure value number represents all the possible shutter and aperture settings that result in the same exposure. Although the exposure value is the same for different combinations of aperture values and shutter speeds the resulting photo can be very different (the aperture controls the depth of field while shutter speed controls how much motion is captured).

EV 0.0 is defined as the exposure when setting the aperture to f-number 1.0 and the shutter speed to 1 second. All other exposure values are relative to that number. Exposure values are on a base-2 logarithmic scale. This means that every single step of EV (plus or minus 1) represents the exposure (actual light that hits the sensor) being halved or doubled, respectively.

Formulas
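The definition above (EV 0 at f/1.0 and 1 s, one EV per power of two) corresponds to the standard relation EV = log2(N²/t), where N is the f-number and t the exposure time in seconds. The sketch below, with hypothetical helper names, computes EV and finds the exposure time that keeps the same EV at a different aperture. Marked f-numbers such as 5.6 or 11 are rounded, so real camera settings give only approximately equal EVs.

```python
import math


def exposure_value(f_number: float, shutter_s: float) -> float:
    """EV = log2(N^2 / t); f/1.0 at 1 s gives EV 0."""
    return math.log2(f_number ** 2 / shutter_s)


def shutter_for_ev(ev: float, f_number: float) -> float:
    """Exposure time that yields `ev` at `f_number`: t = N^2 / 2^EV."""
    return f_number ** 2 / 2.0 ** ev


print(exposure_value(1.0, 1.0))    # 0.0
print(exposure_value(4.0, 1 / 16)) # 8.0
print(shutter_for_ev(8, 2.0))      # 0.015625  (1/64 s: two stops wider, 4x shorter)
print(shutter_for_ev(8, 8.0))      # 0.25      (two stops narrower, 4x longer)
```

Raising the EV by 1 at a fixed aperture halves the exposure time, matching the halving/doubling rule described above.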


-

Sourcetree vs GitHub Desktop – Which One to Use

Sourcetree and GitHub Desktop are both free, GUI-based Git clients aimed at simplifying version control for developers. While they share the same core purpose—making Git more accessible—they differ in features, UI design, integration options, and target audiences.

Installation & Setup

- Sourcetree
  - Download: https://www.sourcetreeapp.com/
  - Supported OS: Windows 10+, macOS 10.13+
  - Prerequisites: Comes bundled with its own Git, or can be pointed to a system Git install.
  - Initial Setup: A wizard guides SSH key generation and authentication with Bitbucket/GitHub/GitLab.

- GitHub Desktop
  - Download: https://desktop.github.com/
  - Supported OS: Windows 10+, macOS 10.15+
  - Prerequisites: Bundled Git; seamless login with GitHub.com or GitHub Enterprise.
  - Initial Setup: One-click sign-in with GitHub; auto-syncs repositories from your GitHub account.

Feature Comparison

| Feature | Sourcetree | GitHub Desktop |
|---|---|---|
| Branch Visualization | Detailed graph view with drag-and-drop for rebasing/merging | Linear graph, simpler but less configurable |
| Staging & Commit | File-by-file staging, inline diff view | All-or-nothing staging, side-by-side diff |
| Interactive Rebase | Full support via UI | Basic support via command line only |
| Conflict Resolution | Built-in merge tool integration (DiffMerge, Beyond Compare) | Contextual conflict editor with choice panels |
| Submodule Management | Native submodule support | Limited; requires CLI |
| Custom Actions / Hooks | Define custom actions (e.g., launch scripts) | No UI for custom Git hooks |
| Git Flow / Hg Flow | Built-in support | None |
| Performance | Can lag on very large repos | Generally snappier on medium-sized repos |
| Memory Footprint | Higher RAM usage | Lightweight |
| Platform Integration | Atlassian Bitbucket, Jira | Deep GitHub.com / Enterprise integration |
| Learning Curve | Steeper for beginners | Beginner-friendly |

-

Scene Referred vs Display Referred color workflows

Display-referred workflows are tied to the target display hardware; as such, they bake color requirements into every type of media output request.

Scene-referred workflows instead use a common, unified wide-gamut working space and target each audience through CDL and DI libraries.

This way, color information stays untouched and is only “transformed” as and when needed.

Sources:

– Victor Perez – Color Management Fundamentals & ACES Workflows in Nuke

– https://z-fx.nl/ColorspACES.pdf

– Wicus
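The difference between the two workflows described above can be illustrated with a toy, single-channel example. It is a simplified sketch, not ACES or any production pipeline: the display-referred path clips scene values to the display's 0 to 1 range before grading, while the scene-referred path grades the untouched scene-linear values and applies an output transform only at the end. The Reinhard tone curve used as the view transform and the helper names are assumptions chosen for brevity; only the sRGB encoding function is standard.

```python
def srgb_encode(c: float) -> float:
    """Standard sRGB transfer function for a display-linear value, clamped to [0, 1]."""
    c = min(max(c, 0.0), 1.0)
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055


def view_transform(scene_linear: float) -> float:
    """Hypothetical, very simplified output transform: Reinhard tone curve, then sRGB."""
    return srgb_encode(scene_linear / (1.0 + scene_linear))


def exposure(value: float, stops: float) -> float:
    """Exposure adjustment on linear data: each stop doubles or halves the value."""
    return value * 2.0 ** stops


# Scene-linear samples: mid grey, diffuse white, bright highlight (values above 1.0 are legal).
scene = [0.18, 1.0, 4.0]

# Display-referred: data is baked to the display's 0 to 1 range first, then graded.
display_referred = [srgb_encode(exposure(min(x, 1.0), -2)) for x in scene]

# Scene-referred: grade the untouched scene-linear data, transform only at output.
scene_referred = [view_transform(exposure(x, -2)) for x in scene]

print(display_referred[1] == display_referred[2])  # True: highlight detail was clipped away
print(scene_referred[1] < scene_referred[2])       # True: highlight is still distinct
```

After a 2-stop exposure pull, the display-referred path renders diffuse white and the highlight identically, while the scene-referred path keeps them apart because the highlight data was never baked into the display range.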