BREAKING NEWS

LATEST POSTS

-

AI and the Law – Disney, Warner Bros. Discovery and NBCUniversal sue Chinese AI firm MiniMax

On Tuesday, the three media companies filed a lawsuit against MiniMax, a Chinese AI company reportedly valued at $4 billion, alleging “willful and brazen” copyright infringement.

MiniMax operates Hailuo AI.

-

Mariko Mori – Kamitate Stone at Sean Kelly Gallery

Mariko Mori, the internationally celebrated artist who blends technology, spirituality, and nature, debuts Kamitate Stone I this October at Sean Kelly Gallery in New York. The work continues her exploration of luminous form, energy, and transcendence.

-

Vimeo Enters into Definitive Agreement to Be Acquired by Bending Spoons for $1.38 Billion

-

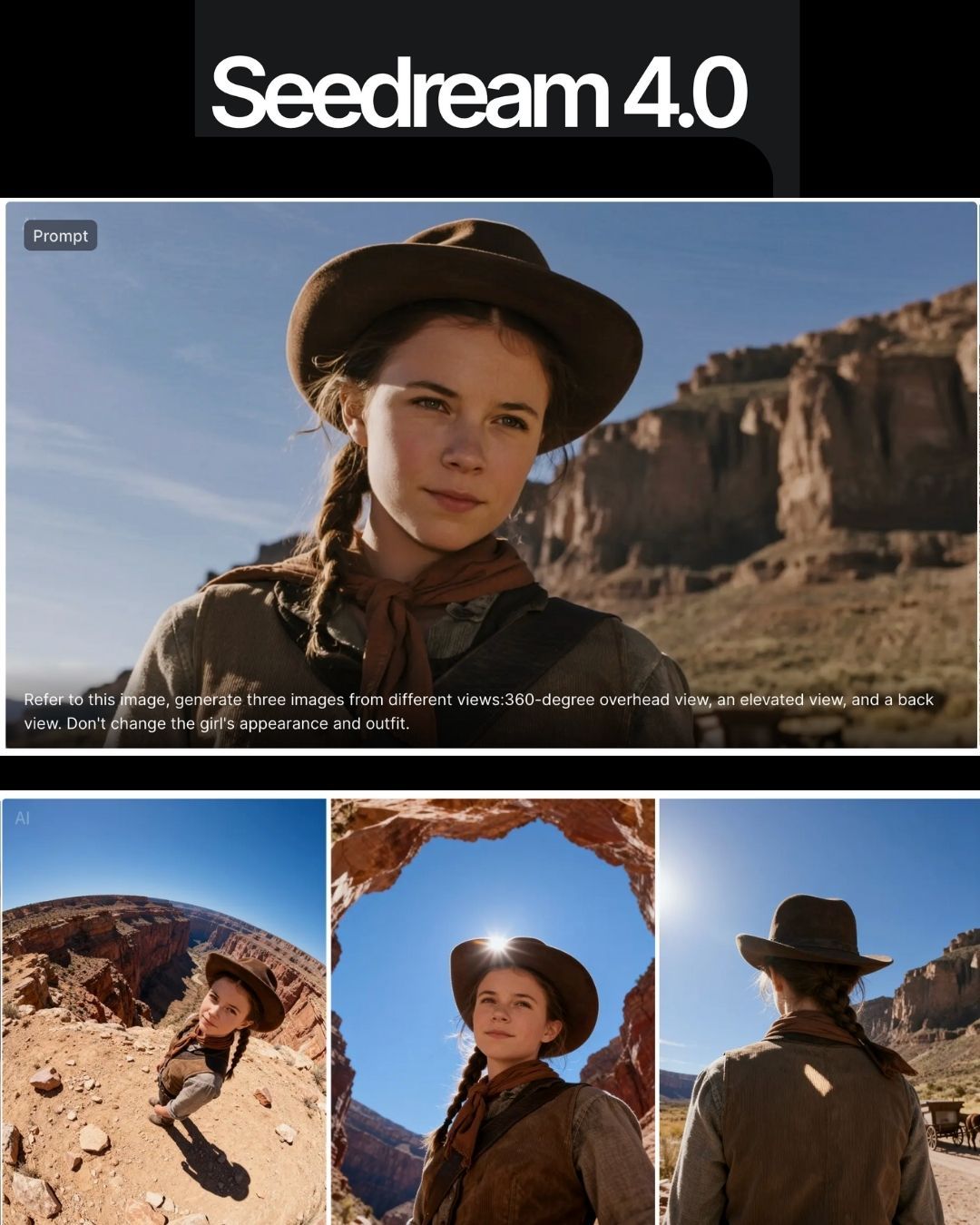

ByteDance Seedream 4.0 – Super‑fast, 4K, multi‑image support

https://seed.bytedance.com/en/seedream4_0

➤ Super‑fast, high‑resolution results: resolutions up to 4K, with a 2K image produced in less than 1.8 seconds, all while maintaining sharpness and realism.

➤ At 4K, cost is as low as $0.03 per generation.

➤ Natural‑language editing – You can instruct the model to “remove the people in the background,” “add a helmet” or “replace this with that,” and it executes without needing complicated prompts.

➤ Multi‑image input and output – It can combine multiple images, transfer styles and produce storyboards or series with consistent characters and themes.

-

OpenAI Backs Critterz, an AI-Made Animated Feature Film

https://www.wsj.com/tech/ai/openai-backs-ai-made-animated-feature-film-389f70b0

The film, called ‘Critterz,’ aims to debut at the Cannes Film Festival and will leverage the startup’s AI tools and resources.

“Critterz,” about forest creatures who go on an adventure after their village is disrupted by a stranger, is the brainchild of Chad Nelson, a creative specialist at OpenAI. Nelson started sketching out the characters three years ago while trying to make a short film with what was then OpenAI’s new DALL-E image-generation tool.

FEATURED POSTS

-

SlowMoVideo – How to make a slow motion shot with the open source program

http://slowmovideo.granjow.net/

slowmoVideo is an open-source program that creates slow-motion videos from your footage.

Slow motion cinematography is the result of playing back frames for a longer duration than they were exposed. For example, if you expose 240 frames of film in one second, then play them back at 24 fps, the resulting movie is 10 times longer (slower) than the original filmed event….
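The arithmetic in the example above can be sketched in a few lines of Python. This is only an illustration of the frame-rate ratio; the function names `slow_motion_factor` and `playback_duration` are made up for this sketch and are not part of slowmoVideo.

```python
def slow_motion_factor(capture_fps: float, playback_fps: float) -> float:
    """How many times slower the footage plays than the real event."""
    if capture_fps <= 0 or playback_fps <= 0:
        raise ValueError("frame rates must be positive")
    return capture_fps / playback_fps


def playback_duration(event_seconds: float, capture_fps: float, playback_fps: float) -> float:
    """Screen time, in seconds, of an event that lasted event_seconds."""
    frames_captured = event_seconds * capture_fps
    return frames_captured / playback_fps


# The case from the text: 240 frames exposed in one second, played at 24 fps.
print(slow_motion_factor(240, 24))      # 10.0
print(playback_duration(1.0, 240, 24))  # 10.0 seconds on screen
```

The same ratio shows why ordinary video struggles: footage captured at 60 fps and played at 24 fps only stretches by a factor of 2.5 before frames have to be repeated or synthesized.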

Film cameras are relatively simple mechanical devices that allow you to crank up the speed to whatever rate the shutter and pull-down mechanism allow. Some film cameras can operate at 2,500 fps or higher (although film shot in these cameras often needs some readjustment in postproduction). Video, on the other hand, is always captured, recorded, and played back at a fixed rate, with a current limit around 60fps. This makes extreme slow motion effects harder to achieve (and less elegant) on video, because slowing down the video results in each frame held still on the screen for a long time, whereas with high-frame-rate film there are plenty of frames to fill the longer durations of time. On video, the slow motion effect is more like a slide show than smooth, continuous motion.

One obvious solution is to shoot film at high speed, then transfer it to video (a case where film still has a clear advantage, sorry George). Another possibility is to cross dissolve or blur from one frame to the next. This adds a smooth transition from one still frame to the next. The blur reduces the sharpness of the image, and compared to slowing down images shot at a high frame rate, this is somewhat of a cheat. However, there isn’t much you can do about it until video can be recorded at much higher rates. Of course, many film cameras can’t shoot at high frame rates either, so the whole super-slow-motion endeavor is somewhat specialized no matter what medium you are using. (There are some high speed digital cameras available now that allow you to capture lots of digital frames directly to your computer, so technology is starting to catch up with film. However, this feature isn’t going to appear in consumer camcorders any time soon.)
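As a rough sketch of the cross-dissolve idea described above, the Python below blends each pair of neighbouring frames linearly to create in-between frames. It assumes a frame is a flat list of grayscale pixel values, and the helper names `cross_dissolve` and `slow_down` are hypothetical. It is not a description of how slowmoVideo itself interpolates frames.

```python
def cross_dissolve(frame_a, frame_b, steps):
    """Return `steps` in-between frames that fade frame_a into frame_b."""
    if len(frame_a) != len(frame_b):
        raise ValueError("frames must have the same number of pixels")
    blended = []
    for i in range(1, steps + 1):
        t = i / (steps + 1)  # blend weight, strictly between 0 and 1
        blended.append([(1 - t) * a + t * b for a, b in zip(frame_a, frame_b)])
    return blended


def slow_down(frames, steps):
    """Insert `steps` dissolved frames between every pair of original frames."""
    out = []
    for current, following in zip(frames, frames[1:]):
        out.append(list(current))
        out.extend(cross_dissolve(current, following, steps))
    if frames:
        out.append(list(frames[-1]))
    return out


# Three one-pixel frames stretched with three in-betweens per gap.
clip = [[0.0], [4.0], [8.0]]
print(slow_down(clip, 3))
```

Because each in-between frame is a weighted average of two real frames, anything that moves shows up as a double exposure rather than at an intermediate position, which is the loss of sharpness the text refers to.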

-

How the 5G network is going to change the world and A.I.

www.techradar.com/au/news/what-is-5g-everything-you-need-to-know

edition.cnn.com/2019/02/28/tech/5g-benefits-mobile-world-congress/index.html

5G networks are the next generation of mobile internet connectivity, offering faster speeds and more reliable connections on smartphones and other devices than ever before.

Combining cutting-edge network technology and the very latest research, 5G should offer connections that are many times faster than current connections, with average download speeds of around 1 Gbps expected to soon be the norm.

Industry players claim 5G can be 100 times faster than 4G and that a huge number of devices will be able to connect to the network simultaneously.
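To put those figures in perspective, here is a small back-of-the-envelope sketch in Python. It assumes the quoted average speed means about 1 gigabit per second (1,000 Mbps) and takes the "100 times faster" claim at face value, which puts the 4G comparison at 10 Mbps. The 5 GB file size and the function name `download_seconds` are made up for illustration, and real-world throughput will vary.

```python
def download_seconds(size_gigabytes: float, rate_mbps: float) -> float:
    """Ideal transfer time for a file, ignoring protocol overhead and congestion."""
    if rate_mbps <= 0:
        raise ValueError("rate must be positive")
    size_megabits = size_gigabytes * 8000  # 1 GB = 8,000 megabits (decimal units)
    return size_megabits / rate_mbps


FILE_GB = 5            # hypothetical file size
RATE_5G_MBPS = 1000    # assumed: roughly 1 Gbps average
RATE_4G_MBPS = 10      # assumed: 100 times slower than the 5G figure

print(download_seconds(FILE_GB, RATE_5G_MBPS))  # 40.0 seconds
print(download_seconds(FILE_GB, RATE_4G_MBPS))  # 4000.0 seconds, a little over an hour
```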

This will enable self-driving vehicles to talk to each other in real time: they’ll know when another car is changing lanes or braking and can adjust to manage traffic accordingly.

-

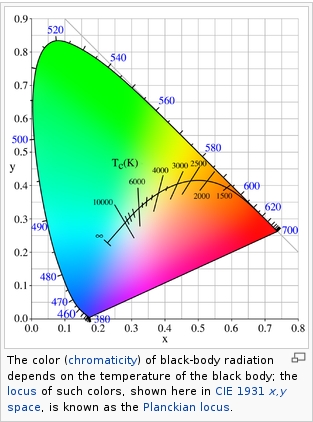

Black Body color aka the Planckian Locus curve for white point eye perception

http://en.wikipedia.org/wiki/Black-body_radiation

Black-body radiation is the type of electromagnetic radiation within or surrounding a body in thermodynamic equilibrium with its environment, or emitted by a black body (an opaque and non-reflective body) held at constant, uniform temperature. The radiation has a specific spectrum and intensity that depends only on the temperature of the body.

A black-body at room temperature appears black, as most of the energy it radiates is infra-red and cannot be perceived by the human eye. At higher temperatures, black bodies glow with increasing intensity and colors that range from dull red to blindingly brilliant blue-white as the temperature increases.
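The temperature dependence can be illustrated with Wien's displacement law, which gives the wavelength at which a black body radiates most strongly: peak wavelength = b / T, with b ≈ 2.897771955 × 10⁻³ m·K. The Python sketch below is a minimal illustration. The function names and the 380 to 750 nm bounds for visible light are assumptions chosen for this example, and the peak wavelength is not the same thing as the colour the eye perceives.

```python
WIEN_B_M_K = 2.897771955e-3  # Wien's displacement constant, metre-kelvin


def peak_wavelength_nm(temperature_k: float) -> float:
    """Wavelength of maximum black-body emission, in nanometres."""
    if temperature_k <= 0:
        raise ValueError("temperature must be above absolute zero")
    return WIEN_B_M_K / temperature_k * 1e9


def peak_band(temperature_k: float) -> str:
    """Rough band of the emission peak (visible range assumed to be 380-750 nm)."""
    wavelength = peak_wavelength_nm(temperature_k)
    if wavelength > 750:
        return "infrared"
    if wavelength < 380:
        return "ultraviolet"
    return "visible"


for kelvin in (300, 1000, 6500, 10000):
    print(kelvin, round(peak_wavelength_nm(kelvin)), peak_band(kelvin))
```

At room temperature (about 300 K) the peak sits near 9,700 nm, deep in the infrared, which matches the statement that such a body appears black. At 1,000 K the peak is still infrared, and the dull red glow comes from the short-wavelength tail of the spectrum reaching into the visible range.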


-

MiniMax-Remover – Taming Bad Noise Helps Video Object Removal Rotoscoping

https://github.com/zibojia/MiniMax-Remover

MiniMax-Remover is a fast and effective video object remover based on minimax optimization. It operates in two stages: the first stage trains a remover using a simplified DiT architecture, while the second stage distills a robust remover with CFG removal and fewer inference steps.